Let $[a,b]$ be a compact interval of positive length (thus $-\infty < a < b < +\infty$). Recall that a function $F: [a,b] \to \mathbf{R}$ is said to be differentiable at a point $x \in [a,b]$ if the limit

$$F'(x) := \lim_{y \to x;\ y \in [a,b] \setminus \{x\}} \frac{F(y) - F(x)}{y - x} \tag{1}$$

exists. In that case, we call $F'(x)$ the strong derivative, classical derivative, or just derivative for short, of $F$ at $x$. We say that $F$ is everywhere differentiable, or differentiable for short, if it is differentiable at all points $x \in [a,b]$, and differentiable almost everywhere if it is differentiable at almost every point $x \in [a,b]$. If $F$ is differentiable everywhere and its derivative $F'$ is continuous, then we say that $F$ is continuously differentiable.

Remark 1 Much later in this sequence, when we cover the theory of distributions, we will see the notion of a weak derivative or distributional derivative, which can be applied to a much rougher class of functions and is in many ways more suitable than the classical derivative for doing “Lebesgue” type analysis (i.e. analysis centred around the Lebesgue integral, and in particular allowing functions to be uncontrolled, infinite, or even undefined on sets of measure zero). However, for now we will stick with the classical approach to differentiation.

Exercise 2 If $F: [a,b] \to \mathbf{R}$ is everywhere differentiable, show that $F$ is continuous and $F'$ is measurable. If $F$ is almost everywhere differentiable, show that the (almost everywhere defined) function $F'$ is measurable (i.e. it is equal to an everywhere defined measurable function on $[a,b]$ outside of a null set), but give an example to demonstrate that $F$ need not be continuous.

Exercise 3 Give an example of a function $F: [a,b] \to \mathbf{R}$ which is everywhere differentiable, but not continuously differentiable. (Hint: choose an $F$ that vanishes quickly at some point, say at the origin $0$, but which also oscillates rapidly near that point.)

In single-variable calculus, the operations of integration and differentiation are connected by a number of basic theorems, starting with Rolle’s theorem.

Theorem 4 (Rolle’s theorem) Let $[a,b]$ be a compact interval of positive length, and let $F: [a,b] \to \mathbf{R}$ be a differentiable function such that $F(a) = F(b)$. Then there exists $x \in (a,b)$ such that $F'(x) = 0$.

Proof: By subtracting a constant from $F$ (which does not affect differentiability or the derivative) we may assume that $F(a) = F(b) = 0$. If $F$ is identically zero then the claim is trivial, so assume that $F$ is non-zero somewhere. By replacing $F$ with $-F$ if necessary, we may assume that $F$ is positive somewhere, thus $\sup_{x \in [a,b]} F(x) > 0$. On the other hand, as $F$ is continuous and $[a,b]$ is compact, $F$ must attain its maximum somewhere, thus there exists $x \in [a,b]$ such that $F(x) \geq F(y)$ for all $y \in [a,b]$. Then $F(x)$ must be positive and so $x$ cannot equal either $a$ or $b$, and thus must lie in the interior. From the right limit of (1) we see that $F'(x) \leq 0$, while from the left limit we have $F'(x) \geq 0$. Thus $F'(x) = 0$ and the claim follows.

Remark 5 Observe that the same proof also works if $F$ is only differentiable in the interior $(a,b)$ of the interval $[a,b]$, so long as it is continuous all the way up to the boundary of $[a,b]$.

Exercise 6 Give an example to show that Rolle’s theorem can fail if $F$ is merely assumed to be almost everywhere differentiable, even if one adds the additional hypothesis that $F$ is continuous. This example illustrates that everywhere differentiability is a significantly stronger property than almost everywhere differentiability. We will see further evidence of this fact later in these notes; there are many theorems that assert in their conclusion that a function is almost everywhere differentiable, but few that manage to conclude everywhere differentiability.

Remark 7 It is important to note that Rolle’s theorem only works in the real scalar case when $F$ is real-valued, as it relies heavily on the least upper bound property for the range $\mathbf{R}$ of $F$. If, for instance, we consider complex-valued scalar functions $F: [a,b] \to \mathbf{C}$, then the theorem can fail; for instance, the function $F: [0,1] \to \mathbf{C}$ defined by $F(x) := e^{2\pi i x} - 1$ vanishes at both endpoints and is differentiable, but its derivative $F'(x) = 2\pi i e^{2\pi i x}$ is never zero. (Rolle’s theorem does imply that the real and imaginary parts of the derivative $F'$ both vanish somewhere, but the problem is that they don’t simultaneously vanish at the same point.) Similar remarks apply to functions taking values in a finite-dimensional vector space, such as $\mathbf{R}^n$.
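The counterexample in Remark 7 can be checked numerically. The Python sketch below is only an illustration (the grid of 1001 sample points is an arbitrary choice). It evaluates $F(x) = e^{2\pi i x} - 1$ at the endpoints and the modulus of the exact derivative $F'(x) = 2\pi i e^{2\pi i x}$ on a grid. It also confirms that the real and imaginary parts of $F'$ each vanish somewhere, but never at the same sample point.

```python
import cmath
import math


def F(x):
    """F(x) = exp(2 pi i x) - 1, a complex-valued function on [0, 1]."""
    return cmath.exp(2j * math.pi * x) - 1


def F_prime(x):
    """Exact derivative F'(x) = 2 pi i exp(2 pi i x)."""
    return 2j * math.pi * cmath.exp(2j * math.pi * x)


grid = [k / 1000 for k in range(1001)]

# F vanishes at both endpoints (up to floating-point rounding) ...
endpoint_sizes = (abs(F(0.0)), abs(F(1.0)))
# ... but |F'(x)| = 2 pi at every sample point, so F' never vanishes.
smallest_derivative = min(abs(F_prime(x)) for x in grid)
# Re F' and Im F' each vanish somewhere, but never at the same point.
simultaneous_zero = any(
    abs(F_prime(x).real) < 1e-9 and abs(F_prime(x).imag) < 1e-9 for x in grid
)
print(endpoint_sizes, smallest_derivative, simultaneous_zero)
```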

One can easily amplify Rolle’s theorem to the mean value theorem:

Corollary 8 (Mean value theorem) Let $[a,b]$ be a compact interval of positive length, and let $F: [a,b] \to \mathbf{R}$ be a differentiable function. Then there exists $x \in (a,b)$ such that $F'(x) = \frac{F(b) - F(a)}{b - a}$.

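Proof: Apply Rolle’s theorem to the function $x \mapsto F(x) - \frac{F(b) - F(a)}{b - a}(x - a)$, which takes the value $F(a)$ at both endpoints $a$ and $b$.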
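The two proofs above can be imitated on a computer. The following is a minimal Python sketch, not part of the notes: the test function $F(x) = x^3$ on $[0,2]$, the grid size, and the helper name `mean_value_point` are arbitrary illustrative choices.

The sketch follows the proof of Corollary 8 and subtracts the secant line. Then, as in the proof of Theorem 4, it locates an interior extremum by brute-force grid search. A central difference quotient at that point should be close to the secant slope $(F(2) - F(0))/2 = 4$. For this $F$ the exact mean value point is $2/\sqrt{3}$.

```python
def mean_value_point(F, a, b, n=100_000):
    """Approximate a point x in (a, b) with F'(x) = (F(b) - F(a)) / (b - a).

    Mirrors the proofs above: G(x) = F(x) - slope * (x - a) satisfies
    G(a) = G(b) = F(a), and an interior extremum of G is where G' = 0.
    Here the extremum is located by brute-force search over a grid.
    """
    slope = (F(b) - F(a)) / (b - a)

    def G(x):
        return F(x) - slope * (x - a)

    interior = [a + (b - a) * k / n for k in range(1, n)]
    x_star = max(interior, key=lambda x: abs(G(x) - G(a)))
    return x_star, slope


def cube(x):
    return x ** 3


x_star, slope = mean_value_point(cube, 0.0, 2.0)
h = 1e-6
numerical_derivative = (cube(x_star + h) - cube(x_star - h)) / (2 * h)  # central difference
print(x_star, slope, numerical_derivative)
```

Grid search is used only because it is the most literal rendering of "take the maximum". It says nothing about functions whose extrema are not resolved by the grid.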

Remark 9 As Rolle’s theorem is only applicable to real scalar-valued functions, the more general mean value theorem is also only applicable to such functions.

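Exercise 10 (Uniqueness of antiderivatives up to constants) Let $[a,b]$ be a compact interval of positive length, and let $F: [a,b] \to \mathbf{R}$ and $G: [a,b] \to \mathbf{R}$ be differentiable functions. Show that $F'(x) = G'(x)$ for every $x \in [a,b]$ if and only if $F(x) = G(x) + C$ for some constant $C \in \mathbf{R}$ and all $x \in [a,b]$.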

We can use the mean value theorem to deduce one of the fundamental theorems of calculus:

Theorem 11 (Second fundamental theorem of calculus) Let $F: [a,b] \to \mathbf{R}$ be a differentiable function, such that $F'$ is Riemann integrable. Then the Riemann integral $\int_a^b F'(x)\,dx$ of $F'$ is equal to $F(b) - F(a)$. In particular, we have $\int_a^b F'(x)\,dx = F(b) - F(a)$ whenever $F$ is continuously differentiable.

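Proof: Let $\epsilon > 0$. By the definition of Riemann integrability, there exists a finite partition $a = t_0 < t_1 < \ldots < t_k = b$ such that

$$\Big| \sum_{j=1}^k F'(t_j^*)(t_j - t_{j-1}) - \int_a^b F'(x)\,dx \Big| \leq \epsilon$$

for every choice of $t_j^* \in [t_{j-1}, t_j]$.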

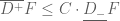

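Fix this partition. From the mean value theorem, for each $1 \leq j \leq k$ one can find $t_j^* \in [t_{j-1}, t_j]$ such that

$$F'(t_j^*)(t_j - t_{j-1}) = F(t_j) - F(t_{j-1})$$

and thus by telescoping series

$$\Big| (F(b) - F(a)) - \int_a^b F'(x)\,dx \Big| \leq \epsilon.$$

Since $\epsilon > 0$ was arbitrary, the claim follows.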
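The telescoping step can be seen exactly in a toy case. This Python sketch uses exact rational arithmetic from the standard `fractions` module. The choices $F(x) = x^2$, the interval $[1,3]$, and the seven-piece partition are illustrative assumptions. For this $F$ the mean value point of $[s,t]$ is its midpoint, because $(t^2 - s^2)/(t - s) = s + t = F'((s+t)/2)$. So the midpoint-tagged Riemann sum of $F'$ equals $F(3) - F(1) = 8$ exactly, while left-endpoint tags miss by $(b-a)^2/k$.

```python
from fractions import Fraction


def partition(a, b, k):
    """Uniform partition a = t_0 < t_1 < ... < t_k = b, in exact rational arithmetic."""
    a, b = Fraction(a), Fraction(b)
    return [a + (b - a) * Fraction(j, k) for j in range(k + 1)]


def riemann_sum(Fprime, ts, choose_tag):
    """Sum of F'(t_j^*) (t_j - t_{j-1}) with tags t_j^* = choose_tag(t_{j-1}, t_j)."""
    return sum((Fprime(choose_tag(s, t)) * (t - s) for s, t in zip(ts, ts[1:])), Fraction(0))


def Fprime(x):
    return 2 * x  # derivative of F(x) = x^2


def midpoint(s, t):
    # For F(x) = x^2 the midpoint is exactly the mean value point of [s, t].
    return (s + t) / 2


def left_endpoint(s, t):
    return s


ts = partition(1, 3, 7)
mvt_sum = riemann_sum(Fprime, ts, midpoint)         # telescopes to F(3) - F(1) = 8
left_sum = riemann_sum(Fprime, ts, left_endpoint)   # off by (b - a)^2 / k = 4/7
print(mvt_sum, left_sum)
```

Any other admissible choice of tags only produces a sum within $\epsilon$ of $F(b) - F(a)$, which is all the proof needs. The mean value tags are what make the telescoping exact.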

Remark 12 Even though the mean value theorem only holds for real scalar functions, the fundamental theorem of calculus holds for complex or vector-valued functions, as one can simply apply that theorem to each component of that function separately.

Of course, we also have the other half of the fundamental theorem of calculus:

Theorem 13 (First fundamental theorem of calculus) Let $[a,b]$ be a compact interval of positive length. Let $f: [a,b] \to \mathbf{C}$ be a continuous function, and let $F: [a,b] \to \mathbf{C}$ be the indefinite integral $F(x) := \int_a^x f(t)\,dt$. Then $F$ is differentiable on $[a,b]$, with derivative $F'(x) = f(x)$ for all $x \in [a,b]$. In particular, $F$ is continuously differentiable.

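Proof: It suffices to show that

$$\lim_{h \to 0^+} \frac{F(x+h) - F(x)}{h} = f(x)$$

for all $x \in [a,b)$, and

$$\lim_{h \to 0^-} \frac{F(x+h) - F(x)}{h} = f(x)$$

for all $x \in (a,b]$. After a change of variables, we can write

$$\frac{F(x+h) - F(x)}{h} = \int_0^1 f(x + ht)\,dt$$

for any $x \in [a,b)$ and any sufficiently small $h > 0$, or any $x \in (a,b]$ and any sufficiently small $h < 0$. As $f$ is continuous, the function $t \mapsto f(x + ht)$ converges uniformly to $f(x)$ on $[0,1]$ as $h \to 0$ (keeping $x$ fixed). As the interval $[0,1]$ is bounded, $\int_0^1 f(x + ht)\,dt$ thus converges to $\int_0^1 f(x)\,dt = f(x)$, and the claim follows.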

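Corollary 14 (Differentiation theorem for continuous functions) Let $f: [a,b] \to \mathbf{C}$ be a continuous function on a compact interval. Then we have

$$\lim_{h \to 0^+} \frac{1}{h} \int_{[x, x+h]} f(t)\,dt = f(x)$$

for all $x \in [a,b)$,

$$\lim_{h \to 0^+} \frac{1}{h} \int_{[x-h, x]} f(t)\,dt = f(x)$$

for all $x \in (a,b]$, and thus

$$\lim_{h \to 0^+} \frac{1}{2h} \int_{[x-h, x+h]} f(t)\,dt = f(x)$$

for all $x \in (a,b)$.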
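As a quick numerical illustration of Corollary 14 (a sketch only), take $f = \cos$, whose antiderivative $\sin$ gives the averages in closed form; the point $x = 1$ and the step sizes are arbitrary choices. For this smooth $f$, Taylor expansion shows the one-sided averages have error of order $h$ and the symmetric average error of order $h^2$.

```python
import math


def right_average(F, x, h):
    """(1/h) * integral of f over [x, x + h], computed from an antiderivative F of f."""
    return (F(x + h) - F(x)) / h


def left_average(F, x, h):
    """(1/h) * integral of f over [x - h, x]."""
    return (F(x) - F(x - h)) / h


def symmetric_average(F, x, h):
    """(1/(2h)) * integral of f over [x - h, x + h]."""
    return (F(x + h) - F(x - h)) / (2 * h)


# f = cos is continuous with antiderivative F = sin; all three averages at
# x = 1 should approach cos(1) as h -> 0+.
x = 1.0
for h in (1e-1, 1e-2, 1e-3, 1e-4):
    errors = [avg(math.sin, x, h) - math.cos(x)
              for avg in (right_average, left_average, symmetric_average)]
    print(f"h={h:g}  errors={errors}")
```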

In these notes we explore the question of the extent to which these theorems continue to hold when the differentiability or integrability conditions on the various functions are relaxed. Among the results proven in these notes are

- The Lebesgue differentiation theorem, which roughly speaking asserts that Corollary 14 continues to hold for almost every $x$ if $f$ is merely absolutely integrable, rather than continuous;

- A number of differentiation theorems, which assert for instance that monotone, Lipschitz, or bounded variation functions in one dimension are almost everywhere differentiable; and

- The second fundamental theorem of calculus for absolutely continuous functions.

The material here is loosely based on Chapter 3 of Stein-Shakarchi.

— 1. The Lebesgue differentiation theorem in one dimension —

The main objective of this section is to show

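Theorem 15 (Lebesgue differentiation theorem, one-dimensional case) Let $f: \mathbf{R} \to \mathbf{C}$ be an absolutely integrable function, and let $F: \mathbf{R} \to \mathbf{C}$ be the definite integral $F(x) := \int_{[-\infty, x]} f(t)\,dt$. Then $F$ is continuous and almost everywhere differentiable, and $F'(x) = f(x)$ for almost every $x \in \mathbf{R}$.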

This can be viewed as a variant of Corollary 14. The hypotheses are weaker because $f$ is only assumed to be absolutely integrable, rather than continuous (and can live on the entire real line, and not just on a compact interval). But the conclusion is weaker too, because $F$ is only found to be almost everywhere differentiable, rather than everywhere differentiable. (But such a relaxation of the conclusion is necessary at this level of generality; consider for instance the example when $f = 1_{[0,1]}$.)
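For the example $f = 1_{[0,1]}$ the definite integral is $F(x) = \min(\max(x, 0), 1)$. The small Python sketch below (the step $h = 10^{-6}$ and the sample points are arbitrary choices) compares one-sided difference quotients. They disagree at $x = 0$ and $x = 1$, so $F$ fails to be differentiable exactly on the null set $\{0, 1\}$, while $F'(x) = f(x)$ at every other point.

```python
def F(x):
    """Definite integral of f = 1_[0,1] over (-inf, x], i.e. the length of [0, 1] intersected with (-inf, x]."""
    return min(max(x, 0.0), 1.0)


def one_sided_quotients(x, h=1e-6):
    """Return the (left, right) difference quotients of F at x."""
    left = (F(x) - F(x - h)) / h
    right = (F(x + h) - F(x)) / h
    return left, right


for x in (-0.5, 0.0, 0.5, 1.0, 1.5):
    print(x, one_sided_quotients(x))
```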

The continuity is an easy exercise:

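Exercise 16 Let $f: \mathbf{R} \to \mathbf{C}$ be an absolutely integrable function, and let $F: \mathbf{R} \to \mathbf{C}$ be the definite integral $F(x) := \int_{[-\infty, x]} f(t)\,dt$. Show that $F$ is continuous.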

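The main difficulty is to show that $F'(x) = f(x)$ for almost every $x \in \mathbf{R}$. This will follow from

Theorem 17 (Lebesgue differentiation theorem, second formulation) Let $f: \mathbf{R} \to \mathbf{C}$ be an absolutely integrable function. Then

$$\lim_{h \to 0^+} \frac{1}{h} \int_{[x, x+h]} f(t)\,dt = f(x) \tag{2}$$

and

$$\lim_{h \to 0^+} \frac{1}{h} \int_{[x-h, x]} f(t)\,dt = f(x) \tag{3}$$

for almost every $x \in \mathbf{R}$.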

We will just prove the first fact (2); the second fact (3) is similar (or can be deduced from (2) by replacing $f$ with the reflected function $x \mapsto f(-x)$).

We are taking $f$ to be complex valued, but it is clear from taking real and imaginary parts that it suffices to prove the claim when $f$ is real-valued, and we shall thus assume this for the rest of the argument.

The conclusion (2) we want to prove is a convergence theorem – an assertion that for all functions in a given class (in this case, the class of absolutely integrable functions $f: \mathbf{R} \to \mathbf{R}$), a certain sequence of linear expressions $T_h f$ (in this case, the right averages $T_h f(x) = \frac{1}{h} \int_{[x, x+h]} f(t)\,dt$) converge in some sense (in this case, pointwise almost everywhere) to a specified limit (in this case, $f$). There is a general and very useful argument to prove such convergence theorems, known as the density argument. This argument requires two ingredients, which we state informally as follows:

- A verification of the convergence result for some “dense subclass” of “nice” functions $f$, such as continuous functions, smooth functions, simple functions, etc. By “dense”, we mean that a general function $f$ in the original class can be approximated to arbitrary accuracy in a suitable sense by a function in the nice subclass.

- A quantitative estimate that upper bounds the maximal fluctuation $\sup_{h > 0} |T_h f|$ of the linear expressions $T_h f$ in terms of the “size” of the function $f$ (where the precise definition of “size” depends on the nature of the approximation in the first ingredient).

Once one has these two ingredients, it is usually not too hard to put them together to obtain the desired convergence theorem for general functions (not just those in the dense subclass). We illustrate this with a simple example:

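Proposition 19 (Translation is continuous in $L^1$) Let $f: \mathbf{R}^d \to \mathbf{C}$ be an absolutely integrable function, and for each $h \in \mathbf{R}^d$, let $f_h: \mathbf{R}^d \to \mathbf{C}$ be the shifted function

$$f_h(x) := f(x - h).$$

Then $f_h$ converges in $L^1$ norm to $f$ as $h \to 0$, thus

$$\lim_{h \to 0} \int_{\mathbf{R}^d} |f_h(x) - f(x)|\,dx = 0.$$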

Proof: We first verify this claim for a dense subclass of $f$, namely the functions $f$ which are continuous and compactly supported (i.e. they vanish outside of a compact set). Such functions are uniformly continuous, and thus $f_h$ converges uniformly to $f$ as $h \to 0$. Furthermore, as $f$ is compactly supported, the support of $f_h - f$ stays uniformly bounded for $h$ in a bounded set. From this we see that $f_h$ also converges to $f$ in $L^1$ norm as required.

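Next, we observe the quantitative estimate

$$\|f_h - f\|_{L^1(\mathbf{R}^d)} \leq 2 \|f\|_{L^1(\mathbf{R}^d)} \tag{4}$$

for any $h \in \mathbf{R}^d$. This follows easily from the triangle inequality

$$\|f_h - f\|_{L^1(\mathbf{R}^d)} \leq \|f_h\|_{L^1(\mathbf{R}^d)} + \|f\|_{L^1(\mathbf{R}^d)}$$

together with the translation invariance of the Lebesgue integral:

$$\|f_h\|_{L^1(\mathbf{R}^d)} = \|f\|_{L^1(\mathbf{R}^d)}.$$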

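Now we put the two ingredients together. Let $f: \mathbf{R}^d \to \mathbf{C}$ be absolutely integrable, and let $\epsilon > 0$ be arbitrary. Applying Littlewood’s second principle (Theorem 15 from Notes 2) to the absolutely integrable function $f$, we can find a continuous, compactly supported function $g: \mathbf{R}^d \to \mathbf{C}$ such that

$$\|f - g\|_{L^1(\mathbf{R}^d)} \leq \epsilon.$$

Applying (4), we conclude that

$$\|(f - g)_h - (f - g)\|_{L^1(\mathbf{R}^d)} \leq 2\epsilon,$$

which we rearrange as

$$\|(f_h - f) - (g_h - g)\|_{L^1(\mathbf{R}^d)} \leq 2\epsilon.$$

By the dense subclass result, we also know that

$$\|g_h - g\|_{L^1(\mathbf{R}^d)} \leq \epsilon$$

for all $h$ sufficiently close to zero. From the triangle inequality, we conclude that

$$\|f_h - f\|_{L^1(\mathbf{R}^d)} \leq 3\epsilon$$

for all $h$ sufficiently close to zero, and the claim follows.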
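In one dimension and for an indicator function $f = 1_I$, everything in Proposition 19 can be computed exactly. The Python sketch below is illustrative only (the interval $I = (0,1)$ and the sample shifts are arbitrary choices). Here $|f_h - f|$ is the indicator of the symmetric difference of $I$ and $I + h$. So $\|f_h - f\|_{L^1} = 2|h|$ for $|h| \leq 1$, and $\|f_h - f\|_{L^1} = 2 = 2\|f\|_{L^1}$ for $|h| \geq 1$; in particular, the estimate (4) is attained.

```python
def overlap(I, J):
    """Length of the intersection of the intervals I = (p, q) and J = (r, s)."""
    return max(0.0, min(I[1], J[1]) - max(I[0], J[0]))


def shift_error(h, I=(0.0, 1.0)):
    """||f_h - f||_{L^1(R)} for f = 1_I and f_h(x) = f(x - h) = 1_{I + h}(x).

    |f_h - f| is the indicator of the symmetric difference of I and I + h,
    whose measure is |I| + |I + h| - 2 |I intersect (I + h)|.
    """
    J = (I[0] + h, I[1] + h)
    length = I[1] - I[0]
    return 2 * length - 2 * overlap(I, J)


for h in (0.5, 0.1, 0.01, -0.01, 3.0):
    print(h, shift_error(h))  # 2|h| (up to rounding) for |h| <= 1, and 2 beyond
```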

Remark 20 In the above application of the density argument, we proved the required quantitative estimate directly for all functions $f$ in the original class of functions. However, it is also possible to use the density argument a second time and initially verify the quantitative estimate just for functions $f$ in a nice subclass (e.g. continuous functions of compact support). In many cases, one can then extend that estimate to the general case by using tools such as Fatou’s lemma, which are particularly suited for showing that upper bound estimates are preserved with respect to limits.

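Exercise 21 Let $f: \mathbf{R}^d \to \mathbf{C}$, $g: \mathbf{R}^d \to \mathbf{C}$ be Lebesgue measurable functions such that $f$ is absolutely integrable and $g$ is essentially bounded (i.e. bounded outside of a null set). Show that the convolution $f * g: \mathbf{R}^d \to \mathbf{C}$ defined by the formula

$$f * g(x) = \int_{\mathbf{R}^d} f(y) g(x - y)\,dy$$

is well-defined (in the sense that the integrand on the right-hand side is absolutely integrable) and that $f * g$ is a bounded, continuous function.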

The above exercise is illustrative of a more general intuition, which is that convolutions tend to be smoothing in nature; the convolution $f * g$ of two functions is usually at least as regular as, and often more regular than, either of the two factors $f, g$.

This smoothing phenomenon gives rise to an important fact, namely the Steinhaus theorem:

Exercise 22 (Steinhaus theorem) Let $E \subset \mathbf{R}^d$ be a Lebesgue measurable set of positive measure. Show that the set $E - E := \{ x - y: x, y \in E \}$ contains an open neighbourhood of the origin. (Hint: reduce to the case when $E$ is bounded, and then apply the previous exercise to the convolution $1_E * 1_{-E}$, where $-E := \{ -y: y \in E \}$.)
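To see the hint in action, restrict (purely for simplicity) to sets $E \subset \mathbf{R}$ that are finite unions of disjoint intervals. For such sets the Steinhaus theorem is of course easy, so this is only an illustration of the smoothing mechanism. The Python sketch computes $1_E * 1_{-E}(x) = \int 1_E(y) 1_E(y - x)\,dy = m(E \cap (E + x))$ exactly; the particular set $E$ is an arbitrary choice. The function is continuous (here even Lipschitz), equals $m(E)$ at the origin, and is therefore positive near the origin. Any $x$ at which it is positive lies in $E - E$.

```python
def overlap(I, J):
    """Length of the intersection of the intervals I = (p, q) and J = (r, s)."""
    return max(0.0, min(I[1], J[1]) - max(I[0], J[0]))


def self_convolution(E, x):
    """(1_E * 1_{-E})(x) = m(E intersect (E + x)) for E a list of disjoint intervals.

    The pieces I intersect (J + x) are disjoint for distinct pairs (I, J),
    so their lengths can simply be added.
    """
    return sum(overlap(I, (J[0] + x, J[1] + x)) for I in E for J in E)


E = [(0.0, 1.0), (2.0, 2.5)]  # an arbitrary example set with m(E) = 1.5
for x in (0.0, 0.1, 0.2, 1.0, 2.0, 3.0):
    print(x, self_convolution(E, x))
```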

Exercise 23 A homomorphism $f: \mathbf{R}^d \to \mathbf{C}$ is a map with the property that $f(x + y) = f(x) + f(y)$ for all $x, y \in \mathbf{R}^d$.

- Show that all measurable homomorphisms are continuous. (Hint: for any disk $D$ centered at the origin in the complex plane, show that $f^{-1}(z + D)$ has positive measure for at least one $z \in \mathbf{C}$, and then use the Steinhaus theorem from the previous exercise.)

- Show that $f$ is a measurable homomorphism if and only if it takes the form $f(x_1, \ldots, x_d) = x_1 z_1 + \ldots + x_d z_d$ for all $x_1, \ldots, x_d \in \mathbf{R}$ and some complex coefficients $z_1, \ldots, z_d$. (Hint: first establish this for rational $x_1, \ldots, x_d$, and then use the previous part of this exercise.)

- (For readers familiar with Zorn’s lemma) Show that there exist homomorphisms $f: \mathbf{R}^d \to \mathbf{C}$ which are not of the form in the previous part. (Hint: view $\mathbf{R}^d$ (or $\mathbf{C}$) as a vector space over the rationals $\mathbf{Q}$, and use the fact (from Zorn’s lemma) that every vector space – even an infinite-dimensional one – has at least one basis.) This gives an alternate construction of a non-measurable set to that given in previous notes.

Remark 24 One drawback with the density argument is that it gives convergence results which are qualitative rather than quantitative – there is no explicit bound on the rate of convergence. For instance, in Proposition 19, we know that for any $\epsilon > 0$, there exists $\delta > 0$ such that $\|f_h - f\|_{L^1(\mathbf{R}^d)} \leq \epsilon$ whenever $|h| \leq \delta$, but we do not know exactly how $\delta$ depends on $\epsilon$ and $f$. Actually, the proof does eventually give such a bound, but it depends on “how measurable” the function $f$ is, or more precisely how “easy” it is to approximate $f$ by a “nice” function.

To illustrate this issue, let’s work in one dimension and consider the function $f(x) := \sin(Nx) 1_{[0,2\pi]}(x)$, where $N \geq 1$ is a large integer. On the one hand, $f$ is bounded in the $L^1$ norm uniformly in $N$:

$$\|f\|_{L^1(\mathbf{R})} \leq 2\pi$$

(indeed, the left-hand side is equal to $4$). On the other hand, it is not hard to see that

$$\|f_{\pi/N} - f\|_{L^1(\mathbf{R})} \geq c$$

for some absolute constant $c > 0$. Thus, if one wants to force $\|f_h - f\|_{L^1(\mathbf{R})}$ to drop below $c$, one has to keep $|h| < \pi/N$. Making $N$ large, we thus see that the rate of convergence of $\|f_h - f\|_{L^1(\mathbf{R})}$ to zero can be arbitrarily slow, even though $f$ is bounded in $L^1$. The problem is that as $N$ gets large, it becomes increasingly difficult to approximate $f$ well by a “nice” function, by which we mean a uniformly continuous function with a reasonable modulus of continuity, due to the increasingly oscillatory nature of $f$. See this blog post for some further discussion of this issue, and what quantitative substitutes are available for such qualitative results.
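The example in Remark 24 is easy to probe numerically. This Python sketch approximates $\|f_h - f\|_{L^1(\mathbf{R})}$ by the midpoint rule; the sample count and the values of $N$ are arbitrary choices.

A direct computation gives $\|f_{\pi/N} - f\|_{L^1(\mathbf{R})} = 8$ for every $N$. On $[\pi/N, 2\pi]$ one has $f_{\pi/N} = -f$, and the two end pieces contribute $2/N$ each. So the constant $c$ in the remark can be taken to be $8 = 2\|f\|_{L^1}$, the largest value allowed by (4), even though the shift $\pi/N$ shrinks to zero.

```python
import math


def f(N, x):
    """f(x) = sin(N x) for x in [0, 2 pi], and f(x) = 0 otherwise."""
    return math.sin(N * x) if 0.0 <= x <= 2 * math.pi else 0.0


def shift_distance(N, h, samples=100_000):
    """Midpoint-rule approximation of ||f_h - f||_{L^1(R)}, f_h(x) = f(x - h), for h >= 0.

    Both f and f_h vanish outside [0, 2 pi + h], so only that range is integrated.
    """
    lo, hi = 0.0, 2 * math.pi + h
    dx = (hi - lo) / samples
    total = 0.0
    for k in range(samples):
        x = lo + (k + 0.5) * dx
        total += abs(f(N, x - h) - f(N, x))
    return total * dx


for N in (1, 10, 100):
    print(N, shift_distance(N, math.pi / N))  # close to 8 for every N
```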

Now we return to the Lebesgue differentiation theorem, and apply the density argument. The dense subclass result is already contained in Corollary 14, which asserts that (2) holds for all continuous functions $f$. The quantitative estimate we will need is the following special case of the Hardy-Littlewood maximal inequality:

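Lemma 25 (One-sided Hardy-Littlewood maximal inequality) Let $f: \mathbf{R} \to \mathbf{R}$ be an absolutely integrable function, and let $\lambda > 0$. Then

$$m\Big( \Big\{ x \in \mathbf{R}: \sup_{h > 0} \frac{1}{h} \int_{[x, x+h]} |f(t)|\,dt \geq \lambda \Big\} \Big) \leq \frac{1}{\lambda} \int_{\mathbf{R}} |f(t)|\,dt.$$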

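We will prove this lemma shortly, but let us first see how this, combined with the dense subclass result, will give the Lebesgue differentiation theorem. Let $f: \mathbf{R} \to \mathbf{R}$ be absolutely integrable, and let $\epsilon > 0$ be arbitrary. Then by Littlewood’s second principle, we can find a function $g: \mathbf{R} \to \mathbf{R}$ which is continuous and compactly supported, with

$$\int_{\mathbf{R}} |f(x) - g(x)|\,dx \leq \epsilon.$$

Applying the one-sided Hardy-Littlewood maximal inequality, we conclude that

$$m\Big( \Big\{ x \in \mathbf{R}: \sup_{h > 0} \frac{1}{h} \int_{[x, x+h]} |f(t) - g(t)|\,dt \geq \sqrt{\epsilon} \Big\} \Big) \leq \sqrt{\epsilon}.$$

In a similar spirit, from Markov’s inequality we have

$$m\big( \{ x \in \mathbf{R}: |f(x) - g(x)| \geq \sqrt{\epsilon} \} \big) \leq \sqrt{\epsilon}.$$

By subadditivity, we conclude that for all $x \in \mathbf{R}$ outside of a set $E$ of measure at most $2\sqrt{\epsilon}$, one has both

$$\frac{1}{h} \int_{[x, x+h]} |f(t) - g(t)|\,dt < \sqrt{\epsilon} \tag{5}$$

and

$$|f(x) - g(x)| < \sqrt{\epsilon} \tag{6}$$

for all $h > 0$.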

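Now let $x \in \mathbf{R} \setminus E$. From the dense subclass result (Corollary 14) applied to the continuous function $g$, we have

$$\Big| \frac{1}{h} \int_{[x, x+h]} g(t)\,dt - g(x) \Big| \leq \sqrt{\epsilon}$$

whenever $h > 0$ is sufficiently close to $0$. Combining this with (5), (6), and the triangle inequality, we conclude that

$$\Big| \frac{1}{h} \int_{[x, x+h]} f(t)\,dt - f(x) \Big| \leq 3\sqrt{\epsilon}$$

for all $h > 0$ sufficiently close to zero. In particular we have

$$\limsup_{h \to 0^+} \Big| \frac{1}{h} \int_{[x, x+h]} f(t)\,dt - f(x) \Big| \leq 3\sqrt{\epsilon}$$

for all $x$ outside of a set of measure $2\sqrt{\epsilon}$. Keeping $\epsilon_0 > 0$ fixed and sending $\epsilon$ to zero (for every $\epsilon$ with $3\sqrt{\epsilon} \leq \epsilon_0$, the set where the following bound fails has measure at most $2\sqrt{\epsilon}$), we conclude that

$$\limsup_{h \to 0^+} \Big| \frac{1}{h} \int_{[x, x+h]} f(t)\,dt - f(x) \Big| \leq \epsilon_0$$

for almost every $x$. If we then let $\epsilon_0$ go to zero along a countable sequence (e.g. $\epsilon_0 := 1/n$ for $n = 1, 2, \ldots$), we conclude that

$$\limsup_{h \to 0^+} \Big| \frac{1}{h} \int_{[x, x+h]} f(t)\,dt - f(x) \Big| = 0$$

for almost every $x$, and the claim follows.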

The only remaining task is to establish the one-sided Hardy-Littlewood maximal inequality. We will do so by using the rising sun lemma:

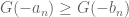

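Lemma 26 (Rising sun lemma) Let $[a,b]$ be a compact interval, and let $F: [a,b] \to \mathbf{R}$ be a continuous function. Then one can find an at most countable family of disjoint non-empty open intervals $I_n = (a_n, b_n)$ in $[a,b]$ with the following properties:

- For each $n$, either $F(a_n) = F(b_n)$, or else $a_n = a$ and $F(b_n) \geq F(a_n)$.

- If $x \in (a,b]$ does not lie in any of the intervals $I_n$, then one must have $F(y) \leq F(x)$ for all $x \leq y \leq b$.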

Remark 27 To explain the name “rising sun lemma”, imagine the graph $\{ (x, F(x)): x \in [a,b] \}$ of $F$ as depicting a hilly landscape, with the sun shining horizontally from the rightward infinity $+\infty$ (or rising from the east, if you will). Those $x$ for which $F(y) \leq F(x)$ for all $x \leq y \leq b$ are the locations on the landscape which are illuminated by the sun. The intervals $I_n$ then represent the portions of the landscape that are in shadow.

This lemma is proven using the following basic fact:

Exercise 28 Show that any open subset $U$ of $\mathbf{R}$ can be written as the union of at most countably many disjoint non-empty open intervals, whose endpoints lie outside of $U$. (Hint: first show that every $x$ in $U$ is contained in a maximal open subinterval $(a,b)$ of $U$, and that these maximal open subintervals are disjoint, with each such interval containing at least one rational number.)

Proof: (Proof of rising sun lemma) Let $U$ be the set of all $x \in (a,b)$ such that $F(y) > F(x)$ for at least one $x < y \leq b$. As $F$ is continuous, $U$ is open, and so $U$ is the union of at most countably many disjoint non-empty open intervals $I_n = (a_n, b_n)$, with the endpoints $a_n, b_n$ lying outside of $U$.

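The second conclusion of the rising sun lemma is clear from construction, so it suffices to establish the first. Suppose first that $I_n = (a_n, b_n)$ is such that $a_n \neq a$. As the endpoint $a_n$ does not lie in $U$, we must have $F(y) \leq F(a_n)$ for all $a_n \leq y \leq b$; similarly we have $F(y) \leq F(b_n)$ for all $b_n \leq y \leq b$. In particular we have $F(b_n) \leq F(a_n)$. By the continuity of $F$, it will then suffice to show that $F(b_n) \geq F(x)$ for all $a_n < x < b_n$.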

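Suppose for contradiction that there was $a_n < x < b_n$ with $F(b_n) < F(x)$. Let $A := \{ y \in [x, b]: F(y) \geq F(x) \}$; then $A$ is a closed set that contains $x$ but is disjoint from $[b_n, b]$, since $F(y) \leq F(b_n) < F(x)$ for all $y \in [b_n, b]$. Set $c := \sup A$; then $c \in A$ and $x \leq c < b_n$, so $c \in I_n \subset U$, and thus there exists $c < y \leq b$ such that $F(y) > F(c)$. Since $F(c) \geq F(x)$, and $F(z) < F(x)$ for all $b_n \leq z \leq b$, we see that $y$ cannot exceed $b_n$, and thus lies in $A$, but this contradicts the fact that $c$ is the supremum of $A$.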

The case when $a_n = a$ is similar and is left to the reader; the only difference is that we can no longer assert that $F(y) \leq F(a_n)$ for all $a_n \leq y \leq b$, and so do not have the upper bound $F(b_n) \leq F(a_n)$.
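A discrete version of the rising sun lemma is easy to compute: sweep from the right (the east), keep a running maximum, and mark a grid point as shadowed when some later sample is strictly larger. The Python sketch below is illustrative; the grid, the helper name `shadow_intervals`, and the test function are arbitrary choices. For $F = \sin$ on $[0, 2\pi]$ the shadows are approximately $[0, \pi/2)$, an interval starting at $a$ with $F(b_n) \geq F(a_n)$, and $(\pi, 2\pi)$, where $F(a_n) = F(b_n) = 0$. This matches the two alternatives in the first property.

```python
import math


def shadow_intervals(xs, values):
    """Discrete rising sun lemma for samples values[i] = F(xs[i]), xs increasing.

    A grid point is in shadow if some later sample is strictly larger. Returns
    the maximal runs of shadowed points as pairs (left, right): right is the
    first lit point after the run, and left is the last lit point before it
    (or xs[0] if the run starts at the left endpoint).
    """
    n = len(values)
    shadow = [False] * n
    best_to_the_right = -math.inf
    for i in range(n - 1, -1, -1):  # sweep from the east, like the sun
        shadow[i] = best_to_the_right > values[i]
        best_to_the_right = max(best_to_the_right, values[i])

    intervals = []
    i = 0
    while i < n:
        if not shadow[i]:
            i += 1
            continue
        j = i
        while shadow[j + 1]:  # the last point is never in shadow, so this stops
            j += 1
        left = xs[i - 1] if i > 0 else xs[0]
        intervals.append((left, xs[j + 1]))
        i = j + 1
    return intervals


n = 1000
xs = [2 * math.pi * k / n for k in range(n + 1)]
runs = shadow_intervals(xs, [math.sin(x) for x in xs])
print(runs)  # roughly [(0, pi/2), (pi, 2 pi)]
```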

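Now we can prove the one-sided Hardy-Littlewood maximal inequality. By upwards monotonicity, it will suffice to show that

$$m\Big( \Big\{ x \in [a,b]: \sup_{x < y \leq b} \frac{1}{y - x} \int_{[x, y]} |f(t)|\,dt \geq \lambda \Big\} \Big) \leq \frac{1}{\lambda} \int_{\mathbf{R}} |f(t)|\,dt$$

for any compact interval $[a,b]$. By modifying $\lambda$ by an epsilon, we may replace the non-strict inequality here with strict inequality:

$$m\Big( \Big\{ x \in [a,b]: \sup_{x < y \leq b} \frac{1}{y - x} \int_{[x, y]} |f(t)|\,dt > \lambda \Big\} \Big) \leq \frac{1}{\lambda} \int_{\mathbf{R}} |f(t)|\,dt. \tag{7}$$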

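Fix $[a,b]$. We apply the rising sun lemma to the function $F: [a,b] \to \mathbf{R}$ defined as

$$F(x) := \int_{[a, x]} |f(t)|\,dt - \lambda (x - a).$$

By Exercise 16, $F$ is continuous, and so we can find an at most countable sequence of intervals $I_n = (a_n, b_n)$ with the properties given by the rising sun lemma. From the second property of that lemma, we observe that

$$\Big\{ x \in (a,b]: \sup_{x < y \leq b} \frac{1}{y - x} \int_{[x, y]} |f(t)|\,dt > \lambda \Big\} \subset \bigcup_n I_n,$$

since the property $\frac{1}{y - x} \int_{[x, y]} |f(t)|\,dt > \lambda$ can be rearranged as $F(y) > F(x)$. As the single point $a$ has measure zero, countable additivity lets us upper bound the left-hand side of (7) by $\sum_n (b_n - a_n)$. On the other hand, since $F(b_n) - F(a_n) \geq 0$, we have

$$\int_{I_n} |f(t)|\,dt \geq \lambda (b_n - a_n)$$

and thus

$$\sum_n (b_n - a_n) \leq \frac{1}{\lambda} \sum_n \int_{I_n} |f(t)|\,dt.$$

As the $I_n$ are disjoint intervals in $[a,b]$, we may apply monotone convergence and monotonicity to conclude that

$$\sum_n \int_{I_n} |f(t)|\,dt \leq \int_{[a,b]} |f(t)|\,dt \leq \int_{\mathbf{R}} |f(t)|\,dt,$$

and the claim follows.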
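Lemma 25 can be tested on step functions, where the one-sided maximal function is computable exactly. This Python sketch assumes (for simplicity) that $f$ is a finite step function. It estimates the measure of the superlevel set on a grid; the helper names and the example functions are illustrative choices, not from the notes.

Between consecutive breakpoints, $h \mapsto \frac{1}{h} \int_{[x,x+h]} |f|$ is monotone. So the supremum is either the limit as $h \to 0^+$ or is attained when $x + h$ is a breakpoint. For $f = 1_{[0,1]}$ and $0 < \lambda \leq 1$, the set $\{ \sup_{h>0} \frac{1}{h} \int_{[x,x+h]} |f| \geq \lambda \}$ is $[1 - 1/\lambda, 1)$, of measure exactly $1/\lambda$. So the constant in Lemma 25 cannot be improved.

```python
def one_sided_maximal(steps, x):
    """sup_{h > 0} (1/h) * integral of |f| over [x, x + h], for a step function
    f given as a list of (left, right, value) pieces with disjoint supports.

    Between consecutive breakpoints the map h -> (1/h) * integral is monotone,
    so the supremum is the limit as h -> 0+ (the value of |f| just to the right
    of x) or a value attained when x + h is a breakpoint.
    """
    def integral(lo, hi):
        return sum(abs(v) * max(0.0, min(hi, r) - max(lo, l)) for l, r, v in steps)

    best = max((abs(v) for l, r, v in steps if l <= x < r), default=0.0)
    for l, r, _ in steps:
        for p in (l, r):
            if p > x:
                best = max(best, integral(x, p) / (p - x))
    return best


def superlevel_measure(steps, lam, lo, hi, n=20_000):
    """Midpoint-grid estimate of m({x in [lo, hi]: one_sided_maximal(x) > lam})."""
    dx = (hi - lo) / n
    count = sum(1 for k in range(n) if one_sided_maximal(steps, lo + (k + 0.5) * dx) > lam)
    return count * dx


indicator = [(0.0, 1.0, 1.0)]                   # f = 1_[0,1], ||f||_1 = 1
two_steps = [(0.0, 1.0, 1.0), (2.0, 3.0, 2.0)]  # ||f||_1 = 3
m1 = superlevel_measure(indicator, 0.5, -5.0, 5.0)
m2 = superlevel_measure(two_steps, 1.5, -5.0, 5.0)
print(m1, "<=", 1.0 / 0.5)  # essentially equal: the set is (-1, 1)
print(m2, "<=", 3.0 / 1.5)  # the set is (5/3, 3)
```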

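Exercise 29 (Two-sided Hardy-Littlewood maximal inequality) Let $f: \mathbf{R} \to \mathbf{C}$ be an absolutely integrable function, and let $\lambda > 0$. Show that

$$m\Big( \Big\{ x \in \mathbf{R}: \sup_{x \in I} \frac{1}{|I|} \int_I |f(t)|\,dt \geq \lambda \Big\} \Big) \leq \frac{2}{\lambda} \int_{\mathbf{R}} |f(t)|\,dt,$$

where the supremum ranges over all intervals $I$ of positive length that contain $x$.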

Exercise 30 (Rising sun inequality) Let $f: \mathbf{R} \to \mathbf{R}$ be an absolutely integrable function, and let $f^*$ be the one-sided signed Hardy-Littlewood maximal function

$$f^*(x) := \sup_{h > 0} \frac{1}{h} \int_{[x, x+h]} f(t)\,dt.$$

Establish the rising sun inequality

$$\lambda\, m(\{ x \in \mathbf{R}: f^*(x) > \lambda \}) \leq \int_{\{ x \in \mathbf{R}: f^*(x) > \lambda \}} f(x)\,dx$$

for all real $\lambda$ (note here that we permit $\lambda$ to be zero or negative), and show that this inequality implies Lemma 25. (Hint: First do the $\lambda = 0$ case, by invoking the rising sun lemma.) See these lecture notes for some further discussion of inequalities of this type, and applications to ergodic theory (and in particular the maximal ergodic theorem).

Exercise 31 Show that the left and right-hand sides in Exercise 30 are in fact equal when $\lambda > 0$. (Hint: one may first wish to try this in the case when $f$ has compact support, in which case one can apply the rising sun lemma to a sufficiently large interval containing the support of $f$.)

— 2. The Lebesgue differentiation theorem in higher dimensions —

Now we extend the Lebesgue differentiation theorem to higher dimensions. Theorem 15 does not have an obvious high-dimensional analogue, but Theorem 17 does:

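Theorem 32 (Lebesgue differentiation theorem in high dimensions) Let $f: \mathbf{R}^d \to \mathbf{C}$ be an absolutely integrable function. Then for almost every $x \in \mathbf{R}^d$, one has

$$\lim_{r \to 0} \frac{1}{|B(x,r)|} \int_{B(x,r)} |f(y) - f(x)|\,dy = 0 \tag{8}$$

and

$$\lim_{r \to 0} \frac{1}{|B(x,r)|} \int_{B(x,r)} f(y)\,dy = f(x),$$

where $B(x,r) := \{ y \in \mathbf{R}^d: |x - y| < r \}$ is the open ball of radius $r$ centred at $x$.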

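From the triangle inequality we see that

$$\Big| \frac{1}{|B(x,r)|} \int_{B(x,r)} f(y)\,dy - f(x) \Big| = \Big| \frac{1}{|B(x,r)|} \int_{B(x,r)} (f(y) - f(x))\,dy \Big| \leq \frac{1}{|B(x,r)|} \int_{B(x,r)} |f(y) - f(x)|\,dy,$$

so we see that the first conclusion of Theorem 32 implies the second. A point $x$ for which (8) holds is called a Lebesgue point of $f$; thus, for an absolutely integrable function $f$, almost every point in $\mathbf{R}^d$ will be a Lebesgue point for $f$.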

Exercise 33 Call a function $f: \mathbf{R}^d \to \mathbf{C}$ locally integrable if, for every $x \in \mathbf{R}^d$, there exists an open neighbourhood of $x$ on which $f$ is absolutely integrable.

- Show that $f$ is locally integrable if and only if $\int_{B(0,r)} |f(x)|\,dx < \infty$ for all $r > 0$.

- Show that Theorem 32 implies a generalisation of itself in which the condition of absolute integrability of $f$ is weakened to local integrability.

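Exercise 34 For each $h > 0$, let $E_h$ be a subset of $B(0,h)$ with the property that $m(E_h) \geq c\, m(B(0,h))$ for some $c > 0$ independent of $h$. Show that if $f: \mathbf{R}^d \to \mathbf{C}$ is locally integrable, and $x$ is a Lebesgue point of $f$, then

$$\lim_{h \to 0} \frac{1}{m(E_h)} \int_{x + E_h} f(y)\,dy = f(x).$$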

To prove Theorem 32, we use the density argument. The dense subclass case is easy:

Exercise 35 Show that Theorem 32 holds whenever $f$ is continuous.

The quantitative estimate needed is the following:

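Theorem 36 (Hardy-Littlewood maximal inequality) Let $f: \mathbf{R}^d \to \mathbf{C}$ be an absolutely integrable function, and let $\lambda > 0$. Then

$$m\Big( \Big\{ x \in \mathbf{R}^d: \sup_{r > 0} \frac{1}{|B(x,r)|} \int_{B(x,r)} |f(y)|\,dy \geq \lambda \Big\} \Big) \leq \frac{C_d}{\lambda} \int_{\mathbf{R}^d} |f(x)|\,dx$$

for some constant $C_d > 0$ depending only on $d$.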

Remark 37 The expression $\sup_{r > 0} \frac{1}{|B(x,r)|} \int_{B(x,r)} |f(y)|\,dy$ is known as the Hardy-Littlewood maximal function of $f$, and is often denoted $Mf(x)$. It is an important function in the field of (real-variable) harmonic analysis.

Exercise 38 Use the density argument to show that Theorem 36 implies Theorem 32.

In the one-dimensional case, this estimate was established via the rising sun lemma. Unfortunately, that lemma relied heavily on the ordered nature of $\mathbf{R}$, and does not have an obvious analogue in higher dimensions. Instead, we will use the following covering lemma. Given an open ball $B = B(x,r)$ in $\mathbf{R}^d$ and a real number $c > 0$, we write $cB := B(x, cr)$ for the ball with the same centre as $B$, but $c$ times the radius. (Note that this is slightly different from the set $c \cdot B := \{ cy: y \in B \}$ – why?) Note that $|cB| = c^d |B|$ for any open ball $B \subset \mathbf{R}^d$ and any $c > 0$.

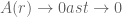

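Lemma 39 (Vitali-type covering lemma) Let $B_1, \ldots, B_n$ be a finite collection of open balls in $\mathbf{R}^d$ (not necessarily disjoint). Then there exists a subcollection $B'_1, \ldots, B'_m$ of disjoint balls in this collection, such that

$$\bigcup_{i=1}^n B_i \subset \bigcup_{j=1}^m 3B'_j. \tag{9}$$

In particular, by finite subadditivity,

$$m\Big( \bigcup_{i=1}^n B_i \Big) \leq 3^d \sum_{j=1}^m m(B'_j).$$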

Proof: We use a greedy algorithm argument, selecting the balls to be as large as possible while remaining disjoint. More precisely, we run the following algorithm:

- Step 0. Initialise $m := 0$ (so that, initially, there are no balls $B'_1, \ldots, B'_m$ in the desired collection).

- Step 1. Look at all the balls $B_i$ that do not already intersect one of the $B'_1, \ldots, B'_m$ (which, initially, will be all the balls $B_1, \ldots, B_n$). If there are no such balls, STOP. Otherwise, go on to Step 2.

- Step 2. Locate the largest ball $B_i$ that does not already intersect one of the $B'_1, \ldots, B'_m$. (If there are multiple largest balls with exactly the same radius, break the tie arbitrarily.) Add this ball to the collection $B'_1, \ldots, B'_m$ by setting $B'_{m+1} := B_i$ and then incrementing $m$ to $m + 1$. Then return to Step 1.

Note that at each iteration of this algorithm, the number of available balls amongst the $B_i$ drops by at least one (since each ball selected certainly intersects itself and so cannot be selected again). So this algorithm terminates in finite time. It is also clear from construction that the $B'_1, \ldots, B'_m$ are a subcollection of the $B_1, \ldots, B_n$ consisting of disjoint balls. So the only task remaining is to verify that (9) holds at the completion of the algorithm, i.e. to show that each ball $B_i$ in the original collection is covered by the triples $3B'_j$ of the subcollection.

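For this, we argue as follows. Take any ball $B_i$ in the original collection. Because the algorithm only halts when there are no more balls that are disjoint from the $B'_1, \ldots, B'_m$, the ball $B_i$ must intersect at least one of the balls $B'_j$ in the subcollection. Let $B'_j$ be the first ball with this property, thus $B_i$ is disjoint from $B'_1, \ldots, B'_{j-1}$, but intersects $B'_j$. Because $B'_j$ was chosen to be largest amongst all balls that did not intersect $B'_1, \ldots, B'_{j-1}$, we conclude that the radius of $B_i$ cannot exceed that of $B'_j$. From the triangle inequality, this implies that $B_i \subset 3B'_j$ (if $B_i = B(x_i, r_i)$ and $B'_j = B(x'_j, r'_j)$ intersect, then $|x_i - x'_j| < r_i + r'_j \leq 2r'_j$, so every point of $B_i$ lies within $r_i + 2r'_j \leq 3r'_j$ of $x'_j$), and the claim follows.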
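The greedy selection in Lemma 39 is short to implement. The Python sketch below is illustrative; the helper names and the random test data with a fixed seed are arbitrary choices. It uses the standard equivalent way of running Steps 0-2: sort the balls once by decreasing radius (ties in list order), then keep each ball that is disjoint from the balls already kept. It then checks the conclusion (9) through the containment criterion $B(c,r) \subset B(c', 3r')$ whenever $|c - c'| + r \leq 3r'$.

```python
import math
import random


def vitali_select(balls):
    """Greedy subcollection from the proof of Lemma 39.

    balls is a list of (centre, radius) pairs, where centre is a tuple of floats.
    Scanning in order of decreasing radius and keeping each ball disjoint from
    those already kept selects the same balls as Steps 0-2 (ties in list order).
    """
    chosen = []
    for centre, radius in sorted(balls, key=lambda ball: -ball[1]):
        # open balls B(c, r) and B(c', r') are disjoint iff |c - c'| >= r + r'
        if all(math.dist(centre, c) >= radius + r for c, r in chosen):
            chosen.append((centre, radius))
    return chosen


def covered_by_triples(balls, chosen, tol=1e-12):
    """Check (9): each B(c, r) lies in some 3B' = B(c', 3r'), via |c - c'| + r <= 3r'."""
    return all(
        any(math.dist(c, c2) + r <= 3 * r2 + tol for c2, r2 in chosen)
        for c, r in balls
    )


rng = random.Random(0)  # arbitrary example: 200 random discs in the plane
balls = [((rng.uniform(0, 10), rng.uniform(0, 10)), rng.uniform(0.1, 2.0)) for _ in range(200)]
chosen = vitali_select(balls)
print(len(chosen), covered_by_triples(balls, chosen))
```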

Exercise 40 Technically speaking, the above algorithmic argument was not phrased in the standard language of formal mathematical deduction, because in that language, any mathematical object (such as the natural number $m$) can only be defined once, and not redefined multiple times as is done in most algorithms. Rewrite the above argument in a way that avoids redefining any variable. (Hint: introduce a “time” variable $t$, and recursively construct families $B'_{1,t}, \ldots, B'_{m_t,t}$ of balls that represent the outcome of the above algorithm after $t$ iterations (or $t_*$ iterations, if the algorithm halted at some previous time $t_* < t$). For this particular algorithm, there are also more ad hoc approaches that exploit the relatively simple nature of the algorithm to allow for a less notationally complicated construction.) More generally, it is possible to use this time parameter trick to convert any construction involving a provably terminating algorithm into a construction that does not redefine any variable. (It is however dangerous to work with any algorithm that has an infinite run time, unless one has a suitably strong convergence result for the algorithm that allows one to take limits, either in the classical sense or in the more general sense of jumping to limit ordinals; in the latter case, one needs to use transfinite induction in order to ensure that the use of such algorithms is rigorous.)

Remark 41 The actual Vitali covering lemma is slightly different to this one, as the linked Wikipedia page shows. Actually there is a family of related covering lemmas which are useful for a variety of tasks in harmonic analysis, see for instance this book by de Guzmán for further discussion.

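Now we can prove the Hardy-Littlewood inequality, which we will do with the constant $C_d := 3^d$. It suffices to verify the claim with strict inequality,

$$m\Big( \Big\{ x \in \mathbf{R}^d: \sup_{r > 0} \frac{1}{|B(x,r)|} \int_{B(x,r)} |f(y)|\,dy > \lambda \Big\} \Big) \leq \frac{3^d}{\lambda} \int_{\mathbf{R}^d} |f(x)|\,dx,$$

as the non-strict case then follows by perturbing $\lambda$ slightly and then taking limits.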

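Fix $f$ and $\lambda$. By inner regularity, it suffices to show that

$$m(K) \leq \frac{3^d}{\lambda} \int_{\mathbf{R}^d} |f(x)|\,dx$$

whenever $K$ is a compact set that is contained in $\{ x \in \mathbf{R}^d: \sup_{r > 0} \frac{1}{|B(x,r)|} \int_{B(x,r)} |f(y)|\,dy > \lambda \}$.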

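By construction, for every $x \in K$, there exists an open ball $B(x,r)$ such that

$$\frac{1}{|B(x,r)|} \int_{B(x,r)} |f(y)|\,dy > \lambda. \tag{10}$$

By compactness of $K$, we can cover $K$ by a finite number $B_1, \ldots, B_n$ of such balls. Applying the Vitali-type covering lemma, we can find a subcollection $B'_1, \ldots, B'_m$ of disjoint balls such that

$$m\Big( \bigcup_{i=1}^n B_i \Big) \leq 3^d \sum_{j=1}^m m(B'_j).$$

By (10), on each ball $B'_j$ we have

$$m(B'_j) < \frac{1}{\lambda} \int_{B'_j} |f(y)|\,dy;$$

summing in $j$ and using the disjointness of the $B'_j$ we conclude that

$$m\Big( \bigcup_{i=1}^n B_i \Big) \leq \frac{3^d}{\lambda} \int_{\mathbf{R}^d} |f(y)|\,dy.$$

Since the $B_1, \ldots, B_n$ cover $K$, we obtain Theorem 36 as desired.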

Exercise 42 Improve the constant $3^d$ in the Hardy-Littlewood maximal inequality to $2^d$. (Hint: observe that with the construction used to prove the Vitali covering lemma, the centres of the balls $B_i$ are contained in $\bigcup_{j=1}^m 2B'_j$ and not just in $\bigcup_{j=1}^m 3B'_j$. To exploit this observation one may need to first create an epsilon of room, as the centers are not by themselves sufficient to cover the required set.)

Remark 43 The optimal value of $C_d$ is not known in general, although a fairly recent result of Melas gives the surprising conclusion that the optimal value of $C_1$ is $\frac{11 + \sqrt{61}}{12} = 1.5675\ldots$. It is known that $C_d$ grows at most linearly in $d$, thanks to a result of Stein and Strömberg, but it is not known if $C_d$ is bounded in $d$ or grows as $d \to \infty$. See this blog post for some further discussion.

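Exercise 44 (Dyadic maximal inequality) If $f: \mathbf{R}^d \to \mathbf{C}$ is an absolutely integrable function, establish the dyadic Hardy-Littlewood maximal inequality

$$m\Big( \Big\{ x \in \mathbf{R}^d: \sup_{Q \ni x} \frac{1}{|Q|} \int_Q |f(y)|\,dy \geq \lambda \Big\} \Big) \leq \frac{1}{\lambda} \int_{\mathbf{R}^d} |f(y)|\,dy$$

for all $\lambda > 0$, where the supremum ranges over all dyadic cubes $Q$ that contain $x$. (Hint: the nesting property of dyadic cubes will be useful when it comes to the covering lemma stage of the argument, much as it was in Exercise 8 of Notes 1.)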

Exercise 45 (Besicovitch covering lemma in one dimension) Let $I_1, \ldots, I_n$ be a finite family of open intervals in $\mathbf{R}$ (not necessarily disjoint). Show that there exists a subfamily $I'_1, \ldots, I'_m$ of intervals such that

- $\bigcup_{i=1}^n I_i = \bigcup_{j=1}^m I'_j$; and

- Each point $x \in \mathbf{R}$ is contained in at most two of the $I'_j$.

(Hint: First refine the family of intervals so that no interval $I_i$ is contained in the union of the other intervals. At that point, show that it is no longer possible for a point to be contained in three of the intervals.) There is a variant of this lemma that holds in higher dimensions, known as the Besicovitch covering lemma.

Exercise 46 Let $\mu$ be a Borel measure (i.e. a countably additive measure on the Borel $\sigma$-algebra) on $\mathbf{R}$, such that $0 < \mu(I) < \infty$ for every interval $I$ of positive length. Assume that $\mu$ is inner regular, in the sense that $\mu(E) = \sup \{ \mu(K): K \subset E,\ K \text{ compact} \}$ for every Borel measurable set $E$. (As it turns out, from the theory of Radon measures, all locally finite Borel measures have this property, but we will not prove this here; see Exercise 12 of these notes.) Establish the Hardy-Littlewood maximal inequality

$$\mu\Big( \Big\{ x \in \mathbf{R}: \sup_{x \in I} \frac{1}{\mu(I)} \int_I |f(y)|\,d\mu(y) \geq \lambda \Big\} \Big) \leq \frac{2}{\lambda} \int_{\mathbf{R}} |f(y)|\,d\mu(y)$$

for any absolutely integrable function $f \in L^1(\mu)$ and any $\lambda > 0$, where the supremum ranges over all open intervals $I$ that contain $x$. Note that this essentially generalises Exercise 29, in which $\mu$ is replaced by Lebesgue measure. (Hint: Repeat the proof of the usual Hardy-Littlewood maximal inequality, but use the Besicovitch covering lemma in place of the Vitali-type covering lemma. Why do we need the former lemma here instead of the latter?)

Exercise 47 (Cousin’s theorem) Prove Cousin’s theorem: given any function $\delta: [a,b] \to (0, +\infty)$ on a compact interval $[a,b]$ of positive length, there exists a partition $a = t_0 < t_1 < \ldots < t_k = b$ with $k \geq 1$, together with real numbers $t_j^* \in [t_{j-1}, t_j]$ for each $1 \leq j \leq k$, such that $t_j - t_{j-1} \leq \delta(t_j^*)$. (Hint: use the Heine-Borel theorem, which asserts that any open cover of $[a,b]$ has a finite subcover, followed by the Besicovitch covering lemma.) This theorem is useful in a variety of applications related to the second fundamental theorem of calculus, as we shall see below. The positive function $\delta$ is known as a gauge function.

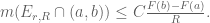

Now we turn to consequences of the Lebesgue differentiation theorem. Given a Lebesgue measurable set $E \subset \mathbf{R}^d$, call a point $x \in \mathbf{R}^d$ a point of density for $E$ if

$$\frac{m(E \cap B(x,r))}{m(B(x,r))} \to 1$$

as $r \to 0$. Thus, for instance, if $E = [-1,1] \setminus \{0\}$, then every point in $(-1,1)$ (including the boundary point $0$) is a point of density for $E$, but the endpoints $-1, 1$ (as well as the exterior of $E$) are not points of density. One can think of a point of density as being an “almost interior” point of $E$; it is not necessarily the case that one can fit a small ball $B(x,r)$ centred at $x$ inside of $E$, but one can fit most of that small ball inside $E$.
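For the example $E = [-1,1] \setminus \{0\}$ the density ratios can be computed exactly. The one-dimensional Python sketch below uses illustrative sample points and radii. Since removing a single point does not change any measure, $E$ is represented by the interval $(-1,1)$. The ratio is $1$ at $0$ and at $0.5$, it is $1/2$ at the endpoints $\pm 1$, and it is $0$ outside $[-1,1]$.

```python
def density_ratio(x, r, E=((-1.0, 1.0),)):
    """m(E intersect B(x, r)) / m(B(x, r)) in one dimension, with B(x, r) = (x - r, x + r).

    E is a finite union of disjoint intervals; deleting finitely many points
    (such as 0 from [-1, 1]) changes no measures, so [-1, 1] minus {0} is
    represented by the single interval (-1, 1).
    """
    lo, hi = x - r, x + r
    inside = sum(max(0.0, min(b, hi) - max(a, lo)) for a, b in E)
    return inside / (2 * r)


for x in (0.0, 0.5, 1.0, -1.0, 1.5):
    print(x, [round(density_ratio(x, r), 6) for r in (0.1, 0.01, 0.001)])
```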

Exercise 48 If $E \subset \mathbf{R}^d$ is Lebesgue measurable, show that almost every point in $E$ is a point of density for $E$, and almost every point in the complement of $E$ is not a point of density for $E$.

Exercise 49 Let $E \subset \mathbf{R}^d$ be a measurable set of positive measure, and let $\epsilon > 0$.

- Using Exercise 34 and Exercise 48, show that there exists a cube $Q \subset \mathbf{R}^d$ of positive sidelength such that $m(E \cap Q) > (1 - \epsilon) m(Q)$.

- Give an alternate proof of the above claim that avoids the Lebesgue differentiation theorem. (Hint: reduce to the case when $E$ is bounded, then approximate $E$ by an almost disjoint union of cubes.)

- Use the above result to give an alternate proof of the Steinhaus theorem (Exercise 22).

Of course, one can replace cubes here by other comparable shapes, such as balls. (Indeed, a good principle to adopt in analysis is that cubes and balls are “equivalent up to constants”, in that a cube of some sidelength can be contained in a ball of comparable radius, and vice versa. This type of mental equivalence is analogous to, though not identical with, the famous dictum that a topologist cannot distinguish a doughnut from a coffee cup.)

Exercise 50

- Give an example of a compact set $K \subset \mathbb{R}$ of positive measure such that $m(K \cap I) < |I|$ for every interval $I$ of positive length. (Hint: first construct an open dense subset of $[0,1]$ of measure strictly less than $1$.)

- Give an example of a measurable set $E \subset \mathbb{R}$ such that $0 < m(E \cap I) < |I|$ for every interval $I$ of positive length. (Hint: first work in a bounded interval, such as $(-1,2)$. The complement of the set $K$ in the first example is the union of at most countably many open intervals, thanks to Exercise 28. Now fill in these open intervals and iterate.)

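Exercise 51 (Approximations to the identity) Define a good kernel to be a measurable function $P: \mathbb{R}^d \to \mathbb{R}^+$ which is:

- non-negative;
- radial, which means that there is a function $\tilde P: [0,+\infty) \to \mathbb{R}^+$ such that $P(x) = \tilde P(|x|)$;
- radially non-increasing, so that $\tilde P$ is a non-increasing function;
- of total mass $\int_{\mathbb{R}^d} P(x)\,dx$ equal to $1$.

The functions $P_t(x) := \frac{1}{t^d} P(\frac{x}{t})$ for $t > 0$ are then said to be a good family of approximations to the identity.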

- Show that the heat kernels $P_t(x) := \frac{1}{(4\pi t^2)^{d/2}} e^{-|x|^2/4t^2}$ and Poisson kernels $P_t(x) := c_d \frac{t}{(t^2 + |x|^2)^{(d+1)/2}}$ are good families of approximations to the identity, if the constant $c_d > 0$ is chosen correctly (in fact one has $c_d = \Gamma((d+1)/2)/\pi^{(d+1)/2}$, but you are not required to establish this). (Note that we have modified the usual formulation of the heat kernel by replacing $t$ with $t^2$ in order to make it conform to the notational conventions used in this exercise.)

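- Show that if $P$ is a good kernel, then $c_d \leq \sum_{n \in \mathbb{Z}} 2^{dn} \tilde P(2^n) \leq C_d$ for some constants $0 < c_d < C_d$ depending only on $d$. (Hint: compare $P$ with such “horizontal wedding cake” functions as $\sum_{n \in \mathbb{Z}} 1_{2^{n-1} < |x| \leq 2^n} \tilde P(2^n)$.)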

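- Establish the quantitative upper bound $|f * P_t(x)| \leq C_d \sup_{r > 0} \frac{1}{m(B(x,r))} \int_{B(x,r)} |f(y)|\,dy$ for any absolutely integrable function $f: \mathbb{R}^d \to \mathbb{C}$ and some constant $C_d > 0$ depending only on $d$.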

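- Show that if $f: \mathbb{R}^d \to \mathbb{C}$ is absolutely integrable and $x \in \mathbb{R}^d$ is a Lebesgue point of $f$, then the convolution $f * P_t(x) := \int_{\mathbb{R}^d} f(y) P_t(x-y)\,dy$ converges to $f(x)$ as $t \to 0$. (Hint: split $f$ as the sum of $f 1_{B(x,r)}$ and $f 1_{\mathbb{R}^d \backslash B(x,r)}$ for a small radius $r > 0$.) In particular, $f * P_t$ converges pointwise almost everywhere to $f$.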
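The last part of Exercise 51 can be watched numerically in one dimension. The Python sketch below approximates $f * P_t(x)$ by a midpoint rule for $f = 1_{[0,1]}$. It uses the heat kernel in the form $P_t(x) = (4\pi t^2)^{-1/2} e^{-x^2/4t^2}$, which is our reading of the $d = 1$ case of the $t \mapsto t^2$ normalisation; treat it as an assumption. The function names, the truncation window of $12t$ and the number of quadrature points are also choices made for this sketch only.

```python
import math
from typing import Callable


def heat_kernel(x: float, t: float) -> float:
    """Assumed d = 1 heat kernel with t replaced by t^2:
    P_t(x) = (4 pi t^2)^(-1/2) exp(-x^2 / (4 t^2))."""
    return math.exp(-x * x / (4 * t * t)) / math.sqrt(4 * math.pi * t * t)


def convolve_at(f: Callable[[float], float], x: float, t: float,
                width: float = 12.0, steps: int = 4000) -> float:
    """Midpoint-rule approximation to (f * P_t)(x) = ∫ f(y) P_t(x - y) dy,
    truncated to |x - y| <= width * t (the Gaussian tail beyond is negligible)."""
    h = 2 * width * t / steps
    total = 0.0
    for k in range(steps):
        y = x - width * t + (k + 0.5) * h
        total += f(y) * heat_kernel(x - y, t)
    return total * h


def indicator_01(y: float) -> float:
    """f = 1_{[0,1]}."""
    return 1.0 if 0.0 <= y <= 1.0 else 0.0


if __name__ == "__main__":
    for t in (0.1, 0.01, 0.001):
        print(t, convolve_at(indicator_01, 0.5, t), convolve_at(indicator_01, 0.0, t))
```

At the Lebesgue point $x = 1/2$ the values approach $f(1/2) = 1$ as $t \to 0$. The point $x = 0$ is not a Lebesgue point of this $f$, and there the values approach $1/2$ rather than $f(0) = 1$.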

— 3. Almost everywhere differentiability —

As we see in undergraduate real analysis, not every continuous function is differentiable. The standard example is the absolute value function $f(x) := |x|$, which is continuous but not differentiable at the origin $x = 0$. Of course, this function is still almost everywhere differentiable. With a bit more effort, one can construct continuous functions that are in fact nowhere differentiable:

Exercise 52 (Weierstrass function) Let

be the function

- Show that

is well-defined (in the sense that the series is absolutely convergent) and that

is a bounded continuous function.

- Show that for every interval

with

and

integer, one has

for some absolute constant

.

- Show that

is not differentiable at any point

. (Hint: argue by contradiction and use the previous part of this exercise.) Note that it is not enough to formally differentiate the series term by term and observe that the resulting series is divergent – why not?

The difficulty here is that a continuous function can still contain a large amount of oscillation, which can lead to breakdown of differentiability. However, if one can somehow limit the amount of oscillation present, then one can often recover a fair bit of differentiability. For instance, we have

Theorem 53 (Monotone differentiation theorem) Any function $F: \mathbb{R} \to \mathbb{R}$ which is monotone (either monotone non-decreasing or monotone non-increasing) is differentiable almost everywhere.

Exercise 54 Show that every monotone function is measurable.

To prove this theorem, we just treat the case when $F$ is monotone non-decreasing, as the non-increasing case is similar (and can be deduced from the non-decreasing case by replacing $F$ with $-F$).

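We also first focus on the case when $F$ is continuous, as this allows us to use the rising sun lemma. To understand the differentiability of $F$, we introduce the four Dini derivatives of $F$ at $x$:

- The upper right derivative $\overline{D^+}F(x) := \limsup_{h \to 0^+} \frac{F(x+h) - F(x)}{h}$;

- The lower right derivative $\underline{D^+}F(x) := \liminf_{h \to 0^+} \frac{F(x+h) - F(x)}{h}$;

- The upper left derivative $\overline{D^-}F(x) := \limsup_{h \to 0^-} \frac{F(x+h) - F(x)}{h}$;

- The lower left derivative $\underline{D^-}F(x) := \liminf_{h \to 0^-} \frac{F(x+h) - F(x)}{h}$.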

Regardless of whether $F$ is differentiable or not (or even whether $F$ is continuous or not), the four Dini derivatives always exist and take values in the extended real line $[-\infty,+\infty]$. (If $F$ is only defined on an interval $[a,b]$, rather than on all of $\mathbb{R}$, then some of the Dini derivatives may not exist at the endpoints. These endpoints form a measure zero set and will not impact our analysis.)

Exercise 55 If $F: \mathbb{R} \to \mathbb{R}$ is monotone, show that the four Dini derivatives of $F$ are measurable. (Hint: the main difficulty is to reformulate the derivatives so that $h$ ranges over a countable set rather than an uncountable one.)

A function is differentiable at

precisely when the four derivatives are equal and finite:

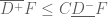

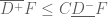

We also have the trivial inequalities

If is non-decreasing, all these quantities are non-negative, thus

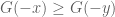

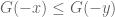

The one-sided Hardy-Littlewood maximal inequality has an analogue in this setting:

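Lemma 56 (One-sided Hardy-Littlewood inequality) Let $F: [a,b] \to \mathbb{R}$ be a continuous monotone non-decreasing function, and let $\lambda > 0$. Then we have

$$m(\{x \in [a,b]: \overline{D^+}F(x) \geq \lambda\}) \leq \frac{F(b) - F(a)}{\lambda}.$$

Similarly for the other three Dini derivatives of $F$.

If $F$ is not assumed to be continuous, then we have the weaker inequality

$$m(\{x \in [a,b]: \overline{D^+}F(x) \geq \lambda\}) \leq C \frac{F(b) - F(a)}{\lambda}$$

for some absolute constant $C > 0$.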

Remark 57 Note that if one naively applies the fundamental theorems of calculus, one can formally see that the first part of Lemma 56 is equivalent to Lemma 25. We cannot however use this argument rigorously because we have not established the necessary fundamental theorems of calculus to do this. Nevertheless, we can borrow the proof of Lemma 25 without difficulty to use here, and this is exactly what we will do.

Proof: We just prove the continuous case and leave the discontinuous case as an exercise.

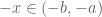

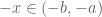

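It suffices to prove the claim for $\overline{D^+}F$. By reflection (replacing $F$ with $x \mapsto -F(-x)$, and $[a,b]$ with $[-b,-a]$), the same argument works for $\overline{D^-}F$, and then this trivially implies the same inequalities for $\underline{D^+}F$ and $\underline{D^-}F$. By modifying $\lambda$ by an epsilon, and dropping the endpoints from $[a,b]$ as they have measure zero, it suffices to show that

$$m(\{x \in (a,b): \overline{D^+}F(x) > \lambda\}) \leq \frac{F(b) - F(a)}{\lambda}$$

for every $\lambda > 0$.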

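We may apply the rising sun lemma (Lemma 26) to the continuous function $G(x) := F(x) - \lambda x$. This gives us an at most countable family of disjoint intervals $I_n = (a_n,b_n)$ in $[a,b]$ with the following two properties:

- $G(a_n) \leq G(b_n)$ for each $n$;
- $G(y) \leq G(x)$ whenever $x \leq y \leq b$ and $x$ lies outside of all of the $I_n$.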

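Observe that if $x \in (a,b)$, and $G(y) \leq G(x)$ for all $x \leq y \leq b$, then $\overline{D^+}F(x) \leq \lambda$. Thus we see that the set $\{x \in (a,b): \overline{D^+}F(x) > \lambda\}$ is contained in the union of the $I_n$, and so by countable additivity

$$m(\{x \in (a,b): \overline{D^+}F(x) > \lambda\}) \leq \sum_n (b_n - a_n).$$

But we can rearrange the inequality $G(a_n) \leq G(b_n)$ as $b_n - a_n \leq \frac{F(b_n) - F(a_n)}{\lambda}$. From telescoping series and the monotone nature of $F$ we have $\sum_n F(b_n) - F(a_n) \leq F(b) - F(a)$. (This is easiest to prove by first working with a finite subcollection of the intervals $(a_n,b_n)$, and then taking suprema.) The claim follows.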

The discontinuous case is left as an exercise.
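The rising sun lemma has a simple discrete counterpart. The following Python sketch uses it to check an analogue of the first part of Lemma 56 on a grid.

- On a grid $x_i$, some forward difference quotient $(F(x_j) - F(x_i))/(x_j - x_i)$ with $j > i$ exceeds $\lambda$ exactly when $G(x_j) > G(x_i)$ for $G(x) := F(x) - \lambda x$. So the relevant set of grid points is the "shadow" of $G$.
- The telescoping argument of the proof above then shows that the total length of the shadow is at most $(F(b) - F(a))/\lambda$.
- The grid set uses all forward quotients rather than only those with small $h$. It is therefore an analogue of the set in Lemma 56, not a quantitative approximation of it.
- The function names are hypothetical.

```python
import math
from typing import Callable, List


def rising_sun_shadow(values: List[float]) -> List[bool]:
    """Discrete rising sun lemma: index i is in the shadow exactly when some
    later sample is strictly larger, i.e. values[j] > values[i] for some j > i."""
    shadow = [False] * len(values)
    best_later = -math.inf  # max of values[j] over j > i, built from the right
    for i in range(len(values) - 1, -1, -1):
        shadow[i] = best_later > values[i]
        best_later = max(best_later, values[i])
    return shadow


def shadow_length(F: Callable[[float], float], a: float, b: float,
                  lam: float, n: int = 100_000) -> float:
    """Grid spacing times the number of grid points x_i in [a, b] having some
    forward quotient (F(x_j) - F(x_i)) / (x_j - x_i) > lam with j > i; these
    are exactly the shadowed points of G(x) = F(x) - lam * x."""
    step = (b - a) / n
    xs = [a + i * step for i in range(n + 1)]
    shadow = rising_sun_shadow([F(x) - lam * x for x in xs])
    return step * sum(shadow)


if __name__ == "__main__":
    lam = 2.0
    length = shadow_length(math.sqrt, 0.0, 1.0, lam)
    bound = (math.sqrt(1.0) - math.sqrt(0.0)) / lam  # (F(b) - F(a)) / lambda
    print(length, bound)
```

Take $F(x) = \sqrt{x}$ on $[0,1]$ and $\lambda = 2$. Then $G(x) = \sqrt{x} - 2x$ increases up to $x = 1/16$ and decreases afterwards. So, up to the grid spacing, the shadow is $[0, 1/16)$, well within the bound $(F(1) - F(0))/\lambda = 1/2$.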

Exercise 58 Prove Lemma 56 in the discontinuous case. (Hint: the rising sun lemma is no longer available, but one can modify the proof of Theorem 36 using either of two covering lemmas. The Vitali-type covering lemma will give $C = 3$, and the Besicovitch lemma will give $C = 2$.)

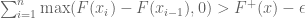

Sending in the above lemma (cf. Exercise 18 from Notes 2), and then sending

to

, we conclude as a corollary that all the four Dini derivatives of a continuous monotone non-decreasing function are finite almost everywhere. So to prove Theorem 53 for continuous monotone non-decreasing functions, it suffices to show that (11) holds for almost every

. In view of the trivial inequalities, it suffices to show that

and

for almost every

. We will just show the first inequality, as the second follows by replacing

with its reflection

. It will suffice to show that for every pair

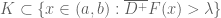

of real numbers, the set

is a null set, since by letting range over rationals with

and taking countable unions, we would conclude that the set

is a null set (recall that the Dini derivatives are all non-negative when

is non-decreasing), and the claim follows.

Clearly $E$ is a measurable set. To prove that it is null, we will establish the following estimate:

Lemma 59 ($E$ has density less than one) For any interval $[a,b]$ and any $0 < r < R$, one has $m(E_{r,R} \cap [a,b]) \leq \frac{r}{R}|b-a|$.

Indeed, this lemma implies that $E$ has no points of density, which by Exercise 48 forces $E$ to be a null set.

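Proof: We begin by applying the rising sun lemma to the function $G(y) := ry + F(-y)$ on $[-b,-a]$. The large number of negative signs present here is needed in order to properly deal with the lower left Dini derivative $\underline{D^-}F$. This gives an at most countable family of disjoint intervals $-I_n = (-b_n,-a_n)$ in $[-b,-a]$ with the following two properties:

- $G(-b_n) \leq G(-a_n)$ for all $n$;
- $G(-y) \leq G(-x)$ whenever $a \leq y \leq x \leq b$ and $-x$ lies outside of all of the $-I_n$.

Observe that if $x \in (a,b)$, and $G(-y) \leq G(-x)$ for all $a \leq y \leq x$, then $\underline{D^-}F(x) \geq r$. Thus we see that $E \cap (a,b)$ is contained inside the union of the intervals $I_n = (a_n,b_n)$. On the other hand, from the first part of Lemma 56 (applied on each $[a_n,b_n]$) we have

$$m(E \cap I_n) \leq m(\{x \in [a_n,b_n]: \overline{D^+}F(x) \geq R\}) \leq \frac{F(b_n) - F(a_n)}{R}.$$

But we can rearrange the inequality $G(-b_n) \leq G(-a_n)$ as $F(b_n) - F(a_n) \leq r(b_n - a_n)$. From countable additivity, one thus has

$$m(E \cap [a,b]) \leq \sum_n \frac{r}{R}(b_n - a_n).$$

But the $I_n$ are disjoint inside $[a,b]$, so from countable additivity again, we have $\sum_n (b_n - a_n) \leq b - a$, and the claim follows.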

Remark 60 Note that if $F$ were not assumed to be continuous, then one would lose a factor of $C$ here from the second part of Lemma 56. One would then be unable to prevent $m(E \cap [a,b])$ from being up to $C$ times as large as $\frac{r}{R}|b-a|$, which gives no information at all once $Cr/R \geq 1$. So sometimes, even when all one is seeking is a qualitative result such as differentiability, it is still important to keep track of constants. (But this is the exception rather than the rule: for a large portion of arguments in analysis, the constants are not terribly important.)

This concludes the proof of Theorem 53 in the continuous monotone non-decreasing case. Now we work on removing the continuity hypothesis, which was needed in order to make the rising sun lemma work properly.

If we naively try to run the density argument as we did in previous sections, then (for once) the argument does not work very well. The space of continuous monotone functions is not sufficiently dense in the space of all monotone functions in the relevant sense. Here the relevant sense is the total variation sense, which is what is needed to invoke such tools as Lemma 56.

To bridge this gap, we have to supplement the continuous monotone functions with another class of monotone functions, known as the jump functions.

Definition 61 (Jump function) A basic jump function $J$ is a function of the form

$$J(x) := \begin{cases} 0 & \hbox{when } x < x_0 \\ \theta & \hbox{when } x = x_0 \\ 1 & \hbox{when } x > x_0 \end{cases}$$

for some real numbers $x_0 \in \mathbb{R}$ and $0 \leq \theta \leq 1$. We call $x_0$ the point of discontinuity for $J$ and $\theta$ the fraction. Observe that such functions are monotone non-decreasing, but have a discontinuity at one point.

A jump function is any absolutely convergent combination of basic jump functions, i.e. a function of the form $F = \sum_n c_n J_n$, where $n$ ranges over an at most countable set, each $J_n$ is a basic jump function, and the $c_n$ are positive reals with $\sum_n c_n < \infty$. If there are only finitely many $n$ involved, we say that $F$ is a piecewise constant jump function.

Thus, for instance, if $q_1, q_2, q_3, \ldots$ is any enumeration of the rationals, then $\sum_{n=1}^\infty 2^{-n} 1_{[q_n,+\infty)}$ is a jump function.

Clearly, all jump functions are monotone non-decreasing. From the absolute convergence of the $c_n$ we see that every jump function is the uniform limit of piecewise constant jump functions. For instance, $\sum_{n=1}^\infty 2^{-n} 1_{[q_n,+\infty)}$ is the uniform limit of $\sum_{n=1}^N 2^{-n} 1_{[q_n,+\infty)}$. One consequence of this is that the points of discontinuity of a jump function $\sum_n c_n J_n$ are precisely those of the individual summands $c_n J_n$, i.e. the points $x_n$ where each $J_n$ jumps.
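The example $\sum_n 2^{-n} 1_{[q_n,+\infty)}$ and its partial sums can be explored with exact rational arithmetic. The Python sketch below makes the following choices:

- It restricts to the rationals in $[0,1]$, an assumption made to keep the example finite.
- It enumerates those rationals by increasing denominator, which is one enumeration among many; the text allows any.
- It forms the partial sums and checks on a grid that they are non-decreasing, and that truncating after $N$ terms changes the function by at most $2^{-N}$.

The function names are hypothetical.

```python
from fractions import Fraction
from itertools import islice
from typing import Iterator, List


def rationals_in_unit_interval() -> Iterator[Fraction]:
    """Enumerate the rationals in [0, 1] without repetition, by increasing denominator."""
    seen = set()
    q = 1
    while True:
        for p in range(q + 1):
            r = Fraction(p, q)
            if r not in seen:
                seen.add(r)
                yield r
        q += 1


def partial_jump_function(points: List[Fraction], x: Fraction) -> Fraction:
    """The piecewise constant jump function sum_{n=1}^N 2^{-n} 1_{[q_n, +inf)}(x),
    where points = [q_1, ..., q_N]."""
    return sum((Fraction(1, 2 ** n) for n, q in enumerate(points, start=1) if x >= q),
               Fraction(0))


if __name__ == "__main__":
    qs = list(islice(rationals_in_unit_interval(), 20))
    grid = [Fraction(k, 50) for k in range(51)]
    f10 = [partial_jump_function(qs[:10], x) for x in grid]
    f20 = [partial_jump_function(qs, x) for x in grid]
    print(max(b - a for a, b in zip(f10, f20)))  # at most 2^{-10}
```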

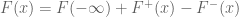

The key fact is that these functions, together with the continuous monotone functions, essentially generate all monotone functions, at least in the bounded case:

Lemma 62 (Continuous-singular decomposition for monotone functions) Let $F: \mathbb{R} \to \mathbb{R}$ be a monotone non-decreasing function.

1. The only discontinuities of $F$ are jump discontinuities. More precisely, if $x$ is a point where $F$ is discontinuous, then the limits $\lim_{y \to x^-} F(y)$ and $\lim_{y \to x^+} F(y)$ both exist, but are unequal, with $\lim_{y \to x^-} F(y) < \lim_{y \to x^+} F(y)$.

2. There are at most countably many discontinuities of $F$.

3. If $F$ is bounded, then $F$ can be expressed as the sum of a continuous monotone non-decreasing function $F_c$ and a jump function $F_{pp}$.

Remark 63 This decomposition is part of the more general Lebesgue decomposition, which we will discuss later in this course.

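Proof: By monotonicity, the limits $F_-(x) := \lim_{y \to x^-} F(y)$ and $F_+(x) := \lim_{y \to x^+} F(y)$ always exist, with $F_-(x) \leq F(x) \leq F_+(x)$ for all $x$. This gives 1.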

By 1., whenever there is a discontinuity $x$ of $F$, there is at least one rational number $q_x$ strictly between $F_-(x)$ and $F_+(x)$. From monotonicity, each rational number can be assigned to at most one discontinuity. This gives 2.

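Now we prove 3. Let $A$ be the set of discontinuities of $F$, thus $A$ is at most countable. For each $x \in A$, we define the jump $c_x := F_+(x) - F_-(x) > 0$, and the fraction $\theta_x := \frac{F(x) - F_-(x)}{F_+(x) - F_-(x)} \in [0,1]$. Thus

$$F_+(x) = F_-(x) + c_x \quad \hbox{and} \quad F(x) = F_-(x) + \theta_x c_x.$$

Note that $c_x$ is the measure of the interval $(F_-(x), F_+(x))$. By monotonicity, these intervals are disjoint; by the boundedness of $F$, their union is bounded. By countable additivity, we thus have $\sum_{x \in A} c_x < \infty$. So if we let $J_x$ be the basic jump function with point of discontinuity $x$ and fraction $\theta_x$, then the function

$$F_{pp} := \sum_{x \in A} c_x J_x$$

is a jump function.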

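As discussed previously, $F_{pp}$ is discontinuous only at $A$, and for each $x \in A$ one easily checks that

$$(F_{pp})_+(x) = (F_{pp})_-(x) + c_x \quad \hbox{and} \quad F_{pp}(x) = (F_{pp})_-(x) + \theta_x c_x$$

where $(F_{pp})_-(x) := \lim_{y \to x^-} F_{pp}(y)$, and $(F_{pp})_+(x) := \lim_{y \to x^+} F_{pp}(y)$. We thus see that the difference $F_c := F - F_{pp}$ is continuous.

The only remaining task is to verify that $F_c$ is monotone non-decreasing. By continuity it suffices to verify this away from the (countably many) jump discontinuities, thus we need

$$F_{pp}(b) - F_{pp}(a) \leq F(b) - F(a)$$

for all $a < b$ that are not jump discontinuities. But the left-hand side can be rewritten as $\sum_{x \in A \cap (a,b)} c_x$, while the right-hand side is $m([F(a),F(b)])$. Each $c_x$ is the measure of the interval $(F_-(x), F_+(x))$, and these intervals for $x \in A \cap (a,b)$ are disjoint and lie in $[F(a),F(b)]$. The claim therefore follows from countable additivity.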
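The construction in the proof of Lemma 62 can be mimicked numerically when the discontinuities are finite in number and known in advance; this is an assumption that the lemma itself does not need. The Python sketch below proceeds in three steps:

- It estimates the one-sided limits $F_-(x)$ and $F_+(x)$ by evaluating $F$ at $x \mp \epsilon$, a heuristic that is exact only in the limit $\epsilon \to 0$.
- It builds the jump part $F_{pp} := \sum_x c_x J_x$ from the jumps $c_x$ and fractions $\theta_x$.
- It returns $F_c := F - F_{pp}$.

All names here are hypothetical.

```python
import math
from typing import Callable, List, Tuple


def basic_jump(x: float, x0: float, theta: float) -> float:
    """Basic jump function: 0 for x < x0, theta at x = x0, 1 for x > x0."""
    if x < x0:
        return 0.0
    return theta if x == x0 else 1.0


def continuous_jump_split(F: Callable[[float], float], jump_points: List[float],
                          eps: float = 1e-9
                          ) -> Tuple[Callable[[float], float], Callable[[float], float]]:
    """Split a non-decreasing F into (F_c, F_pp) as in Lemma 62, assuming its
    discontinuities lie in the finite list jump_points. The one-sided limits
    F(x0-) and F(x0+) are approximated by F(x0 - eps) and F(x0 + eps)."""
    jumps = []  # triples (x0, c_x, theta_x)
    for x0 in jump_points:
        left, right = F(x0 - eps), F(x0 + eps)
        c = right - left
        if c > 0:
            jumps.append((x0, c, (F(x0) - left) / c))

    def F_pp(x: float) -> float:
        return sum(c * basic_jump(x, x0, theta) for x0, c, theta in jumps)

    def F_c(x: float) -> float:
        return F(x) - F_pp(x)

    return F_c, F_pp


def example_F(x: float) -> float:
    """arctan(x) plus a jump of 2 at 0 (F(0) is the left limit) and a jump of
    1/2 at 1 (F(1) is the right limit)."""
    return math.atan(x) + (2.0 if x > 0 else 0.0) + (0.5 if x >= 1 else 0.0)


if __name__ == "__main__":
    F_c, F_pp = continuous_jump_split(example_F, [0.0, 1.0])
    for x in (-0.5, 0.0, 0.5, 1.0, 1.5):
        print(x, F_c(x), F_pp(x))
```

For this example the jump data are $c_0 = 2$, $\theta_0 = 0$ and $c_1 = 1/2$, $\theta_1 = 1$. The continuous part $F_c$ agrees with $\arctan x$ up to errors of order $\epsilon$.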

Exercise 64 Show that the decomposition of a bounded monotone non-decreasing function $F$ into continuous $F_c$ and jump components $F_{pp}$ given by the above lemma is unique.

Exercise 65 Find a suitable generalisation of the notion of a jump function that allows one to extend the above decomposition to unbounded monotone functions, and then prove this extension. (Hint: the notion to shoot for here is that of a “locally jump function”.)

Now we can finish the proof of Theorem 53. As noted previously, it suffices to prove the claim for monotone non-decreasing functions.

As differentiability is a local condition, we can easily reduce to the case of bounded monotone non-decreasing functions. To test differentiability of a monotone non-decreasing function $F$ in any compact interval $[a,b]$, we may replace $F$ by the bounded monotone non-decreasing function $\max(\min(F, F(b)), F(a))$. This causes no change in the differentiability in $[a,b]$, except perhaps at the endpoints $a, b$, which form a set of measure zero.

As we have already proven the claim for continuous functions, it suffices by Lemma 62 (and linearity of the derivative) to verify the claim for jump functions.

Now, finally, we are able to use the density argument, using the piecewise constant jump functions as the dense subclass, and using the second part of Lemma 56 for the quantitative estimate; fortunately for us, the density argument does not particularly care that there is a loss of a constant factor in this estimate.

For piecewise constant jump functions, the claim is clear (indeed, the derivative exists and is zero outside of finitely many discontinuities). Now we run the density argument.

Let $F$ be a bounded jump function, and let $\varepsilon > 0$ and $\lambda > 0$ be arbitrary. As every jump function is the uniform limit of piecewise constant jump functions, we can find a piecewise constant jump function $F_\varepsilon$ such that $|F(x) - F_\varepsilon(x)| \leq \varepsilon$ for all $x$. Indeed, by taking $F_\varepsilon$ to be a partial sum of the basic jump functions that make up $F$, we can ensure that $F - F_\varepsilon$ is also a monotone non-decreasing function. Applying the second part of Lemma 56, we have

$$m(\{x \in \mathbb{R}: \overline{D^+}(F - F_\varepsilon)(x) \geq \lambda\}) \leq \frac{C\varepsilon}{\lambda}$$

for some absolute constant $C$, and similarly for the other three Dini derivatives. Thus, outside of a set of measure at most $4C\varepsilon/\lambda$, all of the Dini derivatives of $F - F_\varepsilon$ are less than $\lambda$.

Since $F_\varepsilon$ is almost everywhere differentiable, we conclude that outside of a set of measure at most $4C\varepsilon/\lambda$, all the Dini derivatives of $F$ lie within $\lambda$ of $F_\varepsilon'(x)$. In particular they are finite and lie within $2\lambda$ of each other. We now take two limits:

- Sending $\varepsilon$ to zero (holding $\lambda$ fixed), we conclude that for almost every $x$, the Dini derivatives of $F$ are finite and lie within $2\lambda$ of each other.
- If we then send $\lambda$ to zero, we see that for almost every $x$, the Dini derivatives of $F$ agree with each other and are finite.

The claim follows. This concludes the proof of Theorem 53.

Just as the integration theory of unsigned functions can be used to develop the integration theory of the absolutely integrable functions (see Notes 2), the differentiation theory of monotone functions can be used to develop a parallel differentiation theory for the class of functions of bounded variation:

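Definition 66 (Bounded variation) Let $F: \mathbb{R} \to \mathbb{R}$ be a function. The total variation $\|F\|_{TV(\mathbb{R})}$ (or $\|F\|_{TV}$ for short) of $F$ is defined to be the supremum

$$\|F\|_{TV(\mathbb{R})} := \sup_{x_0 < x_1 < \dots < x_n} \sum_{i=1}^n |F(x_i) - F(x_{i-1})|$$

where the supremum ranges over all finite increasing sequences $x_0 < x_1 < \dots < x_n$ of real numbers with $n \geq 0$; this is a quantity in $[0,+\infty]$. We say that $F$ has bounded variation (on $\mathbb{R}$) if $\|F\|_{TV(\mathbb{R})}$ is finite. (In this case, $\|F\|_{TV(\mathbb{R})}$ is often written as $\|F\|_{BV(\mathbb{R})}$ or just $\|F\|_{BV}$.)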

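Given any interval $[a,b]$, we define the total variation $\|F\|_{TV([a,b])}$ of $F$ on $[a,b]$ as

$$\|F\|_{TV([a,b])} := \sup_{a \leq x_0 < x_1 < \dots < x_n \leq b} \sum_{i=1}^n |F(x_i) - F(x_{i-1})|;$$

thus the definition is the same, but the points $x_0, \ldots, x_n$ are restricted to lie in $[a,b]$. Thus for instance $\|F\|_{TV([a,b])} \leq \|F\|_{TV(\mathbb{R})}$. We say that a function $F$ has bounded variation on $[a,b]$ if $\|F\|_{TV([a,b])}$ is finite.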
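Every partition gives a lower bound for the supremum in Definition 66, and refining a partition can only increase the sum, by the triangle inequality. Consequently, for a continuous function the total variation on $[a,b]$ can be approximated from below by sums over finer and finer uniform partitions. A minimal Python sketch follows; the name `grid_variation` is hypothetical.

```python
import math
from typing import Callable


def grid_variation(F: Callable[[float], float], a: float, b: float, n: int) -> float:
    """sum_i |F(x_i) - F(x_{i-1})| over the uniform partition of [a, b] into n pieces.
    Every such sum is a lower bound for ||F||_{TV([a,b])}."""
    xs = [a + (b - a) * i / n for i in range(n + 1)]
    return sum(abs(F(x1) - F(x0)) for x0, x1 in zip(xs, xs[1:]))


if __name__ == "__main__":
    for n in (3, 4, 100, 4000):
        print(n, grid_variation(math.sin, 0.0, 2 * math.pi, n))
```

Two examples:

- For $\sin$ on $[0,2\pi]$, the sum with $n = 4000$ is $4$ up to rounding, since the grid contains the turning points $\pi/2$ and $3\pi/2$.
- For the monotone function $x^3$ on $[-1,2]$, every such sum equals $|F(2) - F(-1)| = 9$, in line with Exercise 67.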

Exercise 67 If $F: \mathbb{R} \to \mathbb{R}$ is a monotone function, show that $\|F\|_{TV([a,b])} = |F(b) - F(a)|$ for any interval $[a,b]$, and that $F$ has bounded variation on $\mathbb{R}$ if and only if it is bounded.

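Exercise 68 For any functions $F, G: \mathbb{R} \to \mathbb{R}$, establish the triangle property $\|F + G\|_{TV(\mathbb{R})} \leq \|F\|_{TV(\mathbb{R})} + \|G\|_{TV(\mathbb{R})}$ and the homogeneity property $\|cF\|_{TV(\mathbb{R})} = |c| \|F\|_{TV(\mathbb{R})}$ for any $c \in \mathbb{R}$. Also show that $\|F\|_{TV(\mathbb{R})} = 0$ if and only if $F$ is constant.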

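Exercise 69 If $F: \mathbb{R} \to \mathbb{R}$ is a function, show that $\|F\|_{TV([a,b])} + \|F\|_{TV([b,c])} = \|F\|_{TV([a,c])}$ whenever $a \leq b \leq c$.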

Exercise 70

- Show that every function $F: \mathbb{R} \to \mathbb{R}$ of bounded variation is bounded, and that the limits $\lim_{x \to +\infty} F(x)$ and $\lim_{x \to -\infty} F(x)$ are well-defined.

- Give a counterexample of a bounded, continuous, compactly supported function $F$ that is not of bounded variation.

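Exercise 71 Let $f: \mathbb{R} \to \mathbb{R}$ be an absolutely integrable function, and let $F: \mathbb{R} \to \mathbb{R}$ be the indefinite integral $F(x) := \int_{(-\infty,x]} f(y)\,dy$. Show that $F$ is of bounded variation, and that $\|F\|_{TV(\mathbb{R})} = \int_{\mathbb{R}} |f(y)|\,dy$. (Hint: the upper bound $\|F\|_{TV(\mathbb{R})} \leq \int_{\mathbb{R}} |f(y)|\,dy$ is relatively easy to establish. To obtain the lower bound, use the density argument.)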

Much as an absolutely integrable function can be expressed as the difference of its positive and negative parts, a bounded variation function can be expressed as the difference of two bounded monotone functions:

Proposition 72 A function $F: \mathbb{R} \to \mathbb{R}$ is of bounded variation if and only if it is the difference of two bounded monotone functions.

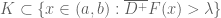

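Proof: It is clear from Exercises 67, 68 that the difference of two bounded monotone functions is of bounded variation. Now define the positive variation $F^+: \mathbb{R} \to \mathbb{R}$ of $F$ by the formula

$$F^+(x) := \sup_{x_0 < x_1 < \dots < x_n \leq x} \sum_{i=1}^n \max(F(x_i) - F(x_{i-1}), 0). \quad (12)$$

It is clear from construction that this is a monotone increasing function, taking values between $0$ and $\|F\|_{TV(\mathbb{R})}$, and is thus bounded. By writing $F = F^+ - (F^+ - F)$, and noting that $F^+ - F$ is bounded (as $F$ is, by Exercise 70), it suffices to show that $F^+ - F$ is non-decreasing. In other words, it suffices to show that

$$F^+(b) \geq F^+(a) + F(b) - F(a) \quad \hbox{for all } a < b.$$

If $F(b) - F(a)$ is negative then this is clear from the monotone non-decreasing nature of $F^+$, so assume that $F(b) - F(a) \geq 0$. But then the claim follows because any sequence of real numbers $x_0 < \dots < x_n \leq a$ can be extended by one or two elements by adding $a$ and $b$, thus increasing the sum $\sum_{i=1}^n \max(F(x_i) - F(x_{i-1}), 0)$ by at least $F(b) - F(a)$.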
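Consider partitions drawn from a fixed grid $x_0 < x_1 < \dots < x_N$ and starting at $x_0$. For these, the supremum in (12) is attained by the full grid, because $\max(u+v,0) \leq \max(u,0) + \max(v,0)$ means that refining a partition never decreases the sum. The Python sketch below uses this to compute discrete positive and negative variations as running sums of the positive and negative parts of the increments. It then checks the two facts used in the proof above:

- $F(x_k) - F(x_0) = F^+(x_k) - F^-(x_k)$;
- $F^+ - F$ is non-decreasing along the grid.

The helper name is hypothetical.

```python
import math
from itertools import accumulate
from typing import List, Tuple


def grid_positive_negative_variation(values: List[float]) -> Tuple[List[float], List[float]]:
    """Discrete positive/negative variations along a fixed grid x_0 < ... < x_N:
    running sums of max(F(x_i) - F(x_{i-1}), 0) and max(F(x_{i-1}) - F(x_i), 0)."""
    increments = [v1 - v0 for v0, v1 in zip(values, values[1:])]
    pos = [0.0] + list(accumulate(max(d, 0.0) for d in increments))
    neg = [0.0] + list(accumulate(max(-d, 0.0) for d in increments))
    return pos, neg


if __name__ == "__main__":
    xs = [2 * math.pi * i / 1000 for i in range(1001)]
    vals = [math.sin(x) for x in xs]
    pos, neg = grid_positive_negative_variation(vals)
    print(pos[-1], neg[-1], pos[-1] + neg[-1])  # positive, negative, total variation
```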

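Exercise 73 Let $F: \mathbb{R} \to \mathbb{R}$ be of bounded variation. Define the positive variation $F^+$ by (12), and the negative variation $F^-$ by

$$F^-(x) := \sup_{x_0 < x_1 < \dots < x_n \leq x} \sum_{i=1}^n \max(F(x_{i-1}) - F(x_i), 0).$$

Establish the identities

$$F_{[a,b]} = F^+_{[a,b]} - F^-_{[a,b]}$$

and

$$\|F\|_{TV([a,b])} = F^+_{[a,b]} + F^-_{[a,b]}$$

for every interval $[a,b]$, where $F_{[a,b]} := F(b) - F(a)$, $F^+_{[a,b]} := F^+(b) - F^+(a)$, and $F^-_{[a,b]} := F^-(b) - F^-(a)$. (Hint: The main difficulty comes from the fact that a partition $x_0 < \dots < x_n$ that is good for $F^+$ need not be good for $F^-$, and vice versa. However, this can be fixed by taking a good partition for $F^+$ and a good partition for $F^-$ and combining them together into a common refinement.)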

From Proposition 72 and Theorem 53 we immediately obtain

Corollary 74 (BV differentiation theorem) Every bounded variation function is differentiable almost everywhere.

Exercise 75 Call a function locally of bounded variation if it is of bounded variation on every compact interval $[a,b]$. Show that every function that is locally of bounded variation is differentiable almost everywhere.

Exercise 76 (Lipschitz differentiation theorem, one-dimensional case) A function $F: \mathbb{R} \to \mathbb{R}$ is said to be Lipschitz continuous if there exists a constant $C > 0$ such that $|F(x) - F(y)| \leq C|x - y|$ for all $x, y \in \mathbb{R}$; the smallest $C$ with this property is known as the Lipschitz constant of $F$. Show that every Lipschitz continuous function $F$ is locally of bounded variation, and hence differentiable almost everywhere. Furthermore, show that the derivative $F'$, when it exists, is bounded in magnitude by the Lipschitz constant of $F$.

Remark 77 The same result is true in higher dimensions, and is known as the Rademacher differentiation theorem. We will defer the proof of this theorem to subsequent notes, when we have the Fubini-Tonelli theorem available; this powerful tool is particularly useful for deducing higher-dimensional results in analysis from lower-dimensional ones.

Exercise 78 A function $F: \mathbb{R} \to \mathbb{R}$ is said to be convex if one has $F((1-t)x + ty) \leq (1-t)F(x) + tF(y)$ for all $x < y$ and $0 < t < 1$. Show that if $F$ is convex, then it is continuous and almost everywhere differentiable, and its derivative $F'$ is equal almost everywhere to a monotone non-decreasing function, and so is itself almost everywhere differentiable. (Hint: Drawing the graph of $F$, together with a number of chords and tangent lines, is likely to be very helpful in providing visual intuition.) Thus we see that in some sense, convex functions are “almost everywhere twice differentiable”. Similar claims also hold for concave functions, of course.

— 4. The second fundamental theorem of calculus —

We are now finally ready to attack the second fundamental theorem of calculus in the cases where $F$ is not assumed to be continuously differentiable. We begin with the case when $F: [a,b] \to \mathbb{R}$ is monotone non-decreasing. From Theorem 53 (extending $F$ to the rest of the real line if needed), this implies that $F$ is differentiable almost everywhere in $[a,b]$, so $F'$ is defined a.e. From monotonicity we see that $F'$ is non-negative whenever it is defined. Also, an easy modification of Exercise 2 shows that $F'$ is measurable.

One half of the second fundamental theorem is easy:

Proposition 79 (Upper bound for second fundamental theorem) Let $F: [a,b] \to \mathbb{R}$ be monotone non-decreasing (so that, as discussed above, $F'$ is defined almost everywhere, is unsigned, and is measurable). Then

$$\int_{[a,b]} F'(x)\,dx \leq F(b) - F(a).$$

In particular, $F'$ is absolutely integrable.

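Proof: It is convenient to extend $F$ to all of $\mathbb{R}$ by declaring $F(x) := F(b)$ for $x > b$ and $F(x) := F(a)$ for $x < a$. Then $F$ is now a bounded monotone function on $\mathbb{R}$, and $F'$ vanishes outside of $[a,b]$. As $F$ is almost everywhere differentiable, the Newton quotients

$$\frac{F(x + 1/n) - F(x)}{1/n}$$

converge pointwise almost everywhere to $F'$ as $n \to \infty$. Applying Fatou’s lemma (Corollary 16 of Notes 3), we conclude that

$$\int_{[a,b]} F'(x)\,dx \leq \liminf_{n \to \infty} \int_{[a,b]} \frac{F(x + 1/n) - F(x)}{1/n}\,dx.$$

The right-hand side can be rearranged as

$$\liminf_{n \to \infty} n \Big( \int_{[a + 1/n, b + 1/n]} F(y)\,dy - \int_{[a,b]} F(x)\,dx \Big)$$

which can be rearranged further as

$$\liminf_{n \to \infty} n \Big( \int_{[b, b + 1/n]} F(x)\,dx - \int_{[a, a + 1/n]} F(x)\,dx \Big).$$

Since $F$ is equal to $F(b)$ for the first integral and is at least $F(a)$ for the second integral, this expression is at most

$$\liminf_{n \to \infty} n \Big( \frac{F(b)}{n} - \frac{F(a)}{n} \Big) = F(b) - F(a)$$

and the claim follows.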

Exercise 80 Show that any function of bounded variation has an (almost everywhere defined) derivative that is absolutely integrable.

In the Lipschitz case, one can do better:

Exercise 81 (Second fundamental theorem for Lipschitz functions) Let $F: [a,b] \to \mathbb{R}$ be Lipschitz continuous. Show that $\int_{[a,b]} F'(x)\,dx = F(b) - F(a)$. (Hint: Argue as in the proof of Proposition 79, but use the dominated convergence theorem in place of Fatou’s lemma.)

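Exercise 82 (Integration by parts formula) Let $F, G: [a,b] \to \mathbb{R}$ be Lipschitz continuous functions. Show that

$$\int_{[a,b]} F'(x) G(x)\,dx = F(b)G(b) - F(a)G(a) - \int_{[a,b]} F(x) G'(x)\,dx.$$

(Hint: first show that the product of two Lipschitz continuous functions on $[a,b]$ is again Lipschitz continuous.)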

Now we return to the monotone case. Inspired by the Lipschitz case, one may hope to recover equality in Proposition 79 for such functions $F$. However, there is an important obstruction to this: all the variation of $F$ may be concentrated in a set of measure zero, and thus be undetectable by the Lebesgue integral of $F'$. This is most obvious in the case of a discontinuous monotone function, such as the (appropriately named) Heaviside function $F := 1_{[0,+\infty)}$. It is clear that $F'$ vanishes almost everywhere, but $F(b) - F(a)$ is not equal to $\int_{[a,b]} F'(x)\,dx$ if $a$ and $b$ lie on opposite sides of the discontinuity at $0$. In fact, the same problem arises for all jump functions:

Exercise 83 Show that if $F$ is a jump function, then $F'$ vanishes almost everywhere. (Hint: use the density argument, starting from piecewise constant jump functions and using Proposition 79 as the quantitative estimate.)

One may hope that jump functions – in which all the fluctuation is concentrated in a countable set – are the only obstruction to the second fundamental theorem of calculus holding for monotone functions, and that as long as one restricts attention to continuous monotone functions, that one can recover the second fundamental theorem. However, this is still not true, because it is possible for all the fluctuation to now be concentrated, not in a countable collection of jump discontinuities, but instead in an uncountable set of zero measure, such as the middle thirds Cantor set (Exercise 10 from Notes 1). This can be illustrated by the key counterexample of the Cantor function, also known as the Devil’s staircase function. The construction of this function is detailed in the exercise below.

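Exercise 84 (Cantor function) Define the functions $F_0, F_1, F_2, \ldots: [0,1] \to \mathbb{R}$ recursively as follows:

- Set $F_0(x) := x$ for all $x \in [0,1]$.

- For each $n = 1, 2, \ldots$ in turn, define

$$F_n(x) := \begin{cases} \frac{1}{2} F_{n-1}(3x) & \hbox{if } x \in [0,1/3] \\ \frac{1}{2} & \hbox{if } x \in (1/3,2/3) \\ \frac{1}{2} + \frac{1}{2} F_{n-1}(3x - 2) & \hbox{if } x \in [2/3,1]. \end{cases}$$

- Graph $F_0$, $F_1$, $F_2$, and $F_3$ (preferably on a single graph).

- Show that for each $n = 0, 1, 2, \ldots$, $F_n$ is a continuous monotone non-decreasing function with $F_n(0) = 0$ and $F_n(1) = 1$. (Hint: induct on $n$.)

- Show that for each $n = 0, 1, 2, \ldots$, one has $|F_{n+1}(x) - F_n(x)| \leq 2^{-n}$ for each $x \in [0,1]$. Conclude that the $F_n$ converge uniformly to a limit $F: [0,1] \to \mathbb{R}$. This limit is known as the Cantor function.

- Show that the Cantor function $F$ is continuous and monotone non-decreasing, with $F(0) = 0$ and $F(1) = 1$.

- Show that if $x \in [0,1]$ lies outside the middle thirds Cantor set $C$ (Exercise 10 from Notes 1), then $F$ is constant in a neighbourhood of $x$, and in particular $F'(x) = 0$. Conclude that $\int_{[0,1]} F'(x)\,dx = 0 \neq 1 = F(1) - F(0)$, so that the second fundamental theorem of calculus fails for this function.

- Show that $F(\sum_{n=1}^\infty a_n 3^{-n}) = \sum_{n=1}^\infty \frac{a_n}{2} 2^{-n}$ for any digits $a_1, a_2, \ldots \in \{0,2\}$. Thus the Cantor function, in some sense, converts base three expansions to base two expansions.

- Let $J$ be one of the intervals used in the $n^{\mathrm{th}}$ cover $C_n$ of $C$ (see Exercise 10 from Notes 1), thus $n \geq 0$ and $J = [\sum_{m=1}^n a_m 3^{-m}, \sum_{m=1}^n a_m 3^{-m} + 3^{-n}]$ for some digits $a_1, \ldots, a_n \in \{0,2\}$. Show that $F(J)$ is an interval of length $2^{-n}$, but $J$ is an interval of length $3^{-n}$.

- Show that $F$ is not differentiable at any element of the Cantor set $C$.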
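The following Python sketch evaluates the Cantor function in two independent ways:

- `cantor_stage` computes the approximations $F_n$ by unwinding the recursion of Exercise 84. It assumes $F_0(x) = x$ and the three-piece rule on $[0,1/3]$, $(1/3,2/3)$, $[2/3,1]$ as reconstructed there; treat that exact form as an assumption.
- `cantor_from_ternary` reads the ternary digits of $x$ in $\{0,2\}$ as binary digits in $\{0,1\}$. It stops at the first ternary digit $1$, since $x$ then lies in the closure of a removed middle third, where the function is constant.

Both function names are hypothetical. Floating-point ternary digits are only trustworthy to a few dozen places, which is harmless here because later digits contribute less than $2^{-30}$.

```python
def cantor_stage(n: int, x: float) -> float:
    """F_n(x) from Exercise 84 (assumed form: F_0(x) = x, and for n >= 1,
    F_n = F_{n-1}(3x)/2 on [0,1/3], 1/2 on (1/3,2/3), 1/2 + F_{n-1}(3x-2)/2 on [2/3,1])."""
    if n == 0:
        return x
    if x <= 1 / 3:
        return cantor_stage(n - 1, 3 * x) / 2
    if x < 2 / 3:
        return 0.5
    return 0.5 + cantor_stage(n - 1, 3 * x - 2) / 2


def cantor_from_ternary(x: float, depth: int = 50) -> float:
    """Cantor function on [0, 1]: ternary digits 0/2 become binary digits 0/1.
    At the first ternary digit 1, x lies in the closure of a removed middle
    third, where the function is constant, so we stop there."""
    if x >= 1.0:
        return 1.0
    result, scale = 0.0, 0.5
    for _ in range(depth):
        x *= 3
        digit = int(x)  # next ternary digit: 0, 1 or 2
        x -= digit
        if digit == 1:
            return result + scale
        result += scale * (digit // 2)
        scale /= 2
    return result


if __name__ == "__main__":
    print(cantor_from_ternary(0.25))  # 1/4 = 0.0202..._3 maps to 0.0101..._2 = 1/3
    print(max(abs(cantor_stage(12, k / 100) - cantor_from_ternary(k / 100)) for k in range(101)))
```

Consistent with the uniform convergence of the $F_n$, the two computations agree to within $2^{-12}$ on the grid $k/100$ when $n = 12$.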

Remark 85 This example shows that the classical derivative $F'$ of a function has some defects; it cannot “see” some of the variation of a continuous monotone function such as the Cantor function. Much later in this series, we will rectify this by introducing the concept of the weak derivative of a function, which despite the name, is more able than the strong derivative to detect this type of singular variation behaviour. (We will also encounter the Riemann-Stieltjes integral in later notes, which is another (closely related) way to capture all of the variation of a monotone function, and which is related to the classical derivative via the Lebesgue-Radon-Nikodym theorem.)

In view of this counterexample, we see that we need to add an additional hypothesis to the continuous monotone non-decreasing function before we can recover the second fundamental theorem. One such hypothesis is absolute continuity. To motivate this definition, let us recall two existing definitions:

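- A function $F: \mathbb{R} \to \mathbb{R}$ is continuous if, for every $\varepsilon > 0$ and $x_0 \in \mathbb{R}$, there exists a $\delta > 0$ such that $|F(b) - F(a)| \leq \varepsilon$ whenever $(a,b)$ is an interval of length at most $\delta$ that contains $x_0$.

- A function $F: \mathbb{R} \to \mathbb{R}$ is uniformly continuous if, for every $\varepsilon > 0$, there exists a $\delta > 0$ such that $|F(b) - F(a)| \leq \varepsilon$ whenever $(a,b)$ is an interval of length at most $\delta$.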

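Definition 86 A function $F: \mathbb{R} \to \mathbb{R}$ is said to be absolutely continuous if, for every $\varepsilon > 0$, there exists a $\delta > 0$ such that $\sum_{j=1}^n |F(d_j) - F(c_j)| \leq \varepsilon$ whenever $(c_1,d_1), \ldots, (c_n,d_n)$ is a finite collection of disjoint intervals of total length $\sum_{j=1}^n (d_j - c_j)$ at most $\delta$.

We define absolute continuity for a function $F: [a,b] \to \mathbb{R}$ defined on an interval $[a,b]$ similarly, with the only difference being that the intervals $(c_j,d_j)$ are of course now required to lie in the domain $[a,b]$ of $F$.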

The following exercise places absolute continuity in relation to other regularity properties:

Exercise 87

- Show that every absolutely continuous function is uniformly continuous and therefore continuous.

- Show that every absolutely continuous function is of bounded variation on every compact interval $[a,b]$. (Hint: first show this is true for any sufficiently small interval.) In particular (by Exercise 75), absolutely continuous functions are differentiable almost everywhere.

- Show that every Lipschitz continuous function is absolutely continuous.

- Show that the function $x \mapsto \sqrt{x}$ is absolutely continuous, but not Lipschitz continuous, on the interval $[0,1]$.

- Show that the Cantor function from Exercise 84 is continuous, monotone, and uniformly continuous, but not absolutely continuous, on $[0,1]$.

- If $f: \mathbb{R} \to \mathbb{R}$ is absolutely integrable, show that the indefinite integral $F(x) := \int_{(-\infty,x]} f(y)\,dy$ is absolutely continuous, and that $F$ is differentiable almost everywhere with $F'(x) = f(x)$ for almost every $x$.

- Show that the sum or product of two absolutely continuous functions on an interval $[a,b]$ remains absolutely continuous. What happens if we work on $\mathbb{R}$ instead of on $[a,b]$?
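Exercise 87 asserts that the Cantor function is not absolutely continuous. The following exact-arithmetic Python sketch shows the obstruction concretely:

- At stage $n$ of the middle thirds construction there are $2^n$ disjoint closed intervals, of total length $(2/3)^n \to 0$.
- The Cantor function increases by exactly $2^{-n}$ on each of them.
- So the sum of $|F(\text{right endpoint}) - F(\text{left endpoint})|$ over these intervals is always $1$, however small their total length.

The function names are hypothetical. The Cantor function is evaluated through the ternary-to-binary digit rule of Exercise 84, truncated after `depth` digits; this is exact for the interval endpoints used here.

```python
from fractions import Fraction
from typing import List, Tuple


def cantor_stage_intervals(n: int) -> List[Tuple[Fraction, Fraction]]:
    """The 2^n disjoint closed intervals of length 3^{-n} left after n steps
    of removing open middle thirds from [0, 1]."""
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(n):
        refined = []
        for a, b in intervals:
            third = (b - a) / 3
            refined.extend([(a, a + third), (b - third, b)])
        intervals = refined
    return intervals


def cantor(x: Fraction, depth: int = 60) -> Fraction:
    """Cantor function in exact arithmetic via ternary digits 0/2 -> binary 0/1
    (exact for rationals whose ternary expansion terminates within `depth` digits)."""
    if x >= 1:
        return Fraction(1)
    result, scale = Fraction(0), Fraction(1, 2)
    for _ in range(depth):
        x *= 3
        digit = int(x)  # int() truncates a non-negative Fraction, giving the next digit
        x -= digit
        if digit == 1:
            return result + scale
        result += scale * (digit // 2)
        scale /= 2
    return result


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        ivs = cantor_stage_intervals(n)
        length = sum(b - a for a, b in ivs)
        increase = sum(cantor(b) - cantor(a) for a, b in ivs)
        print(n, len(ivs), length, increase)
```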

Exercise 88

- Show that absolutely continuous functions map null sets to null sets, i.e. if $F: \mathbb{R} \to \mathbb{R}$ is absolutely continuous and $E$ is a null set then $F(E) := \{F(x): x \in E\}$ is also a null set.

- Show that the Cantor function does not have this property.

For absolutely continuous functions, we can recover the second fundamental theorem of calculus:

Theorem 89 (Second fundamental theorem for absolutely continuous functions) Let $F: [a,b] \to \mathbb{R}$ be absolutely continuous. Then $\int_{[a,b]} F'(x)\,dx = F(b) - F(a)$.

Proof: Our main tool here will be Cousin’s theorem (Exercise 47).

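By Exercise 80, $F'$ is absolutely integrable. By Exercise 8 of Notes 4, $F'$ is thus uniformly integrable. Now let $\varepsilon > 0$. By Exercise 11 of Notes 4, we can find $\kappa > 0$ such that $\int_U |F'(x)|\,dx \leq \varepsilon$ whenever $U$ is a measurable set of measure at most $\kappa$. (Here we adopt the convention that $F'$ vanishes outside of $[a,b]$.) By making $\kappa$ small enough, we may also assume from absolute continuity that $\sum_{i=1}^n |F(d_i) - F(c_i)| \leq \varepsilon$ whenever $(c_1,d_1), \ldots, (c_n,d_n)$ is a finite collection of disjoint intervals in $[a,b]$ of total length $\sum_{i=1}^n (d_i - c_i)$ at most $\kappa$.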

Let $E$ be the set of points $x \in [a,b]$ where $F$ is not differentiable, together with the endpoints $a, b$, as well as the points $x$ that are not Lebesgue points of $F'$. Thus $E$ is a null set. By outer regularity (or the definition of outer measure) we can find an open set $U$ containing $E$ of measure $m(U) < \kappa$. In particular, $\int_U |F'(x)|\,dx \leq \varepsilon$.

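Now define a gauge function $\delta: [a,b] \to (0,+\infty)$ as follows.

- If $x \in E$, we define $\delta(x) > 0$ to be small enough that the open interval $(x - \delta(x), x + \delta(x))$ lies in $U$.

- If $x \notin E$, then $F$ is differentiable at $x$ and $x$ is a Lebesgue point of $F'$. We let $\delta(x) > 0$ be small enough that $|F(y) - F(x) - F'(x)(y - x)| \leq \varepsilon|y - x|$ holds whenever $y \in [a,b]$ and $|y - x| \leq \delta(x)$. We also require that $|\frac{1}{|I|}\int_I F'(y)\,dy - F'(x)| \leq \varepsilon$ whenever $I \subset [a,b]$ is an interval containing $x$ of length at most $\delta(x)$. Such a $\delta(x)$ exists by the definition of differentiability, and of Lebesgue point. We rewrite these properties using big-O notation as $F(y) - F(x) = F'(x)(y - x) + O(\varepsilon|y - x|)$ and $\int_I F'(y)\,dy = F'(x)|I| + O(\varepsilon|I|)$.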

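Applying Cousin’s theorem, we can find a partition $a = t_0 < t_1 < \dots < t_k = b$ with $k \geq 1$, together with real numbers $t_j^* \in [t_{j-1}, t_j]$ for each $1 \leq j \leq k$ and $t_j - t_{j-1} \leq \delta(t_j^*)$.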

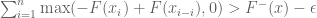

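We can express $F(b) - F(a)$ as a telescoping series

$$F(b) - F(a) = \sum_{j=1}^k F(t_j) - F(t_{j-1}).$$

To estimate the size of this sum, let us first consider those $j$ for which $t_j^* \in E$. Then, by construction, the intervals $(t_{j-1}, t_j)$ are disjoint and contained in $U$. By construction of $U$, we thus have

$$\sum_{j: t_j^* \in E} (t_j - t_{j-1}) \leq m(U) < \kappa$$

and thus

$$\sum_{j: t_j^* \in E} |F(t_j) - F(t_{j-1})| \leq \varepsilon.$$

Next, we consider those $j$ for which $t_j^* \notin E$. By construction, for those $j$ we have

$$F(t_j) - F(t_j^*) = F'(t_j^*)(t_j - t_j^*) + O(\varepsilon|t_j - t_{j-1}|)$$

and

$$F(t_j^*) - F(t_{j-1}) = F'(t_j^*)(t_j^* - t_{j-1}) + O(\varepsilon|t_j - t_{j-1}|)$$

and thus

$$F(t_j) - F(t_{j-1}) = F'(t_j^*)(t_j - t_{j-1}) + O(\varepsilon|t_j - t_{j-1}|).$$

On the other hand, from construction again we have

$$\int_{[t_{j-1}, t_j]} F'(x)\,dx = F'(t_j^*)(t_j - t_{j-1}) + O(\varepsilon|t_j - t_{j-1}|)$$

and thus

$$F(t_j) - F(t_{j-1}) = \int_{[t_{j-1}, t_j]} F'(x)\,dx + O(\varepsilon|t_j - t_{j-1}|).$$

Summing in $j$, we conclude that

$$\sum_{j: t_j^* \notin E} F(t_j) - F(t_{j-1}) = \int_S F'(x)\,dx + O(\varepsilon|b - a|),$$

where $S$ is the union of all the $[t_{j-1}, t_j]$ with $t_j^* \notin E$. By construction, this set is contained in $[a,b]$ and contains $[a,b] \backslash U$. Since $\int_U |F'(x)|\,dx \leq \varepsilon$, we conclude that

$$\int_S F'(x)\,dx = \int_{[a,b]} F'(x)\,dx + O(\varepsilon).$$

Putting everything together, we conclude that

$$F(b) - F(a) = \int_{[a,b]} F'(x)\,dx + O(\varepsilon) + O(\varepsilon|b - a|).$$

Since $\varepsilon > 0$ was arbitrary, the claim follows.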

Combining this result with Exercise 87, we obtain a satisfactory classification of the absolutely continuous functions:

Exercise 90 Show that a function $F: [a,b] \to \mathbb{R}$ is absolutely continuous if and only if it takes the form $F(x) = \int_{[a,x]} f(y)\,dy + C$ for some absolutely integrable $f: [a,b] \to \mathbb{R}$ and a constant $C$.

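Exercise 91 (Compatibility of the strong and weak derivatives in the absolutely continuous case) Let $F: [a,b] \to \mathbb{R}$ be an absolutely continuous function, and let $\phi: [a,b] \to \mathbb{R}$ be a continuously differentiable function supported in a compact subset of $(a,b)$. Show that $\int_{[a,b]} F'(x) \phi(x)\,dx = -\int_{[a,b]} F(x) \phi'(x)\,dx$.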

Inspecting the proof of Theorem 89, we see that the absolute continuity was used primarily in two ways:

- firstly, to ensure the almost everywhere existence and absolute integrability of $F'$;
- secondly, to control an exceptional null set $E$.

It turns out that one can achieve the latter control by making a different hypothesis, namely that the function $F$ is everywhere differentiable rather than merely almost everywhere differentiable. More precisely, we have

Proposition 92 (Second fundamental theorem of calculus, again) Let $[a,b]$ be a compact interval of positive length, and let $F: [a,b] \to \mathbb{R}$ be a differentiable function such that $F'$ is absolutely integrable. Then the Lebesgue integral $\int_{[a,b]} F'(x)\, dx$ of $F'$ is equal to $F(b) - F(a)$.

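Proof: This will be similar to the proof of Theorem 89, the one main new twist being that we need several open sets $U_1, U_2, \dots$ instead of just one. Let $E$ be the set of points $x \in [a,b]$ which are not Lebesgue points of $F'$, together with the endpoints $a, b$. By the Lebesgue differentiation theorem, this is a null set. Let $\varepsilon > 0$, and then let $\delta > 0$ be small enough that
$$\int_A |F'(x)|\, dx \leq \varepsilon$$
whenever $A \subset [a,b]$ is measurable with $m(A) \leq \delta$. We can also ensure that $\delta \leq \varepsilon$.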

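For every natural number $n \geq 1$ we can find an open set $U_n$ containing $E$ of measure $m(U_n) \leq \delta / 4^n$. In particular we see that $m\left( \bigcup_{n=1}^\infty U_n \right) \leq \delta / 3$ and thus $\int_{\bigcup_{n=1}^\infty U_n} |F'(x)|\, dx \leq \varepsilon$.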

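Now define a gauge function $\delta: [a,b] \to (0,+\infty)$ as follows.

- If $x \in E$, we define $\delta(x) > 0$ to be small enough that the open interval $(x - \delta(x), x + \delta(x))$ lies in $U_n$, where $n$ is the first natural number such that $|F'(x)| \leq 2^n$, and also small enough that $|F(y) - F(x) - (y - x) F'(x)| \leq |y - x|$ holds whenever $|y - x| \leq \delta(x)$. (Here we crucially use the everywhere differentiability to ensure that $F'(x)$ exists and is finite.)

- If $x \notin E$, we let $\delta(x) > 0$ be small enough that $|F(y) - F(x) - (y - x) F'(x)| \leq \varepsilon |y - x|$ holds whenever $|y - x| \leq \delta(x)$, and such that $\left| \frac{1}{|I|} \int_I F'(y)\, dy - F'(x) \right| \leq \varepsilon$ whenever $I$ is an interval containing $x$ of length at most $\delta(x)$, exactly as in the proof of Theorem 89.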

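Applying Cousin’s theorem, we can find a partition $a = t_0 < t_1 < \dots < t_k = b$ with $k \geq 1$, together with real numbers $t_j^* \in [t_{j-1}, t_j]$ for each $1 \leq j \leq k$ and $t_j - t_{j-1} \leq \delta(t_j^*)$.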

As before, we express $F(b) - F(a)$ as a telescoping series
$$F(b) - F(a) = \sum_{j=1}^k \left( F(t_j) - F(t_{j-1}) \right).$$

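For the contributions of those $j$ with $t_j^* \notin E$, we argue exactly as in the proof of Theorem 89 to conclude eventually that
$$\sum_{j: t_j^* \notin E} \left( F(t_j) - F(t_{j-1}) \right) = \int_S F'(y)\, dy + O(\varepsilon (b - a))$$
where $S$ is the union of all the $[t_{j-1}, t_j]$ with $t_j^* \notin E$. Since $[a,b] \setminus S$ is contained in $\bigcup_{n=1}^\infty U_n$ apart from at most finitely many partition points, we thus have
$$\int_S F'(y)\, dy = \int_{[a,b]} F'(y)\, dy + O(\varepsilon).$$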

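Now we turn to those $j$ with $t_j^* \in E$. By construction, we have
$$F(t_j) - F(t_{j-1}) = (t_j - t_{j-1}) F'(t_j^*) + O(t_j - t_{j-1})$$
for these intervals, and so
$$F(t_j) - F(t_{j-1}) = O\left( (1 + |F'(t_j^*)|)(t_j - t_{j-1}) \right).$$
Next, for each such $j$ we have
$$|F'(t_j^*)| \leq 2^n$$
and
$$(t_{j-1}, t_j) \subset U_n$$
for some natural number $n$, by construction. By countable additivity, we conclude that
$$\sum_{j: t_j^* \in E} \left( F(t_j) - F(t_{j-1}) \right) = O\left( \sum_{n=1}^\infty (1 + 2^n)\, m(U_n) \right) = O\left( \sum_{n=1}^\infty \frac{(1 + 2^n) \delta}{4^n} \right) = O(\varepsilon).$$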

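Putting all this together, we again have
$$F(b) - F(a) = \int_{[a,b]} F'(y)\, dy + O(\varepsilon) + O(\varepsilon (b - a)).$$

Since $\varepsilon > 0$ was arbitrary, the claim follows.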
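The counting step for the tags in $E$ at the end of this proof can be written out with explicit constants. Assume, as in the construction, that $m(U_n) \leq \delta / 4^n$, that $\delta \leq \varepsilon$, and that each tag $t_j^* \in E$ was assigned the first natural number $n = n_j$ with $|F'(t_j^*)| \leq 2^{n_j}$, so that $(t_{j-1}, t_j) \subset U_{n_j}$ and, by the gauge condition $|F(y) - F(x) - (y - x) F'(x)| \leq |y - x|$ applied at $x = t_j^*$, $|F(t_j) - F(t_{j-1})| \leq (1 + |F'(t_j^*)|)(t_j - t_{j-1})$. For fixed $n$, the intervals $(t_{j-1}, t_j)$ with $n_j = n$ are disjoint subsets of $U_n$, so their total length is at most $m(U_n)$; summing the two geometric series then gives

$$\sum_{j: t_j^* \in E} |F(t_j) - F(t_{j-1})| \leq \sum_{n=1}^\infty (1 + 2^n) \sum_{j: t_j^* \in E,\ n_j = n} (t_j - t_{j-1}) \leq \sum_{n=1}^\infty (1 + 2^n)\, m(U_n) \leq \delta \sum_{n=1}^\infty \left( 4^{-n} + 2^{-n} \right) = \frac{4}{3} \delta \leq \frac{4}{3} \varepsilon.$$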

Remark 93 The above proposition is yet another illustration of how the property of everywhere differentiability is significantly better than that of almost everywhere differentiability. In practice, though, the above proposition is not as useful as one might initially think, because there are very few methods that establish the everywhere differentiability of a function that do not also establish continuous differentiability (or at least Riemann integrability of the derivative), at which point one could just use Theorem 11 instead.

Exercise 94 Let $F: [-1,1] \to \mathbb{R}$ be the function defined by setting $F(x) := x^2 \sin(1/x^3)$ when $x$ is non-zero, and $F(0) := 0$. Show that $F$ is everywhere differentiable, but the derivative $F'$ is not absolutely integrable, and so the second fundamental theorem of calculus does not apply in this case (at least if we interpret $\int_{[-1,1]} F'(x)\, dx$ using the absolutely convergent Lebesgue integral). See however the next exercise.
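Numerically, the failure of absolute integrability shows up as unbounded growth of $\int_{[h,1]} |F'(x)|\, dx$ as $h \to 0$. The sketch below assumes the function of Exercise 94 is $F(x) = x^2 \sin(1/x^3)$ for $x \neq 0$ (with $F(0) = 0$) and uses an adaptive midpoint rule whose step is a small fraction of the local oscillation period; it is an illustration, not a substitute for the proof. Heuristically, $|F'(x)| \approx 3x^{-2} |\cos(1/x^3)|$ near $0$ and $|\cos|$ averages to $2/\pi$, so one expects growth roughly like $(6/\pi)(1/h - 1)$.

```python
# Illustrative sketch only; dF and abs_integral are hypothetical names.
import math


def dF(x: float) -> float:
    # F(x) = x^2 sin(1/x^3) gives F'(x) = 2x sin(1/x^3) - 3 x^(-2) cos(1/x^3) for x != 0
    return 2 * x * math.sin(x ** -3) - 3 * x ** -2 * math.cos(x ** -3)


def abs_integral(h: float, c: float = 0.01) -> float:
    """Midpoint-rule approximation of the integral of |F'| over [h, 1].

    The step c * x^4 is a small fraction of the local period of cos(1/x^3),
    which is roughly (2 pi / 3) * x^4, so the oscillations are resolved."""
    total, x = 0.0, h
    while x < 1.0:
        step = min(c * x ** 4, 1.0 - x)
        total += abs(dF(x + step / 2)) * step
        x += step
    return total


if __name__ == "__main__":
    for h in (0.2, 0.1, 0.05):
        # heuristically comparable to (6 / pi) * (1 / h - 1) once h is small
        print(h, abs_integral(h))
```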

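Exercise 95 (Henstock-Kurzweil integral) Let $[a,b]$ be a compact interval of positive length. We say that a function $f: [a,b] \to \mathbb{R}$ is Henstock-Kurzweil integrable with integral $L \in \mathbb{R}$ if for every $\varepsilon > 0$ there exists a gauge function $\delta: [a,b] \to (0,+\infty)$ such that one has
$$\left| \sum_{j=1}^k f(t_j^*)(t_j - t_{j-1}) - L \right| \leq \varepsilon$$
whenever $k \geq 1$ and $a = t_0 < t_1 < \dots < t_k = b$ and $t_1^*, \dots, t_k^*$ are such that $t_j^* \in [t_{j-1}, t_j]$ and $|t_j - t_{j-1}| \leq \delta(t_j^*)$ for every $1 \leq j \leq k$. When this occurs, we call $L$ the Henstock-Kurzweil integral of $f$ and write it as $\int_{[a,b]} f(x)\, dx$.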

- Show that if a function is Henstock-Kurzweil integrable, it has a unique Henstock-Kurzweil integral. (Hint: use Cousin’s theorem.)

- Show that if a function is Riemann integrable, then it is Henstock-Kurzweil integrable, and the Henstock-Kurzweil integral $\int_{[a,b]} f(x)\, dx$ is equal to the Riemann integral $\int_a^b f(x)\, dx$.

- Show that if a function $f$ is everywhere defined, everywhere finite, and is absolutely integrable, then it is Henstock-Kurzweil integrable, and the Henstock-Kurzweil integral $\int_{[a,b]} f(x)\, dx$ is equal to the Lebesgue integral $\int_{[a,b]} f(x)\, dx$. (Hint: this is a variant of the proof of Theorem 89 or Proposition 92.)

- Show that if $F: [a,b] \to \mathbb{R}$ is everywhere differentiable, then $F'$ is Henstock-Kurzweil integrable, and the Henstock-Kurzweil integral $\int_{[a,b]} F'(x)\, dx$ is equal to $F(b) - F(a)$. (Hint: this is a variant of the proof of Theorem 89 or Proposition 92.)

- Explain why the above results give an alternate proof of Exercise 10 and of Proposition 92.
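Part 4 can be explored numerically on the function of Exercise 94, assumed here to be $F(x) = x^2 \sin(1/x^3)$ for $x \neq 0$ and $F(0) = 0$ on $[-1,1]$. The sketch below builds one tagged partition that is $\delta$-fine for a hypothetical gauge ($\delta(0) = 2h$, $\delta(x) = c|x|^4$ otherwise) and evaluates the tagged sum $\sum_j F'(t_j^*)(t_j - t_{j-1})$. It exhibits only a single gauge and a single partition, so it does not verify the $\varepsilon$-$\delta$ definition. The central piece $[-h, h]$ is tagged at $0$, where $F'(0) = 0$, so it contributes nothing to the sum although $F(h) - F(-h) = 2h^2 \sin(1/h^3)$; the discrepancy with $F(1) - F(-1)$ is therefore at most about $2h^2$ plus quadrature error, even though $F'$ is not absolutely integrable.

```python
# Illustrative sketch only; gauge, tagged_partition and hk_sum are hypothetical names.
import math


def F(x: float) -> float:
    return x * x * math.sin(x ** -3) if x != 0 else 0.0


def dF(x: float) -> float:
    if x == 0:
        return 0.0  # F'(0) = 0, since |F(x)| <= x^2
    return 2 * x * math.sin(x ** -3) - 3 * x ** -2 * math.cos(x ** -3)


def gauge(x: float, h: float, c: float) -> float:
    # Hypothetical gauge: generous at 0, of size c * |x|^4 elsewhere
    return 2 * h if x == 0 else c * abs(x) ** 4


def tagged_partition(h: float = 0.1, c: float = 0.01):
    """Pieces (l, r, tag) covering [-1, 1]: [-h, h] tagged at 0, pieces of width
    min(c x^4, 1 - x) on [h, 1] tagged at their midpoints, and the mirror
    images of the latter on [-1, -h]. Every piece satisfies r - l <= gauge(tag)."""
    right = []
    x = h
    while x < 1.0:
        step = min(c * x ** 4, 1.0 - x)
        right.append((x, x + step, x + step / 2))
        x += step
    left = [(-r, -l, -t) for (l, r, t) in reversed(right)]
    return left + [(-h, h, 0.0)] + right


def hk_sum(pieces) -> float:
    # Tagged Riemann sum: sum over pieces of F'(t_j^*) (t_j - t_{j-1})
    return sum(dF(t) * (r - l) for (l, r, t) in pieces)


if __name__ == "__main__":
    s = hk_sum(tagged_partition())
    print(s, F(1.0) - F(-1.0))   # F(1) - F(-1) = 2 sin(1)
```

With $h = 0.1$ and $c = 0.01$ the sum lies within about $0.02$ of $2 \sin 1 \approx 1.683$; shrinking $h$ (and $c$) reduces the error at the cost of many more pieces near $0$.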

Remark 96 As the above exercise indicates, the Henstock-Kurzweil integral (also known as the Denjoy integral or Perron integral) extends the Riemann integral and the absolutely convergent Lebesgue integral, at least as long as one restricts attention to functions that are defined and are finite everywhere (in contrast to the Lebesgue integral, which is willing to tolerate functions being infinite or undefined so long as this only occurs on a null set). It is the notion of integration that is most naturally associated with the fundamental theorem of calculus for everywhere differentiable functions, as seen in part 4 of the above exercise; it can also be used as a unified framework for all the proofs in this section that invoked Cousin’s theorem. The Henstock-Kurzweil integral can also integrate some (highly oscillatory) functions that the Lebesgue integral cannot, such as the derivative $F'$ of the function $F$ appearing in Exercise 94. This is analogous to how conditional summation $\lim_{N \to \infty} \sum_{n=1}^N a_n$ can sum conditionally convergent series $\sum_{n=1}^\infty a_n$, even if they are not absolutely summable. However, much as conditional summation is not always well-behaved with respect to rearrangement, the Henstock-Kurzweil integral does not always react well to changes of variable; also, due to its reliance on the order structure of the real line $\mathbb{R}$, it is difficult to extend the Henstock-Kurzweil integral to more general spaces, such as the Euclidean space $\mathbb{R}^d$, or to abstract measure spaces.
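The conditional-summation side of this analogy can be seen on the classical alternating harmonic series (a standard example, not taken from these notes): its partial sums converge to $\log 2$ although the series of absolute values diverges, while the rearrangement that takes two positive terms before each negative one converges to $\frac{3}{2} \log 2$ instead. The function names below are illustrative.

```python
# Illustrative sketch only; alternating_harmonic and rearranged are hypothetical names.
import math


def alternating_harmonic(n_terms: int) -> float:
    # Partial sum 1 - 1/2 + 1/3 - 1/4 + ... with n_terms terms
    return sum((-1) ** (n + 1) / n for n in range(1, n_terms + 1))


def rearranged(n_blocks: int) -> float:
    # Same terms in a different order: two odd (positive) terms, then one even (negative) term
    total, odd, even = 0.0, 1, 2
    for _ in range(n_blocks):
        total += 1 / odd + 1 / (odd + 2) - 1 / even
        odd += 4
        even += 2
    return total


if __name__ == "__main__":
    print(alternating_harmonic(100_000), math.log(2))
    print(rearranged(100_000), 1.5 * math.log(2))
```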

197 comments


12 June, 2023 at 2:19 pm

Anonymous

Dear professor Tao: Do we assume $F$ to be continuous in Exercise  ? (showing the Dini derivatives are measurable)

[No; monotonicity is sufficient here. Note that monotone functions have only countably many discontinuities in any event. -T]

16 June, 2023 at 4:12 pm

Anonymous

Thank you professor, also in the proof of lemma 59, I think the statement “Thus we see that  is contained inside the union of the intervals … ” should be “Thus we see that  is contained inside the union of the intervals … ”

[Corrected, thanks – T.]

17 June, 2023 at 5:19 pm

Sam

Dear professor Tao: Can you elaborate a bit more on your statement in Remark 60: “Note if  was not assumed to be continuous, then one would lose a factor of  here from the second part of Lemma 56, and one would then be unable to prevent  from being up to  times as large as  .”? Do you mean that  on a set of positive measure? What is referred to by “lose a factor of  ”? Thank you so much.

[Yes, this is the sort of bound one would get from using the second part of Lemma 56. -T]

18 June, 2023 at 9:30 am

Sam

Dear professor Tao: I see that the second part of Lemma 56 gives

How does one derive the given bound then?

20 June, 2023 at 9:48 am

Sam

Dear professor Tao: I wonder why the bound  in Remark 60 is a consequence of part 2 of Lemma 56?

[Modify the proof of Lemma 59 by using the second part of Lemma 56 instead of the first part, which causes an additional loss of  in the conclusion of that lemma. Then repeat the arguments preceding Lemma 59 to conclude that  holds outside of a null set. -T]

21 June, 2023 at 12:35 am

Sam

Thank you so much professor, I cannot appreciate your warm patience more.

21 June, 2023 at 9:09 pm

Anonymous

Dear professor Tao: , of total measure at most  . I feel like the fact that we can find

arbitrarily close to the right of  such that