Mertens’ theorems are a set of classical estimates concerning the asymptotic distribution of the prime numbers:

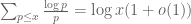

Theorem 1 (Mertens’ theorems) In the asymptotic limit

, we have

where

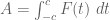

is the Euler-Mascheroni constant, defined by requiring that

in the limit

.

The third theorem (3) is usually stated in exponentiated form

but in the logarithmic form (3) we see that it is strictly stronger than (2), in view of the asymptotic .

Remarkably, these theorems can be proven without the assistance of the prime number theorem

which was proven about two decades after Mertens’ work. (But one can certainly use versions of the prime number theorem with good error term, together with summation by parts, to obtain good estimates on the various errors in Mertens’ theorems.) Roughly speaking, the reason for this is that Mertens’ theorems only require control on the Riemann zeta function in the neighbourhood of the pole at

, whereas (as discussed in this previous post) the prime number theorem requires control on the zeta function on (a neighbourhood of) the line

. Specifically, Mertens’ theorem is ultimately deduced from the Euler product formula

valid in the region (which is ultimately a Fourier-Dirichlet transform of the fundamental theorem of arithmetic), and following crude asymptotics:

Proposition 2 (Simple pole) For

sufficiently close to

with

, we have

and

Proof: For as in the proposition, we have

for any natural number

and

, and hence

Summing in and using the identity

, we obtain the first claim. Similarly, we have

and by summing in and using the identity

(the derivative of the previous identity) we obtain the claim.

The first two of Mertens’ theorems (1), (2) are relatively easy to prove, and imply the third theorem (3) except with replaced by an unspecified absolute constant. To get the specific constant

requires a little bit of additional effort. From (4), one might expect that the appearance of

arises from the refinement

that one can obtain to (6). However, it turns out that the connection is not so much with the zeta function, but with the Gamma function, and specifically with the identity (which is of course related to (7) through the functional equation for zeta, but can be proven without any reference to zeta functions). More specifically, we have the following asymptotic for the exponential integral:

Proposition 3 (Exponential integral asymptotics) For sufficiently small

, one has

A routine integration by parts shows that this asymptotic is equivalent to the identity

which is the identity mentioned previously.

Proof: We start by using the identity to express the harmonic series

as

or on summing the geometric series

Since , we thus have

making the change of variables , this becomes

As ,

converges pointwise to

and is pointwise dominated by

. Taking limits as

using dominated convergence, we conclude that

or equivalently

The claim then follows by bounding the portion of the integral on the left-hand side.

Below the fold I would like to record how Proposition 2 and Proposition 3 imply Theorem 1; the computations are utterly standard, and can be found in most analytic number theory texts, but I wanted to write them down for my own benefit (I always keep forgetting, in particular, how the third of Mertens’ theorems is proven).

— 1. Proof of Mertens’ theorems —

Let be as in Proposition 2. Taking logarithms of

using (5), we obtain the asymptotic

using the standard branch of the logarithm; if we instead compute the logarithmic derivative , we obtain the closely related asymptotic

which by the approximation also gives

These are already very close to Mertens’ theorems, except that the sharp cutoff has been replaced by a smoother cutoff

. To pass from the smooth cutoff to the rough, we use Fourier analysis:

Proposition 4 Let

be a compactly supported, Riemann integrable function independent of

. Then as

, we have

Proof: By approximating above and below by smooth functions, we may assume without loss of generality that

is smooth. Applying the Fourier inversion formula to the function

, we thus have a Fourier representation of the form

for some rapidly decreasing function . The left-hand side of (10) may then be rewritten as

The summation here may be crudely bounded in magnitude by

thanks to (9). From this and the rapid decrease of , we see that the contribution of the integrand for which

(say) is

. In the remaining region, we may apply (9) to estimate the left-hand side of (10) as

The net contribution of the error is again

, so from the rapid decrease of

again we may rewrite this expression as

and the claim then follows by integrating (11) on .

Setting in the above proposition gives a weak version of the first Mertens’ theorem (1), in which the

error term has worsened to

. To recover the full

term by this Fourier-analytic method requires a more precise accounting of error terms in the above estimates. Firstly, if one uses (7) instead of (6), one eventually can improve (9) to

for some absolute constant , and for

sufficiently close to

with

. Next, we recall the analogue of (11) for the function

, namely

which can be easily verified through contour integration. This formula is not ideal for our purposes (it would require controlling up and down the line

), so we truncate it, introducing the function

where is a smooth cutoff to a sufficiently small interval

around the origin, that equals one on

, for some small fixed

. Repeating the proof of Proposition 4, and using (12) instead of (9), we conclude (if

is small enough) that

Routine calculation shows that the right-hand side is . On the other hand, Littlewood-Paley theory can be used to show that

(say). From this we have that

and (1) follows.

Remark 1 A more elementary proof of (1) can be obtained by starting with the identity

and summing to conclude that

On the one hand, Stirling’s formula gives

. On the other hand, we have

and

, hence

from which (1) easily follows.

We now skip to the third Mertens’ theorem (3), since as observed previously the second Mertens’ theorem (2) follows as a corollary (and also follows from the first theorem through summation by parts). Let be a fixed quantity (independent of

). From (8) with

we have

where the asymptotic notation refers to the limit . Let us first consider the contribution to this sum from those

with

:

By Taylor expansion we have , and hence the above sum is equal to

or equivalently

where . From Proposition 4 we have

for any fixed . One can bound the tail error from

from the first Mertens’ theorem (1) and dyadic decomposition, and on taking limits as

we conclude that

scaling by

and then using Proposition 3, we conclude that

subtracting this from (13) we conclude that

From the mean value theorem we have

so from the first Mertens’ theorem (1), we can write the left-hand side of (14) as

Putting all this together, we see that

Since may be chosen arbitrarily small, we obtain the third Mertens’ theorem (3) as required.

30 comments

Comments feed for this article

18 December, 2013 at 3:28 pm

The beauty of the Riemann sphere | cartesian product

[…] Mertens’ theorems (terrytao.wordpress.com) […]

26 December, 2013 at 10:41 am

MrCactu5 (@MonsieurCactus)

So let me get this straight, Prof Tao. In order to do analysis on the primes we need a good scaling for our graph paper:

Rescale the primes as

Weight our function by

And it is just like the Riemann integral. The closest I can find is logarithmic graph paper. http://www.printablepaper.net/category/log

7 January, 2014 at 6:48 am

Mizar

Dear Prof. Tao, thank you for this great post! , right?

, right? twice, instead of

twice, instead of  .

.

Using Proposition 4 we obtain a slightly weaker statement than that in the first Theorem, namely

Moreover I want to point out two (negligible) typos: after you talk about dyadic decomposition, you write

[Corrected, thanks – T.]

28 January, 2014 at 9:19 pm

Polymath8b, VII: Using the generalised Elliott-Halberstam hypothesis to enlarge the sieve support yet further | What's new

[…] from Mertens’ theorem one easily verifies […]

6 June, 2014 at 12:23 pm

jaspgus

Hello,

I have been finding some difficulty when I try to fill in some of the details in the proof of proposition 4, specifically when applying it to the smooth approximations of![1_{[0,1]}](https://s0.wp.com/latex.php?latex=1_%7B%5B0%2C1%5D%7D&bg=ffffff&fg=545454&s=0&c=20201002) . Namely, the implied constants I’ve been getting depend on the magnitude of the derivatives of the approximations, which becomes a problem since I think these get very large as the approximations get better.

. Namely, the implied constants I’ve been getting depend on the magnitude of the derivatives of the approximations, which becomes a problem since I think these get very large as the approximations get better.

Do you have any tips I could try? The only kind of rapid decay on the fourier transform I’ve really tried come from repeated integration by parts, perhaps there is a stronger type of decay?

6 June, 2014 at 4:10 pm

Terence Tao

Oops, you’re right, the argument provided only gives the weaker error term of . I’ve now put in the details of the more complicated argument that gives the O(1) error term this way (as well as a more elementary proof of the O(1) term that avoids all Fourier analysis and zeta function estimates).

. I’ve now put in the details of the more complicated argument that gives the O(1) error term this way (as well as a more elementary proof of the O(1) term that avoids all Fourier analysis and zeta function estimates).

5 July, 2014 at 8:58 am

sdwkn__lsha

May will be useful

21 August, 2014 at 11:46 am

Large gaps between consecutive prime numbers | What's new

[…] compute the sum here, we first observe from Mertens’ theorem (discussed in this previous blog post) […]

5 September, 2014 at 7:10 pm

David Metzler

A somewhat idle question inspired by your comments here and elsewhere about asymptotics for almost primes: given an arbitrary prime signature , is a PNT-style asymptotic result known for the set of primes with that signature? I would guess so, and I would think that it just takes a bit more work with zeta (but I’m a geometer, not a number theorist!). Or does this require sieving, and sometimes run into the parity problem? I’d also be interested in the case where the prime signature is ordered, i.e. where we separate 2^2 * 3 = 12 from 2 * 3^2 = 18, for example. Perhaps that makes it much harder?

, is a PNT-style asymptotic result known for the set of primes with that signature? I would guess so, and I would think that it just takes a bit more work with zeta (but I’m a geometer, not a number theorist!). Or does this require sieving, and sometimes run into the parity problem? I’d also be interested in the case where the prime signature is ordered, i.e. where we separate 2^2 * 3 = 12 from 2 * 3^2 = 18, for example. Perhaps that makes it much harder?

I’m planning to get my young kids interested in a “race” between the cumulative counts of the sets of primes with various signatures (for small n). E.g. they can find out when semiprimes start to beat primes. It would be nice to know what the asymptotic story is in general for this “race”. Thanks!

6 September, 2014 at 8:07 am

Terence Tao

Yes, there are asymptotics for these sorts of numbers, see e.g. http://mathoverflow.net/questions/35927/asymptotic-density-of-k-almost-primes for some discussion of the case . As far as asymptotics are concerned, pretty much everything is known about the average prime factorisation of a single large number

. As far as asymptotics are concerned, pretty much everything is known about the average prime factorisation of a single large number  , ultimately thanks to multiplicative number theory techniques such as the theory of the Riemann zeta function. (The error term in these asymptotics is, of course, a completely different story, being very sensitive to the Riemann hypothesis and related conjectures.) Multiplicative number theory is one of the few powerful techniques we have that is not subject to the parity barrier, ultimately coming from the fundamental theorem of arithmetic that multiplicatively links the natural numbers to the primes (which leads to the Euler product factorisation of zeta, etc.). Unfortunately, multiplicative techniques fail completely once one starts looking at the primality of tuples such as

, ultimately thanks to multiplicative number theory techniques such as the theory of the Riemann zeta function. (The error term in these asymptotics is, of course, a completely different story, being very sensitive to the Riemann hypothesis and related conjectures.) Multiplicative number theory is one of the few powerful techniques we have that is not subject to the parity barrier, ultimately coming from the fundamental theorem of arithmetic that multiplicatively links the natural numbers to the primes (which leads to the Euler product factorisation of zeta, etc.). Unfortunately, multiplicative techniques fail completely once one starts looking at the primality of tuples such as  rather than a single number

rather than a single number  ; for instance, there is no useful fundamental theorem of arithmetic that only involves twin primes. (Well, one could make one tautologically, by introducing the set of natural numbers with only twin primes in their prime factorisation, but we have no useful additional information about that set (e.g. its asymptotic density) which one could then use to say something about twin primes.)

; for instance, there is no useful fundamental theorem of arithmetic that only involves twin primes. (Well, one could make one tautologically, by introducing the set of natural numbers with only twin primes in their prime factorisation, but we have no useful additional information about that set (e.g. its asymptotic density) which one could then use to say something about twin primes.)

8 September, 2014 at 8:41 am

arch1

Is it also the case that we have no useful additional info about the set of natural numbers whose *largest* prime factor is a twin?

10 September, 2014 at 7:27 pm

David Metzler

Terry—thanks very much for the response. I’ll take a look at that Math Overflow thread.

8 April, 2015 at 4:54 am

Anonymous

For a different proof of Proposition 4 and some general results see the very recent paper: Dumitru Popa, Polya-Radoux type results for some arithmetical functions, J. M.A.A, 2015, now article In Press, Accepted manuscript.

Dumitru Popa.

11 July, 2017 at 1:46 am

Stephen Glasby

I am interested to know more about the constant in equation (1). Landau proved it lies between -2 and 2. For x up to 10^7 it lies between -2 and 0.

Has progress been made since Landau’s proof of Merten’s equation (1)?

11 July, 2017 at 8:28 am

Terence Tao

The most recent result in this direction I know of is by Dusart, see Theorem 6.11 of https://arxiv.org/abs/1002.0442 .

Best,

Terry

11 July, 2017 at 11:29 am

Stephen Glasby

Many thanks Terry. Dusart’s approximation will suffice for my group theoretic application. (BTW: the only time I met you was January 1985 at the Australian Mathematics Summer School in Canberra, and even then you had an interest in number theory.)

6 March, 2018 at 2:30 pm

John Dienes

Dear Professor Tao:

I am writing a review about fragmentation and such, making much use of statistical concepts. To illustrate the power of such ideas I show that the density of primes is given by w= 1/log n. To derive this I note that the probability that w is prime is given by (1-1/2)(1-1/3)(1-1/5)…(1-1/n). Then some more “rough and ready” math shows this is equal to 1/log n. Saying that the probability is the same as the density is not much of a stretch. To confirm this neat result I used the table on p. 3 of Edwards giving the number of primes between 2,500,000 and 3,000,000: 216,816 – 183,072= 33,744. Thus in the neighborhood of 2,750,000 the density is 0.067488, while 1/log 2,750,000 is 0.067400, which passes for pretty good confirmation in engineering.

But then I ran across Mertens’ third theorem in Hardy and Wright and they would have me take exp(-gamma)/log n, smaller by 0.561. Clearly there is a problem with my example, but I hate to give up on it, especially since the conclusion checks out nicely.

The same calculation for 10**24 and 10**25 yields 0.01761 while 1/log n = 0.01737. So why does Mertens fail me? I have read and reread your discussion on the internet, but my math is not so sophisticated, having quit in 1950. It did not help. (I do a lot of applied math, which is somewhat different.)

I would greatly appreciate some explanation of this awful contradiction.

John Dienes

7 March, 2018 at 7:48 am

Terence Tao

This is known as the Mertens paradox, and is discussed on my blog at https://terrytao.wordpress.com/2015/01/04/254a-supplement-4-probabilistic-models-and-heuristics-for-the-primes-optional/ . Basically, the resolution comes from the fact that the events “p divides n”, for randomly selected n between 1 and x, do not behave independently once p becomes large. For instance, if p and q are primes between sqrt(x) and x, it is not possible for p and q to both divide n simultaneously, so the events “p divides n” and “q divides n” are far from independent. As a consequence one cannot simply multiply together the probabilities 1-1/p to predict the density of primes up to x; there is a correction at large primes which ends up contributing the factor.

factor.

6 October, 2018 at 8:45 am

Anonymous

Basically, the proof of the third theorem splits one infinity log(s-1) into two infinities log(epslon) and log(log x). The first cancels with log(epslon) in the upper sum over p>x, and the second left in the final result of the lower sum over p<=x.

The problem is: how can the upper sum keeps providing the full Euler constant value as x going to infinity? As I think, both upper sum and lower sum provide part of the Euler constant value, and the upper sum provides decreasing portion (to 0) when x increases (to infinity).

6 October, 2018 at 10:17 am

Anonymous

Or put it this way: Denote by gamma the Euler constant. Calculate the limit as x->infinity, while epsilon is fixed.

lim(UpperSum) = log(epsilon) + gamma +O(epsilon)

The limit of an empty sum must be 0. So we must have

0 = log(epsilon) + gamma +O(epsilon) which is absurd.

19 October, 2019 at 6:47 am

Juan Orozco (@JuanOrozcoNT)

Professor Tao, when exponentiating the log version of the third theorem, shouldn’t “+O(1)” become “times O(1)”, meaning the error is a constant factor? Thanks for this post. It has been a great resource throughout the years.

The exponential of is

is  – T.]

– T.]

7 October, 2020 at 7:09 am

A.S.Roy

Sorry I am not very comfortable with Fourier analysis and this is probably a really naive question, but I had to ask it, since this is the only one which I could not resolve (despite my best attempts) in the proof of Proposition 4: on applying the Fourier inversion formula on the function , I am getting something like

, I am getting something like

where the function is given by the following integral:

is given by the following integral:

It is not clear to me why $f$ decreases rapidly: I tried using integration by parts (taking into account the smoothness of ) to obtain

) to obtain

where![\text{supp }F \subset [-c,c]](https://s0.wp.com/latex.php?latex=%5Ctext%7Bsupp+%7DF+%5Csubset+%5B-c%2Cc%5D&bg=ffffff&fg=545454&s=0&c=20201002) and

and  for some

for some  since

since  has compact support. While the integral occurring in the previous expression is

has compact support. While the integral occurring in the previous expression is  the first term seems to be

the first term seems to be  , so all I keep getting is

, so all I keep getting is  . Could you kindly let me know what I am doing wrong? I would be really obliged for the same.

. Could you kindly let me know what I am doing wrong? I would be really obliged for the same.

7 October, 2020 at 11:48 am

Terence Tao

The boundary terms involve and

and  rather than

rather than  , and hence vanish.

, and hence vanish.

13 June, 2021 at 6:38 am

Webspinner

Dear Prof. Tao,

you use a simple sentence to claim that in the proof of Prop. 2, but one does have to use the real derivative of the absolute value, or am I mistaken? I failed to immediately find an algebraic manipulation making that clear.

in the proof of Prop. 2, but one does have to use the real derivative of the absolute value, or am I mistaken? I failed to immediately find an algebraic manipulation making that clear.

[Yes, this is easiest to see using an estimation of the derivative of . -T]

. -T]

13 June, 2021 at 6:55 am

Webspinner

And Stirling’s formula may be replaced by a simple summation by parts in Remark 1.

13 January, 2022 at 4:19 am

Ian Petrow

Apologies for the pedantry, but: Shouldn’t it either be “Mertens Theorem” or “Mertens’s Theorem”, since “Mertens'” is not the proper way to make a possessive out of Mertens. And yet, it seems to be common to write “Mertens'” in the literature.

13 January, 2022 at 10:03 am

Anonymous

Idiot. Mertens is the last name of the mathematician “Franz Mertens”.

13 January, 2022 at 8:38 pm

N is a number

Yes that is why Mertens’s could have made sense.

But in any case the applicability of the result would remain the same, its power and nature would also not change if we call it by some other name.

Afterall, what’s in a name? What people call rose would still smell sweet if called by some other name. -a reworded quote of Shakespeare.

13 January, 2022 at 8:34 pm

Anonymous

Mertens’ is the correct possessive form of Mertens. When a word ends with a ‘s’, such as “Mertens”, just need to add an ‘ to get its possessive form. This is basic English grammar.

13 January, 2022 at 8:52 pm

Aditya Guha Roy

If I recall correctly, then Mertens’ theorem is also useful in a step to establish Chebyshev bounds which is the starting point of almost any proof of the prime number theorem.

Also if I recall correctly, then I read on the internet a trustworthy article on how Euler knew/ foresighted this result almost a century before Franz Mertens actually published this paper.