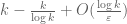

This is the second thread for the Polymath8b project to obtain new bounds for the quantity

$$H_m := \liminf_{n \to \infty} (p_{n+m} - p_n),$$

either for small values of $m$ (in particular $m=1$) or asymptotically as $m \to \infty$. The previous thread may be found here. The currently best known bounds on $H_m$ are:

- (Maynard) $H_1 \leq 600$.

- (Polymath8b, tentative)

.

- (Polymath8b, tentative)

for sufficiently large $m$.

- (Maynard) Assuming the Elliott-Halberstam conjecture, $H_1 \leq 12$, $H_2 \leq 600$, and $H_m \ll m^3 e^{2m}$.

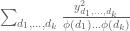

Following the strategy of Maynard, the bounds on $H_m$ proceed by combining four ingredients:

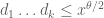

- Distribution estimates $EH[\theta]$ or $MPZ[\varpi,\delta]$ for the primes (or related objects);

- Bounds for the minimal diameter $H(k)$ of an admissible $k$-tuple;

- Lower bounds for the optimal value $M_k$ to a certain variational problem;

- Sieve-theoretic arguments to convert the previous three ingredients into a bound on $H_m$.

Accordingly, the most natural routes to improve the bounds on $H_m$ are to improve one or more of the above four ingredients.

Ingredient 1 was studied intensively in Polymath8a. The following results are known or conjectured (see the Polymath8a paper for notation and proofs):

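- (Bombieri-Vinogradov) $EH[\theta]$ is true for all $0 < \theta < 1/2$.

- (Polymath8a) $MPZ[\varpi,\delta]$ is true for $600\varpi + 180\delta < 7$.

- (Polymath8a, tentative) $MPZ[\varpi,\delta]$ is true for $1080\varpi + 330\delta < 13$.

- (Elliott-Halberstam conjecture) $EH[\theta]$ is true for all $0 < \theta < 1$.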

Ingredient 2 was also studied intensively in Polymath8a, and is more or less a solved problem for the values of $k$ of interest (with exact values of $H(k)$ for

, and quite good upper bounds for $H(k)$ for

, available at this page). So the main focus currently is on improving Ingredients 3 and 4.
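Ingredient 2 concerns $H(k)$, the minimal diameter $h_k - h_1$ of an admissible $k$-tuple $h_1 < \cdots < h_k$, i.e. one that misses at least one residue class modulo every prime. For very small $k$ this can simply be brute-forced. The sketch below is a naive search written purely for illustration (nothing like the methods behind the tables mentioned above); it recovers $H(5) = 12$, the diameter behind Maynard's conditional bound $H_1 \leq 12$.

```python
from itertools import combinations


def primes_up_to(n: int) -> list[int]:
    """Primes p <= n by trial division (n is tiny here)."""
    return [p for p in range(2, n + 1) if all(p % q for q in range(2, int(p ** 0.5) + 1))]


def is_admissible(tup: tuple[int, ...]) -> bool:
    """True if, for every prime p, the tuple misses some residue class mod p.

    Only primes p <= k need checking: k integers cannot cover all classes mod p > k.
    """
    k = len(tup)
    return all(len({h % p for h in tup}) < p for p in primes_up_to(k))


def minimal_diameter(k: int) -> int:
    """Brute-force H(k), the least diameter of an admissible k-tuple (small k only)."""
    if k == 1:
        return 0
    diameter = 1
    while True:
        # Normalise candidate tuples to start at 0 and end at `diameter`.
        for middle in combinations(range(1, diameter), k - 2):
            if is_admissible((0, *middle, diameter)):
                return diameter
        diameter += 1


print([minimal_diameter(k) for k in range(1, 6)])  # prints [0, 2, 6, 8, 12]
```

The search is exponential in $k$, so it is only a way to make the definition concrete.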

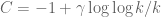

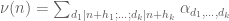

For Ingredient 3, the basic variational problem is to understand the quantity

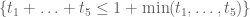

for bounded measurable functions, not identically zero, on the simplex

with being the quadratic forms

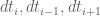

and

Equivalently, one has

where is the positive semi-definite bounded self-adjoint operator

so is the operator norm of

. Another interpretation of

is that the probability that a rook moving randomly in the unit cube

stays in simplex

for

moves is asymptotically

.
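As a sanity check on the definitions of $I_k$ and $J_k^{(m)}$, the ratio can be evaluated exactly for the one-parameter family $F(t) = (1 - t_1 - \cdots - t_k)^a$, using $\int_{{\cal R}_k} (1 - t_1 - \cdots - t_k)^b\, dt = \frac{b!}{(b+k)!}$. This family is chosen here only because the integrals are elementary: it is a special case of the GPY-type choice in which $F$ depends only on $t_1+\cdots+t_k$, not one of the functions used in the project. The ratio works out to $\frac{2k(2a+1)}{(a+1)(2a+k+1)}$, which one can check algebraically is always below 4.

```python
from fractions import Fraction
from math import factorial


def simplex_moment(k: int, b: int) -> Fraction:
    """Integral of (1 - t_1 - ... - t_k)^b over the simplex R_k, which equals b!/(b+k)!."""
    return Fraction(factorial(b), factorial(b + k))


def rayleigh_quotient(k: int, a: int) -> Fraction:
    """sum_m J_k^(m)(F) / I_k(F) for the illustrative test function F = (1 - t_1 - ... - t_k)^a."""
    # I_k(F): integrate F^2 = (1 - sum t)^(2a) over R_k.
    I = simplex_moment(k, 2 * a)
    # The inner t_m-integral equals (1 - sum_{i != m} t_i)^(a+1) / (a+1); squaring and
    # integrating over R_{k-1} gives J_k^(m)(F), which is the same for every m by symmetry.
    J = simplex_moment(k - 1, 2 * a + 2) / (a + 1) ** 2
    return k * J / I


print(rayleigh_quotient(5, 0))  # prints 5/3
print(max(rayleigh_quotient(105, a) for a in range(50)) < 4)  # prints True
```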

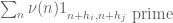

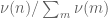

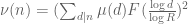

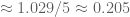

We now have a fairly good asymptotic understanding of $M_k$, with the bounds

holding for sufficiently large $k$. There is, however, still room to tighten the bounds on $M_k$ for small $k$; I’ll summarise some of the ideas discussed so far below the fold.

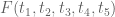

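For Ingredient 4, the basic tool is this:

Theorem 1 (Maynard). If $EH[\theta]$ is true and $M_k > \frac{2m}{\theta}$, then $H_m \leq H(k)$.

Thus, for instance, it is known that $M_{105} > 4$ and $H(105) = 600$, and this together with the Bombieri-Vinogradov inequality gives $H_1 \leq 600$. This result is proven in Maynard’s paper, and an alternate proof is also given in the previous blog post.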

We have a number of ways to relax the hypotheses of this result, which we also summarise below the fold.

— 1. Improved sieving —

A direct modification of the proof of Theorem 1 also shows:

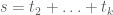

Here $M_k([0,\frac{\delta}{1/4+\varpi}]^k \cap {\cal R}_k)$ is defined for the truncated simplex $[0,\frac{\delta}{1/4+\varpi}]^k \cap {\cal R}_k$ in the obvious fashion. This allows us to use the MPZ-type bounds obtained in Polymath8a, at the cost of requiring the test functions $F$ to have somewhat truncated support. Fortunately, in the large $k$ setting, the functions we were using had such a truncated support anyway. It looks likely that we can replace the cube $[0,\frac{\delta}{1/4+\varpi}]^k$ by significantly larger regions by using the (multiple) dense divisibility versions of $MPZ[\varpi,\delta]$, but we have not yet looked into this.
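To get a feel for how restrictive the cube is, here is a small calculation. It assumes the Polymath8a condition $600\varpi + 180\delta < 7$ for $MPZ[\varpi,\delta]$, and assumes that the truncated version of Theorem 1 asks for $M_k$ of the truncated simplex to exceed $\frac{2m}{1/2+2\varpi}$ (i.e. Theorem 1 with $\theta = 1/2 + 2\varpi$); both are stated here as assumptions for illustration. The value $\varpi = 1/100$ is likewise just an example.

```python
from fractions import Fraction


def max_delta(varpi: Fraction) -> Fraction:
    """Supremum of delta allowed by the assumed constraint 600*varpi + 180*delta < 7."""
    return (7 - 600 * varpi) / 180


def cube_side(varpi: Fraction, delta: Fraction) -> Fraction:
    """Side length delta / (1/4 + varpi) of the cube [0, delta/(1/4+varpi)]^k."""
    return delta / (Fraction(1, 4) + varpi)


def threshold(m: int, varpi: Fraction) -> Fraction:
    """Value M_k must exceed, assuming the level theta = 1/2 + 2*varpi in Theorem 1."""
    return 2 * m / (Fraction(1, 2) + 2 * varpi)


varpi = Fraction(1, 100)  # hypothetical example value
delta = max_delta(varpi)
print(delta, cube_side(varpi, delta), threshold(1, varpi))  # prints: 1/180 5/234 50/13
```

So the threshold drops from 4 to $50/13 \approx 3.85$, while the cube side $5/234 \approx 0.021$ is only about twice the typical size $1/105 \approx 0.0095$ of a coordinate when $k = 105$, which is why the truncation bites for small $k$.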

It also appears that if one generalises the Elliott-Halberstam conjecture to also encompass more general Dirichlet convolutions

than the von Mangoldt function $\Lambda$ (see e.g. Conjecture 1 of Bombieri-Friedlander-Iwaniec), then one can enlarge the simplex ${\cal R}_k$ in Theorem 1 (and probably for Theorem 2 also) to the slightly larger region

$${\cal R}'_k := \{ (t_1,\ldots,t_k) \in [0,1]^k : \sum_{i \neq m} t_i \leq 1 \text{ for all } 1 \leq m \leq k \}.$$

Basically, the reason for this is that the restriction to the simplex ${\cal R}_k$ (as opposed to ${\cal R}'_k$) is only needed to control the sum

, but by splitting

into products of simpler divisor sums, and using the Elliott-Halberstam hypothesis to control one of the factors, it looks like one can still control error terms in the larger region ${\cal R}'_k$ (but this will have to be checked at some point, if we end up using this refinement). This is only likely to give a slight improvement, except when $k$ is small; from the inclusions

$${\cal R}_k \subset {\cal R}'_k \subset \frac{k}{k-1} {\cal R}_k$$

and a scaling argument we see that

$$M_k({\cal R}_k) \leq M_k({\cal R}'_k) \leq \frac{k}{k-1} M_k({\cal R}_k).$$

Assume EH. To improve the bound to

, it suffices to obtain a bound of the form

where

and

With given in terms of a cutoff function

, the left-hand side

can be computed as usual as

while we have the upper bound

and other bounds may be possible. (This is discussed in this comment.)

For higher $k$, it appears that similar maneuvers will have a relatively modest impact, perhaps shaving

or so off of the current values of

.
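The scaling argument used for the enlarged region can be written out in a few lines. This is a sketch: here ${\cal R}'_k$ is taken to be the set of points of $[0,1]^k$ where every sum of $k-1$ of the coordinates is at most 1, and $M_k(\Omega)$ denotes the ratio with $F$ supported on $\Omega$ and each $t_m$-integral taken over the corresponding fibre of $\Omega$. If $F$ is supported on $\lambda\Omega$ and $G(u) := F(\lambda u)$, the substitution $t = \lambda u$ gives

$$
\begin{aligned}
I_k(F) &= \int F(t)^2\,dt = \lambda^k \int G(u)^2\,du = \lambda^k I_k(G),\\
J_k^{(m)}(F) &= \int \Big( \int F(t)\,dt_m \Big)^2 \prod_{i \neq m} dt_i = \lambda^{k-1} \int \Big( \lambda \int G(u)\,du_m \Big)^2 \prod_{i \neq m} du_i = \lambda^{k+1} J_k^{(m)}(G),
\end{aligned}
$$

so every ratio gains a factor of $\lambda$, i.e. $M_k(\lambda\Omega) = \lambda M_k(\Omega)$. Extending by zero shows that $M_k(\Omega)$ is monotone under inclusion, and adding the $k$ constraints $\sum_{i \neq m} t_i \leq 1$ gives $(k-1)(t_1+\cdots+t_k) \leq k$, so ${\cal R}'_k \subset \frac{k}{k-1}{\cal R}_k$. Hence

$$M_k({\cal R}_k) \leq M_k({\cal R}'_k) \leq M_k\Big(\tfrac{k}{k-1}{\cal R}_k\Big) = \tfrac{k}{k-1}\, M_k({\cal R}_k).$$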

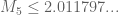

— 2. Upper bound on $M_k$ —

We have the upper bound

for any . To see this, observe from Cauchy-Schwarz that

The final factor is , and so

Summing in and noting that

on the simplex we have

and the claim follows.

Setting , we conclude that

for sufficiently large $k$. There may be some room to improve these bounds a bit further.
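For orientation, the crudest instance of this Cauchy-Schwarz step, with a constant weight, already gives the trivial bound $M_k \leq k$; the argument above presumably gains over this through a more carefully chosen weight. Writing $u := 1 - \sum_{i \neq m} t_i \in [0,1]$,

$$\Big( \int_0^{u} F\,dt_m \Big)^2 \leq u \int_0^{u} F^2\,dt_m \leq \int_0^{u} F^2\,dt_m,$$

and integrating over the remaining $k-1$ variables gives $J_k^{(m)}(F) \leq I_k(F)$ for each $m$, so $\sum_{m=1}^k J_k^{(m)}(F) \leq k\, I_k(F)$. A general positive weight $w$ replaces the first inequality by

$$\Big( \int_0^{u} F\,dt_m \Big)^2 \leq \Big( \int_0^{u} \frac{dt_m}{w(t_1,\ldots,t_k)} \Big) \Big( \int_0^{u} F^2\, w(t_1,\ldots,t_k)\,dt_m \Big),$$

and a good choice of $w$ is one for which the right-hand sides, summed over $m$ and integrated, are bounded by a multiple of $I_k(F)$ much smaller than $k$.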

— 3. Lower bounds on $M_k$ —

For small $k$, one can optimise the quadratic form

by specialising to a finite-dimensional space and then performing the appropriate linear algebra. It is known that we may restrict without loss of generality to symmetric $F$; one could in principle also restrict to the functions of the form

for some symmetric function

(indeed, morally at least $F$ should be an eigenfunction of $\sum_{m=1}^k L_m$), although we have not been able to take much advantage of this yet.
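As a minimal illustration of specialising to a finite-dimensional space, the sketch below uses functions that are constant on the cells of a uniform grid of side $h = 1/n$ lying entirely inside ${\cal R}_k$ (a basis chosen here for simplicity; the project's computations use polynomials in symmetric polynomials instead). For such $F$ each $t_m$-integral in $J_k^{(m)}$ is exactly $h$ times the sum of $F$ along a line of cells, so the ratio becomes $h\langle AF, F\rangle/\langle F, F\rangle$ with $A = \sum_m B_m$, where $B_m$ has an all-ones block on each line of cells parallel to the $t_m$ axis. The top eigenvalue of $hA$ is therefore a genuine lower bound for $M_k$, up to floating-point error.

```python
import itertools

import numpy as np


def discretised_Mk_lower_bound(k: int, n: int) -> float:
    """Lower bound for M_k from step functions on grid cells of side h = 1/n inside R_k.

    A cell with lower corner h*c lies inside R_k iff sum(c) + k <= n.  The Rayleigh
    quotient of a step function is h * <A F, F> / <F, F>, where A = sum_m B_m and B_m
    has an all-ones block on each line of cells parallel to the t_m axis.
    """
    h = 1.0 / n
    cells = [c for c in itertools.product(range(n), repeat=k) if sum(c) <= n - k]
    index = {c: i for i, c in enumerate(cells)}
    A = np.zeros((len(cells), len(cells)))
    for m in range(k):
        lines: dict[tuple[int, ...], list[int]] = {}
        for c in cells:
            # Cells that agree in every coordinate except the m-th lie on one line.
            lines.setdefault(c[:m] + c[m + 1:], []).append(index[c])
        for members in lines.values():
            A[np.ix_(members, members)] += 1.0
    # A is symmetric, so eigvalsh applies; it returns eigenvalues in ascending order.
    return h * float(np.linalg.eigvalsh(A)[-1])


print(discretised_Mk_lower_bound(2, 40))
print(discretised_Mk_lower_bound(3, 20))
```

The matrix has one row per cell, so this is only practical for very small $k$; it is meant to show the reduction to linear algebra, not to compete with the polynomial computations.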

For large $k$, we can use the bounds

for any

and any

with

; we can also start with a given $F$ and improve it by replacing it with

(normalising in

if desired), and perhaps even iterating and accelerating this process.
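Taking the replacement to be $F \mapsto \sum_m L_m F$ (an assumption here), iterating it is the power method for the top eigenvalue of $\sum_m L_m$; since that operator is positive semi-definite, the Rayleigh quotients of the iterates never decrease. A minimal sketch for $k = 2$, on a hypothetical discretisation by step functions on grid cells inside ${\cal R}_2$: the iteration is applied to $\sum_m L_m$ restricted to that step-function space (values are kept only on the grid cells), so each quotient is a genuine Rayleigh quotient and hence a lower bound for $M_2$.

```python
import numpy as np


def power_iteration_k2(n: int, steps: int) -> list[float]:
    """Rayleigh quotients of F, LF, L^2 F, ... for L = L_1 + L_2 compressed to step functions.

    F[i, j] is the value on the cell [i/n,(i+1)/n] x [j/n,(j+1)/n]; only cells with
    i + j <= n - 2 (those inside R_2) are used, and we start from their indicator.
    """
    h = 1.0 / n
    i, j = np.indices((n, n))
    mask = (i + j <= n - 2).astype(float)
    F = mask.copy()
    quotients = []
    for _ in range(steps):
        # L_1 integrates over t_1 (axis 0) and L_2 over t_2 (axis 1);
        # multiplying by mask projects back onto the step-function space.
        LF = h * (F.sum(axis=0, keepdims=True) + F.sum(axis=1, keepdims=True)) * mask
        # The cell area h^2 cancels between numerator and denominator.
        quotients.append(float((LF * F).sum() / (F * F).sum()))
        F = LF / np.linalg.norm(LF)  # normalise, as suggested above
    return quotients


qs = power_iteration_k2(n=40, steps=30)
print(qs[0], qs[-1])
```

The first quotient, for the indicator of the cells, is $2(2n-1)/(3n)$, which tends to the value $4/3$ given by $F = 1$; later iterates can only improve on it.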

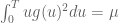

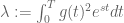

The basic functions we have been using take the form

where

and

Then , and

where are iid random variables on

with density

. By Chebyshev’s inequality we then have

if , where

and

A lengthier computation for gives

assuming .
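The ingredients of the Chebyshev step can be computed in closed form for a concrete cutoff. The sketch below assumes, purely for illustration, the cutoff $g(t) = 1/(1+At)$ on $[0,T]$ and takes the random variables to have density $g(t)^2/\int_0^T g^2$. It computes the mean $\mu$ and variance $\sigma^2$ and the Chebyshev tail bound $\mathbb{P}(X_1+\cdots+X_k \geq c) \leq \frac{k\sigma^2}{(c-k\mu)^2}$ for $c > k\mu$. The threshold $c = k$ in the example is hypothetical; it would correspond to leaving the simplex if the coordinates are rescaled as $t_i = X_i/k$, matching test functions supported on $[0,T/k]^k$.

```python
import math


def cutoff_moments(A: float, T: float) -> tuple[float, float]:
    """Mean and variance of X with density proportional to g(t)^2, g(t) = 1/(1 + A t) on [0, T].

    The cutoff g is an illustrative assumption, not necessarily the project's choice.
    """
    U = 1.0 + A * T
    m0 = T / U                                      # int_0^T g^2
    m1 = (math.log(U) + 1.0 / U - 1.0) / A**2       # int_0^T t g^2
    m2 = (U - 2.0 * math.log(U) - 1.0 / U) / A**3   # int_0^T t^2 g^2
    mean = m1 / m0
    return mean, m2 / m0 - mean**2


def chebyshev_tail(k: int, mean: float, var: float, c: float) -> float:
    """Chebyshev bound for P(X_1 + ... + X_k >= c), X_i iid, valid when c > k * mean."""
    if c <= k * mean:
        raise ValueError("need c > k * mean")
    return min(1.0, k * var / (c - k * mean) ** 2)


mean, var = cutoff_moments(A=5.0, T=2.0)  # hypothetical parameters
print(mean, var, chebyshev_tail(k=50, mean=mean, var=var, c=50.0))
```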


22 November, 2013 at 7:51 pm

Terence Tao

It might be time to start collecting upper and lower bounds for $M_k$ for small k (say, up to k=105) to get a sense of how much room there is for improvement here, and what one can hope to achieve from the MPZ estimates (or from using fancier symmetric functions). The fact that $M_k$ is now known to not grow any faster than

is a little disappointing for the large $k$ theory, but perhaps there is yet another way to modify the sieve to break this barrier (much as the GPY sieve, for which M_k never exceeded 4, was modified into the current sieve introduced by James). The only “hard” obstruction I know of is the parity obstruction, which basically stems from the belief that

is negligible for just about any sieve under consideration, which means in probabilistic terms that

and in particular

This translates to the trivial bound

in the current context, which is well short of the truth; it seems there is a substantial gap between “having an odd number of prime factors” and “is prime” when one is working with k-tuple sieves.

22 November, 2013 at 9:24 pm

Pace Nielsen

I thought I’d play around with your heuristics for

.

If we plug in Maynard’s function

(where $P_1, P_2$ are the first two power-symmetric polynomials) into the upper bound for

(and dropping little-o terms) we get that

. We needed to beat

to force

down one.

I imagine that there are better choices for

in this context, and I’ll start thinking about that.

22 November, 2013 at 10:11 pm

Terence Tao

One can presumably get some gain by enlarging the support of F from the simplex to the larger (but more complicated) region

. (The cutoff

in the formula for

would be similarly modified.) One would have to do a certain amount of fiddly numerics, though, to integrate on this more messy polytope.

Also, we’ve broken the symmetry a bit, and it’s no longer necessarily optimal to use F that are symmetric in all five variables; instead, it should just be symmetric in

and in

. But perhaps we get a bit more flexibility with this broken symmetry.

23 November, 2013 at 8:07 am

Terence Tao

In a previous comment, James indicated that if we simply inserted our best confirmed value

into the low k machinery, ignoring delta, we could potentially reduce k from 105 to 68 (and presumably we do a little better than this if we use the tentative value

). The main thing blocking us from making this a rigorous argument is that in order to use $MPZ[\varpi,\delta]$, we have to use test functions F that are not only restricted to the simplex, but also to the cube $[0, \frac{\delta}{1/4+\varpi}]^k$. This is not a serious problem for the large k argument (because the test functions were already by design supported on the cube $[0,T/k]^k$) but is so for the low k argument (because we are instead using polynomials of the symmetric polynomials P_1 and P_2).

However, it could be, as was the experience in Zhang and Polymath8a, that the contributions outside of this cube are exponentially small and thus hopefully negligible (although we have to be careful because our values of k are now small enough that “exponentially small” does not _automatically_ imply negligibility any longer). Basically we could follow arguments similar to that in Section 4 of the Polymath8a paper, writing the truncated F as

where

is untruncated and

is the portion outside of the cube, and use the inequality

and upper bound the error term

by controlling the contribution when a given coordinate

exceeds

. Here the point is that this is a small portion of the simplex; by volume, it is something like

.

At present,

are so small that this is actually not so negligible, but we can then pull out the other Polymath8a trick, which is to exploit dense divisibility and introduce another parameter $\delta'$. This allows us to enlarge the cube $[0, \frac{\delta}{1/4+\varpi}]^k$ to something like the portion of $[0, \frac{\delta'}{1/4+\varpi}]^k$ in which

(this is basically Lemma 4.12(iv) from Polymath8a), which should lead to better error terms as per Polymath8a.

23 November, 2013 at 12:50 pm

Terence Tao

A little bit more elaboration of the above idea, using some of the Cauchy-Schwarz arguments from the upper bound theory.

Let

be some symmetric test function on the simplex, and let

be the restriction of

to the cube $[0,\tilde \delta]^k$, where

. Then

where

is the portion of

outside of the cube. We then have

The last term we can bound by

. Now we try to upper bound

. By symmetry this is

The integrand is only non-zero when

and

. By Cauchy-Schwarz we have

and

and so by the AM-GM inequality we can bound (1) by

for any

, which symmetrises to

(here we use the disjoint supports of the various

integrals), so on optimising in

we obtain a bound of

so one just needs a reasonably good bound on

(compared with

) to be able to neglect these errors. As I said before, the ratio of

to

is likely to be something like

; this doesn’t look too good for the values of k we are considering (i.e. below 105), but maybe if we split into two deltas as before, then it won’t be so bad.

23 November, 2013 at 8:32 am

Eytan Paldi

In the above definition of

, the subscript

in

should be replaced by

. (with similar correction in the proof of the upper bound on

.)

[Corrected, thanks – T.]

23 November, 2013 at 10:29 am

Eytan Paldi

This typo still appears in several places:

1. In the definition of

,

should be

and the last

in the integral should be

.

2. In the proof of the upper bound on

, in the first two integrals

should be

.

[Corrected, thanks – T.]

23 November, 2013 at 9:30 am

Eytan Paldi

In the proof of the upper bound on

, it should be “noting that

”.

[Corrected, thanks – T.]

23 November, 2013 at 9:58 am

Terence Tao

Here is an idea that might not pan out, but I’m throwing it out there anyways.

Take k=3 for brevity. Right now, we need to control sums such as

, where

is a divisor sum basically of the form

We can then set

and are looking at a sum of the form

We can compare the inner sum against its expected value $E_{d_2,d_3} = \frac{x}{\phi([d_2,d_3])}$, and are left with dealing with an error term

Now at this point, what Zhang and Polymath8a do is to take absolute values and start looking at something roughly of the shape

where

came from $[d_2,d_3]$ and

is something formed from the Chinese remainder theorem (and thus could potentially be quite large). But this throws away two things: firstly, that the residue class

came from two residue classes which were bounded, and secondly, that the coefficients

were not completely arbitrary, but instead had a somewhat multiplicative structure (basically of the form

). It may be that if we delve into the Type I/II estimates, we can exploit this extra structure to go beyond the exponents that we currently do. In particular, the coefficients

that show up in Polymath8a roughly seem to have the shape

(times smooth factors), and so

has some smoothness in r which looks helpful (but somewhat difficult to exploit).

23 November, 2013 at 11:38 am

Aubrey de Grey

Am I right in assuming that the recent “Deligne-free” values from Pace that are shown on the “world records” page are derived assuming only B-V, following James’s paper? If so, perhaps it would be of interest to do as in Polymath8a and have a look at what k0 values the Deligne-free [varpi,delta] derived on July 30th gives rise to in the post-Maynard universe?

25 November, 2013 at 8:19 am

Terence Tao

This is something we can certainly do without much difficulty once our results have stabilised, but at present the four benchmarks we have (m=1 from BV, m=1 using Polymath8a, m=2 from BV, m=2 using Polymath8a) are covering enough of the “parameter space” that any further benchmarking would probably just be confusing (although perhaps a m=3 case, say using the best Polymath8a result, might be worth looking at).


23 November, 2013 at 6:44 pm

Eytan Paldi

The upper and lower bounds on $M_k$ may be improved (in particular for small $k$) by generalizing the basic function

(e.g. by

) and optimizing over the additional parameters (e.g.

) as well.

24 November, 2013 at 5:05 am

Eytan Paldi

I don’t understand (8.9) in Maynard’s paper (the integral is divergent.)

24 November, 2013 at 5:39 am

Pace Nielsen

There is a minor typo. He just dropped

from the integral.

24 November, 2013 at 5:50 am

Pace Nielsen

To see the equality in (8.9) after that factor is reinserted, just integrate each summand separately, and count.

By the way, I had your very question earlier. There seems to be a second typo in the line above (8.13). It says that

, but I believe the RHS should be

.

24 November, 2013 at 6:02 am

Eytan Paldi

Thanks for the explanation!

25 November, 2013 at 2:53 pm

James Maynard

Just to confirm: Everything that Pace says is correct. Both of these are typos in the paper – sorry for the confusion this caused.

If anyone happens to spot similar typos I’d quite like to hear – either emailing me or mentioning on here would be appreciated!

24 November, 2013 at 6:49 pm

Terence Tao

I can now modify the Cauchy-Schwarz argument to prove the clean upper bound

which saves a

or so from the previous bound.

The key estimate is

Assuming this estimate, we may integrate in

to conclude that

which symmetrises to

giving (1).

It remains to prove (2). By Cauchy-Schwarz, it suffices to show that

But writing

, the left-hand side evaluates to

as required.

This also suggests that extremal F behave like multiples of

in the

variable for typical fixed choices of

.

In the converse direction, I have an asymptotic bound

for sufficiently large k. Using the notation of the previous post, we have the lower bound

whenever

is supported on $[0,T]$,

, and

are independent random variables on [0,T] with density

. We select the function

with

, and

for some

to be chosen later. We have

and

and so

Observe that the random variables

have mean

The variance

may be bounded crudely by

Thus the random variable

has mean at most

and variance

, with each variable bounded in magnitude by

. By Hoeffding’s inequality, this implies that

is at most

with probability at most

for some absolute constant

. If we set

for a sufficiently large absolute constant

, we thus have

and thus

as claimed.

Hoeffding’s bound is proven by the exponential moment method, which more generally gives the bound

for any

, which is a somewhat complicated, but feasible-looking, optimisation problem in A, T, s. (Roughly speaking, one expects to take A close to

, T a bit smaller than

, and

roughly of the order of

.)

25 November, 2013 at 5:14 am

Eytan Paldi

As an interesting application of this upper bound, we have

1. , so under EH the current method is limited to

.

2. , so under EH[1/2] the current method is limited to

.

25 November, 2013 at 8:49 am

Aubrey de Grey

Or 42 under the polymath8a results, yes? (I believe the confirmed and tentative versions both give this value.) Or am I overinterpreting earlier posts concerning the derivability of M from varpi?

27 November, 2013 at 2:09 pm

Eytan Paldi

Unfortunately, the particular

above (used for the derivation of the upper bound) is not in

(since the integration of

with respect to

gives

– which is not integrable over

) – so this

can’t be used as a basis function for lower bounds evaluation.

It should be remarked that

– since it has only logarithmic singularity.

7 December, 2013 at 9:47 am

Eytan Paldi

For

the upper bound is

, while from (7.19) in Maynard’s paper

– showing that the upper bound is surprisingly good even for

.

14 December, 2014 at 7:28 pm

Eytan Paldi

It seems that there is an error in the above derivation of the lower bound since

(not

as in the above derivation!)

[Corrected, thanks – there was a similar factor missing in the

bound which cancels out the error. -T.]

1 February, 2015 at 7:44 pm

Eytan Paldi

There is another error in the lower bound derivation:

for the probability estimate – which is clearly too crude!

It seems that Hoeffding’s inequality (which is independent of the variance) gives

The problem in using this inequality is that the denominator in the exponent is

while the (much smaller!) numerator is only

.

Fortunately, by using the above variance estimate, Bennett’s inequality (or even Bernstein’s inequality) gives the probability estimate

which implies the (slightly weaker) lower bound

30 December, 2014 at 3:25 pm

Eytan Paldi

For the last lower bound on

, it is easy to verify that the lower bound is maximized by (temporarily) fixing

and maximizing the integral

over

under the two (integral) constraints that

and

are fixed. It is easy to show that the optimal function

has the form

for some

.

Fortunately, the resulting integrals representing

(needed for the lower bound) are simple elementary functions of

– enabling simpler optimization of the lower bound over the parameters

.

For large

, it seems that the parameters

can be reparametrized by

where

are the new parameters.

(Unfortunately, it seems that asymptotically we still get the above lower bound implied by Hoeffding’s inequality.)

24 November, 2013 at 9:56 pm

xfxie

Seems k0 can drop some, assuming the implementation is correct.

With

: k0 = 339

Without using the

inequalities: k0 = 385

25 November, 2013 at 10:07 am

Terence Tao

Two small thoughts:

1. The Cauchy-Schwarz argument giving upper bounds on

might more generally be able to give upper bounds for the quantity

for any Selberg sieve

regardless of the choice of coefficients

, assuming that the error terms are manageable (which presumably means something like

where

is the level of distribution) and also some mild size conditions on the coefficients (e.g. all of size

). Upper bounds are of course less directly satisfying than lower bounds (particularly for the purposes of advancing the “world records” table) but would help give a picture of what more one can expect to squeeze out of these methods. (And we also have some possible ways to evade these upper bounds, e.g. by utilising the two-point correlations

as discussed previously; one could also hope to use other sieves than the Selberg sieve, though I don’t see at present what alternatives would be viable.)

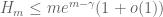

2. As mentioned in Maynard’s paper, the method here would also allow one to produce small gaps in other sets than the primes, as long as one had a non-trivial level of distribution

. For instance, if one replaced primes by semiprimes (products of exactly two primes), Goldston-Graham-Pintz-Yildirim obtained the bounds

and

unconditionally. These bounds are already quite good (semiprimes are significantly denser than primes, which is why the results here are better than those for primes) but perhaps there is further room for improvement.

25 November, 2013 at 10:48 am

Terence Tao

Just did a quick calculation: using Maynard’s sieve (with T equal to, say,

) the quantity

looks to be roughly on the order of

(compared to

in the case of primes), by counting products of two primes each of size at least

. (For semiprimes with smaller factors than this, the sieve weight

becomes smaller.) So this would give something like

for semiprimes, and more generally

for products of exactly r primes (with implied constants depending on r).

Actually, in many ways, the numerology for our current problem with primes is very similar to the GGPY numerology for semiprimes.

25 November, 2013 at 8:06 pm

Terence Tao

Looks like the Cauchy-Schwarz argument does indeed block the Selberg sieve from doing much better than primes in a k-tuple, assuming that the weights are fairly uniform in magnitude, which among other things, allows one to discard the small fraction of weights in which the heuristic

primes in a k-tuple, assuming that the weights are fairly uniform in magnitude, which among other things, allows one to discard the small fraction of weights in which the heuristic  mentioned in Section 6 of Maynard’s paper fails. The analogue of the ratio

mentioned in Section 6 of Maynard’s paper fails. The analogue of the ratio  is then (in the notation of the paper)

is then (in the notation of the paper)

Cauchy-Schwarz then gives

for any  (using the fact that  is constrained to be coprime to  ); using this and summing in  (using the constraint  ) shows that the ratio is of size  , which for bounded choices of A gives a bound of  . If one works this argument more carefully, we get the same upper bounds that we do for the integral variational problem  .

There is a potential loophole in using sieve weights that focus on those moduli r with an unusually large number of prime factors (so that  are no longer close to each other), but this is a very sparse set of moduli, to the point where the error terms in the sieve analysis now overwhelm the main terms. Also, intuitively one should not be able to get a good sieve by using only a sparse set of the available moduli.

To me, this suggests that the main remaining hope for breaking the logarithmic barrier is to work with a wider class of sieves than the Selberg sieve. In principle there are a lot of other possible sieves to use (e.g. the beta sieve or other combinatorial sieves), but numerically these sieves tend to underperform the Selberg sieve for these sorts of problems (enveloping the primes in a sieve weighted on almost primes in order to make the density of the primes as large as possible). It’s tempting to try to perturb the Selberg sieve in some combinatorial fashion, but the trick is to keep the sieve non-negative (which is crucial in our argument, unless one can somehow get excellent control on the negative portion of the sieve); once one leaves the Selberg sieve form  , this becomes quite non-trivial.

26 November, 2013 at 3:08 am

Eytan Paldi

Perhaps there is some generalization to a sieve combining translates of several admissible tuples.

26 November, 2013 at 11:06 am

Terence Tao

I’m now leaning towards the view that any replacement for the Selberg sieve will also encounter a logarithmic-type barrier, in that the density  is unlikely to be much higher than  . Actually, it is rather miraculous that we get the logarithmic gain at all; a naive heuristic computation (which I give below) suggests that  should be the threshold.

To motivate things, suppose we start with a one-dimensional sieve  , where  are some coefficients for  up to some height  . In the Selberg sieve case this divisor sum is the square of some other divisor sum  to ensure non-negativity, but we will not assume here that the sieve is of Selberg type.

The primality density  of such a sieve cannot be much larger than  . This is because all  -rough numbers (numbers with no prime factor less than  ) are assigned the same value by the sieve  , and by the asymptotics for the Dickman-de Bruijn function, the primes in $[x,2x]$ form a proportion of about  of all the  -rough numbers in $[x,2x]$.
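A quick numerical sanity check of this heuristic, as a sketch under our own assumptions: we take x = 10^6 and call the sieve height R = 100 (both choices, and the name R, are ours, not from the comment), count the R-rough numbers and the primes in [x,2x], and compare their ratio with a crude prediction obtained by giving rough numbers density ∏_{p<R}(1 − 1/p) and primes density 1/log n with n ≈ 1.5x. By Mertens' theorem that prediction is roughly e^γ log R / log x (γ the Euler-Mascheroni constant), so the primes are only a small fraction of the rough numbers once R is a small power of x.

```python
import math

def prime_flags(n):
    """Sieve of Eratosthenes: flags[m] == 1 iff m is prime, for 0 <= m < n (n >= 2)."""
    flags = bytearray([1]) * n
    flags[0] = flags[1] = 0
    for p in range(2, math.isqrt(n - 1) + 1):
        if flags[p]:
            flags[p * p::p] = bytearray(len(range(p * p, n, p)))
    return flags

def rough_and_prime_counts(x, R):
    """Return (#R-rough numbers, #primes) in [x, 2x]; R-rough = no prime factor < R."""
    small = prime_flags(R)
    rough = bytearray([1]) * (x + 1)           # rough[i] describes n = x + i
    for p in range(2, R):
        if small[p]:
            start = (-x) % p                   # first index i with p | x + i
            rough[start::p] = bytearray(len(range(start, x + 1, p)))
    n_rough = sum(rough)
    n_prime = sum(prime_flags(2 * x + 1)[x:2 * x + 1])
    return n_rough, n_prime

def mertens_prediction(x, R):
    """Heuristic ratio #primes / #rough ~ 1 / (log(1.5 x) * prod_{p < R} (1 - 1/p))."""
    small = prime_flags(R)
    prod = 1.0
    for p in range(2, R):
        if small[p]:
            prod *= 1 - 1 / p
    return 1 / (math.log(1.5 * x) * prod)

x, R = 10**6, 100
n_rough, n_prime = rough_and_prime_counts(x, R)
ratio = n_prime / n_rough
print(n_rough, n_prime, round(ratio, 4), round(mertens_prediction(x, R), 4))
```

Every prime in [x,2x] is automatically R-rough here because x > R, so the ratio is a genuine proportion; shrinking R (a sparser sieve) drives it down roughly like log R.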

Now we look at a multidimensional sieve  , where again we do not assume the sieve to be of Selberg type, but we impose a restriction of the shape  , since we are unlikely to control the sieve error terms without such a hypothesis, even assuming the strongest Elliott-Halberstam-type conjectures. Heuristically, this limits each of the  to be typically of size  , and then the one-dimensional argument suggests that the primality density  should not be much larger than  for each  .

Now the Maynard sieve does do a bit better than this, because each factor  is allowed to be a bit higher than  on occasion (there is a weight roughly of the shape  in the sieve), since one can rely on concentration of measure (or the law of large numbers, if you wish) to keep the product  of a reasonable size  . This occasional use of higher moduli seems to be just barely sufficient to diminish the sieve  on some of the  -rough numbers other than the primes, boosting the prime density from  to  . But it seems unlikely that a clever choice of sieve weights could do much better than this.

26 November, 2013 at 12:51 pm

James Maynard

Do you have a heuristic which explains the gain in the log, without looking too much at the sieve functions themselves?

I ask because I’d been working under the same heuristic that you mention above, and it surprised me that the modified weights were able to win by a full factor of a log. I don’t have any good heuristic explanation as to how one might be able to `see’ that these weights do this well a priori. Obviously, although logs are critical in the small-gaps argument, they are very small in comparison to  , and so we shouldn’t necessarily be surprised that a heuristic breaks down at this level of accuracy.

That said, I liked this heuristic because it seemed to work well with similar problems. It seems to explain quite naturally the results of GGPY/Thorne on small gaps between numbers with  prime factors, results on sifting limits, and results on the number of prime factors of  . (Some of these are more `robust’ to a log factor than others.) The `superiority’ of the Selberg sieve over other sieves for larger  seemed to me to be just that the implied constants in the  -terms were better.

26 November, 2013 at 9:17 pm

Terence Tao

Unfortunately I don’t have much of an explanation for this. At a formal level it shares a lot of similarities with the logarithmic divergences that show up in the endpoint Sobolev inequalities in harmonic analysis, in that one gets contributions from logarithmically many dyadic scales (which, in this case, would be something like the contribution of moduli between  and  for  ), but the way in which these scales interact seems rather specific to the Selberg sieve, and it is possible that some other sieve might give a different power of log (or, more likely, no logarithmic gain at all).

25 November, 2013 at 11:55 am

Aubrey de Grey

Asking the converse question: is there any N for which there are already known to be infinitely many x such that both x and x+2 are products of exactly N primes? If not, is it plausible that Maynard’s methods can be used to identify such an N? (Or if so, to reduce the known N?)

25 November, 2013 at 12:09 pm

Terence Tao

No, this is blocked by the parity problem. Given any sieve candidate  , we expect  and  to be negligible (this is part of the “Mobius pseudorandomness heuristic”), which basically means that if  is drawn at random from the  distribution, then  has a probability  of having an even number of factors, and  of having an odd number of factors. Similarly for  . The upshot of this is that one cannot use purely sieve-theoretic methods to prescribe the parity of the number of prime factors of both  and  . But it turns out (as worked out by Bombieri) that on EH, one can basically do anything that does not run into this parity obstruction; for instance, one can find infinitely many primes n such that n+2 is the product of either 2013 or 2014 primes. (This should be discussed in Friedlander-Iwaniec’s “Opera del Cribro”, though I don’t have this reference handy at present.)

Breaking the parity barrier for twins would be an absolutely huge breakthrough, even if conditional on Elliott-Halberstam. But it’s going to take a radically new idea; it’s not just a matter of tweaking the sieves.
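The pseudorandomness heuristic can be checked numerically in its crudest form by taking the trivial sieve candidate ν ≡ 1 (our simplification; genuine sieve weights are far more concentrated, and this says nothing about them directly). The sketch below uses the Liouville function λ(n) = (−1)^Ω(n), with Ω(n) counting prime factors with multiplicity, and estimates the averages of λ(n) and λ(n+2) over n in [x,2x], together with the fraction of such n having an even number of prime factors. The window x = 10^5 is an arbitrary choice.

```python
import math

def big_omega_table(limit):
    """Omega(n), prime factors counted with multiplicity, for 0 <= n <= limit."""
    spf = list(range(limit + 1))               # smallest prime factor of each n
    for p in range(2, math.isqrt(limit) + 1):
        if spf[p] == p:                        # p is prime
            for m in range(p * p, limit + 1, p):
                if spf[m] == m:
                    spf[m] = p
    omega = [0] * (limit + 1)
    for n in range(2, limit + 1):
        omega[n] = omega[n // spf[n]] + 1      # peel off the smallest prime factor
    return omega

def parity_statistics(x):
    """Averages of lambda(n), lambda(n+2), and the even-Omega fraction, over n in [x, 2x]."""
    omega = big_omega_table(2 * x + 2)
    liouville = [(-1) ** w for w in omega]
    window = range(x, 2 * x + 1)
    size = len(window)
    mean_n = sum(liouville[n] for n in window) / size
    mean_n2 = sum(liouville[n + 2] for n in window) / size
    even_fraction = sum(1 for n in window if omega[n] % 2 == 0) / size
    return mean_n, mean_n2, even_fraction

print(parity_statistics(10**5))
```

Both averages come out close to zero, so the even/odd split is close to 1/2 for n and for n+2 separately; the parity problem is that sieve weights cannot do much better than this balance.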

25 November, 2013 at 11:21 pm

Anonymous

I was wondering if you could elaborate on the intuition for why it will take a radically new idea (similarly, I think I remember you saying that proving or disproving P versus NP will require a new idea; how do you know?). Is it just that a lot of people have tried to solve these problems using many different techniques and failed, or is it something else? Thanks.

26 November, 2013 at 9:13 am

Terence Tao

I discuss the parity problem further in my old blog post https://terrytao.wordpress.com/2007/06/05/open-question-the-parity-problem-in-sieve-theory/

27 November, 2013 at 12:00 am

Anonymous

Thanks.

25 November, 2013 at 12:43 pm

Pace Nielsen

Three days ago I started running some computations. Here are the results. Throughout, I will be assuming EH.

————————–

Take  . Let  be an arbitrary polynomial function of total degree  , which is symmetric in  and in  separately. Working over the original simplex  of Maynard, set

Using a numerical optimizer (so results are not necessarily optimal, but should be close), we get:

For  ,  .  ,  .

For

It is not difficult to increase  a little more. Probably up to  is feasible.

————————–

Take  . Let  be an arbitrary polynomial function of total degree  , which is symmetric in the variables. Working over the original simplex  of Maynard, and again using a numerical optimizer, we get:

For  ,  .  ,  .  ,  .

For

For

This is already very close to the upper bound of  recently discovered by Terry.

————————-

I then tried to do the  case for the new simplex  , but the numerics give weird answers. For instance, when  , we get  . The reason I didn’t post these computations earlier is that I’ve been trying to figure out where my error is. After two days I still have not been able to track down my error. I wrote up a LaTeX document explaining the computation, and I’m willing to email it (and the Mathematica file) to anyone who is interested.

25 November, 2013 at 2:34 pm

James Maynard

I reran the computations I alluded to at the beginning of the previous post.

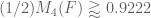

My calculations agree with yours for the original simplex (I get the slightly better bound  when  .)

This is for  , using the enlarged simplex  . As you have done, I’m taking  to be an arbitrary symmetric polynomial of degree  on  , and then I’m using the linear-programming method from my paper to calculate the eigenvector which corresponds to the optimal choice of coefficients of  .

I then get the bounds

This was my reason for saying using the enlarged simplex gets `about half way’ to obtaining gaps of size 8: we’ve improved from a lower bound of about 0.92 to about 0.96, and we want to get it to 1.

It appears that the convergence as  increases is noticeably slower for the enlarged simplex than for the original simplex.

I can give more details of how I did the calculations if interested.

25 November, 2013 at 4:03 pm

Pace Nielsen

When I figure out where my computational error is, now I’ll know what numerics to look for. Thank you!

P.S. The reason I didn’t send you those typos I mentioned above is that I’m reading through your paper with Roger Baker (here at BYU), and he is compiling a list of the typos we run across. He will be sending that to you, probably in a couple of weeks.

25 November, 2013 at 7:35 pm

James Maynard

Oops, there was a small typo in my program. The corrected bounds I get are

and the convergence with  seems good.

Summary of my calculation:  , we can notice that if  is symmetric, then  is symmetric in  . This means the region with  will contribute  of the total. In this region, it is straightforward to put exact limits on each integration variable. We get

We also get a similar expression for  . Again, these are quadratic forms in the coefficients of  , and so we can find the optimal choice of  of given degree by calculating the largest eigenvalue of a matrix.
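To make the "quadratic forms, largest eigenvalue" step concrete, here is a minimal sketch for the toy case k = 2 on the original simplex {x, y ≥ 0, x + y ≤ 1}, with I(F) and J_1(F), J_2(F) the quadratic forms from the definition of M_k at the top of the post (J_1 integrates out x, J_2 integrates out y). Several simplifications are ours: F ranges over all polynomials of total degree at most d (not just symmetric ones), we use the original rather than the enlarged simplex, and we solve with NumPy (assumed available). Exact monomial integrals give the two Gram matrices, and the best ratio at degree d is the top eigenvalue of the generalised problem J c = λ I c, computed via a Cholesky factorisation of I.

```python
from fractions import Fraction
from math import factorial

import numpy as np

def monomials(d):
    """Exponent pairs (a, b) of the monomials x^a y^b with total degree <= d."""
    return [(a, b) for a in range(d + 1) for b in range(d + 1 - a)]

def beta(a, b):
    """Exact integral of y^a (1 - y)^b over [0, 1]."""
    return Fraction(factorial(a) * factorial(b), factorial(a + b + 1))

def gram_matrices(d):
    """Matrices of I(F) and J_1(F) + J_2(F) in the monomial basis of degree <= d."""
    basis = monomials(d)
    n = len(basis)
    I = np.zeros((n, n))
    J = np.zeros((n, n))
    for r, (a1, b1) in enumerate(basis):
        for s, (a2, b2) in enumerate(basis):
            a, b = a1 + a2, b1 + b2
            # I: integral of x^a y^b over the simplex = a! b! / (a + b + 2)!
            I[r, s] = float(Fraction(factorial(a) * factorial(b), factorial(a + b + 2)))
            # J_1: the x-integral of x^a1 over [0, 1 - y] is (1 - y)^(a1 + 1) / (a1 + 1);
            # multiply two such fibre integrals and integrate in y.
            j1 = beta(b, a + 2) / ((a1 + 1) * (a2 + 1))
            # J_2: the same computation with the roles of x and y swapped.
            j2 = beta(a, b + 2) / ((b1 + 1) * (b2 + 1))
            J[r, s] = float(j1 + j2)
    return I, J

def best_ratio(d):
    """Max of (J_1 + J_2)(F) / I(F) over polynomials F of degree <= d."""
    I, J = gram_matrices(d)
    L = np.linalg.cholesky(I)        # I = L L^T, with I positive definite
    Linv = np.linalg.inv(L)
    C = Linv @ J @ Linv.T            # symmetric, same eigenvalues as I^{-1} J
    return float(np.linalg.eigvalsh(C)[-1])

for d in range(5):
    print(d, round(best_ratio(d), 6))
```

For d = 0 (constant F) the ratio is (2/3)/(1/2) = 4/3; the values can only increase with d because the polynomial spaces are nested, and they stay below 2 since each J_m(F) ≤ I(F) by Cauchy-Schwarz on fibres of length at most 1.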

26 November, 2013 at 2:14 am

Bogdan

I am a bit confused: the upper bound of 0.924 is reported in the post of 25 November, 2013 at 5:14 am, and the conclusion was that “so under EH the current method is limited to k_0=5”, and now you get the lower bound of 0.9689! Is this because of “using the enlarged simplex”?

26 November, 2013 at 11:24 am

James Maynard

Bogdan: Yes, the upper bound of 0.924 was for functions whose support was restricted to the smaller simplex  . By comparison, we are getting a lower bound of 0.922 in this case, so these seem to be in good agreement.

If we use a modification of the original method (such as extending the support to the slightly larger simplex  or incorporating the  terms), then this upper bound no longer applies. In particular, the lower bound of 0.96 using the larger simplex isn’t in contradiction with the earlier bound.

26 November, 2013 at 5:29 pm

Eytan Paldi

The fast convergence (using polynomials) indicates the (possible) existence of a smooth maximizer.

25 November, 2013 at 3:23 pm

Anonymous

Comments (from a non-mathematician who is interested in mathematics):

In Maynard’s paper, it is stated that  , but in the post, it is stated that  .

25 November, 2013 at 4:28 pm

Terence Tao

The bound  is unconditional, but the improved bound  is available if one assumes the Elliott-Halberstam conjecture; this isn’t explicitly stated in Maynard’s paper, but it follows easily from the methods of the paper (so if James is revising the paper anyway, one may as well add in that remark).

25 November, 2013 at 3:52 pm

Anonymous

@Maynard:

* The subscript “ ” should be “ ”.

* When typing maps, one should use “\colon” instead of “:” in order to get the correct spacing around the colon.

(Typographical comments, valid throughout the paper.)

26 November, 2013 at 8:28 am

Aubrey de Grey

I’m wondering whether it is possible (or desirable) to reformulate the progress being made here on M_k in a manner that more closely resembles prior work, in particular Polymath8a. Taking things down to their barest essentials, I gather that we can informally say that Maynard and polymath8b have improved the k for which DHL[k,m+1] is implied by EH[theta] (but see below) from an exponentially decreasing function of (theta – m/2) (i.e. GPY) to something on the order of e^(2m/theta). I appreciate that there are good mathematical reasons for working centrally with the quantity M_k, but it’s not clear to me whether the evolving bounds on M as a function of k can be “inverted” to give bounds on k as a direct function of m and theta. If that were possible, I think that for expository purposes it would be of great interest.

Also, I have a somewhat similar question relating to polymath8a. Is it possible to state an explicit version of EH[theta] (let’s call it EH’[theta]) that is implied (up to a value of theta that is a function of i, varpi and delta) by MPZ(i)[varpi,delta], and that also suffices to imply DHL[k,m+1] for sufficiently large k? If that were possible, it seems like it would constitute a nicely clean way, again for expository purposes, to separate the progress in reducing delta from the progress in reducing k for a given theta (and m). Can someone please clarify this?

26 November, 2013 at 8:43 am

Terence Tao

The various upper and lower bounds on Maynard’s sieve all correspond to asymptotics of the form $DHL[ k, (\frac{\theta}{2} + o(1)) \log k ]$ for large $k$, which in particular implies  for large  ; the only difference between them lies in the precise nature of the o(1) error term. Once the bounds stabilise, one can of course work these things out more accurately.
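For the inversion asked about above, here is a short worked version. It assumes only the asymptotic form DHL[k, (θ/2 + o(1)) log k] quoted above, read as "DHL[k, j] holds for all j ≤ (θ/2 − ε_k) log k, with ε_k → 0 as k → ∞"; that reading, and the names ε_k and ε, are ours, and the precise form of the o(1) term (which is what distinguishes the various bounds) is not used.

```latex
% Fix \varepsilon > 0 and take k = \lceil \exp((\tfrac{2}{\theta} + \varepsilon)(m+1)) \rceil. Then
\left(\tfrac{\theta}{2} - \varepsilon_k\right)\log k
  \;\ge\; \left(\tfrac{\theta}{2} - \varepsilon_k\right)\left(\tfrac{2}{\theta} + \varepsilon\right)(m+1)
  \;=\; \left(1 + \tfrac{\theta\varepsilon}{2} - \varepsilon_k\left(\tfrac{2}{\theta} + \varepsilon\right)\right)(m+1)
  \;\ge\; m+1
% for all sufficiently large m, because k \to \infty with m and so \varepsilon_k \to 0.
% Since \varepsilon > 0 was arbitrary, this gives
DHL[k, m+1] \quad \text{for some } k = \exp\!\left(\left(\tfrac{2}{\theta} + o(1)\right) m\right), \qquad m \to \infty.
```

This matches the informal e^(2m/theta) description, with the o(1) in the exponent absorbing both the error term in the sieve bounds and the shift from m to m+1.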

The Bombieri-Vinogradov theorem already implies $DHL[k,m+1]$ for sufficiently large k and, being a known theorem, is thus implied by any MPZ-type statement. We do have some implications that do a little better than this by exploiting an MPZ hypothesis, using a cutoff function F for Maynard’s sieve that is supported in an appropriate cube; again, the asymptotics for large m or large k are as above (in particular, the role of delta is asymptotically negligible), but the situation seems to be more complicated in the non-asymptotic regime, e.g. when m=1 or m=2.

26 November, 2013 at 9:36 am

Aubrey de Grey

Thanks! On the first point, absolutely – I just couldn’t exclude the possibility that this inversion might be so easy that there would be no reason to defer it. On the second point, sure, I was of course referring to an EH’ that would do the job for theta > 1/2; however I was mainly referring simply to the pre-Maynard universe and wondering whether this kind of condensation of i, varpi and delta down to a single parameter theta is possible at all.

26 November, 2013 at 11:33 am

Aubrey de Grey

In other words, when I wrote “for sufficiently large k” I should have written “for the same k that MPZ(i)[varpi,delta] itself does”.

26 November, 2013 at 1:13 pm

Terence Tao

A minor (and standard) remark: the Selberg sieve  can be rewritten as

where  are as in Maynard’s paper and  are the Ramanujan sums

(restricting to squarefree  ); this is basically equation (5.8) of Maynard’s paper after some rearranging.

The functions  behave approximately like independent random variables as  vary, which heuristically explains why the mean value of  is basically

in terms of the  (Lemma 5.2 of Maynard’s paper), and can also give heuristic justification for the asymptotic for  . I wasn’t able to use this representation of the Selberg sieve for much else, though.
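For readers who want to experiment with the Ramanujan sums mentioned above, here is a small sketch using the standard definition c_q(n) = Σ e(an/q), summed over 1 ≤ a ≤ q with gcd(a, q) = 1. It checks the closed form c_q(n) = μ(q/g) φ(g), g = gcd(q, n), valid for squarefree q, and the exact orthogonality of Ramanujan sums with different moduli over a full period. That orthogonality is one precise fact consistent with the "approximately independent" heuristic, though it is not by itself the argument of Lemma 5.2; all function names here are ours.

```python
import cmath
import math

def ramanujan_sum(q, n):
    """c_q(n): sum of e(a n / q) over 1 <= a <= q with gcd(a, q) = 1 (an integer)."""
    total = sum(cmath.exp(2j * math.pi * a * n / q)
                for a in range(1, q + 1) if math.gcd(a, q) == 1)
    return round(total.real)

def mobius(m):
    """Moebius function by trial division."""
    result, p = 1, 2
    while p * p <= m:
        if m % p == 0:
            m //= p
            if m % p == 0:
                return 0
            result = -result
        p += 1
    return -result if m > 1 else result

def euler_phi(m):
    return sum(1 for a in range(1, m + 1) if math.gcd(a, m) == 1)

def closed_form(q, n):
    """For squarefree q: c_q(n) = mu(q / g) * phi(g), where g = gcd(q, n)."""
    g = math.gcd(q, n)
    return mobius(q // g) * euler_phi(g)

def mean_product(q1, q2):
    """Average of c_q1(n) c_q2(n) over one full period n = 1, ..., lcm(q1, q2)."""
    period = q1 * q2 // math.gcd(q1, q2)
    return sum(ramanujan_sum(q1, n) * ramanujan_sum(q2, n)
               for n in range(1, period + 1)) / period

print(mean_product(6, 6), mean_product(6, 10))  # 2.0 0.0 (phi(6) = 2, distinct moduli give 0)
```

The orthogonality holds because e(an/q) and e(bn/q') with reduced fractions a/q and b/q' coincide only when q = q' and a = b, so averaging over a full period kills every cross term.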

26 November, 2013 at 2:51 pm

Anonymous

Typographical comment to Terry: If you use $\dots$ instead of $\ldots$, LaTeX will take care of correct placement of the dots; they shouldn’t always be lowered to the base of the line.

26 November, 2013 at 3:34 pm

Eytan Paldi

In the definition of  , the functions  need only be in  (i.e. not necessarily bounded).

26 November, 2013 at 5:10 pm

Pace Nielsen

In the spirit of “A poor man’s improvement on Zhang’s result”, I was playing around with Maynard’s Mathematica notebook. I found that increasing “p[5]” to “p[6]” (and increasing the number of variables to 56, and precomputing polynomials up to degree 13) allows one to push  . This was confirmed by Maynard. [It appears that no further gain is made in such an easy manner by just increasing the degree further.]

Obviously, further improvements are available if we incorporate some of the higher order power-symmetric polynomials like P_3.

26 November, 2013 at 9:57 pm

Sniffnoy

102 or 103? It says 102 here but 103 on the wiki and I’m wondering whether that is a typo here or a copying error there (the H value listed there is for 103).

[Corrected, thanks – T.]

27 November, 2013 at 8:44 am

Terence Tao

Here is a possible way to generalise the Selberg sieve. We’ll use the “multidimensional GPY” formulation

for the sieve, where F is a smooth function supported on the simplex (or the enlarged simplex). We can square this out as

One can then consider the more general sieve

for some smooth function  supported on the product of two simplices. This function obeys similar asymptotics to the original sieve (this can be seen, for instance, by using Stone-Weierstrass to approximate  by a finite linear combination of tensor products, or else by direct computation); for instance, one has

if the denominator is non-zero, where the subscripts on F denote differentiation in one or more of the variables  . The main problem is that without further hypotheses on  , the sieve weight  is not necessarily non-negative, which is a crucial property in the argument. In the case that  is positive semidefinite (viewed as a “matrix” or “quadratic form” on the simplex), one obtains the non-negativity; but in this case one can diagonalise F into a non-negative combination of Selberg sieves, which cannot give better ratios than a pure Selberg sieve. However, it may be possible to have  non-negative even without  being positive semidefinite, leading to a genuinely more general class of sieve. The positivity requirement is a bit complicated, though, even in the base case k=1; a typical condition is

whenever  . This condition demonstrates the non-negativity of  for products of two primes; one needs similar conditions for products of  primes for any bounded r, and this should suffice (note that these sieves are concentrated on numbers with only a bounded number of prime factors). These conditions are implied by positive semi-definiteness of  , but hopefully they are a bit weaker, allowing for more test functions  to play with.
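A discrete toy analogue may help show why positive semi-definiteness is sufficient but not necessary for non-negativity. Suppose (our simplification, not the construction in the comment) that the weight is ν(n) = Σ_{d_1, d_2 | n} λ_{d_1,d_2} for a finite symmetric matrix λ indexed by a few moduli, standing in for the function on the product of two simplices. Then ν(n) is the quadratic form of λ evaluated at the 0/1 vector recording which moduli divide n. If λ is positive semidefinite, its eigendecomposition writes ν as a non-negative combination of squares (Σ_{d|n} w_d)^2, i.e. of Selberg sieves; but non-negativity only has to hold on those 0/1 vectors, so an indefinite λ can still give ν ≥ 0. The moduli {1, 2} and both matrices below are hypothetical.

```python
import math

def sieve_weight(n, moduli, lam):
    """nu(n) = sum of lam[i][j] over pairs with moduli[i] | n and moduli[j] | n."""
    v = [1 if n % d == 0 else 0 for d in moduli]   # 0/1 divisor-indicator vector of n
    size = len(moduli)
    return sum(lam[i][j] * v[i] * v[j] for i in range(size) for j in range(size))

def divisor_sum(n, moduli, w):
    """Linear divisor sum sum_{d | n} w_d, the inner sum of a Selberg sieve."""
    return sum(wd for d, wd in zip(moduli, w) if n % d == 0)

moduli = [1, 2]

# Positive semidefinite case: [[2, 1], [1, 2]] = 3 u u^T + 1 v v^T with the
# orthonormal eigenvectors u = (1, 1)/sqrt(2) and v = (1, -1)/sqrt(2), so
# nu(n) = 3 (sum_{d|n} u_d)^2 + (sum_{d|n} v_d)^2, a non-negative
# combination of two Selberg sieves.
lam_psd = [[2, 1], [1, 2]]
u = [1 / math.sqrt(2), 1 / math.sqrt(2)]
v = [1 / math.sqrt(2), -1 / math.sqrt(2)]

# Indefinite case: determinant 1 * (-1) - 1 * 1 = -2 < 0, so not PSD, yet
# nu(n) = 1 for odd n and 1 + 1 + 1 - 1 = 2 for even n.
lam_indef = [[1, 1], [1, -1]]

for n in range(1, 7):
    psd = sieve_weight(n, moduli, lam_psd)
    squares = 3 * divisor_sum(n, moduli, u) ** 2 + divisor_sum(n, moduli, v) ** 2
    print(n, psd, round(squares, 12), sieve_weight(n, moduli, lam_indef))
```

In this toy only divisibility by 2 matters; with more moduli the relevant test vectors are the divisor-indicator vectors of integers, which is why conditions of the kind described above only need to be checked on numbers with few prime factors.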

27 November, 2013 at 3:22 pm

Terence Tao

A cute inequality related to the above considerations: for any smooth function $F: [0,1] \to {\bf R}$, one has

where  and  is the difference operator  . Thus, for instance,

The sieve-theoretic interpretation is that the right-hand side is an appropriately normalised version of  if  , and the left-hand side is the contribution to  coming from products of exactly  primes for  . For sufficiently smooth functions F (e.g. finite linear combinations of exponentials), the inequality is in fact an identity, as can be seen by a rather fun computation. The claim can also be proven from the recursive distributional identity

for  , where  is the nonlinear operator

27 November, 2013 at 5:57 pm

James Maynard

A couple of calculations regarding attempts to modify the  case to obtain gaps of size 8:

Using the enlarged simplex, I get that  (and I would expect this to be close to the truth). This means that the `probability’ that one of the elements of a 5-tuple is prime is  . If we assume independence, then we would expect the probability that both  and  are prime to be  (since we don't expect to be able to get an asymptotic for this quantity, in reality we have to satisfy ourselves with an upper bound, which would be worse than this). This means that the modification of taking away the contribution from the times when  and  are simultaneously prime would (in the most optimistic scenario) leave us with a total of  . This means we need at least one new idea to improve the situation. We'd need a lower bound on  which is certainly greater than  for this modification to get us over the edge.

I also tested the upper bound to see how it fared numerically. My calculations should be treated as very provisional, since I haven't checked them properly. In the scenario above, I find that taking a suitable polynomial for  , we get a lower bound for the modified version of  which gives  . This compares well with the most optimistic bound of  from above. This also corresponds to an upper bound for  of size  , which again is close to the guessed asymptotic value of  .

Since these bounds performed quite well, it is perhaps reasonable to expect that we can get decent upper bounds when we are considering  or similar. I don't know how we would evaluate the integrals that arise, however.

27 November, 2013 at 6:40 pm

Terence Tao

Thanks for these calculations! Due to the parity problem, I expect that any upper bound we obtain on  is going to be off by a factor of at least 2 from the truth (so in this case, it would be like  rather than  ). This isn’t consistent with the  number that you are provisionally reporting, but perhaps the numerology for the  optimiser is a bit different from that for the  optimiser. For instance, it may be advantageous to break symmetry a bit and work with polynomials F that behave differently in the  variables than in the  , lowering the probability that n is prime and n+12 is prime (in order to also lower the probability that n,n+12 are both prime) while also raising the probability that n+2,n+6,n+8 are prime.

0.987 is tantalisingly close to 1, so there is still hope :) Certainly things should look better for larger k. One relatively cheap thing to try is just to take the best polynomial we have for  and compute the upper bounds on  (note though that if we retreat from EH to Bombieri-Vinogradov, some of the 1/2 factors in the previous formula for this quantity degrade to 1/4).

27 November, 2013 at 6:52 pm

Terence Tao

A small observation: somewhat frustratingly, the Zhang/Maynard methods do not quite seem to be able to establish Goldbach’s conjecture up to bounded error (i.e. all sufficiently large numbers are within O(1) of the sum of two primes). But one can “split the difference” between bounds on H and establishing Goldbach’s conjecture with bounded error. For instance, assuming Elliott-Halberstam, one can show that at least one of the following statements holds:

1.  (thus improving over the current bound of  ); or

2. Every sufficiently large even number lies within 6 of a sum of two primes.

To prove this, let N be a large multiple of 6, and consider the tuple n, n+2, n+6, N-n, N-n-2. One can check that this tuple is admissible in the sense that for every prime p, there is an n such that all five elements of the tuple are coprime to p. A slight modification of the proof of DHL[5,2] then shows that there are lots of n for which at least two of the five elements of this tuple are prime; either these two elements are within 6 of each other, or they sum to a number between N-2 and N+6.

If we can ever get DHL[4,2] (or more precisely, a variant of this assertion for the tuple n, n+2, N-n, N-n-2), we get a more appealing dichotomy of this type: either the twin prime conjecture is true, or every sufficiently large multiple of six lies within 2 of a sum of two primes.
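The admissibility claims above can be checked mechanically. Here is a minimal sketch (all helper names are hypothetical, chosen for illustration) that encodes each tuple element as a linear form a·n + b and, for each small prime p, searches for a residue class of n that avoids every form. It assumes every coefficient a is +1 or -1, so each form rules out exactly one class mod p; for p larger than the number of forms a free class is then guaranteed, and only primes up to the tuple length need testing. It also lists the possible sums of one "small" element (n, n+2, n+6) with one "large" element (N-n, N-n-2).

```python
def primes_up_to(m):
    """Primes <= m by trial division (adequate for the tiny m used here)."""
    return [p for p in range(2, m + 1)
            if all(p % d for d in range(2, int(p ** 0.5) + 1))]


def is_admissible(forms):
    """Return True if the linear forms a*n + b (given as (a, b) pairs) are admissible.

    Assumes every coefficient a is +1 or -1, so each form rules out exactly one
    residue class of n mod p. For p > len(forms) some class is always free,
    hence only primes p <= len(forms) need to be tested.
    """
    for p in primes_up_to(len(forms)):
        has_free_class = any(
            all((a * n + b) % p != 0 for a, b in forms) for n in range(p)
        )
        if not has_free_class:
            return False
    return True


def goldbach_tuple(N):
    """Hypothetical helper: the tuple n, n+2, n+6, N-n, N-n-2 as (a, b) pairs."""
    return [(1, 0), (1, 2), (1, 6), (-1, N), (-1, N - 2)]


def twin_goldbach_tuple(N):
    """Hypothetical helper: the tuple n, n+2, N-n, N-n-2 as (a, b) pairs."""
    return [(1, 0), (1, 2), (-1, N), (-1, N - 2)]


def cross_sums(N, n=0):
    """Sums of one 'small' element (n, n+2, n+6) and one 'large' one (N-n, N-n-2)."""
    small = [n, n + 2, n + 6]
    large = [N - n, N - n - 2]
    return sorted({s + t for s in small for t in large})


print(is_admissible(goldbach_tuple(600)))  # True: 600 is a multiple of 6
print(cross_sums(600))                     # [598, 600, 602, 604, 606]
```

Looping `is_admissible` over small N shows why N is taken to be a multiple of 6: an odd N fails at p = 2, and an N not divisible by 3 fails at p = 3, for both the five-element and the four-element tuple. The cross sums do not depend on n and always fill out N-2, N, ..., N+6.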

28 November, 2013 at 8:54 am

Aubrey de Grey

Wow! This immediately raises two questions that would surely be of wide interest:

1) For k=5, can one split the difference unevenly, i.e. lower the value 6 in one statement at the expense of using a number larger than 6 in the other? If so, how unevenly – all the way to TP or Goldbach?

2) Can analogous statements be made for larger k? In particular, for k large enough to require only BV (or MPZ) rather than EH?

28 November, 2013 at 11:19 am

Aubrey de Grey

In fact, much better would be to unify the above questions in the obvious way, as follows:

If we denote by “GBE[n]” (Goldbach up to bounded error n – is there an established name for this conjecture?) the assertion that all even numbers are within n of an even sum of two primes (I’m omitting “sufficiently large” here only because I presume that “sufficiently” can easily be shown in this context to be within the range for which GC itself has already been computationally verified – apologies if that’s not true), then for which [a,b,c] can we say that DHL[a,2] implies DHL[b,2] or GBE[c]? Terry’s observations constitute this statement for [a,b,c] = [5,3,6] and [4,2,4]. How far can the allowed values be broadened?

27 November, 2013 at 9:33 pm

Anonymous

I feel that Goldbach’s conjecture / twin primes conjecture are very close to being proved.

All originated from Zhang’s work!

27 November, 2013 at 10:17 pm

Gil Kalai

What I am curious about is the following. Consider a random subset of primes (taking every prime with probability p, independently, and say p=1/2). Now consider only integers composed of these primes. I think that it is known that this system of “integers” satisfies (almost surely) PNT but not at all RH. We can consider for such systems the properties BV (Bombieri Vinogradov), or more generally EH(θ) and the quantities  . For such systems, does BV typically hold, or is it rare like RH? Does Maynard’s implication apply in this generality? Nicely, here we can even hope for infinitely many consecutive primes.

28 November, 2013 at 8:26 am

Terence Tao

Strictly speaking, if one only takes half of the primes, then the remaining integers form a very sparse set – about  elements up to  – which makes all the numerology very different from that of the full primes.

You may be thinking instead of the Beurling integers, in which the primes are replaced by some other set of positive reals with the same asymptotic density properties. These integers enjoy many of the same “multiplicative number theory” properties as the usual integers (e.g. the prime number theorem), but one usually loses all of the “additive number theory” properties, basically because the Beurling integers are not translation-invariant: if n is a Beurling integer, then n+2 usually isn’t, and so asking for things like twin Beurling primes is not really a natural question; similarly for questions about distributions of Beurling primes in residue classes (Bombieri-Vinogradov, EH, etc.) (unless one also somehow creates a theory of “Beurling Dirichlet characters” to go with the Beurling primes, but I do not know how to make this work for anything that isn’t already coming from a number field).

28 November, 2013 at 10:32 am

Gil Kalai

Terry, how can it be  if you leave half the primes alive?

28 November, 2013 at 10:51 am

Terence Tao

Oops, I miscalculated; the density is now  rather than  , so I drop my previous objection to the question :). (My error was thinking that the average number of divisors of n would remain comparable to  , when instead it drops to  .) The main technical difficulty now is with counting such quantities as the number of integers n less than x such that n and n+2 are both divisible by the surviving primes; offhand I don’t see a promising technique to answer this question (as well as more general questions in which several shifts of n are involved, and some congruence class conditions on n are also imposed) but it looks like an interesting problem.

28 November, 2013 at 11:21 am

Gil Kalai

Indeed, I was thinking about Beurling integers, and my questions can be asked about them, but I wanted a very, very specific model which would make computations easier. I think that it is not that easy to work with general Beurling primes, and finding concrete stochastic models (sort of like random graphs) may be more fruitful.

If we want to keep the density of primes on the nose, we could perhaps still take p_n with independent but not identical probabilities, so as not to corrupt the asymptotic density properties, or do some other “correction”, but perhaps taking half the primes is also of interest.

All the questions I asked refer only to the relative ordering of the Beurling integers and *not* to their additive properties. So “twin primes” refers to two Beurling primes with a single composite Beurling integer in between, and “consecutive primes” refers to two Beurling primes without any composite Beurling integer in between (alas, we don’t have them often in the usual case). I think that PNT, RH, BV (Bombieri Vinogradov), or more generally EH(θ), and  (in the *ordinal*, not *additive* sense) continue to make sense (perhaps even in more than one sense) for Beurling primes, and it is interesting to see if Maynard’s implication extends, but it looks to me that considering a stochastic model based on the primes will make the issue more tractable (and perhaps useful).

28 November, 2013 at 12:28 pm

James Maynard

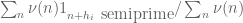

If I understand your question correctly, then I would have thought that for such a system you would get (almost surely)  for this subset of the primes whenever  holds for the primes themselves (and so bounded gaps with  primes).

If  is the number of chosen primes in the residue class  which are at most  , then  is distributed as a binomial random variable  . Therefore, we should get, for some constant

In particular, over all  for which  we have almost surely  . (There are  such pairs  , and we take  above.) The errors from these  -terms are negligible. The errors from those pairs  for which ‘  ’ does not hold, and the errors  can be bounded by regular  for all primes.
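To get a feel for the binomial concentration described above, here is a minimal numerical sketch. It assumes Gil Kalai's model (each prime kept independently with probability 1/2) and measures, over moduli q ≤ Q and residues a coprime to q, the worst deviation of the kept count from half the full count, normalised by sqrt(count · log x). The function names, the values x = 10,000 and Q = 30, and the fixed seed are illustrative assumptions; the normalisation is a Hoeffding-type scale chosen for this sketch, not a reconstruction of the displayed estimate that is missing from the comment.

```python
import math
import random


def primes_below(x):
    """Sieve of Eratosthenes: all primes p < x."""
    sieve = bytearray([1]) * x
    sieve[0:2] = b"\x00\x00"
    for i in range(2, int(x ** 0.5) + 1):
        if sieve[i]:
            # Cross out multiples of i starting from i*i.
            sieve[i * i::i] = bytearray(len(range(i * i, x, i)))
    return [i for i in range(x) if sieve[i]]


def max_normalised_deviation(x, Q, keep_prob=0.5, seed=0):
    """Random-subset model (assumed): keep each prime < x independently with
    probability keep_prob, then return the largest value of
        |kept(q, a) - keep_prob * total(q, a)| / sqrt(total(q, a) * log x)
    over 1 <= q <= Q and residues a coprime to q, where total(q, a) counts all
    primes p < x with p = a (mod q) and kept(q, a) counts the kept ones.
    """
    rng = random.Random(seed)
    primes = primes_below(x)
    kept_primes = [p for p in primes if rng.random() < keep_prob]
    worst = 0.0
    for q in range(1, Q + 1):
        total = [0] * q
        kept = [0] * q
        for p in primes:
            total[p % q] += 1
        for p in kept_primes:
            kept[p % q] += 1
        for a in range(q):
            if math.gcd(a, q) == 1 and total[a] > 0:
                dev = abs(kept[a] - keep_prob * total[a])
                worst = max(worst, dev / math.sqrt(total[a] * math.log(x)))
    return worst


print(max_normalised_deviation(10_000, 30))
```

Since kept(q, a) is exactly Binomial(total(q, a), 1/2) in this model, Hoeffding's inequality bounds the chance that any single class exceeds the value 2 by 2x^(-8), so with a few hundred classes the printed value should sit comfortably below 2; the fixed seed makes the run reproducible.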

28 November, 2013 at 1:52 pm

Terence Tao

I agree that this form of EH[θ] will hold almost surely, but I think for applications to bounded gaps between these Beurling-type integers one needs a significantly stronger version of