Peter Denton, Stephen Parke, Xining Zhang, and I have just uploaded to the arXiv the short unpublished note “Eigenvectors from eigenvalues“. This note gives two proofs of a general eigenvector identity observed recently by Denton, Parke and Zhang in the course of some quantum mechanical calculations. The identity is as follows:

Theorem 1 Let

be an

Hermitian matrix, with eigenvalues

. Let

be a unit eigenvector corresponding to the eigenvalue

, and let

be the

component of

. Then

where

is the

Hermitian matrix formed by deleting the

row and column from

.

For instance, if we have

for some real number ,

-dimensional vector

, and

Hermitian matrix

, then we have

assuming that the denominator is non-zero.

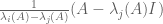

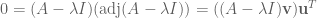

Once one is aware of the identity, it is not so difficult to prove it; we give two proofs, each about half a page long, one of which is based on a variant of the Cauchy-Binet formula, and the other based on properties of the adjugate matrix. But perhaps it is surprising that such a formula exists at all; one does not normally expect to learn much information about eigenvectors purely from knowledge of eigenvalues. In the random matrix theory literature, for instance in this paper of Erdos, Schlein, and Yau, or this later paper of Van Vu and myself, a related identity has been used, namely

but it is not immediately obvious that one can derive the former identity from the latter. (I do so below the fold; we ended up not putting this proof in the note as it was longer than the two other proofs we found. I also give two other proofs below the fold, one from a more geometric perspective and one proceeding via Cramer’s rule.) It was certainly something of a surprise to me that there is no explicit appearance of the components of

in the formula (1) (though they do indirectly appear through their effect on the eigenvalues

; for instance from taking traces one sees that

).

One can get some feeling of the identity (1) by considering some special cases. Suppose for instance that is a diagonal matrix with all distinct entries. The upper left entry

of

is one of the eigenvalues of

. If it is equal to

, then the eigenvalues of

are the other

eigenvalues of

, and now the left and right-hand sides of (1) are equal to

. At the other extreme, if

is equal to a different eigenvalue of

, then

now appears as an eigenvalue of

, and both sides of (1) now vanish. More generally, if we order the eigenvalues

and

, then the Cauchy interlacing inequalities tell us that

for , and

for , so that the right-hand side of (1) lies between

and

, which is of course consistent with (1) as

is a unit vector. Thus the identity relates the coefficient sizes of an eigenvector with the extent to which the Cauchy interlacing inequalities are sharp.

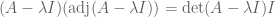

— 1. Relating the two identities —

We now show how (1) can be deduced from (2). By a limiting argument, it suffices to prove (1) in the case when is not an eigenvalue of

. Without loss of generality we may take

. By subtracting the matrix

from

(and

from

, thus shifting all the eigenvalues down by

, we may also assume without loss of generality that

. So now we wish to show that

The right-hand side is just . If one differentiates the characteristic polynomial

at , one sees that

Finally, (2) can be rewritten as

so our task is now to show that

By Schur complement, we have

Since is an eigenvalue of

, but not of

(by hypothesis), the factor

vanishes when

. If we then differentiate (4) in

and set

we obtain (3) as desired.

— 2. A geometric proof —

Here is a more geometric way to think about the identity. One can view as a linear operator on

(mapping

to

for any vector

); it then also acts on all the exterior powers

by mapping

to

for all vectors

. In particular, if one evaluates

on the basis

of

induced by the orthogonal eigenbasis

, we see that the action of

on

is rank one, with

for any , where

is the inner product on

induced by the standard inner product on

. If we now apply this to the

-form

, we have

, while

is equal to

plus some terms orthogonal to

. Since

, Theorem 1 follows.

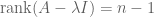

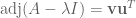

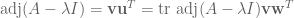

— 3. A proof using Cramer’s rule —

By a limiting argument we can assume that all the eigenvalues of are simple. From the spectral theorem we can compute the resolvent

for

as

Extracting the component of both sides and using Cramer’s rule, we conclude that

or in terms of eigenvalues

Both sides are rational functions with a simple pole at the eigenvalues . Extracting the residue at

we conclude that

and Theorem 1 follows. (Note that this approach also gives a formula for for

, although the formula becomes messier when

because the relevant minor of

is no longer a scalar multiple of the identity

.)

137 comments

Comments feed for this article

19 November, 2019 at 3:40 am

Arturo Portnoy

Looks like this identity could be useful for inverse spectral problems, where information about the eigenvalues and eigenfunctions is used to determine some parameter/coefficients of the operator/matrix.

19 November, 2019 at 7:14 am

Terence Tao

Yes, in fact we have recently learned that the identity is indeed used for inverse eigenvalue problems for Jacobi matrices, see Section 4.5 of this 2002 paper of Chu and Golub.

19 November, 2019 at 8:24 am

Chris Godsil

I’ve listed more appearances at https://mathoverflow.net/questions/346264/consequences-of-eigenvector-eigenvalue-formula-found-by-studying-neutrinos/346421#346421

19 November, 2019 at 11:07 am

Bill Province

How close does this come to resolving the Graph Reconstruction Conjecture (https://en.wikipedia.org/wiki/Reconstruction_conjecture)? I notice that we get the square of the eigenvector element values rather than the values themselves, so I don’t think that in and of itself, this resolves the reconstruction conjecture, but it gives some pretty convincing additional evidence!

In order for the reconstruction conjecture to be false in the face of this, there would need to be two graphs G1, G2 such that they are non-isomorphic, but share the same spectrum, and where the eigenvectors differ only in the choice of sign for the elements (and of course, where it’s not simply that we have one vector that is the negative of the other).

19 November, 2019 at 9:06 pm

Raphael

Due to the existence of cospectral mates, I am not sure that it really would resolve the conjecture.

20 November, 2019 at 10:24 am

Bill Province

Yet the results here are much stronger than merely being co-spectral. Being able to (nearly) completely recover the eigenvectors is something I had not seen before. Indeed, if the eigenvectors are recoverable — and we can properly associate the correct eigenvalue with each eigenvector — then we have recovered the entire matrix. I agree that we have not reached that point yet, and the existence of co-spectral non-isomorphic graphs must at least make us doubt, but this seems to be a really big step in that direction.

19 November, 2019 at 12:43 pm

J. M.

A look at L. F. Richardson’s paper (which I found through J. H. Wilkinson’s classical treatise) apparently uses a variant of the formula to iteratively improve an eigenpair.

20 November, 2019 at 10:56 am

Terence Tao

Hmm, this seems to be a little bit different. What seems to be happening here is that the author is iteratively applying linear operations of the form for

for  to isolate the

to isolate the  component of a given vector and damp out the other components. This iteration will lead to spectral multipliers that have a form resembling that used in this identity (namely a ratio of two products of characteristic function type objects) but I’m not sure there is a deeper connection beyond this (for instance, there is no role in that paper played by minors).

component of a given vector and damp out the other components. This iteration will lead to spectral multipliers that have a form resembling that used in this identity (namely a ratio of two products of characteristic function type objects) but I’m not sure there is a deeper connection beyond this (for instance, there is no role in that paper played by minors).

20 November, 2019 at 11:44 am

Emanuele Rodolà

From a computational perspective, it might be actually useful in all cases where one only needs specific eigenvector components; in these cases you don’t need to compute the eigenvalues of all sub-matrices M_j, but only for a selection of sub-matrices. I can think of a few practical settings where it could come useful, e.g. in discrete geometry processing or graph analysis.

22 November, 2019 at 5:40 pm

Comments on an arXiv preprint authored by Terence Tao – Feng Qi's Academic Homepage & Blogs

[…] https://terrytao.wordpress.com/2019/08/13/eigenvectors-from-eigenvalues/#comment-528704 […]

22 November, 2019 at 8:47 pm

Eigenvectors and Eigenvalues | Delightful & Distinctive COLRS

[…] Resource: Terry Tao blog, Nov […]

23 November, 2019 at 7:56 pm

Margaret Stawiska-Friedland

Theorem 1 can be generalized to not necessarily Hermitian matrices as follows: and let

and let  . Let

. Let  be an eigenvalue of

be an eigenvalue of  of algebraic multiplicity

of algebraic multiplicity  . It follows that the eigenspace corresponding to

. It follows that the eigenspace corresponding to  is

is  -dimensional.

-dimensional. , and so

, and so  . This implies that

. This implies that  with

with  . Further, since

. Further, since  and

and  is an eigenvalue of

is an eigenvalue of  , we have

, we have  . Hence

. Hence  . Similarly we show that

. Similarly we show that  .

. such that

such that  ,

,  . We have

. We have

. Hence

. Hence  . By Jacobi’s formula for derivative of the determinant,

. By Jacobi’s formula for derivative of the determinant,  , where

, where  .

.

Let

By nullity-rank theorem,

There exists a unique

with

23 November, 2019 at 10:12 pm

Xiu Wu

Hello Mr.Tao, I want to ask that how to determine the +/- sign of the calculated elements of the eigenvectors.

24 November, 2019 at 3:35 am

Anonymous

Read the comments above.

30 November, 2019 at 1:19 am

Xiu Wu

Thanks, I found it in one of the comments, Mr.Tao gives a method of determining the signs.

24 November, 2019 at 7:53 pm

Ed

Hi, I am no math major, but I found this paper very interesting to read! I am reading the consistency check section of the paper, and I am not sure how to show “RHS of eq 8 is the n-1 symmetric function of the eigenvalues,S_{n-1}”.

30 November, 2019 at 5:15 pm

Eigenvectors from eigenvalues - Nevin Manimala's Blog

[…] by /u/BeverlyHillsBastet [link] […]

2 December, 2019 at 12:17 am

Anonymous

Some of the popular science articles claim that it’s easier to use a matrix’s eigenvalues than its eigenvectors, at least in context of the neutrino-related research in which this identity was discovered. However, at first glance, computing all eigenvectors using this identity seems to have greater computational complexity than existing methods (which, roughly, are O(n^3)).

Of course, this work will be relevant in particular contexts where the minor matrices’ eigenvalues are already known, or where other constraints are imposed that can simplify computation. But do you think that with some additional research, the formula can be used to improve on existing eigenvector estimation methods more generally?

2 December, 2019 at 9:23 am

Terence Tao

I agree that this identity is unlikely to be a superior method for computing eigenvectors for general matrices, but for special matrices where the characteristic polynomial of the matrix and its minors are relatively easy to compute, the identity appears to be useful. For instance, we have found a number of references in the literature where this identity was applied to tridiagonal matrices to help control the convergence rate of various numerical linear algebra algorithms such as the Rayleigh-Ritz method for computing eigenvectors. So here the identity is not directly used to compute eigenvectors, but it can be used to analyze a different algorithm for (approximately) computing these eigenvectors.

Another thing the identity highlights is that the (square of the) magnitude of an eigenvector component is proportional to the distance between the eigenvalue and the spectrum of a minor. So information about how the spectrum of minors interlace with the spectrum of the full matrix will tell us something about the localization or delocalization properties of eigenvectors, and conversely. This ought to be a useful tool, or at least a useful heuristic, in random matrix theory in which one is often interested in at least one of these two pieces of information, though I do not yet have a concrete use case for this (mostly because our current tools usually do not let us easily understand the coupling between the spectrum of a matrix and the spectrum of a minor).

3 December, 2019 at 9:47 am

Tim Campion

I’m confused by the statement of the identity — are we assuming that the eigenvalues $\lambda_i(A)$ are distinct?

If not, then the identity is hard for me to believe: for instance if $\lambda_1(A) = \lambda_2(A)$, then the identity seems to entail that $|v_{1,j}| = |v_{2,j}|$ for any choice of orthogonal unit vectors $v_1,v_2$ in the eigenspace corresponding to the eigenvalue $\lambda_1(A) = \lambda_2(A)$. But if, say, this eigenspace is spanned by the first two coordinate vectors $e_1,e_2$, then we could take $v_1 = e_1, v_2 = e_2$ and obtain a contradiction.

3 December, 2019 at 9:50 am

Tim Campion

Oh I see — if the eigenvalues are not distinct, then the product on the left hand side is zero, so my reasoning is incorrect.

3 December, 2019 at 8:34 pm

Eigenvectors from Eigenvalues: a survey of a basic identity in linear algebra | What's new

[…] Parke, Xining Zhang, and I have just uploaded to the arXiv a completely rewritten version of our previous paper, now titled “Eigenvectors from Eigenvalues: a survey of a basic identity in linear […]

4 December, 2019 at 9:47 pm

Gautam

In Remark 7 of the updated paper, it appears that Aram Harrow’s name is spelled wrong.

[This will be corrected in the next revision of the ms – T.]

6 December, 2019 at 3:13 pm

Adam Denchfield

I think I found another instance of proof/usage of a special case. In this paper (linked below) by Gaveau and Schulman, “Limited quantum decay”, Equations 2.1-2.6. If this is indeed an example of a special case, then I think this identity is used fairly commonly in physics, because this usage seems familiar.

https://iopscience.iop.org/article/10.1088/0305-4470/28/24/029/pdf

[Yes, this appears to be an independent discovery of Lemma 14 in the survey. We’ll add the reference to the next revision of the ms. -T]

13 December, 2019 at 7:41 am

Pawan

How one gets this equation ?

13 December, 2019 at 8:36 am

Terence Tao

https://en.wikipedia.org/wiki/Eigendecomposition_of_a_matrix#Functional_calculus

17 April, 2020 at 6:39 am

Pawan

Thank you.

This is not clear. In particular the use of Cramer’s rule. To use this rule there should be AX=B. What is A, X and B here ?

17 April, 2020 at 10:25 am

Terence Tao

Here we are using Cramer’s rule in the form (see https://en.wikipedia.org/wiki/Cramer%27s_rule#Finding_inverse_matrix ). Applying this with

(see https://en.wikipedia.org/wiki/Cramer%27s_rule#Finding_inverse_matrix ). Applying this with  replaced by

replaced by  and extracting the

and extracting the  coefficient we see that

coefficient we see that ![\frac{\mathrm{det}(\lambda I_{n-1} - M_j)}{\mathrm{det}(\lambda I_n - A)} = [(\lambda I_n - A)^{-1}]_{jj}](https://s0.wp.com/latex.php?latex=%5Cfrac%7B%5Cmathrm%7Bdet%7D%28%5Clambda+I_%7Bn-1%7D+-+M_j%29%7D%7B%5Cmathrm%7Bdet%7D%28%5Clambda+I_n+-+A%29%7D+%3D+%5B%28%5Clambda+I_n+-+A%29%5E%7B-1%7D%5D_%7Bjj%7D&bg=ffffff&fg=545454&s=0&c=20201002) . On the other hand, from the eigendecomposition of the matrix

. On the other hand, from the eigendecomposition of the matrix  one has

one has ![[(\lambda I_n - A)^{-1}]_{jj} = \sum_{k=1}^n \frac{1}{\lambda - \lambda_k(A)} |v_{k,j}|^2](https://s0.wp.com/latex.php?latex=%5B%28%5Clambda+I_n+-+A%29%5E%7B-1%7D%5D_%7Bjj%7D+%3D+%5Csum_%7Bk%3D1%7D%5En+%5Cfrac%7B1%7D%7B%5Clambda+-+%5Clambda_k%28A%29%7D+%7Cv_%7Bk%2Cj%7D%7C%5E2&bg=ffffff&fg=545454&s=0&c=20201002) .

.

9 January, 2020 at 2:09 pm

Henning

I wonder about the application of the EEI to not necessarily Hermitian diagonalizable matrices when there are two or more matrices with the same eigenvalues but different eigenvectors. Example: The matrices A_1 = CM and A_2 = C^1/2 M C^1/2 have the same eigenvalues if C is diagonal positive definite and M real symmetric. Application of the EEI to this spectrum results in the eigenvectors of the Hermitian case A_2. But how can one obtain the correct eigenvectors, using only the EEI and some minimal other assumptions, for A_1? (This problem is somewhat similar to a standard problem in vibrational analysis described in the book G.M.L. Gladwell: “Inverse Problems In Vibration”, where the EEI for Jacobi matrices appears in Eqs. 4.3.17 and 4.5.6.)

9 January, 2020 at 2:38 pm

Ralph Burger

Doesn’t all as well depend on the minors and their eigenvalues?

9 January, 2020 at 6:19 pm

Anonymous

Yes, I forgot to mention that all the minors have identical spectra between the two cases, too.

24 March, 2020 at 3:21 am

Sasha Sodin

Dear Terry,

I would like to mention another couple of references:

– Kerov’s paper “interlacing measures” and his book “Asymptotic Representation Theory of the Symmetric Group and its Applications in Analysis”, which describe a result equivalent to this identity (and its relation to the Markov moment problem)

– Krein’s work on the spectral shift function (and earlier work by I.M.Lifshitz)

– the famous paper of Aronszajn and Donoghue

The first one describes several seemingly unrelated facts which can be stated in a similar way (e.g. the hook formula in the representation theory of S_N). The other two discuss operator-theoretic versions.

With best regards,

Sasha

8 April, 2020 at 9:28 am

Andrew Knyazev

Dear Terry,

The main result here has a simple and intuitively evident geometric interpretation:

Crossing a 3D ellipsoid centered at the origin and aligned with the coordinate axes by a 2D plane crossing the origin results in a 2D ellipse. The position of the 2D plane relative to the 3D ellipsoid is then uniquely (up to symmetries) determined by 5 numbers: the 2 lengths of the semi-axes of the 2D ellipse and the 3 lengths of the semi-axes of the 3D ellipsoid. Perhaps you may want to add a plot like this to the paper, https://commons.wikimedia.org/wiki/File:Indexellipsoid.png

Having the semi-axes of the 3D ellipsoid aligned with the coordinate axes, one can derive a formula for the (absolute values of) coordinates of the unit vector normal to the 2D plane, which is the main result of your paper, implying everything else, as far as I can see.

These formulas can evidently be found in old textbooks on geometry. I have seen one in a 100 y.o. book scanned by Google books, but did not save the reference.

My papers go one step further and derive (from the coordinates of the normal vector) formulas for the (absolute values of) coordinates of the semi-axes of the 2D ellipse relative to the coordinates of the semi-axes of the 3D ellipsoid. The semi-axes of the 2D ellipse are actually Ritz vectors in the Rayleigh-Ritz method where the trial subspace is a hyper-plane (i.e. co-dimension 1)

Best, Andrew

8 April, 2020 at 9:42 am

Eigenvectors from Eigenvalues – Rumbo Loxodromico

[…] Terry Tao gives a nice “geometric” proof on his blog that might be interesting to readers here (see “2 a geometric proof”): https://terrytao.wordpress.com/2019/08/13/eigenvectors-from-eigenvalues/ […]

4 June, 2020 at 2:40 am

Hippolite Kaarineo Dery

So confusing

13 July, 2020 at 7:54 am

Symbols

Thank you Prof. Tao for this wonderful blog post. Was looking for this on an assignment.

14 July, 2020 at 7:24 am

Zeno

Also our paper published recently (after a long gestation):

“Eigenlogic in the spirit of George Boole”, https://doi.org/10.1007/s11787-020-00252-3

also available on the arXiv:1512.06632

We show that the multilinear method introcduced by Boole for the invention of mathematical logic was actually linear algebra… truth values become eigenvalues…

For all these matters one should give a deeper look at the English school of the XiXth century, they invented linear algebra and logic (their link is quite close)

see Boole, Hamilton, Cayley, Sylvester, Jacobi, Clifford….

4 August, 2020 at 11:31 pm

Anonymous

the last proof is really cool. Just a question here. Do you ever feel the need to think it is important to think of things from scratch. I feel using existing identities is a bit impure way of deriving something. Is it important to build up using new observations in mathematics or to connect different patterns that we’ve already seen ( like these identities )

30 December, 2020 at 9:09 pm

Lynmar M. Didal

This is in the same situation as the “new way to solve quadratic equations.” (re: semantic search engine)

19 January, 2022 at 6:56 am

Andre j- Grebenc

I am an engineer and I kindly ask for some examples( cases)

[See Example 2 in the paper. -T]