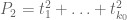

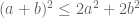

For each natural number , let

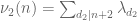

denote the quantity

where denotes the

prime. In other words,

is the least quantity such that there are infinitely many intervals of length

that contain

or more primes. Thus, for instance, the twin prime conjecture is equivalent to the assertion that

, and the prime tuples conjecture would imply that

is equal to the diameter of the narrowest admissible tuple of cardinality

(thus we conjecturally have

,

,

,

,

, and so forth; see this web page for further continuation of this sequence).
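The conjectural values $2, 6, 8, 12, 16$ can be checked by brute force. The following is a minimal sketch (the function names `small_primes`, `is_admissible` and `narrowest_diameter` are illustrative and not taken from the post): it searches for the smallest diameter of a tuple of the given cardinality that avoids at least one residue class modulo every prime. Only primes up to the cardinality need to be tested, since a tuple with $k$ elements cannot cover all residue classes modulo a prime larger than $k$.

```python
from itertools import combinations


def small_primes(n):
    """Primes up to n, by trial division (fine for the tiny n used here)."""
    return [p for p in range(2, n + 1)
            if all(p % q for q in range(2, int(p ** 0.5) + 1))]


def is_admissible(h):
    """True if h misses at least one residue class modulo every prime."""
    k = len(h)
    for p in small_primes(max(k, 2)):
        if len({x % p for x in h}) == p:  # every class mod p is occupied
            return False
    return True


def narrowest_diameter(k):
    """Smallest d such that some admissible k-tuple (0, ..., d) exists, k >= 2."""
    d = k - 1  # k distinct integers need diameter at least k - 1
    while True:
        for interior in combinations(range(1, d), k - 2):
            if is_admissible((0, *interior, d)):
                return d
        d += 1


# Cardinality m + 1 for m = 1, ..., 5.
print([narrowest_diameter(m + 1) for m in range(1, 6)])  # [2, 6, 8, 12, 16]
```

The exhaustive search is exponential in the cardinality, so it is only meant for very small tuples; the tables referred to above rely on far more refined searches.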

In 2004, Goldston, Pintz, and Yildirim established the bound $H_1 \leq 16$ conditional on the Elliott-Halberstam conjecture, which remains unproven. However, no unconditional finiteness of $H_1$ was obtained (although they famously obtained the non-trivial bound $\liminf_{n \to \infty} \frac{p_{n+1}-p_n}{\log p_n} = 0$), and even on the Elliott-Halberstam conjecture no finiteness result on the higher $H_m$ was obtained either (although they were able to show $\liminf_{n \to \infty} \frac{p_{n+2}-p_n}{\log p_n} = 0$ on this conjecture). In the recent breakthrough of Zhang, the unconditional bound $H_1 \leq 70{,}000{,}000$ was obtained, by establishing a weak partial version of the Elliott-Halberstam conjecture; by refining these methods, the Polymath8 project (which I suppose we could retroactively call the Polymath8a project) then lowered this bound to $H_1 \leq 4{,}680$.

With the very recent preprint of James Maynard, we have the following further substantial improvements:

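Theorem 1 (Maynard’s theorem) Unconditionally, we have the following bounds:

- $H_1 \leq 600$.
- $H_m \leq C m^3 e^{4m}$ for an absolute constant $C$ and any $m \geq 1$.

If one assumes the Elliott-Halberstam conjecture, we have the following improved bounds:

- $H_1 \leq 12$.
- $H_2 \leq 600$.
- $H_m \leq C m^3 e^{2m}$ for an absolute constant $C$ and any $m \geq 1$.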

The final conclusion $H_m \leq C m^3 e^{2m}$ on Elliott-Halberstam is not explicitly stated in Maynard’s paper, but follows easily from his methods, as I will describe below the fold. (At around the same time as Maynard’s work, I had also begun a similar set of calculations concerning $H_m$, but was only able to obtain the slightly weaker bound $H_m \leq C \exp(C m)$ unconditionally.) In the converse direction, the prime tuples conjecture implies that $H_m$ should be comparable to $m \log m$. Granville has also obtained the slightly weaker explicit bound $H_m \leq e^{8m+5}$ for any $m \geq 1$ by a slight modification of Maynard’s argument.

The arguments of Maynard avoid using the difficult partial results on (weakened forms of) the Elliott-Halberstam conjecture that were established by Zhang and then refined by Polymath8; instead, the main input is the classical Bombieri-Vinogradov theorem, combined with a sieve that is closer in spirit to an older sieve of Goldston and Yildirim, than to the sieve used later by Goldston, Pintz, and Yildirim on which almost all subsequent work is based.

The aim of the Polymath8b project is to obtain improved bounds on $H_1$, $H_2$, and higher values of $H_m$, either conditional on the Elliott-Halberstam conjecture or unconditional. The likeliest routes for doing this are by optimising Maynard’s arguments and/or combining them with some of the results from the Polymath8a project. This post is intended to be the first research thread for that purpose. To start the ball rolling, I am going to give below a presentation of Maynard’s results, with some minor technical differences (most significantly, I am using the Goldston-Pintz-Yildirim variant of the Selberg sieve, rather than the traditional “elementary Selberg sieve” that is used by Maynard (and also in the Polymath8 project), although it seems that the numerology obtained by both sieves is essentially the same). An alternate exposition of Maynard’s work has also just been completed by Andrew Granville.

— 1. Overview of argument —

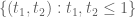

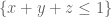

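Define an admissible $k$-tuple to be an increasing tuple $(h_1,\ldots,h_k)$ of integers, which avoids at least one residue class modulo $p$ for each prime $p$. For $k_0 \geq j_0 \geq 2$, let $DHL[k_0,j_0]$ denote the following claim:

Conjecture 2 ($DHL[k_0,j_0]$) If $\mathcal{H}$ is an admissible $k_0$-tuple, then there are infinitely many translates $n + \mathcal{H}$ of $\mathcal{H}$ that contain at least $j_0$ primes.

The prime tuples conjecture is then the assertion that $DHL[k_0,k_0]$ holds for all $k_0 \geq 2$. Clearly, if $DHL[k_0,j_0]$ is true, then we have $H_{j_0-1} \leq h_{k_0} - h_1$ whenever $(h_1,\ldots,h_{k_0})$ is an admissible $k_0$-tuple. Theorem 1 then follows from the following claim: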

Theorem 3 (Maynard’s theorem, DHL version) Unconditionally, we have the following bounds:

- $DHL[105,2]$.

- $DHL[k_0,j_0+1]$ whenever $k_0$ is sufficiently large and

.

If one assumes the Elliott-Halberstam conjecture, we have the following improved bounds:

- $DHL[5,2]$.

- $DHL[105,3]$.

- $DHL[k_0,j_0+1]$ whenever $k_0$ is sufficiently large and

.

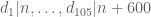

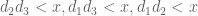

Indeed, the results for $H_1$ and $H_2$ then follow from using the admissible $5$-tuple $(0,2,6,8,12)$ and the admissible $105$-tuple of diameter $600$ found by Engelsma (and recorded on this site). For the larger results, note that the bound

is obeyed if

for a sufficiently large

and

is large enough, and the claim follows by using the observation that one can create an admissible $k_0$-tuple of length $O(k_0 \log k_0)$ by using the first $k_0$ primes past $k_0$; similarly if one assumes the Elliott-Halberstam conjecture. (Note that as the $H_m$ are clearly non-decreasing in $m$, it suffices to work with sufficiently large $m$ to obtain bounds such as $H_m \leq C m^3 e^{4m}$.)
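The construction of an admissible tuple from the first $k_0$ primes past $k_0$ is easy to test numerically. The sketch below (helper names are illustrative, not from the post) builds that tuple and verifies admissibility directly. The reason it works is that every element is a prime larger than $k_0$, so the residue class $0$ modulo $p$ is avoided for each prime $p \leq k_0$, while primes $p > k_0$ cannot be covered by only $k_0$ elements.

```python
def is_prime(n):
    """Trial-division primality test (adequate for the small inputs here)."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def primes_past(k):
    """The first k primes strictly greater than k."""
    found, n = [], k + 1
    while len(found) < k:
        if is_prime(n):
            found.append(n)
        n += 1
    return found


def is_admissible(h):
    """True if h misses a residue class modulo every prime p <= len(h)."""
    k = len(h)
    return all(len({x % p for x in h}) < p
               for p in range(2, k + 1) if is_prime(p))


def diameter(h):
    return max(h) - min(h)


for k in (5, 105):
    tup = primes_past(k)
    print(k, is_admissible(tup), diameter(tup))
```

For $k_0 = 5$ this produces the tuple $(7, 11, 13, 17, 19)$, a translate of $(0,4,6,10,12)$ with diameter $12$. For $k_0 = 105$ the diameter is necessarily at least the value $600$ attained by Engelsma's optimised tuple, which illustrates why the tables are used for the small cases and this cruder construction only for the asymptotic bounds.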

As in previous work, the conclusions are obtained by constructing a sieve weight with good properties. We use the same asymptotic notation as in the Polymath8a project, thus all quantities depend on an asymptotic parameter $x$ unless explicitly declared to be fixed, and asymptotic notation such as $O()$, $o()$ or $\ll$ is relative to this parameter. We let $w := \log\log\log x$ and $W := \prod_{p \leq w} p$ as before. We let $\theta(n)$ be the quantity $\log n$ when $n$ is prime, and zero otherwise.

Lemma 4 (Criterion for

) Let

and

be fixed integers. Suppose that for each fixed admissible

-tuple

and each congruence class

such that

is coprime to

for all

, one can find a non-negative weight function

, fixed quantities

, a quantity

, and a quantity

such that one has the upper bound

for all

, and the key inequality

Then

holds.

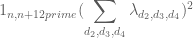

The case of this lemma is Lemma 4.1 of the polymath8a paper. The general

case is proven by an essentially identical argument, namely one considers the expression

uses the hypotheses (1), (2), (3) to show that this is positive for sufficiently large $x$, and observes that the summand is only positive when $n+h_1,\ldots,n+h_{k_0}$ contain at least $j_0+1$ primes.

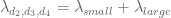

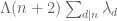

We recall the statement of the Elliott-Halberstam conjecture $EH[\theta]$, for a given choice of parameter $0 < \theta < 1$:

where

The Bombieri-Vinogradov theorem asserts that $EH[\theta]$ holds for all $0 < \theta < 1/2$, while the Elliott-Halberstam conjecture asserts that $EH[\theta]$ holds for all $0 < \theta < 1$.

In Polymath8a, the sieve weight was constructed in terms of a smooth compactly supported one-variable function $f: [0,+\infty) \to \mathbb{R}$. A key innovation in Maynard’s work is to replace the sieve with one constructed using a smooth compactly supported multi-variable function $f: [0,+\infty)^{k_0} \to \mathbb{R}$, which affords significantly greater flexibility. More precisely, we will show:

Proposition 5 (Sieve asymptotics) Suppose that

holds for some fixed

, and set

for some fixed

. Let

be a fixed symmetric smooth function supported on the simplex

Then one can find

obeying the bounds (1), (2) with

where we use the shorthand

for the mixed partial derivatives of

.

(In fact, one can obtain asymptotics for (1), (2), rather than upper and lower bounds.)

(One can work with non-symmetric functions , but this does not improve the numerology; see the remark after (7.1) of Maynard’s paper.)

We prove this proposition in Section 2. We remark that if one restricts attention to functions of the form

for smooth and supported on

, then

and

and this claim was already essentially established back in this Polymath8a post (or see Proposition 4.1 and Lemma 4.7 of the Polymath8a paper for essentially these bounds). In that previous post (and also in the paper of Farkas, Pintz, and Revesz), the ratio was optimised in this one-dimensional context using Bessel functions, and the method was unable to reach

without an improvement to Bombieri-Vinogradov, or to reach

even on Elliott-Halberstam. However, the additional flexibility afforded by the use of multi-dimensional cutoffs allows one to do better.

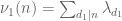

Combining Proposition 5 with Lemma 4, we obtain the following conclusion. For each , let

be the quantity

where ranges over all smooth symmetric functions

that are supported on the simplex

. Equivalently, by substituting

and using the fundamental theorem of calculus, followed by an approximation argument to remove the smoothness hypotheses on

, we have

where ranges over all bounded measurable functions supported on

. Then we have

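Corollary 6 Let $0 < \theta < 1$ be such that $EH[\theta]$ holds, and let $k_0 \geq 2$, $j_0 \geq 1$ be integers such that

$$M_{k_0} > \frac{2 j_0}{\theta}.$$

Then $DHL[k_0,j_0+1]$ holds.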

To use this corollary, we simply have to locate test functions that give as large a lower bound for

as one can manage; this is a purely analytic problem that no longer requires any further number-theoretic input.
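As a sanity check on this variational problem, here is a short worked computation for the simplest possible test function. It is only a sketch, and it assumes the form of the functional used in Maynard's paper for symmetric $F$ supported on the simplex $\Delta_k = \{t_i \geq 0,\ t_1 + \cdots + t_k \leq 1\}$, namely the ratio $k J_k(F)/I_k(F)$ with $I_k(F) = \int_{\Delta_k} F^2$ and $J_k(F) = \int_{\Delta_{k-1}} \big(\int_0^\infty F \, dt_k\big)^2 \, dt_1 \cdots dt_{k-1}$. The names $I_k$, $J_k$ are introduced here for illustration.

```latex
\begin{aligned}
F &= 1_{\Delta_k}, \\
I_k(F) &= \operatorname{vol}(\Delta_k) = \frac{1}{k!}, \\
J_k(F) &= \int_{\Delta_{k-1}} (1 - t_1 - \cdots - t_{k-1})^2 \, dt_1 \cdots dt_{k-1}
        = \frac{2}{(k+1)!}, \\
\frac{k \, J_k(F)}{I_k(F)} &= k \cdot \frac{2}{(k+1)!} \cdot k! = \frac{2k}{k+1} < 2 .
\end{aligned}
```

So the constant function only certifies a lower bound of $2k/(k+1)$, which stays below $2$ for every $k$. Since the criterion requires the supremum to exceed $2 j_0/\theta \geq 2$, a genuinely non-constant test function is needed even in the most favourable case.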

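In particular, Theorem 3 follows from the following lower bounds:

Proposition 7 We have the following lower bounds:

- $M_5 > 2$.
- $M_{105} > 4$.
- $M_{k_0} \geq \log k_0 - 2\log\log k_0 - 2$ for all sufficiently large $k_0$.

The first two cases of this proposition are obtained numerically (see Section 7 of Maynard’s paper), by working with functions $F$ of the special form

$$F(t_1,\ldots,t_{k_0}) = \sum_{i=1}^{d} a_i (1-P_1)^{b_i} P_2^{c_i}$$

for various real coefficients $a_i$ and non-negative integers $b_i, c_i$, where

$$P_1 := t_1 + \cdots + t_{k_0}$$

and

$$P_2 := t_1^2 + \cdots + t_{k_0}^2.$$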

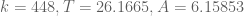

In Maynard’s paper, the ratio

in this case is computed to be

where is the

matrix with entries

,

is the

matrix with

entry equal to

where

and is the

matrix with

entry equal to

where is the quantity

One then optimises the ratio by linear programming methods (a similar idea appears in the original paper of Goldston, Pintz, and Yildirim) to obtain a lower bound for $M_{k_0}$ for $k_0 = 5$ and $k_0 = 105$.

The final case is established in a different manner; we give a proof of the slightly weaker bound

in Section 3.
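To get a feel for how the asymptotic lower bound translates into a size for the tuple, the following sketch finds the smallest $k$ at which the expression $\log k - 2\log\log k - 2$ from Maynard's paper exceeds the threshold $2m/\theta$ required to detect $m+1$ primes. Two assumptions should be stressed: the function names are illustrative, and the lower bound is only asserted for sufficiently large $k$, so the numbers printed are heuristic indications of growth rather than proven values.

```python
import math


def asymptotic_lower_bound(k):
    """The expression log k - 2 log log k - 2 (increasing once log k > 2)."""
    return math.log(k) - 2.0 * math.log(math.log(k)) - 2.0


def smallest_k(m, theta):
    """Smallest integer k >= 9 with asymptotic_lower_bound(k) > 2*m/theta."""
    target = 2.0 * m / theta
    lo = hi = 8  # log 8 > 2, so the expression is increasing from here on
    while asymptotic_lower_bound(hi) <= target:
        lo, hi = hi, hi * 2
    # Invariant: bound(lo) <= target < bound(hi); bisect on the integers.
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if asymptotic_lower_bound(mid) > target:
            hi = mid
        else:
            lo = mid
    return hi


for m in (1, 2, 3):
    k = smallest_k(m, theta=0.5)
    # Compare with the heuristic growth rate m^2 * e^(4m).
    print(m, k, k / (m * m * math.exp(4 * m)))
```

With $\theta = 1/2$ and $m = 1$ the crossover happens at a $k$ in the tens of thousands, far above the value $105$ obtained numerically, which shows how much is gained by optimising the test function directly for small $k$.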

— 2. Sieve asymptotics —

We now prove Proposition 5. We use a Fourier-analytic method, similar to that in this previous blog post.

The sieve we will use is of the form

where denotes the square-free integers and

is the Möbius function. This should be compared with the sieve

used in the previous blog post, which basically corresponds to the special case .

We begin with (1). Using (10), we may rearrange the left-hand side of (1) as

Observe that as the numbers have no common factor when

, the inner sum vanishes unless the quantities

are coprime, in which case this inner sum can be estimated as

Also, at least one of the two products involving will vanish unless one has

Let us first deal with the contribution of the error term to (1). This contribution may be bounded by

which sums to ; since

and

, we conclude that this contribution is negligible.

To conclude the proof of (1), it thus suffices to show that

Next, we smoothly extend the function to a smooth compactly supported function

, which by abuse of notation we will continue to refer to as

. By Fourier inversion, we may then express

in the form

where and where

is a smooth function obeying the rapid decay bounds

for any fixed . The left-hand side of (11) may then be rewritten as

where and

We may factorise as an Euler product

In particular, we have the crude bound

combining this with (13) we see that the contribution to (14) in which or

is negligible, so we may restrict the integral in (14) to the region

. In this region, we have the Euler product approximations

where we have used the bound and the asymptotic

for

. Since also

, we conclude that

Using (13) again to dispose of the error term, and then using (13) once more to remove the restriction to

, we thus reduce to verifying the identity

But from repeatedly differentiating (12) under the integral sign, one has

and thus

integrating this for using Fubini’s theorem (and (13)), the claim then follows from (5). This concludes the proof of (1).

Now we prove (2). We will just prove the claim for , as the other cases follow similarly using the symmetry hypothesis on

. The left-hand side of (2) may then be expanded as

Observe that the summand vanishes unless (note that

is comparable to

and thus exceeds

). So we may simplify the above expression to

As in the estimation of (1), the summand vanishes unless are coprime, and if

Let obey the above constraints. For any modulus

, define the discrepancy

Since and

is fixed, the hypothesis

implies that

for any fixed . On the other hand, the sum

can, by the Chinese remainder theorem, be rewritten in the form

and is a primitive residue class; note that

does not exceed

. By (15), this expression can then be written as

Let us first control the error term, which may be bounded by

Note that for any , there are

choices of

for which (17) holds. Thus we may bound the previous expression by

By the Cauchy-Schwarz inequality and (16), this expression may be bounded by

for any fixed . On the other hand, we have the crude bound

, as well as the standard estimate

(see e.g. Corollary 2.15 of Montgomery-Vaughan). Putting all this together, we conclude that the contribution of the error term to (2) is negligible. To conclude the proof of (2), it thus suffices to show that

But this can be proven by repeating the arguments used to prove (11) (with replaced by

, and

replaced by

); the presence of the Euler totient function causes some factors of

in that analysis to be replaced by

, but this turns out to have a negligible impact on the final asymptotics since

. This concludes the proof of (2) and hence Proposition 5.

Remark 1 An inspection of the above arguments shows that the simplex

can be enlarged slightly to the region

however this only leads to a tiny improvement in the numerology. It is interesting to note however that in the

case,

is the unit square

, and by taking

and taking

close to

, one can come “within an epsilon” of establishing

(and in particular, the twin prime conjecture) from the full Elliott-Halberstam conjecture; this fact was already essentially observed by Bombieri, using the weight

rather than the Selberg sieve. (Strictly speaking, to establish (1) in this context, one needs the Elliott-Halberstam conjecture not only for

, but also for other arithmetic functions with a suitable Dirichlet convolution

structure; we omit the details.)

Remark 2 It appears that the

conjecture studied in Polymath8a can serve as a substitute for

in Corollary 6, except that one also has to impose an additional constraint on the function

(or

), namely that it is supported in the cube

(in order to keep the moduli involved appropriately smooth). Perhaps as a first approximation, we should ignore the role of

, and just pretend that

is as good as

. In particular, inserting our most optimistic value of

obtained by Polymath8, namely

, we can in principle take

as large as

, although this only is a

improvement or so over the Bombieri-Vinogradov inequality.

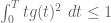

— 3. Large computations —

We now give a proof of (9) for sufficiently large . We use the ansatz

where is the tensor product

and is supported on some interval

and normalised so that

The function is clearly symmetric and supported on

. We now estimate the numerator and denominator of the ratio

that lower bounds .

For the denominator, we bound by

and use Fubini’s theorem and (8) to obtain the upper bound

and thus

Now we observe that

whenever , and so we have the lower bound

We interpret this probabilistically. Let be independent, identically distributed non-negative real random variables with probability density

; this is well-defined thanks to (18). Observe that

is the joint probability density of

, and so

We will lower bound this probability using the Chebyshev inequality. (In my own calculations, I had used the Hoeffding inequality instead, but it seems to only give a slightly better bound in the end for (perhaps saving one or two powers of

).) In order to exploit the law of large numbers, we would like the mean

of

, where

, to be less than

:

The variance of is

times the variance of a single

, which we can bound (somewhat crudely) by

Thus by Chebyshev’s inequality, we have

To clean things up a bit we bound by

to obtain the simpler bound

assuming now that . In particular,

, so we have the cleaner bound

To summarise, we have shown that

and

One can optimise this carefully to give (8) (as is done in Maynard’s paper), but for the purposes of establishing the slightly weaker bound (9), we can use of the form

with and

. With the normalisation (20) we have

so

and

and thus

while

which gives the claim.

161 comments


19 November, 2013 at 11:04 pm

Terence Tao

There are a couple of initial directions to proceed from here, I think. One is to get better bounds on $M_k$; as pointed out by Eytan in the previous thread, Maynard is working with functions of the first two symmetric polynomials $P_1 = t_1 + \cdots + t_k$ and $P_2 = t_1^2 + \cdots + t_k^2$, but in principle all the symmetric functions are available. (Though perhaps before doing that we should at least get to the point where we can replicate Maynard’s computations; note that he has supplied a Mathematica notebook in the source code of his arXiv file, although it is slightly nontrivial to extract it from the arXiv interface.) Another is to use MPZ instead of Bombieri-Vinogradov, but this puts an additional constraint on the test function F that has to be accounted for, and is likely to have only a modest impact (an improvement of about 5% or so). A third thing to do is to try to enlarge the allowable support of the function F, as per Remark 1; this is likely to be a very modest gain (basically it should shift $k_0$ downward by 1 or so), but this could be significant for bounding $H_1$ on EH, as it may shift $k_0$ down from 5 to 4 (bringing the $H_1$ bound from 12 to 8).

Indeed, it seems that focusing on the $H_1$ case (assuming EH) may well be the best place to start, as the numerics should be fast and one can work a certain number of things out by hand in such low-dimensional cases.

20 November, 2013 at 3:51 am

Andrew Sutherland

With Maynard’s permission, I have posted a copy of his Mathematica notebook source as a text file here.

20 November, 2013 at 6:19 am

Anonymous

Any chance I can get you (or anyone else) to convert the text document into a notebook? I’m totally new to Mathematica and would like to play around with a fully working notebook. (I’m using v9.0 in case it is important.) [Sorry for interrupting the thread.]

20 November, 2013 at 7:51 am

Andrew Sutherland

You should be able to just save the file with the extension .nb and mathematica will be happy to load it as a notebook.

20 November, 2013 at 8:09 am

Anonymous

Thank you, Andrew.

@Terry: Just remove this post and my previous question if you want.

20 November, 2013 at 10:16 am

James Maynard

I don’t think you can get to $k_0 = 4$ (under EH) using just Remark 1 and higher symmetric polynomials (this seems to get only about half way numerically). That said, there are various possible small modifications (such as those you mention below) which might give enough of an improvement.

20 November, 2013 at 11:36 am

Vít Tuček

Did you try nonpolynomial symmetric functions? There is some work done on symmetric orthogonal polynomials on the standard simplex. See e.g. the section 3.3 of https://www.sciencedirect.com/science/article/pii/S0021904504002199 and https://www.sciencedirect.com/science/article/pii/S0377042700005045

20 November, 2013 at 1:03 pm

Eytan Paldi

A related idea is to use a change of variables so that the weight function lives on the unit ball (which is perhaps simpler than the simplex).

20 November, 2013 at 3:16 pm

Vít Tuček

That’s precisely what is happening in the articles I’ve linked. The author then “constructs” some invariant orthogonal polynomials on the standard simplex. The problem is to find the matrix of with respect to the basis of orthogonal symmetric polynomials.


Of course, we can always take arbitrary polynomials in elementary symmetric functions (which give us all invariant polynomials) and evaluate the integrals numerically.

20 November, 2013 at 3:24 pm

James Maynard

I only looked very briefly at the numerical optimization with non-polynomial functions.

We know (Weierstrass) that polynomial approximations will converge to the optimal function. Moreover, I found that the numerical bounds from polynomial approximations seemed to converge quickly.

For $k = 5$, it is easy to use approximations involving higher symmetric polynomials, but there is only a very limited numerical improvement after having taken a low-degree approximation.

For $k = 105$ things are more complicated, and convergence is a bit slower, so if there is some nicer basis of symmetric functions maybe that’s worth looking at. That said, I would have guessed that it is computationally feasible to include higher degree symmetric polynomials and get a bound that is accurate enough to get the globally best value of $k$ (if we’re only following the method in my paper; with new ideas, such as MPZ, this might no longer be true).

20 November, 2013 at 3:42 pm

Eytan Paldi

There is no need for numerical evaluation, since any monomial over the simplex is transformed to a monomial over the unit ball whose integral is separable (by using spherical coordinates), with an explicit expression in terms of beta functions (in this case factorials).
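The closed form alluded to here can be written down directly. As a hedged illustration (this uses the classical Dirichlet integral formula on the simplex rather than the change of variables to the ball discussed in this thread, and the function names are hypothetical), the integral of $t_1^{a_1} \cdots t_k^{a_k}$ over $\{t_i \geq 0,\ t_1 + \cdots + t_k \leq 1\}$ equals $a_1! \cdots a_k! / (k + a_1 + \cdots + a_k)!$ for non-negative integer exponents, so everything can be kept in exact rational arithmetic:

```python
from fractions import Fraction
from math import factorial


def simplex_monomial_integral(exponents):
    """Exact integral of prod t_i**a_i over {t_i >= 0, sum t_i <= 1}.

    Uses the Dirichlet formula  prod(a_i!) / (k + sum(a_i))!  for
    non-negative integer exponents a_1, ..., a_k.
    """
    k = len(exponents)
    numerator = 1
    for a in exponents:
        numerator *= factorial(a)
    return Fraction(numerator, factorial(k + sum(exponents)))


def power_sum_p2_integral(k):
    """Integral of P_2 = t_1^2 + ... + t_k^2 over the simplex, by symmetry."""
    one_term = simplex_monomial_integral((2,) + (0,) * (k - 1))
    return k * one_term


print(simplex_monomial_integral((0, 0)))  # area of the triangle: 1/2
print(simplex_monomial_integral((1, 0)))  # integral of t_1 over the triangle: 1/6
print(power_sum_p2_integral(5))
```

Any polynomial in the symmetric functions can be expanded into monomials and integrated term by term this way, which is one route to exact entries for the matrices in the optimisation problem, at the cost of a number of terms that grows quickly with the degree.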

19 November, 2013 at 11:14 pm

Polymath 8 – a Success! | Combinatorics and more

[…] Tao launched a followup polymath8b to improve the bounds for gaps between primes based on Maynard’s […]

19 November, 2013 at 11:21 pm

Gil Kalai

Naive questions: 1) Do the small gaps results apply to primes in arithmetic progressions? 2) Could there be a way to bootstrap results for k-tuples of prime in small intervals to get back results on 2-tuples. (The k-tuples result feels in some sense also stronger than the twin prime conjecture.)

20 November, 2013 at 8:03 am

Terence Tao

For (1), this should be possible. The original Goldston-Pintz-Yildirim results were extended to arithmetic progressions by Freiberg (http://arxiv.org/abs/1110.6624) and to more general sets of Bohr type by my student Benatar (http://arxiv.org/abs/1305.0348). In Maynard’s paper, it is noted that for the purposes of getting finite values for $H_m$, the set of primes can be replaced by any other set that obeys a Bombieri-Vinogradov type theorem (at any level of distribution, not necessarily as large as 1/2), which includes the primes in arithmetic progressions (and also primes in fairly short intervals).

There may be some interplay between the k-tuple results and the 2-tuple results, but note that the set of primes we can detect in a k-tuple is rather sparse, we only catch about primes in such a tuple. Basically, Maynard’s construction gives, for each

primes in such a tuple. Basically, Maynard’s construction gives, for each  and each admissible tuple

and each admissible tuple  , a sieve weight

, a sieve weight  (which is basically concentrated on a set in

(which is basically concentrated on a set in ![[x,2x]](https://s0.wp.com/latex.php?latex=%5Bx%2C2x%5D&bg=ffffff&fg=545454&s=0&c=20201002) of density about

of density about  ) with the property that if

) with the property that if ![n \in [x,2x]](https://s0.wp.com/latex.php?latex=n+%5Cin+%5Bx%2C2x%5D&bg=ffffff&fg=545454&s=0&c=20201002) is chosen randomly with probability density equal to

is chosen randomly with probability density equal to  , then

, then

(or, in the original notation, ) for

) for  , which by the first moment method shows that with positive probability at least

, which by the first moment method shows that with positive probability at least  of the

of the  will be prime. As

will be prime. As  increases, it is true that we get a few more primes this way, but they are spread out in a much longer interval, and the number of

increases, it is true that we get a few more primes this way, but they are spread out in a much longer interval, and the number of  with this many primes also gets sparser, so it’s not clear how to perform the tradeoff.

with this many primes also gets sparser, so it’s not clear how to perform the tradeoff.

But this does remind me of a possible idea I had to shave 1 off of . Suppose for instance one was trying to catch two primes close together in the tuple

. Suppose for instance one was trying to catch two primes close together in the tuple  . Currently, we need estimates of the form

. Currently, we need estimates of the form

to catch two primes at distance at most 8 apart; one can also replace the four constants by other constants that add up to 1, although this doesn’t seem to help much. To get primes distance at most 6 apart, one would ordinarily need to boost three of these constants up to

by other constants that add up to 1, although this doesn’t seem to help much. To get primes distance at most 6 apart, one would ordinarily need to boost three of these constants up to  (and drop the last one down to zero). But suppose we had bounds of the form

(and drop the last one down to zero). But suppose we had bounds of the form

Then one could get a gap of at most 6, and not just 8, because the first four estimates show that there are two primes in n,n+2,n+6,n+8 with probability greater than , and the last estimate excludes the possibility that this only occurs for the n,n+8 pair. Unfortunately with sieve theory it is difficult to get really good bounds on

, and the last estimate excludes the possibility that this only occurs for the n,n+8 pair. Unfortunately with sieve theory it is difficult to get really good bounds on  , so I don’t know whether this idea will actually work.

, so I don’t know whether this idea will actually work.

20 November, 2013 at 10:29 am

Terence Tao

Here is a slight refinement of the last idea. Assume EH, and suppose we want to improve the current bound of to

to  . Assume this were false, thus after some point there are no pairs of primes of distance 10 or less apart. Thus, given any large random integer n (chosen using a probability distribution

. Assume this were false, thus after some point there are no pairs of primes of distance 10 or less apart. Thus, given any large random integer n (chosen using a probability distribution  ), the events “n prime”, “n+2 prime”, “n+6 prime”, “n+8 prime”, “n+12 prime” are all disjoint, except for the pair “n prime” and “n+12 prime” which are allowed to overlap. If we then let

), the events “n prime”, “n+2 prime”, “n+6 prime”, “n+8 prime”, “n+12 prime” are all disjoint, except for the pair “n prime” and “n+12 prime” which are allowed to overlap. If we then let  be the probability that

be the probability that  is prime, and

is prime, and  be the probability that n and n+12 are simultaneously prime, we thus have the inequality

be the probability that n and n+12 are simultaneously prime, we thus have the inequality

So if we can get a lower bound on

which is asymptotically better than an upper bound for

then we can reduce from 12 to 10. The tricky thing though is still how to get a good upper bound on

from 12 to 10. The tricky thing though is still how to get a good upper bound on  . But perhaps there is now a fair amount of “room” in the ratio between (1) and

. But perhaps there is now a fair amount of “room” in the ratio between (1) and  if we use more symmetric polynomials and enlarge the simplex as per Remark 1; the computations in Maynard’s paper show that this ratio is at least

if we use more symmetric polynomials and enlarge the simplex as per Remark 1; the computations in Maynard’s paper show that this ratio is at least  which gives almost no room to play with, but perhaps with the above improvements one can get a more favorable ratio.

which gives almost no room to play with, but perhaps with the above improvements one can get a more favorable ratio.

20 November, 2013 at 10:39 am

James Maynard

Another comment in the same vein:

Instead of using we can use

we can use  . We can therefore get a small improvement numerically from removing e.g. the contribution when all of

. We can therefore get a small improvement numerically from removing e.g. the contribution when all of  have a small prime factor.

have a small prime factor.

20 November, 2013 at 3:48 pm

Terence Tao

I thought about this idea a bit more, but encountered a problem in the EH case: if one wants a meaningful lower bound on the second term, then one starts wanting to estimate sums such as  for various values of  , but this is only plausible if the sieve level  of  is a bit below the threshold of  (by a factor of  ), but then one is probably losing more from having a reduced sieve level  (as it now has to be below the optimal value of  that one wants to pick if one wants to get the maximum benefit of EH) than one is gaining from removing this piece.

However, the situation is better if one is starting from Bombieri-Vinogradov or MPZ as the distribution estimate (so one would now be trying to beat the 600 bound rather than the 12 bound). Here the sieve level  is more like  and so there is actually a fair bit of room to insert some divisibility constraints  and still be able to control  . The bad news is that the final bound one gets here could be really small, something like  of the main term, which looks too tiny to lead to any actual improvement in the H value.

21 November, 2013 at 6:51 pm

James Maynard

Good point. Maybe (I haven’t really thought about this) we can use exponential sum estimates to get us a bit of extra room, but this is already sounding like quite a lot of work for what would be only a very small numerical improvement.

21 November, 2013 at 7:15 am

Terence Tao

I found a way to get an upper bound on the quantity

(and hence  ) but I don’t know how effective it will be yet. Basically, the idea is to observe that if  is given in terms of a multidimensional GPY cutoff function f as in equation (10) in the blog post, then when n and n+12 are both prime, we have  , whenever  is given in terms of another multidimensional GPY cutoff function f’ with the property that  for all  . Then

The last step is of course crude, but it crucially replaces a sum over two primality conditions (which is hopeless to estimate with current technology) with a sum over one primality condition (which we do know how to control, assuming a suitable distribution hypothesis). The final expression may be computed by Proposition 5 of the blog post to be

and so the probability  above may be upper bounded by the ratio

If we set f’=f then this gives the trivial upper bound  , but the point is that we can do better by optimising in f’.

Now the optimisation problem for f’ is much easier than the main optimisation for  : we need to optimise the square-integral of  subject to  being supported on the simplex and having the same trace as  on the boundary  . (No symmetry hypothesis needs to be imposed on f’.) It is not difficult (basically fundamental theorem of calculus + converse to Cauchy-Schwarz) to see that the extremiser occurs with

and so we have

But I don’t know yet how strong this bound will be in practice; one needs  to stay a little bit away from the extreme points of the simplex in order for the denominator  not to kill us. (On the other hand, as observed earlier, the bound can’t get worse than  .)

In the large k regime, the analogue of  has size about  ; I’m hoping that the bound for  is significantly smaller, indeed independence heuristics suggest it should be something like  . It’s dangerous of course to extrapolate this to the k=5 case but one can hope that it is still the case that  is small compared to  in this case.

21 November, 2013 at 6:39 pm

James Maynard

Nice! If we’re only using Bombieri-Vinogradov (or MPZ) then presumably we can use a hybrid bound, where if  is small we use your argument as above, but if  is larger we instead use

(i.e. rather than forcing n to be prime and n+12 an almost-prime with a very small level-of-distribution, we relax this to n and n+12 being almost-primes.)

If we’re using Elliott-Halberstam then we get no use from this, since the primes have (apart from an  ) the same level-of-distribution results available.

21 November, 2013 at 7:03 pm

Terence Tao

Certainly we have the option of deleting both primality constraints, but I’m not sure how one can separate the large  case from the small  case while retaining the positivity of all the relevant components of  , which is implicitly used in order to safely drop the constraints that n is prime or n+12 is prime.

In the large k regime, if we take the sieve from your Section 8, but restrict it to a smaller simplex, such as  , then it does appear that the analogue of  scales like  , which it should (morally we should have  , though with current methods we could only hope for an upper bound that is of this order of magnitude). It would be interesting to see what numerics we can get in the k=5 case though.

One nice thing is that all the bounds for  , etc. are quadratic forms in the function f, so the same linear programming methods in your paper will continue to be useful here, without need for more nonlinear optimisation.

21 November, 2013 at 7:25 pm

James Maynard

I guess I was imagining a Cauchy-Schwarz step:

and then using the separate bounds in for the different sums.

21 November, 2013 at 8:19 pm

Terence Tao

Ah, I see. That could work, although one loses a factor of 2 from using the inequality  and so splitting might not be efficient in practice – but I guess we can experiment with all sorts of combinations to see what works numerically and what doesn’t.

21 November, 2013 at 9:00 pm

James Maynard

We can regain the factor of two by being a bit more careful. Let  , where  is  provided  is small, and  is the remainder. Then, expanding the square, we would use your bound for all the terms except the  terms. Of course, it might be that in practice the benefit of doing this is small.

(PS: Apologies for the formatting errors I’ve had in my posts – I’ll try to check them more carefully)

21 November, 2013 at 9:30 pm

James Maynard

Ignore my last comment – this loses positivity.

21 November, 2013 at 9:30 pm

Terence Tao

Hmm… but in general, the  do not have a definite sign, and so I don’t see how to discard the cutoffs  or  in the cross terms  unless one used Cauchy-Schwarz (which would be a little bit more efficient than the  inequality, though not by too much).

22 November, 2013 at 7:35 am

James Maynard

Actually, I think the Cauchy step I suggested above is rubbish (I was clearly more tired last night than I realized). We would lose the vital filtering out of small primes, or we would be doing worse than just going one way or the other.

I think my concern was that with only a limited amount of room for divisibility constraints we might only be filtering out factors of  which are typically less than  for a constant  , and potentially losing a factor of  . (I’m thinking about the case when  is large). I think this is what would be happening with the original GPY weights, but our weights are constructed so that the main contribution is when  is of size  (some suitable constant  ), which gives us enough room that we don’t need to worry here.

Presumably this would give an improvement to  of size about  (ignoring all log factors).

20 November, 2013 at 3:57 pm

Tristan Freiberg

Re (1), depends what exactly one means by GPY for APs, but GPY extended their original work to primes in APs — wasn’t me. My understanding is that these amazing new results apply equally well to admissible k-tuples of linear forms g_1x + h_1,…,g_kx + h_k, not just monic ones. I’m sure we’ll see lots of neat applications of this that we can get almost for free after Maynard’s spectacular proof!

20 November, 2013 at 6:20 am

timur

In the second sentence, is it meant that H_m is the least quantity such that there are infinitely many intervals of length H_m that contain m+1 (not m) or more primes?

[Corrected, thanks – T.]

20 November, 2013 at 8:22 am

Pace Nielsen

Dear Terry,

Could you expound a little bit on your Remark 1? If we are within epsilon of DHL[2,2] under EH, why don’t we have DHL[3,2] under EH?

20 November, 2013 at 9:06 am

Terence Tao

Good question! There is a funny failure of monotonicity when one tries to use the boost in Remark 1, which I’ll try to explain a bit more here.

Start with the task of trying to prove DHL[2,2], or more precisely the twin prime problem of finding two primes in  . The way we try to do this is to pick a sieve weight  which basically has the form

for some sieve coefficients  whose exact value we will ignore for now. We then want to control sums such as

The first sum can be easily estimated in practice, so let us ignore it for now and focus on the second two sums. At first glance, because of the two divisibility constraints  , it looks like we need  to have any real hope of controlling the sum; but note that the weight  forces  to be prime and so  will be  , so we actually only need  to control the second sum, and similarly  to control the first sum. The upshot of this is that the function  in the blog post only needs to be supported on the square  rather than the triangle  (and if one then sets F equal to 1 on this square, one gets  , coming within an epsilon of the twin prime conjecture on EH).
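To get a feel for how much the square buys over the triangle, here is a rough numerical sketch in Python. It assumes the relevant k=2 functional is the ratio (J_1 + J_2)/I from Maynard's paper, with I the integral of F^2 and J_m the integral of the square of F integrated in the m-th variable; that normalisation, the function name `ratio` and the grid size are assumptions made for illustration, not taken from the comment above.

```python
def ratio(F, n=200):
    """Midpoint-rule estimate of (J_1 + J_2) / I for a function F on [0,1]^2."""
    h = 1.0 / n
    # Sample F at the midpoints of an n-by-n grid; vals[i][j] = F(t_1, t_2).
    vals = [[F((i + 0.5) * h, (j + 0.5) * h) for j in range(n)] for i in range(n)]
    # I = integral of F^2 over the unit square.
    I = sum(v * v for row in vals for v in row) * h * h
    # J_1: integrate out t_1 (index i), square, then integrate over t_2.
    J1 = sum((sum(vals[i][j] for i in range(n)) * h) ** 2 for j in range(n)) * h
    # J_2: the same with the roles of t_1 and t_2 swapped.
    J2 = sum((sum(row) * h) ** 2 for row in vals) * h
    return (J1 + J2) / I


def square(t1, t2):
    # F = 1 on the whole square [0,1]^2.
    return 1.0


def triangle(t1, t2):
    # F = 1 on the triangle t1 + t2 <= 1, zero elsewhere.
    return 1.0 if t1 + t2 <= 1.0 else 0.0


print("square:  ", ratio(square))
print("triangle:", ratio(triangle))
```

Under these assumptions the constant function on the square gives a ratio of 2, while the same function restricted to the triangle only gives about 4/3 (up to discretisation error), which is one way to see why the enlarged support matters so much in the 2-tuple case.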

[I'm glossing over some details as to how to deal with the allegedly easy first sum. If  then this sum is indeed easy to estimate, but if  can exceed  then one has to proceed more carefully. I think what one does here is try to use a separation of variables trick, breaking up  into pieces of the form  , where  is a divisor sum of  only, and  is a divisor sum of  only, and then use an Elliott-Halberstam hypothesis for  or  (rather than  ) to control error terms; this should get us back to the weaker constraints  ,  .]

Now we turn to DHL[3,2], playing with the tuple n,n+2,n+6. Now we use a sieve of the form

and want to estimate the sums

Again ignoring the first sum as being presumably easy to handle, we now need the constraints  in order to control the latter three sums respectively (rather than  ). In terms of the cutoff function F, this means that we may enlarge the support from the simplex  to  . But note now that we have a constraint  present for the 3-tuple problem which was not present in the 2-tuple problem, because we had no need to control  in the 2-tuple problem. So there is a failure of monotonicity; the simple example of ![F(t_1,t_2) = 1_{[0,1]}(t_1) 1_{[0,1]}(t_2)](https://s0.wp.com/latex.php?latex=F%28t_1%2Ct_2%29+%3D+1_%7B%5B0%2C1%5D%7D%28t_1%29+1_%7B%5B0%2C1%5D%7D%28t_2%29&bg=ffffff&fg=545454&s=0&c=20201002) which gets us within an epsilon of success for 2-tuples on EH doesn't directly translate to something for 3-tuples.

20 November, 2013 at 11:50 am

Terence Tao

Thinking about it a bit more, this lack of monotonicity is probably a sign that the arguments are not as efficient as they could be. In what one might now call the “classical” GPY/Zhang arguments, one relies on the Elliott-Halberstam conjecture just for the von Mangoldt function $\Lambda$. To obtain the enlargement of the simplex, one would also need the EH conjecture for other divisor sums  , but this type of generalisation of EH is a standard extension (see e.g. Conjecture 1 of Bombieri-Friedlander-Iwaniec) and so this would not be considered that much more “expensive” than EH just for von Mangoldt (although the latter is pretty expensive at present!).

To recover monotonicity, one would have to also assume EH for hybrid functions such as  . One could simply conjecture EH for such beasts, but this now looks considerably more “expensive” than the previous versions of EH (indeed, it may already be stronger than the twin prime conjecture, especially if the level of the divisor sum is allowed to be large). On the other hand, perhaps a Bombieri-Vinogradov type theorem for these hybrid functions is not unreasonable to hope for (though I am not sure exactly how this would help in a situation in which we already had EH for the non-hybrid functions).

20 November, 2013 at 12:03 pm

Pace Nielsen

Thank you for this explanation. It makes a lot of sense.

I wonder if taking different admissible 3-tuples (e.g. {0,2,6} and {0,4,6}) and then weighting them appropriately in the sieve would allow further refinement of the support simplex.

20 November, 2013 at 2:43 pm

Terence Tao

Unfortunately these tuples somehow live in completely different worlds and don’t seem to have much interaction. Specifically, the only chance we have to make $n, n+2, n+6$ prime for large n is if $n \equiv 2 \pmod 3$, while the only chance we have to make $n, n+4, n+6$ prime is if $n \equiv 1 \pmod 3$. So any sieve that “sees” the first tuple in any non-trivial way would necessarily be concentrated on $n \equiv 2 \pmod 3$, and any sieve that sees the second tuple would be concentrated instead on $n \equiv 1 \pmod 3$. The distribution of primes in $n \equiv 1 \pmod 3$ and in $n \equiv 2 \pmod 3$ are more or less independent (one may at best save a multiplicative factor of two in the error terms by treating them together, which is negligible in the grand scheme of things), so I don’t think one gains much from a weighted average of sieves adapted to two different residue classes mod 3.
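The mod 3 obstruction is easy to check by hand, but here is a minimal Python sketch of it for anyone who wants to try other tuples or moduli. The helper name `surviving_classes` is made up for illustration; it lists the residue classes of n modulo p in which none of the shifted values n+h is divisible by p, which is a necessary condition for all of them to be prime once n is large.

```python
def surviving_classes(shifts, p):
    """Residues a mod p such that no a + h (h in shifts) is divisible by p."""
    return [a for a in range(p) if all((a + h) % p != 0 for h in shifts)]


# The two 3-tuples under discussion, tested against the prime 3.
for shifts in [(0, 2, 6), (0, 4, 6)]:
    print(shifts, "->", surviving_classes(shifts, 3))
```

For the tuple (0, 2, 6) only the class 2 mod 3 survives, and for (0, 4, 6) only the class 1 mod 3, so the two tuples are indeed detected on disjoint sets of n.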

20 November, 2013 at 5:22 pm

Pace Nielsen

Good point. I should have used {0,2,6} and {2,6,8} together instead. I think the idea in my head was something along the lines of your earlier post, about using probabilities in some ways. This would require our weights to discriminate against both 0 and 8 being prime simultaneously (in this case), and that appears to be a difficult task.

20 November, 2013 at 9:09 am

Sylvain JULIEN

You might be interested in the following heuristics conditional on a rather stronger form of Goldbach’s conjecture (that I call NFPR conjecture) explaining roughly why $H_{k}$ should be $O(k\log k)$: http://mathoverflow.net/questions/132973/would-the-following-conjectures-imply-lim-inf-n-to-inftyp-nk-p-n-ok-lo

It would be interesting to try to establish rigorously the presumably best possible upper bound $r_{0}(n)=O*(\log^{2}n)$ that would settle both NFPR conjecture and Cramer’s conjecture: maybe you could start a future Polymath project to do so?

20 November, 2013 at 12:15 pm

Terence Tao

One thought on the variational problem; for sake of discussion let us take k=3. The quantity  is then the maximum value of

for symmetric F(x,y,z) supported on the simplex  subject to the constraint

(As discussed earlier, we can hope to enlarge this simplex to the larger region  , but let us ignore this improvement for now.) In Maynard’s paper, one considers arbitrary polynomial combinations of the first two symmetric polynomials  ,  as candidates for F.

However, we know from Lagrange multipliers (as remarked after (7.1) in Maynard’s paper) that the optimal F must be a constant multiple of

and so in particular takes the form

for a symmetric function G(x,y) of two variables supported on the triangle  . So one could collapse the three-dimensional variational problem to a two-dimensional one, which in principle helps avoid the “curse of dimensionality” and would allow one to numerically explore a greater region of the space of symmetric functions (e.g. by writing everything in terms of G and expanding G into functions of symmetric polynomials such as  ).

It is possible that one could iterate this process and reduce to a one-dimensional variational problem, but it looks messy and I have not yet attempted this.

20 November, 2013 at 3:43 pm

James Maynard

I was unable to iterate the reduction in dimension step more than once. (This is roughly why I was able to solve the eigenfunction equation when  , since it reduces to solving a single variable PDE, but not for  ).

The eigenfunction equation for  looks like

and I failed to get anything particularly useful out of this (although maybe someone else has some clever ideas – I’m far from an expert at this. Differentiating wrt x and y turns this into a two variable PDE). One can do some sort of iterative substitution, but I just got a mess.

20 November, 2013 at 2:22 pm

Anonymous

Notation:

* Use “ ” instead of “ ”. (Also, it is easier to use “ ” than “ ”.)

* Use “ ” instead of “ ”.

20 November, 2013 at 2:49 pm

Eytan Paldi

By representing  as the largest eigenvalue of a certain non-negative integral operator, a simple upper bound for  (implying some limitations of the method) is given by the operator trace.

20 November, 2013 at 3:55 pm

Terence Tao

A cheap way to combine Maynard’s paper with the Zhang/Polymath8a stuff: if ![MPZ[\varpi,\delta]](https://s0.wp.com/latex.php?latex=MPZ%5B%5Cvarpi%2C%5Cdelta%5D&bg=ffffff&fg=545454&s=0&c=20201002) is true, then we have  as  . The reason for this is that the function F (or f) constructed in the large k_0 setting is supported in the cube ![[0,T/k_0]^{k_0}](https://s0.wp.com/latex.php?latex=%5B0%2CT%2Fk_0%5D%5E%7Bk_0%7D&bg=ffffff&fg=545454&s=0&c=20201002) (as well as in the simplex); by construction,  decays like  , so in particular f will be supported on ![[0, \frac{\delta}{1/4+\varpi}]^{k_0}](https://s0.wp.com/latex.php?latex=%5B0%2C+%5Cfrac%7B%5Cdelta%7D%7B1%2F4%2B%5Cvarpi%7D%5D%5E%7Bk_0%7D&bg=ffffff&fg=545454&s=0&c=20201002) for  large enough. This means that the moduli that come out of the sieve asymptotic analysis will be  -smooth, and so MPZ may be used in place of EH much as in Zhang (or Polymath8a).

So using our best verified value 7/600 of $\varpi$, we have the bound  , and using the tentative value 13/1080 this improves to  . Not all that dramatic of an improvement, but it does show that the Polymath8a stuff is good for something :).

20 November, 2013 at 5:31 pm

Terence Tao

The computations in Section 8 of Maynard’s paper should give explicit values of H_m for small m that are fairly competitive with the bound $H_1 \leq 600$, I think.

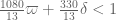

For simplicity let us just work off of Bombieri-Vinogradov (so  is basically 1/2); one can surely push things further by using the MPZ estimates, but let’s defer that to later. The criterion (Proposition 4.2 of Maynard, or Corollary 6 here) is then that  holds whenever  , where  is the diameter of the narrowest admissible k-tuple. For instance,  gives  .
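Since the diameter of the narrowest admissible k-tuple enters the criterion directly, here is a small brute-force sketch in Python for computing it when k is tiny. The function names `is_admissible` and `narrowest_diameter` are illustrative only, and this exhaustive search is nothing like the methods used to find the large tuples discussed in this thread; it is only meant to make the definition concrete.

```python
from itertools import combinations


def is_admissible(shifts):
    """True if, for every prime p, the shifts miss at least one residue class mod p."""
    k = len(shifts)
    # Only primes p <= k can have all p residue classes hit by k shifts.
    for p in range(2, k + 1):
        if all(p % q for q in range(2, p)):  # trial-division primality test
            if len({h % p for h in shifts}) == p:
                return False
    return True


def narrowest_diameter(k):
    """Smallest d for which an admissible k-tuple 0 = h_1 < ... < h_k = d exists."""
    d = k - 1
    while True:
        # Admissibility is translation invariant, so fix the endpoints at 0 and d.
        for middle in combinations(range(1, d), k - 2):
            if is_admissible((0, *middle, d)):
                return d
        d += 1


print([narrowest_diameter(k) for k in range(2, 7)])
```

For k = 2, ..., 6 this returns the diameters 2, 6, 8, 12, 16; in particular k=5 gives 12, the value behind the "12 bound" on EH mentioned earlier in the thread.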

In Section 8 of Maynard, the lower bound

holds for any  such that

where

(here  are the moments  , where  .) It looks like a fairly routine matter to optimise A,T for any given k, and then find the first k with  (to get a  bound), the first k with  (to get a  bound), and so forth.

Actually there is some room to improve things a bit; if one inspects Maynard’s argument more carefully, one can improve (1) to

where  is the variance

and the criterion (1′) is replaced with

which could lead to some modest improvement in the numerology. (I also have an idea on removing the -T in the denominator in (2) and (2′) (or the -T/k in (1) and (1′)), but this is a bit more complicated.)

20 November, 2013 at 9:29 pm

Pace Nielsen

“The computations in Section 8 of Maynard’s paper should give explicit values of H_m for small m that are fairly competitive with the bound H_1 \leq 600, I think.”

Assuming I didn’t make any errors, for  you can get  rather easily with your improved equations. (Take  for example.) I couldn’t get  to work out. Also, I didn’t try to do  so others might want to give it a try.

I didn’t do anything fancy to find the solution for  – just made a lot of graphs. Similarly, for  I just graphed enough points so that I was convinced that the maximum for the RHS of (2) is less than 4.

20 November, 2013 at 9:40 pm

Pace Nielsen

P.S. While I was playing around with trying to actually find the optimal solution, I did notice that making the substitution  (for some variable  , which we may want to replace with an exponential) simplifies things quite a bit. For instance, this allows one to convert (2′) into an inequality of the form  for a function  (which has a number of nice properties).

20 November, 2013 at 10:05 pm

Pace Nielsen

P.P.S. I just discovered the “FindMaximum” function in Mathematica, which concurs with my findings. When  , it says that the RHS of (2) takes a maximum value of  when (2′) holds, at  . On the other hand, when  , then the RHS of (2) is less than 4, when (2′) holds.

If this function is to be believed, when  then  is the lowest  value for which the RHS of (2) is bigger than 8 when (2′) also holds. One takes  .

21 November, 2013 at 2:32 am

Andrew Sutherland

The admissible

21 November, 2013 at 1:51 pm

Terence Tao

In light of James’ calculation below that a naive insertion of  (or  ) gives good results for the small k numerics, it is now tempting to do the same for the large k asymptotics given above.

If one does things optimistically (ignoring  ), then all one has to do is replace the condition  with  for one’s favourite value of  . Given that in the small k case this improved 105 to 68, perhaps we could hope that 145 improves to below 105?

To do things properly (i.e. rigorously), we unfortunately have to also take into account  , and specifically there is an annoying new constraint

needed to ensure that all the moduli involved are  -smooth. This (when combined with either  or  ) is likely to cause some degradation in the bounds, but at least these bounds would be rigorous.

On the other hand, we do know that our MPZ estimates only need a certain amount of dense divisibility rather than smoothness. It is likely that by modifying the above arguments (probably by introducing two different deltas, much as we did in Polymath8a) we could reduce the dependence on delta to the point where it is almost negligible. But this looks a little tricky and is probably best deferred until a little later.

p.s. Gah, I just realised that my formula for  given previously was incorrect, as I had lost the  factor. The true formula for  is

which unfortunately is likely to make the previous computations a little bit worse. Sorry about that!

21 November, 2013 at 2:58 pm

Pace Nielsen

With the corrected formula for  , the FindMaximum command gives the following results:

For  , we have  .

For  , we have  .

I’ll give your other improvement some thought when I have more time.

21 November, 2013 at 4:13 pm

Terence Tao

Thanks for the quick recalculation! It’s a shame that we lost a factor of 5 due to the error, although we still beat Polymath8a, for what it’s worth…

22 November, 2013 at 1:33 am

Andrew Sutherland

With  we get  with this tuple.

21 November, 2013 at 3:51 pm

xfxie

Just had an initial try for  . Seems  cannot be dropped down too far. Here is a possible solution 508.

21 November, 2013 at 8:12 pm

Pace Nielsen

Using  , xfxie's  appears optimal for  . For  , we can get  .

22 November, 2013 at 6:18 am

Andrew Sutherland

This gives the lower bound 484290 on $H_2$.

22 November, 2013 at 7:04 am

Andrew Sutherland

Of course I meant to say upper bound :)

22 November, 2013 at 7:37 am

Andrew Sutherland

Actually we can get H_2 down to 484276.

22 November, 2013 at 9:18 am

Fan

The link to the 484276 tuple keeps throwing 403

22 November, 2013 at 10:04 am

Andrew Sutherland

Fixed.

23 November, 2013 at 4:13 pm

xfxie

For k=42392, seems H_2 can be further down to 484272 :).

23 November, 2013 at 4:33 pm

Andrew Sutherland

Slight further improvement: 484260.

23 November, 2013 at 6:32 pm

xfxie

Further improved to 484238.

24 November, 2013 at 2:00 am

Andrew Sutherland

Another small improvement: 484234.

24 November, 2013 at 6:35 am

xfxie

Can be further down to 484200.

24 November, 2013 at 6:21 pm

Andrew Sutherland

A little more progress: 484192.

25 November, 2013 at 2:09 am

Andrew Sutherland

484176.

26 November, 2013 at 5:01 am

xfxie

Can be dropped to: 484168 (for k=42392).

27 November, 2013 at 2:08 am

Andrew Sutherland

484162

27 November, 2013 at 12:40 pm

Andrew Sutherland

484142

28 November, 2013 at 4:47 am

Andrew Sutherland

484136

28 November, 2013 at 6:25 am

Andrew Sutherland

484126

20 November, 2013 at 6:36 pm

andre

Please forgive me my ignorance, I haven’t even fully read Maynard’s preprint.

On page 5, in Proposition 4.1, he defines J_k^{(m)}(F) with an outer integration over the (k-1)-dim. cube. On page 19, along the proof of Lemma 7.2, formula (7.12) he uses an outer integration over the (k-1)-dim simplex. So my question is, if the outer integration in the definition of J_k^{(m)}(F) was over the (k-1)-dim simplex, too?

20 November, 2013 at 9:13 pm

Terence Tao

In Lemma 7.2, one is only considering functions F of the form defined in (7.3), which are supported on the simplex, and so integration of such functions in the cube is the same as on the simplex.

20 November, 2013 at 6:48 pm

Eytan Paldi

It seems that the largest eigenvalue of the operator defined in (7.2) of Maynard’s preprint is the square root of  (not  as remarked there.)

20 November, 2013 at 7:11 pm

Eytan Paldi

In fact, the exact relationship between the above linear operator and  is not sufficiently clear to me.

20 November, 2013 at 9:09 pm

Terence Tao

The connection comes from the identity

which shows that  is the largest eigenvalue of  . (Actually, as  is not quite compact, although it is bounded, self-adjoint, and positive definite, one has to be a little careful here with the spectral theory to ensure that  only has point spectrum at its operator norm; I think it will work out though, I will try to supply some details later. In any case this doesn’t impact the substance of Maynard’s paper, as one never actually analyses eigenfunctions in that paper.)
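For readers who want the connection spelled out, here is one way to write it. This is a sketch under assumptions rather than a quotation of the comment above: it takes I_k and J_k^{(m)} as in Proposition 4.1 of Maynard's preprint, writes the operator of (7.2) as \mathcal{L}_k (that symbol, and R_k for the simplex, are notation chosen here), and assumes F is supported on the simplex.

```latex
% Assumed notation: R_k is the simplex {t_i >= 0, t_1 + ... + t_k <= 1},
% F is supported on R_k, and <F,G> = \int_{R_k} F G.
\begin{align*}
\mathcal{L}_k F(u_1,\dots,u_k)
  &= \sum_{m=1}^k \int_0^{1-\sum_{i \neq m} u_i}
     F(u_1,\dots,u_{m-1},t_m,u_{m+1},\dots,u_k)\,dt_m, \\
\langle \mathcal{L}_k F, F \rangle
  &= \sum_{m=1}^k \int \Bigl( \int F \,dt_m \Bigr)^2
     dt_1 \dots dt_{m-1}\,dt_{m+1} \dots dt_k
   = \sum_{m=1}^k J_k^{(m)}(F), \\
M_k &= \sup_F \frac{\sum_{m=1}^k J_k^{(m)}(F)}{I_k(F)}
     = \sup_F \frac{\langle \mathcal{L}_k F, F \rangle}{\langle F, F \rangle}.
\end{align*}
```

In this form M_k is the supremum of a Rayleigh quotient, which is the top of the spectrum of a bounded self-adjoint operator whether or not it is attained by an eigenfunction.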

20 November, 2013 at 9:37 pm

Eytan Paldi

Thanks for the explanation! One may use this to show that for each given polynomial P, the ratio for  should increase (unless P is optimal). This gives an iterative process for improving  .

22 November, 2013 at 7:31 am

Terence Tao

A little expansion of Eytan’s remark (a version of the power method): from Cauchy-Schwarz one has

which upon rearranging means that the Rayleigh quotient  is non-decreasing if one replaces  by  . Iterating this, we may replace  by  (or any higher power  ) and guarantee a better lower bound on M_k unless one is already at an eigenfunction.

This suggests that we can improve the large k analysis (and in particular, the m=2 values) by taking our current candidate for f and applying  to it to obtain a new candidate that is guaranteed to be better. This is a bit messy though; a simpler (though slightly less efficient) approach would be to compute  instead of  and use the inequality

to obtain what should presumably be a better lower bound for M_k than what we currently have.
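The monotonicity of the Rayleigh quotient under the power method can be checked numerically in a finite-dimensional toy model. The sketch below is only an illustration: the random positive semi-definite matrix `L` stands in for the actual operator, and the "cheap" quotient <Lf, Lf> / <Lf, f> is one inequality of the type described above, chosen here as an assumption rather than taken from the comment.

```python
import numpy as np

def rayleigh(L, f):
    """Rayleigh quotient <L f, f> / <f, f> for a symmetric matrix L."""
    return float(f @ (L @ f)) / float(f @ f)

rng = np.random.default_rng(0)
A = rng.standard_normal((6, 6))
L = A @ A.T                  # symmetric positive semi-definite stand-in
f0 = rng.random(6) + 0.1     # arbitrary non-zero starting candidate

quotients = []
f = f0.copy()
for _ in range(8):
    quotients.append(rayleigh(L, f))
    f = L @ f                # one step of the power method
    f /= np.linalg.norm(f)   # rescaling leaves the Rayleigh quotient unchanged

top = float(np.linalg.eigvalsh(L)[-1])   # largest eigenvalue, for comparison

# Cheaper variant: needs only <L f, L f> and <L f, f>, not <L^2 f, L f>.
Lf0 = L @ f0
cheap = float(Lf0 @ Lf0) / float(Lf0 @ f0)

print(quotients, cheap, top)
```

Each entry of `quotients` is a lower bound for `top`, and the list is non-decreasing; `cheap` sits between the first quotient and `top`.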

22 November, 2013 at 8:20 am

Eytan Paldi

In general this iteration gives linear convergence to M_k, so one may try to accelerate it numerically (e.g. by Aitken’s Δ^2 method.)
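As a reminder of what Aitken's Δ^2 method does, here is a minimal sketch on a model sequence. The sequence `limit - c * r**n` is an assumed stand-in for a linearly convergent sequence of lower bounds; it is not data from this thread.

```python
def aitken(seq):
    """Aitken Δ^2 extrapolation: x0 - (x1 - x0)^2 / (x2 - 2*x1 + x0)."""
    out = []
    for x0, x1, x2 in zip(seq, seq[1:], seq[2:]):
        denom = x2 - 2 * x1 + x0
        # If the second difference vanishes, fall back to the latest iterate.
        out.append(x2 if denom == 0 else x0 - (x1 - x0) ** 2 / denom)
    return out

# Model of linear convergence: the error shrinks by a fixed factor r each step.
limit, c, r = 2.0, 1.0, 0.5
seq = [limit - c * r ** n for n in range(6)]
acc = aitken(seq)

print(seq)
print(acc)
```

For an exactly geometric error term the extrapolated values already equal the limit; for real data the gain depends on how close the convergence is to geometric.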

21 November, 2013 at 5:44 am

Eytan Paldi

It seems that the integral operator above is compact since its representing integrand is continuous on its domain (i.e. the product of the simplex in t and the simplex in u.)

But I don’t see why its representing integrand is symmetric (as it should be for a self-adjoint operator)!

I suggest to see if the integrands representing powers of this operator can be evaluated explicitly (e.g. by the integrand composition operation) – so the traces of (small) powers of the operator may be evaluated – giving upper bounds for corresponding powers of M_k.

21 November, 2013 at 6:57 am

Terence Tao

One can check by hand that  for any (say) bounded compactly supported F,G on the simplex.

The operator  is not quite an integral operator because each of the k components only integrates in one of the dimensions rather than all k of the dimensions. As such, it doesn’t look compact, but may still have pure point spectrum, at least at the top of the spectrum (I have to check this). In particular, I don’t expect the traces of powers of this operator to be finite. A model case here is the operator ![T: L^2([0,1]^2) \to L^2([0,1]^2)](https://s0.wp.com/latex.php?latex=T%3A+L%5E2%28%5B0%2C1%5D%5E2%29+%5Cto+L%5E2%28%5B0%2C1%5D%5E2%29&bg=ffffff&fg=545454&s=0&c=20201002) given by

which is positive semi-definite and bounded with an operator norm of 1, but has an infinity of eigenfunctions at 1 (any f that is independent of y will be an eigenfunction).
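The properties listed for this model operator can be seen in a discretised toy version. The sketch below assumes the operator is averaging in the y variable, Tf(x,y) = ∫_0^1 f(x,z) dz; that formula is a guess consistent with the description, not something recovered from the comment, and the midpoint grid is likewise just an illustrative choice.

```python
import numpy as np

def T(F):
    """Assumed model operator on a grid: replace each row f(x, .) by its mean in y."""
    return np.repeat(F.mean(axis=1, keepdims=True), F.shape[1], axis=1)

n = 50
pts = (np.arange(n) + 0.5) / n                 # midpoint grid on [0, 1]
X, Y = np.meshgrid(pts, pts, indexing="ij")    # F[i, j] = f(x_i, y_j)

g = np.sin(3 * X)                  # independent of y
h = X * np.cos(2 * np.pi * Y)      # mean zero in y for every fixed x

fixed = np.allclose(T(g), g)               # g is an eigenfunction at eigenvalue 1
killed = np.allclose(T(h), 0.0)            # h is annihilated
idempotent = np.allclose(T(T(h + g)), T(h + g))  # T is a projection

print(fixed, killed, idempotent)
```

Under this assumption T is an orthogonal projection onto functions of x alone, which is why it has norm 1, infinitely many eigenfunctions at 1, and infinite trace.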

21 November, 2013 at 7:40 am

Eytan Paldi

Thanks! (I see now my error.)

Anyway, I think it is also important to get some upper bounds for M_k (to estimate the tightness of its lower bounds and to get some limitations of the method.)

21 November, 2013 at 11:51 am

Terence Tao

I no longer have any clear intuition as to whether the spectrum of  is pure point, absolutely continuous, or some combination of both (it could also possibly have singular continuous spectrum); the situation could be as complicated as it is for discrete or continuous Schrödinger operators, which have a very wide range of possible behaviours. Consider for instance the model operator

on the triangle  (all variables are assumed to be non-negative); this is one of the two components of  in the k=2 case. One can split  into the functions that are constant in the y direction, and functions that are mean zero in the y direction. The operator T annihilates the latter space, and acts by multiplication by  on the former space. So there is absolutely continuous spectrum on ![[0,1]](https://s0.wp.com/latex.php?latex=%5B0%2C1%5D&bg=ffffff&fg=545454&s=0&c=20201002) and a big null space at 0. The operator norm of 1 is not attained by any function, but one can get arbitrarily close to this norm by, e.g. the indicator function of the rectangle ![[0,\varepsilon] \times [0,1-\varepsilon]](https://s0.wp.com/latex.php?latex=%5B0%2C%5Cvarepsilon%5D+%5Ctimes+%5B0%2C1-%5Cvarepsilon%5D&bg=ffffff&fg=545454&s=0&c=20201002) for  (one can normalise to have unit norm in L^2 if one wishes).
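To make the last claim concrete, here is a short worked computation. It rests on an assumed form of the model operator, Tf(x,y) = ∫_0^{1-x} f(x,z) dz on the triangle x + y ≤ 1, which matches the description above (one of the two components in the k=2 case, multiplication by 1-x on functions constant in y) but is a reconstruction rather than the formula from the comment.

```latex
% Assumption: Tf(x,y) = \int_0^{1-x} f(x,z)\,dz on the triangle
% \{(x,y) : x, y \ge 0,\ x + y \le 1\}, and f is the indicator of
% the rectangle [0,\varepsilon] \times [0,1-\varepsilon].
\begin{align*}
Tf(x,y) &= \int_0^{1-x} 1_{[0,\varepsilon]}(x)\,1_{[0,1-\varepsilon]}(z)\,dz
         = (1-\varepsilon)\,1_{[0,\varepsilon]}(x), \\
\langle Tf, f \rangle
        &= \int_0^{\varepsilon} \int_0^{1-\varepsilon} (1-\varepsilon)\,dy\,dx
         = \varepsilon (1-\varepsilon)^2, \\
\langle f, f \rangle &= \varepsilon (1-\varepsilon), \\
\frac{\langle Tf, f \rangle}{\langle f, f \rangle} &= 1 - \varepsilon .
\end{align*}
```

So the Rayleigh quotient tends to 1 as ε tends to 0 but never equals 1, in line with the norm not being attained.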

Despite the possible lack of eigenfunctions (which in particular may mean that in some cases the maximum of the ratio  is not attainable), I believe it is still correct that the operator norm of  for all functions on the simplex is the same as the operator norm of  for symmetric functions on the simplex, which informally means that we can restrict without loss of generality to the case of symmetric functions F. To see this, suppose that we have a sequence  of L^2-normalised functions on the simplex which is an extremising sequence for  , thus this sequence approaches the supremum  as  . By replacing  with its absolute value  (which does not change the L^2 norm, but can only serve to increase the  ) we may assume that the  are non-negative. In particular, the projection  to the symmetric functions (i.e. the symmetrisation of  ) is bounded away from zero (in fact it is at least