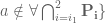

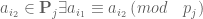

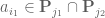

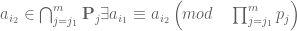

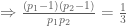

Tamar Ziegler and I have just uploaded to the arXiv our paper “Infinite partial sumsets in the primes“. This is a short paper inspired by a recent result of Kra, Moreira, Richter, and Robertson (discussed for instance in this Quanta article from last December) showing that for any set of natural numbers of positive upper density, there exists a sequence

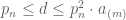

of natural numbers and a shift

such that

for all

this answers a question of Erdős). In view of the “transference principle“, it is then plausible to ask whether the same result holds if

is replaced by the primes. We can show the following results:

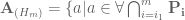

Theorem 1

- (i) If the Hardy-Littlewood prime tuples conjecture (or the weaker conjecture of Dickson) is true, then there exists an increasing sequence

of primes such that

is prime for all

.

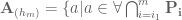

- (ii) Unconditionally, there exist increasing sequences

and

of natural numbers such that

is prime for all

.

- (iii) These conclusions fail if “prime” is replaced by “positive (relative) density subset of the primes” (even if the density is equal to 1).

We remark that it was shown by Balog that there (unconditionally) exist arbitrarily long but finite sequences of primes such that

is prime for all

. (This result can also be recovered from the later results of Ben Green, myself, and Tamar Ziegler.) Also, it had previously been shown by Granville that on the Hardy-Littlewood prime tuples conjecture, there existed increasing sequences

and

of natural numbers such that

is prime for all

.

The conclusion of (i) is stronger than that of (ii) (which is of course consistent with the former being conditional and the latter unconditional). The conclusion (ii) also implies the well-known theorem of Maynard that for any given , there exist infinitely many

-tuples of primes of bounded diameter, and indeed our proof of (ii) uses the same “Maynard sieve” that powers the proof of that theorem (though we use a formulation of that sieve closer to that in this blog post of mine). Indeed, the failure of (iii) basically arises from the failure of Maynard’s theorem for dense subsets of primes, simply by removing those clusters of primes that are unusually closely spaced.

Our proof of (i) was initially inspired by the topological dynamics methods used by Kra, Moreira, Richter, and Robertson, but we managed to condense it to a purely elementary argument (taking up only half a page) that makes no reference to topological dynamics and builds up the sequence recursively by repeated application of the prime tuples conjecture.

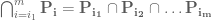

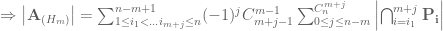

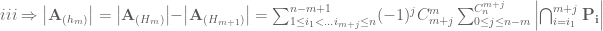

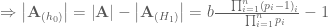

The proof of (ii) takes up the majority of the paper. It is easiest to phrase the argument in terms of “prime-producing tuples” – tuples for which there are infinitely many

with

all prime. Maynard’s theorem is equivalent to the existence of arbitrarily long prime-producing tuples; our theorem is equivalent to the stronger assertion that there exist an infinite sequence

such that every initial segment

is prime-producing. The main new tool for achieving this is the following cute measure-theoretic lemma of Bergelson:

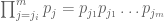

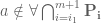

Lemma 2 (Bergelson intersectivity lemma) Letbe subsets of a probability space

of measure uniformly bounded away from zero, thus

. Then there exists a subsequence

such that

for all

.

This lemma has a short proof, though not an entirely obvious one. Firstly, by deleting a null set from , one can assume that all finite intersections

are either positive measure or empty. Secondly, a routine application of Fatou’s lemma shows that the maximal function

has a positive integral, hence must be positive at some point

. Thus there is a subsequence

whose finite intersections all contain

, thus have positive measure as desired by the previous reduction.

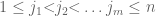

It turns out that one cannot quite combine the standard Maynard sieve with the intersectivity lemma because the events that show up (which roughly correspond to the event that

is prime for some random number

(with a well-chosen probability distribution) and some shift

) have their probability going to zero, rather than being uniformly bounded from below. To get around this, we borrow an idea from a paper of Banks, Freiberg, and Maynard, and group the shifts

into various clusters

, chosen in such a way that the probability that at least one of

is prime is bounded uniformly from below. One then applies the Bergelson intersectivity lemma to those events and uses many applications of the pigeonhole principle to conclude.

45 comments

Comments feed for this article

26 January, 2023 at 11:24 am

Anonymous

“this answers a question of >>ErdÅ‘s”, Erdős is misspelled

[Corrected, thanks – T.]

26 January, 2023 at 5:29 pm

Anonymous

Considering theorem 1(iii), what is the strongest known necessary or sufficient conditions for the sequence for the conclusions of theorem 1 ?

for the conclusions of theorem 1 ?

[Sorry, I am not able to interpret this question. -T]

27 January, 2023 at 2:00 am

remy

I propose to take a side step or look at the integers in different ways. For that I propose to introduce all the primes less than the value of the integer and to look only at the vector of all the modulos.

the vector is decomposable in sum ->glodbach’s conjecture

and that must also, can be , simplify your approach

Sorry, I am not able to interpret this question.

remy

27 January, 2023 at 2:49 am

Raphael

Sorry, maybe too naive question, but can we give some explicit initial elements of any example for the two sequences?

1 February, 2023 at 10:04 am

Terence Tao

Unfortunately not (though we can of course normalize one of the elements to be anything we like by translating the other sequence in the opposite direction). Even the simpler problem of producing an explicit positive integer such that

such that  are prime for infinitely many

are prime for infinitely many  is open; we know that there is at least one such

is open; we know that there is at least one such  with

with  , and every even integer should in fact have this property (de Polignac’s conjecture), but we cannot exhibit one explicitly. (Note that any difference

, and every even integer should in fact have this property (de Polignac’s conjecture), but we cannot exhibit one explicitly. (Note that any difference  of two elements of the first sequence will be of this form.)

of two elements of the first sequence will be of this form.)

1 February, 2023 at 11:59 pm

Raphael

Very interesting, thank you very much! Follow-up: Is there already a conjecture that for every $ latex n $ there is an infinite sequence of prime- tupels (with an appropriate unique definition of prime-

tupels (with an appropriate unique definition of prime- tupel, generalizing twins, triples, …)?

tupel, generalizing twins, triples, …)?

27 January, 2023 at 7:15 am

Yemon Choi

Does Theorem 1(ii) answer this question which I raised years ago on MathOverflow? https://mathoverflow.net/questions/3347/is-the-set-of-primes-translation-finite

(The original context was related to weak compactness / weak almost periodicity, see Defn 2.3 + Thm 2.4 + Lemma 2.5 of https://arxiv.org/abs/0811.4432 )

1 February, 2023 at 10:07 am

Terence Tao

Yes, it seems that Theorem 1.5 of our paper is precisely the assertion that the primes are not translation-finite. I’ll add these references to the next revision of the paper.

27 January, 2023 at 10:56 am

Anonymous

It seems that the formulation of theorem 1(III) is not sufficiently clear (because the primes are “positive density subset of the primes” – so the replacement of the primes by themselves should not change the conclusions !)

[“positive density” clarified to “positive relative density” -T.]

27 January, 2023 at 3:55 pm

a

Happy new year

28 January, 2023 at 5:12 am

Older but not wiser

Been 19 years since I took grad real analysis. Forgot the arguments you posted. Yet this post is short and very understandable.

28 January, 2023 at 2:38 pm

Anonymous

Is it possible to generalize these results to sumsets in ?

?

28 January, 2023 at 6:39 pm

Sr Sidney Silva

as resoluções onde ele estiver presente…. com relação aos números primos, onde um Brasileiro afirma cientificamente que alguns números não são primos como todos(as) vem relatando de tempos passados, com uma simples PA(Progressão Aritmética), desvendei seus enigmas, onde afirmo cientificamente que não são primos e nem primos gêmeos: segue os números relatados a seguir: 2; 19; 41; 59; 61; 79; 101; 139; 179; 181; 199; 239; 241; 281; 359; 401; 419; 421; 439; 461; 479; 499; 521; 541; 599; 601; 619; 641; 659; 661; 701; 719; 739; 761; 821; 839; 859; 881; 919; 941; 1019; 1021; 1039; 1061; 1181; 1201; 1259; 1279; 1301; 1319; 1321; 1361; 1381; 1399; 1439; 1459; 1481; 1499; 1559; 1579; 1601; 1619; 1621; 1699; 1721; 1741; 1759; 1801; 1861; 1879; 1901; 1979; e mais alguns…… sendo assim “A Hipótese de Rielmann perde totalmente sua força” o autor Sr Sidney Silva.

1 February, 2023 at 10:50 am

Terence Tao

I would imagine that the tools used here (in particular, the Maynard sieve) would extend to other number fields, for instance establishing an analogous result for the Gaussian primes, but I have not checked all the details.

28 January, 2023 at 3:32 pm

AF

Of the conjectures of Dickson and Hardy–Littlewood, neither is stronger than the other, because while Hardy and Littlewood do give an asymptotic formula, Dickson considers general linear functions (where the leading coefficient, ie. the step size is allowed to vary).

2 February, 2023 at 8:00 pm

Keiju Sono

In page 3, the fifth line of the proof of Prop2.1

j>1 will be j>i.

I think the question of how small the width h_{k}-h_{1} of the prime producing k-tuple in Theorem 1.5 can be is one of the essential questions.

Have you considered this?

[Thanks, this typo will be corrected in a future revision of the ms. The most recent update of the paper now contains some discussion of growth rates; see Remark 3.3. -T.]

5 February, 2023 at 2:16 am

Arman

Please Prof creareate a YouTube channel and you will get more than one billion subscribers please

12 February, 2023 at 2:46 pm

Anonymous

It will result in anger from other math agencies that have benefited from having his talk on their channel on Youtube. It’s not advised.

5 February, 2023 at 2:33 am

Arman

Prof share more if you make a video will be much clearer but if you have not time post more i am very huge fan of MATH)

11 February, 2023 at 10:22 am

ducduc2710

Some calculations involving prime numbers https://www.researchgate.net/publication/367227821_Certaines-des-conseuquenceus-suivantes

5 May, 2023 at 2:37 pm

Sidney Silva Carnavale

Prezados nobres amigos, com meu respeito a todos(as) aqui presente, interessantes esses relatos, porém não tem fundamento e conteúdo para tal afirmação, muita teoria de tempos passados e ainda não me deparei com um argumento mais plausível, todos(as) usam uma variável, uma integral, log, enfim relatam fatos de pensadores de tempos passados; continuo afirmando com total veracidade que todos os números que citei anteriormente não são primos, prove que minha tese esta errada. Sr Sidney Silva.

11 February, 2023 at 4:42 pm

Rhmn

I saw your question on MO whose answer by Joel sharpens your result on uncountability of X! Can’t we simply argue by saying that X is an uncountable compact subspace of Cantor space so it is of size continuum?

[Yes, this also works, though Joel’s argument does not require the knowledge that X is compact. – T.]

12 February, 2023 at 2:14 pm

a

Happy Feb 12

13 February, 2023 at 7:16 pm

wangwei

Proof of Four color theorem

Suppose there is a map that can only be colored in five colors. Remove a country. If it can only be colored in five colors after removal, then remove the country. Otherwise, leave it. Each country will perform and operations in turn until all countries have completed one round, and then the next round will perform the same operations until no country has been removed in one round. In this way, the new map formed in the remaining countries can only be colored in five colors, and if any country is removed, it can be colored in four colors.

In this way, the neighboring countries of each country must contain four colors, because if a country contains only three colors, it can be colored with four colors after removing the country, then it can also be colored with four colors without removing the country (new map), which is inconsistent with the new map.

If the neighboring countries of each country contain four colors, the new map should only be colored with five colors after removing one country, which is contradictory, so the assumption is not tenable. There is no map that can only be colored with five colors.

Is my proof correct?

14 February, 2023 at 9:59 am

Anonymous

It is not clear why your proof can’t be applied to another numbers (e.g. 3 0r 5) than 4 (in other words. it is not clear from your proof what is special about 4)

21 February, 2023 at 10:11 am

Anonymous

4 is very special in analytic number theory.

22 February, 2023 at 3:32 pm

Sr Sidney Silva

Prezado nobre amigo, interessante sua pergunta, pois com uma simples PA(Progressão Aritmética) padronizei duas fórmulas: vejamos como ficaria pegar um número primo e dividir ou seja usar a fatoração de números primos para ser fatorado do menor para o maior e do maior para o menor, assim será considerado um número primo:

esse número é primo 12280616105497 e somente pode ser dividido e fatorado somente pelos números 1867; 1871; 1873; 1877 que também são primos vejamos como fica minha tese:

do menor para o maior:

12280616105497 1867

6577726891 1871

3515621 1873

1877 1877

1

Agora irei fatorar com números primos do maior para o menor:

12280616105487 1877

6542683061 1873

3493157 1871

1867 1867

1

Provando minha tese que o numeral 2, 19 e outros já citados acima não são primos, e os primos gêmeos não existe. lendo este número primo teremos; (Doze Trilhões, Duzentos e Oitenta Bilhões, Seiscentos e Dezesseis Milhões, Cento e Cinco Mil Quatrocentos e Oitenta e Sete.), com esta simples fórmula cheguei na casa dos 92 Nonilhões, e é Finito…. Sr Sidney Silva.

23 February, 2023 at 3:32 am

Luis Martinez

12280616105497 = 3515621*3493157

is not prime

23 February, 2023 at 2:52 pm

Sr Sidney Silva

Sim, são primos, agora se tiver usando o numeral dois e muitos outros que mencionei que não são primos pode ser que sua afirmação esteja correta, porém todos que fatorei são primos tanto o de 14 dígitos e seus resultados são primos, prova cientifica minha tese esta correta. Sr Sidney Silva

24 February, 2023 at 1:04 am

remy

I specify that there is not a prime number at each

different primorielle

an example with a series of twin primes

m=11590571 = 11590571

mm=m+2 = 11590573

m%(2*3*5*7*11*13*17) = 359351= 43 * 61 * 137

mm%(2*3*5*7*11*13*17) = 359353

m%(2*3*5*7*11*13) = 29021

mm%(2*3*5*7*11*13) = 29023

m%(2*3*5*7*11) = 1301

mm%(2*3*5*7*11) = 1303

m%(2*3*5*7) = 41

mm%(2*3*5*7) = 43

m%(2*3) = 5

mm%(2*3) = 1->3

19 February, 2023 at 3:52 am

Luis Martinez

Know primes number (included in sequences)

Hence the Mersenne primes are verified in the form:

if

With

20 February, 2023 at 1:13 pm

Sr Sidney Silva

Neste enunciado o 19 não é primo, então cai por terra esta hipótese!!

23 February, 2023 at 5:06 am

remy

your conjecture is true because,for me

(largePrimeNum)mod(2*3*5*7*11*…101)=PrimeNum

(largePrimeNum)mod(2*3*5*7*11*…97)=PrimeNum

…

(largePrimeNum)mod(2*3*5*7*11*…89)=PrimeNum

(largePrimeNum)mod(2*3*5*7*11*…83)=PrimeNum

…

(largePrimeNum)mod(2*3*5*7*11*…47)=PrimeNum

(largePrimeNum)mod(2*3*5*7*11*…43)=PrimeNum

…

(largePrimeNum)mod(2*3*5*7*11)=PrimeNum

(largePrimeNum)mod(2*3*5*7)=PrimeNum

for the demonstration it is necessary to write the prime numbers with a primorielle base

Attached is a file with the first 1000 prime numbers in primorielle base

https://www.cjoint.com/doc/23_02/MBvp6pGpfsJ_sortie.txt

so

largePrimeNum=pq+r=primorielle n+prime

(largePrimeNum)mod(primorielle )=prime

it is a consequence of the proposal of the available demonstrations

Click to access 2206.0068v1.pdf

and don’t repeat it but chatGPT doesn’t know how to make a small prime number from a large prime number

thank you for your attention

24 February, 2023 at 1:05 am

remy

I specify that there is not a prime number at each

different primorielle

an example with a series of twin primes

m=11590571 = 11590571

mm=m+2 = 11590573

m%(2*3*5*7*11*13*17) = 359351= 43 * 61 * 137

mm%(2*3*5*7*11*13*17) = 359353

m%(2*3*5*7*11*13) = 29021

mm%(2*3*5*7*11*13) = 29023

m%(2*3*5*7*11) = 1301

mm%(2*3*5*7*11) = 1303

m%(2*3*5*7) = 41

mm%(2*3*5*7) = 43

m%(2*3) = 5

mm%(2*3) = 1->3

24 February, 2023 at 12:57 pm

Sr Sidney Silva

Prezado Srs(as), prove que esses números que mencionei pode ser encontrado números gêmeos, não vão encontrar, pois dentro da lista que me foi passado, aparece o 2, 19,41, 59, 61, 79 e muitos outros que citei não ser primos, enfim esses números não são primos, gostaria que todos(as) revesse seus conceitos, pois existe argumento dentro de um embasamento que esses números não são primos, os que citei acima em epigrafe.

24 February, 2023 at 1:02 pm

Sr Sidney Silva

Nobre amigo, ficou vago sua explanação, prove sem esses números que apresentei que não são primos para ver se seu resultado esta correto? tenta olhar de outro ângulo minhas teorias… pois minhas teses estão de acordo com a revista científicas Sidney Silva.

27 February, 2023 at 12:18 am

remy

I present a prime number generator based on an arithmetic progression (a sum) and I justify this progression with a demonstration proposal.

I do not change the rules of the game

27 February, 2023 at 2:47 pm

Sr Sidney Silva

Prezado nobre amigo interessante sua explanação com meu respeito a todos(as) aqui presente neste singelo canal, tenho um conceito sobre o que é números primos:

“Pra ser um número primo terá que dividir somente pelo número primo, sendo do menor para o maior, e do maior para o menor, só assim poderá ser um número primo exato e finito” essa Lei é aplicada no momento em que for feita a Fatoração de números primos”…. ao contrário que todos(as) vem relatando em tempos passados, usando uma teoria já obsoleta para os tempos atuais, Sr Sidney Silva. Porém não é possível esta sequência, pois tem alguns números que não são primos e os primos gêmeos não existe,

Estes números que irei apresentar não são primos!!

2; 19; 41; 59; 61; 79; 101; 139; 179; 181; 199; 239; 241; 281; 359; 401; 419; 421; 439; 461; 479; 499; 521; 541; 599; 601; 619; 641; 659; 661; 701; 719; 739; 761; 821; 839; 859; 881; 919; 941; 1019; 1021; 1039; 1061; 1181; 1201; 1259; 1279; 1301; 1319; 1321; 1361; 1381; 1399; 1439; 1459; 1481; 1499; 1559; 1579; 1601; 1619; 1621; 1699; 1721; 1741; 1759; 1801; 1861; 1879; 1901; 1979; Prove que esta Tese esta errada

cientificamente… Sr Sidney Silva.

Irei relatar uma prova de que minha Tese esta correta, vejamos como fica:

pois com uma simples PA(Progressão Aritmética) padronizei duas fórmulas: vejamos como ficaria pegar um número primo e dividir ou seja usar a fatoração de números primos para ser fatorado do menor para o maior e do maior para o menor, assim será considerado um número primo:

esse número é primo 12280616105497 e somente pode ser dividido e fatorado somente pelos números 1867; 1871; 1873; 1877 que também são primos vejamos como fica minha tese:

do menor para o maior:

12280616105497 1867

6577726891 1871

3515621 1873

1877 1877

1

Agora irei fatorar com números primos do maior para o menor:

12280616105487 1877

6542683061 1873

3493157 1871

1867 1867

1

Provando minha tese que o numeral 2, 19 e outros já citados acima não são primos, e os primos gêmeos não existe. lendo este número primo teremos; (Doze Trilhões, Duzentos e Oitenta Bilhões, Seiscentos e Dezesseis Milhões, Cento e Cinco Mil Quatrocentos e Oitenta e Sete.), com esta simples fórmula cheguei na casa dos 92 Nonilhões, e é Finito…. Sr Sidney Silva. dentro deste valor encontrado multipliquei 1867*1871*1873*1877= 12280616105497 todos são números primos, irei citar outro exemplo mais simples: Sidney Silva

o primeiro numero primo seria o 3 vejamos como ficaria minha tese e teoria:

3*5*7*11*13*17= 255255 vejamos como fica pela minha definição de números primos:

Do menor para o maior:

255255 3

85085 5

17017 7

2431 11

221 13

17 17

1

Agora do maior para o menor:

255255 17

15015 13

1155 11

105 7

15 5

3 3

1

Prova que os valores fatorados somente com números primos; será considerado números primos, pois foi dividido somente com os números primos existente dentro da minha tese e teoria…porém haverá um único numero primo que será dividido por ele mesmo e o resultado será 1, classificando como os verdadeiros números primos………esse é meu conceito e a definição para os números primos…….meu cordial abraço Sr Sidney Silva.

28 February, 2023 at 12:58 am

remy

não concordaremos, lamento,a direcção do factoring não altera o resultado do factoring

24 February, 2023 at 2:47 am

remy

Attached is a file with twin primes that shares some of their writing

https://www.cjoint.com/doc/23_02/MBykOnigb1J_sortie.txt

I recommend using notepad++ there is no automatic line wrap

1 May, 2023 at 7:20 am

Anonymous

Prof. Zhexuan Tao: In recent years, I have repeatedly read your “Every Odd Number Greater Than 1 Is the Sum of Five Prime Numbers at Most” issued on February 1, 2012, “Heuristic Limitation of the Circle Method” issued on May 20, 2012, “Parity-Check Problem Obstacle for Binary Goldbach Conjecture with Bounded Errors” issued on July 9, 2014, “Heath-Brown’s Theorem on Twins of Prime Numbers and Siegel Zero” issued on August 26, 2015, and “the Principle of Inclusion and Exclusion for Commuting Predictions” issued on February 23, 2021, and other blog posts and related comments.

Researchers have been going around in circles regarding the proof of “Even Goldbach Conjecture and “Twin Prime Conjecture” based on the parity-check problem, the Möbius pseudo-randomness principle, the Siegel Zero and the screening theory, the Hall principle of inclusion and exclusion, and other obstacles. Thus, we should think about how to move our thought out of the loops made by these obstacles.

I think that the two major questions of “Even Goldbach’s Conjecture” and “Twin Prime Conjecture” are the defective problems of the fundamental theory of sets in essence . Thus, I would like to propose the following three propositions to have a deeper discussion with you.

Proposition 1: An integer set consisting of all integers greater than 1 and less than or equal to an integer is equivalent to the union of the prime factor sets less than or equal to the integer. Each integer containing a prime factor is an element of the set of prime factors, and each set of prime factors is a subset of the set of integers.

Each in the union sets of several subsets contains a unique personality and several different commonalities. The unique individuality of each union element makes the element exist independently in the union set. Each commonality is a property of each subset of the union set combined with the commonality of each other element. Each subset of the union set contains a unique property, which is the commonality of each union element. All elements that contain this commonality belong to elements of that subset. The number of commonalities in each union element in a union set is the number of times that the element intersects with each subset, or the number of the elements belonging to each subset in the union set Therefore, the number of commonalities contained in each element is also considered as a commonality. The elements with the same number of commonalities form a set of the number of the same commonalities.

As a result, a union set of several subsets can form two set forms. One is a union set consisting of union elements, and the other is a repeated set consisting of intersecting elements.

“The union set of sets of prime factors less than or equal to an integer” indicates that each union element contains more than one prime factor less than or equal to that integer. All elements that contain a prime factor form the set of that prime factor. Each set of prime factors is a subset of that union set. Thus, all elements containing m prime factors form a subset of the set containing m prime factors. “The principle of Inclusion and Exclusion” can be used to find the cardinal number of a set of elements containing more than m commonalities or the cardinal number of a set of elements containing only m commonalities.

The relevant symbols of relevant elements and sets that indicate the number of commonalities are added, and the symbols are used to describe the formula as follows:

To be continued······

Chinese Xulianke

xulianke@yeah.net

1 May, 2023 at 7:46 am

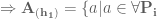

Anonymous

Ⅰ、 ,

,  ,

,  is the n smallest prime numbers, and

is the n smallest prime numbers, and  is an element with m commonalities in

is an element with m commonalities in  ;

;  ;

;  ,

,  ;

;  is a universal set, and

is a universal set, and  ’ is a complementary set of

’ is a complementary set of  ;

;  ; Set

; Set  ,

, .

.

N is the set of natural numbers,

ⅰ、

,

,  ,

,  ,

,  ;

;

ⅱ、

and

and  ,

,  ,

, and

and  ,

,  and

and  .

.

ⅲ、

is divided into:

is divided into:  ,

,  ,

,  ,

,  ;

;

.

.

ⅳ、

.

.

Based on “the Principle of Inclusion and Exclusion”

from

ⅴ、 the inverse formula is:

the inverse formula is: .

. is:

is:

,

,  ,

,  。

。 ,

, ,

,  .

.

By the “Principle of Inclusion and Exclusion”

By “the Inverse Formula of the Principle of Inclusion and Exclusion”

ⅵ、 .

.

By the nature of the set

To be continued······

Chinese Xulianke

xulianke@yeah.net

1 May, 2023 at 2:15 pm

Anonymous

Ⅱ、Related Conjecture:

ⅰ、Obviously, each element in contains more than m prime factors. Each element in

contains more than m prime factors. Each element in  contains only m prime factors. Each element in ,

contains only m prime factors. Each element in ,  does not contain a prime factor in

does not contain a prime factor in  and can only be a prime number. When d and n increase infinitely, the number of prime factors increase infinitely.

and can only be a prime number. When d and n increase infinitely, the number of prime factors increase infinitely.

ⅱ、

and.

and.  ;

;  and.

and.

;

;  and.

and.  .

. has randomness.

has randomness.  also has randomness.

also has randomness.

Thus, the operation of

ⅲ、

,

,  ,

, ,

,  ;

;  .

.

,

, .

.  ,

,  .

.

ⅳ、 it is conjectured that there must be n prime numbers between d and nd

it is conjectured that there must be n prime numbers between d and nd  ; and the interval of prime numbers, the function of the nth prime number, and other prime number problems can be derived from the formula of“

; and the interval of prime numbers, the function of the nth prime number, and other prime number problems can be derived from the formula of“ ”.

”.

The definite existence of one prime number between d and 2d has been proved;

V. ” and “

” and “ ”related to “Siegel Zero”? Can the location of the zero bound be derived from “

”related to “Siegel Zero”? Can the location of the zero bound be derived from “ ”? I think there is a certain causal relationship between them. Since the process of proving the above-mentioned relevant formulas and principles above is too long, it cannot be listed in this article.

”? I think there is a certain causal relationship between them. Since the process of proving the above-mentioned relevant formulas and principles above is too long, it cannot be listed in this article.

Is the randomness of “

To be continued

Chinese Xulianke

xulianke@yeah.net

1 May, 2023 at 6:50 pm

Anonymous

Proposition 2: “The sum of two integers greater than 1 is equal to the set consisting of the sum of the same integer”is equivalent to“the intersection of the union of the prime factor sets less than the integer minus 2 and the set of itself is the set where the sum of the two addends of each intersection element is equal to the integer”, and is also equivalent to “one integer written as a set consisting of the sum of all two integers greater than 1”.

Obviously, if the integers in Proposition 2 are taken to be even numbers, it is the problem of“Even Goldbach’s Conjecture”.From Proposition 1, it is known that the “even Goldbach’s conjecture” is the intersection of union sets of two sets of prime factors less than the same even number, that is, the intersection of two identical union sets. In Proposition 1, “the Principle of Inclusion and Exclusion” can be applied to find the cardinal number of sets that contain m commonalities in any union set. In the intersection of two union sets, it is necessary to find the set consisting of the intersection of elements containing commonalities in one union set and elements containing commonalities in the other union set. This is equivalent to the form of “Goldbach’s conjecture” in which one addend contains prime factors and the other addend contains prime factors in the form of one even number written as the sum of two integers greater than 1. Obviously, there is a new principle that cannot be solved by “the Principle of Inclusion and Exclusion”, that is, “the correlation principle of finding the cardinal number of the intersection of two union sets”.

Each prime factor present in an even number is commonly present in both addends of each intersection element, while the same prime factor not present in an even number cannot be present in both addends of the same intersection element. Further, regarding each prime factor whose square number is smaller than the even number, there must be a term containing more than two identical prime factors, and each prime factor whose square number is greater than the even number has no repeated prime factors in each term.

Thus, it is necessary to divide the prime factors less than that even number into: prime factors that divide even numbers and prime factors that do not divide even numbers, as well as prime factors whose square number is smaller than an even number and do not divide evenly in an even number and prime factors whose square number is greater than an even number and do not divide evenly in an even number.

The new symbolic form is used to perform the formulaic description and operation derivation as follows:

To be continued········

Chinese Xulianke

xulianke

12 May, 2023 at 8:53 am

ducduc2710

Dear Professor Terence Tao, why not prove Bunyakovsky conjecture to get 3 out of 4 problems of Landau. How does the sum of 2k primes equal to the sum of 2h primes apply to the prime number structure? And one more thing, n^2+1 is not provable by sums of three cubes.