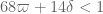

As in all previous posts in this series, we adopt the following asymptotic notation: is a parameter going off to infinity, and all quantities may depend on

unless explicitly declared to be “fixed”. The asymptotic notation

is then defined relative to this parameter. A quantity

is said to be of polynomial size if one has

, and bounded if

. We also write

for

, and

for

.

The purpose of this (rather technical) post is both to roll over the polymath8 research thread from this previous post, and also to record the details of the latest improvement to the Type I estimates (based on exploiting additional averaging and using Deligne’s proof of the Weil conjectures) which lead to a slight improvement in the numerology.

In order to obtain this new Type I estimate, we need to strengthen the previously used properties of “dense divisibility” or “double dense divisibility” as follows.

Definition 1 (Multiple dense divisibility) Let

. For each natural number

, we define a notion of

-tuply

-dense divisibility recursively as follows:

- Every natural number

is

-tuply

-densely divisible.

- If

and

is a natural number, we say that

is

-tuply

-densely divisible if, whenever

are natural numbers with

, and

, one can find a factorisation

with

such that

is

-tuply

-densely divisible and

is

-tuply

-densely divisible.

We let

denote the set of

-tuply

-densely divisible numbers. We abbreviate “

-tuply densely divisible” as “densely divisible”, “

-tuply densely divisible” as “doubly densely divisible”, and so forth; we also abbreviate

as

.

Given any finitely supported sequence and any primitive residue class

, we define the discrepancy

We now recall the key concept of a coefficient sequence, with some slight tweaks in the definitions that are technically convenient for this post.

Definition 2 A coefficient sequence is a finitely supported sequence

that obeys the bounds

for all

, where

is the divisor function.

- (i) A coefficient sequence

is said to be located at scale

for some

if it is supported on an interval of the form

for some

.

- (ii) A coefficient sequence

located at scale

for some

is said to obey the Siegel-Walfisz theorem if one has

for any

, any fixed

, and any primitive residue class

.

- (iii) A coefficient sequence

is said to be smooth at scale

for some

is said to be smooth if it takes the form

for some smooth function

supported on an interval of size

and obeying the derivative bounds

for all fixed

(note that the implied constant in the

notation may depend on

).

Note that we allow sequences to be smooth at scale without being located at scale

; for instance if one arbitrarily translates of a sequence that is both smooth and located at scale

, it will remain smooth at this scale but may not necessarily be located at this scale any more. Note also that we allow the smoothness scale

of a coefficient sequence to be less than one. This is to allow for the following convenient rescaling property: if

is smooth at scale

,

, and

is an integer, then

is smooth at scale

, even if

is less than one.

Now we adapt the Type I estimate to the -tuply densely divisible setting.

Definition 3 (Type I estimates) Let

,

, and

be fixed quantities, and let

be a fixed natural number. We let

be an arbitrary bounded subset of

, let

, and let

a primitive congruence class. We say that

holds if, whenever

are quantities with

for some fixed

, and

are coefficient sequences located at scales

respectively, with

obeying a Siegel-Walfisz theorem, we have

for any fixed

. Here, as in previous posts,

denotes the square-free natural numbers whose prime factors lie in

.

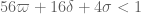

The main theorem of this post is then

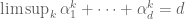

Theorem 4 (Improved Type I estimate) We have

whenever

and

In practice, the first condition here is dominant. Except for weakening double dense divisibility to quadruple dense divisibility, this improves upon the previous Type I estimate that established under the stricter hypothesis

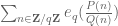

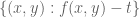

As in previous posts, Type I estimates (when combined with existing Type II and Type III estimates) lead to distribution results of Motohashi-Pintz-Zhang type. For any fixed and

, we let

denote the assertion that

for any fixed , any bounded

, and any primitive

, where

is the von Mangoldt function.

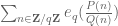

Proof: Setting sufficiently close to

, we see from the above theorem that

holds whenever

and

The second condition is implied by the first and can be deleted.

From this previous post we know that (which we define analogously to

from previous sections) holds whenever

while holds with

sufficiently close to

whenever

Again, these conditions are implied by (8). The claim then follows from the Heath-Brown identity and dyadic decomposition as in this previous post.

As before, we let denote the claim that given any admissible

-tuple

, there are infinitely many translates of

that contain at least two primes.

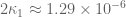

This follows from the Pintz sieve, as discussed below the fold. Combining this with the best known prime tuples, we obtain that there are infinitely many prime gaps of size at most , improving slightly over the previous record of

.

— 1. Multiple dense divisibility —

We record some useful properties of dense divisibility.

Lemma 7 (Properties of dense divisibility) Let

and

.

- (i) If

is

-tuply

-densely divisible, and

is a factor of

, then

is

-tuply

-densely divisible. Similarly, if

is a multiple of

, then

is

-densely divisible.

- (ii) If

are

-densely divisible, then

is also

-densely divisible.

- (iii) Any

-smooth number is

-tuply

-densely divisible.

- (iv) If

is

-smooth and square-free for some

, and

, then

is

-tuply

-densely divisible.

Proof: (i) is easily established by induction on , the idea being to start with a good factorisation of

and perturb it into a factorisation of

or

by dividing or multiplying by a small number. To prove (ii), we may assume without loss of generality that

, so that

. If we set

, then the factors of

, as well as the factors of

multiplied by

, are both factors of

. From this we can deduce the

-dense divisibility of

from the

-dense divisibility of

.

The claim (iii) is easily established by induction on and a greedy algorithm, so we turn to (iv). The claim is trivial for

. Next, we consider the

case. Our task is to show that for any

, one can find a factorisation

with

. If

, we can achieve this factorisation by initialising

to equal

and then greedily multiplying the remaining factors of

until one exceeds

, so we may assume instead that

. Then by the greedy algorithm we can find a factor

of

with

; if we then greedily multiply

by factors

with

we obtain the claim.

Finally we consider the case. We assume inductively that the claim has already been proven for smaller values of

. Let

be such that

. By hypothesis, the

-smooth quantity

is at least

. By the greedy algorithm, we may thus factor

where

and

and thus

Now we divide into several cases. Suppose first that . Then

, so by the

case, we may find a factorisation

with

. Setting

and

, the claim then follows from the induction hypothesis.

Now suppose that . By the greedy algorithm, we may then find a factor

of the

-smooth quantity

with

; setting

, we see that

is a multiple of

and hence

. The claim now follows from the induction hypothesis and (iii).

Finally, suppose that . By the greedy algorithm, we may then find a factor

of the

-smooth quantity

with

; setting

, we see that

is a multiple of

and hence

. The claim now follows from the induction hypothesis and (iii).

Now we record the criterion for using to deduce

.

Proposition 8 (Criterion for DHL) Let

be such that

holds. Suppose that one can find a natural number

and real numbers

and

such that

where

Then

holds.

Proof: We use the Pintz sieve from this post, repeating the proof of Theorem 5 from that post (and using the explicit formulae for and

from this comment thread). The main difference is that the exponent

in equation (10) of that post needs to be replaced with

(and similarly for the displays up to (11)), and

needs to be replaced with

.

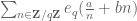

Applying this proposition with ,

,

,

sufficiently close to

, and

we obtain

as claimed.

— 2. van der Corput estimates —

In this section we generalise the van der Corput estimates from Section 1 of this previous post to wider classes of “structured functions” than rational phases. We will adopt an axiomatic approach, laying out the precise axioms that we need a given class of structured functions to obey:

Definition 9 (Structured functions) Let

be a bounded subset of

. A class of structured functions is a family

of collections

of functions

defined on subsets

of

for each prime

and every

; an element of

is then said to be a structured function of complexity at most

and modulus

. Furthermore we place an equivalence relation

on each class

with

sufficiently large depending on

. This class and this equivalence relation is assumed to obey the following axioms:

- (i) (Monotonicity) One has

whenever

. Furthermore, if

is sufficiently large depending on

, the equivalence relations on

and

agree on their common domain of definition.

- (ii) (Near-total definition) If

, then the domain

of

consists of

with at most

points removed.

- (iii) (Pointwise bound) If

, then

for all

in the domain

of

.

- (iv) (Conjugacy) If

, then

for some

.

- (v) (Multiplication) If

, then the pointwise product

(on the common domain of definition) can be expressed as the sum of

functions

(which we will call the components of

) in

for some

.

- (vi) (Translation invariance) If

, and

, then the function

(defined on the translation

of the domain of definition of

) lies in

for some

.

- (vii) (Dilation invariance) If

, and

, then the function

(defined on the dilation

of the domain of definition of

) lies in

for some

.

- (viii) (Polynomial phases) If

is a polynomial of degree at most

, then the function

lies in

for some

. More generally, if

, then the product

lies in

for some

. Furthermore, if

is sufficiently large depending on

, this operation respects the equivalence relation

:

if and only if

. Finally, if

and

is not identically zero, then

.

- (ix) (Almost orthogonality) If

have domains of definition

respectively, one has

for an algebraic integer

, with the error term

being Galois-absolute in the sense that all Galois conjugates of the error term are also

. Furthermore, if

is sufficiently large depending on

, then

vanishes whenever

.

- (x) (Integration) Suppose that

is such that

contains a component equivalent to

for some

. Suppose also that

is sufficiently large depending on

. Then there exists

such that

.

Example 1 (Polynomial phases) Let

be a bounded subset of

. If, for every prime

and

, we define

to be the set of all functions of the form

, where

are polynomials of degree at most

with integer coefficients, defined on all of

, then this is a class of structured functions (note that the almost orthogonality axiom requires the Weil conjectures for curves). Two polyomial phases

will be declared equivalent if

differ only in the constant term. Note from the Chinese remainder theorem that the function

is then also a structured function of complexity at most

and modulus

.

Example 2 (Polynomial phases twisted by characters) Let

be a bounded subset of

. If, for every prime

and

, we define

to be the set of all functions of the form

, where

is a phase,

are polynomials of degree at most

with integer coefficients,

, and the

are Dirichlet characters of order

, with the non-standard convention that

is undefined (instead of vanishing) at zero. Then this is a class of structured functions (again, the almost orthogonality axiom requires the Weil conjectures for curves). We declare two structured functions to be equivalent if they agree up to a constant phase on their common domain of definition. Note from the Chinese remainder theorem that the function

is then also a structured function of complexity at most

if the

are Dirichlet characters of period

(and conductor dividing

), again with the convention that

is undefined (instead of vanishing) when

.

Example 3 (Rational phases) Let

be a bounded subset of

. If, for every prime

and

, we define

to be the set of all functions of the form

, where

are polynomials of degree at most

with integer coefficients and with

monic, with the function only defined when

, then this is a class of structured functions (again, the almost orthogonality axiom requires the Weil conjectures for curves). We declare two structured functions to be equivalent if they agree up to a constant phase on their common domain of definition. Note from the Chinese remainder theorem that the function

is then also a structured function of complexity at most

and modulus

.

Example 4 (Trace weights) Let

be a bounded subset of

. We fix a prime

not in

, and we fix an embedding

of the

-adics into

. If, for every prime

and

, we define

to be the set of all functions

of the form

where

is

with at most

points removed, and

is a lisse

-adic sheaf on

that is pure of weight

and geometrically isotypic with conductor at most

(see this previous post for definitions of these terms), then this is a class of structured functions. We declare two trace weights

to be equivalent if one has

and

for some geometrically isotypic sheaves

whose geometrically irreducible components are isomorphic. The almost orthogonality now is deeper, being a consequence of Deligne’s second proof of the Weil conjectures, and also using a form of Schur’s lemma for sheaves; see Section 5 of this paper of Fouvry, Kowalski, and Michel. The integration axiom follows from Lemma 5.3 of the same paper. This class of structured functions includes the previous three classes, but also includes Kloosterman-type objects such as

(and many other exponential sums) besides. (Indeed, it basically closed under the operations of Fourier transforms, convolution, and pullback, as long as certain degenerate cases are avoided.)

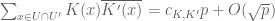

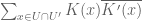

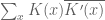

We now turn to the problem of obtaining non-trivial bounds for the expression

where ,

is a structured function of bounded complexity and modulus

, and

is a smooth function at scale

. The trivial bound here is

since one has from the divisor bound. In some cases we cannot hope to improve upon this bound; for instance, if

is a constant phase

then there is clearly no improvement available. Similarly, if

is the linear phase

, then there is no improvement in the regime

; if

is the quadratic phase

then there is no improvement in the regime

; if

is the cubic phase

then there is no improvement in the regime

; and so forth. On the other hand, we will be able to establish a van der Corput estimate which roughly speaking asserts that as long as these polynomial obstructions are avoided, and

is smooth, one gets a non-trivial gain.

We first need a lemma:

Lemma 10 (Fundamental theorem of calculus) Let

be a class of structured functions. Let

, let

, and let

be a structured function of complexity at most

with modulus

. Assume that

is sufficiently large depending on

. Let

, and suppose that there is a polynomial

of degree at most

such that

for all

for which this identity is well-defined. Then there exists a polynomial

of degree at most

such that

for all

for which this identity is well-defined.

Proof: By dilating by and using the dilation invariance of structured functions, we may assume without loss of generality that

. We can write

in terms of the binomial functions

for

(which are well-defined if

) as

for some coefficients . If we then define

then is a polynomial of degree at most

(if

) and

by Pascal’s identity. So if we multiply

by

(using the polynomial phase invariance of structured functions) we may assume without loss of generality that

, thus

. But then the claim follows from the integration axiom.

Now we can state the van der Corput estimate.

Proposition 11 (van der Corput) Let

be a class of structured functions. Let

be of polynomial size, and let

be a structured function of modulus

and complexity at most

. Let

be fixed, and let

denote the set of sufficiently large primes

dividing

with the property that there exists a polynomial

of degree at most

such that

, and let

. Then for any

of polynomial size, any factorisation

, and any coefficient sequence function

which is smooth at scale

, one has

where

,

, and

, where the sum is implicitly assumed to range over those

for which

is defined.

The parameter is technical, as is the

term; heuristically one should view this estimate as asserting that

under reasonable non-degeneracy conditions. Assuming sufficient dense divisibility and in the regime , the optimal value of the right-hand side is

, where

, which is attained when

for

and

.

Proof: We induct on , assuming that the claim has already been proven for all smaller values of

.

We may factor where

and

. Then we may write

Observe that any given ,

has magnitude

(from the divisor bound), the function

is of the form

for some

supported on an interval of length

and obeying the bounds

, and the function

is a structured function of modulus

and complexity at most

(here we use the dilation and translation invariance properties of structured functions). From this we see that to prove the proposition for a given value of

, it suffices to do so under the assumption

, in which case the objective is to prove that

The claim is trivial (from the divisor bound) with , so we may assume

, in which case we will show that

By applying a similar reduction to before we may also assume that all prime factors of are larger than some large fixed constant

, which we will assume to be sufficiently large for the arguments below to work.

We begin with the base case . In this case it will suffice to establish the bound

By completion of sums, it will suffice to show that

for all . By the Chinese remainder theorem and the divisor bound, it will suffice to show that

for all and all

. However, by the hypothesis

,

, and the claim now follows from the almost orthogonality properties of structured functions.

Now suppose that , and the claim has already been proven for smaller values of

. If

then the claim follows from the

bound, so we may assume that

, in which case we will establish

If we have , then

and the claim then follows by the induction hypothesis (concatenating and

). Similarly, if

, then

, and the claim follows from the triangle inequality. Thus we may assume that

Let . We can rewrite

as

We factor

and by the divisor bound , and so by the triangle inequality and the Cauchy-Schwarz inequality

since the summand is only non-zero when is supported on an interval of length

. This last expression may be rearranged as

The diagonal contribution can be estimated (using the pointwise bounds

) by

, which is acceptable, so it suffices to show that

We observe that is the sum of

structured functions of modulus

and complexity

, each of which the product of one of the components of

of modulus

and complexity

for all

. We can of course delete any components that vanish identically. Suppose that for one of these primes

, one of the components of the function

is equivalent to

for some polynomial

of degree at most

. Then by Lemma 10, if

is sufficiently large (larger than a fixed constant), either

, or else

is equivalent

for some polynomial

of degree at most

, but by the hypothesis

the latter case cannot occur since

is non-vanishing and

. Thus if we set

to be the product of all the primes

with this property, we see that

.

Applying the induction hypothesis, we may thus bound

by

The contribution of the first two terms to (9) is acceptable thanks to Lemma 5 of this previous post, so the only contribution remaining to control is

We may bound

The first term is dominated by the term appearing as the

summand in (9), while the contribution of the second term may be bounded using another application of Lemma 5 of this previous post and the bound

by

which is acceptable.

Remark 1 The above arguments relied on a

-version of the van der Corput

-process, and in the case of Dirichlet characters is essentially due to Graham and Ringrose (see also Heath-Brown). If we work with a class of structured functions that is closed under Fourier transforms (such as the trace weights), then the

-version of the van der Corput

-process also becomes available (in principle, at least), thus potentially giving a slightly larger range of “exponent pairs”; however this looks complicated to implement (the role of polynomial phases now needs to be replaced by a more complicated class that involves things like the Fourier transforms of polynomial phases, as well as their “antiderivatives”) and will likely only produce rather small improvements in the final numerology.

We isolate a special case of the above result:

Corollary 12 Let the notation and assumptions be as in Proposition 11 with

,

, and

-densely divisible. Then for any

, one has the bounds

and

The dependence on in the first bound can be improved, but we will not need this improvement here.

Proof: From the case of the above proposition we have

giving the first claim of the proposition.

Similarly, from the case of the above proposition we have

for any factorisation of

. As

is

-densely divisible, we may select

so that

so that

and the second claim follows.

— 3. A two-dimensional exponential sum —

We now apply the above theory to obtain a new bound on a certain two-dimensional exponential sum that will show up in the Type I estimate.

Proposition 13 Let

be a

-densely divisible squarefree integer of polynomial size for some

, let

be of polynomial size, and let

. Let

be smooth sequences at scale

respectively. Then

Here the summations are implicitly restricted to those

for which the denominator in the phase is non-zero. We also have the bound

The main term here is , which in certain regimes improves upon the bound of

that one obtains by completing the sums in the

variable but not exploiting any additional cancellation in the

variable.

Proof: We first claim that it suffices to verify the proposition when . Indeed, if we set

(where one computes the reciprocal of inside

), we see that

is

-densely divisible (thanks to Lemma 7), squarefree, and polynomial size, that

, and that

By the inclusion-exclusion formula and divisor bound, it thus suffices to show that for all , one has

where is either of the two right-hand sides in the proposition, i.e. either

or

By the divisor bound, we see that there are pairs

such that

. Thus it will suffice to show that

Making the change of variables ,

and using the

case of the proposition, we can bound the left-hand side by

and

and one verifies that these two quantities bound the two possible values of respectively.

Henceforth . Note that the above reduction also allows us to assume that

has no prime factors less than a sufficiently large fixed constant

to be chosen later. Our task is now to show that

and

From completion of sums we have

so it will suffice to show that

and

for any given . We rewrite this as

where

By the Chinese remainder theorem, this function factors as , where

and . Note that for any prime

dividing

(and thus larger than

), the rational function

is not divisible by

. From the Weil conjectures for curves this implies that

. In fact, from Deligne’s theorem (and in particular the fact that cohomology groups of sheaves are again sheaves), we have the stronger assertion that

is a sum of boundedly many trace weights at modulus

with complexity

in the sense of Example 4. (In the Grothendieck-Lefschetz trace formula, only the first cohomology

is non-trivial; the second cohomology

disappears because the rational function is not divisible by

, and the zeroth cohomology

disappears because the underlying curve is affine, although in any event the contribution of the zeroth cohomology could be absorbed into the

term in (10).) By the divisor bound, this implies that

is the sum of

trace weights at modulus

with complexity

. We can of course delete any components that vanish identically.

We claim that for any dividing

(and hence larger than

), none of the components of

are equivalent to a quadratic phase

. Assuming this claim for the moment, the required bound (10) then follows from Corollary 12. It thus suffices to verify the claim. If the claim failed, then we would have

for some algebraic integer , which is non-zero since

is equivalent to

and is non-zero. Since all non-zero algebraic integers have at least one Galois conjugate of modulus at least

, it will suffice (for

large enough) to establish that all Galois conjugates of the left-hand side of (27) are

. In other words, it suffices to establish the bound

for all . Setting

and

and concatenating parameters, it suffices to show that

whenever and

.

We now use a result of Hooley, which asserts that for any rational function of two variables and bounded degree, one has

provided that

is a geometrically generically irreducible curve (i.e. irreducible over an algebraic closure of

) and also that

is a (possibly reducible or empty) curve for any . We apply this result to the rational function

For any , it is clear that

is not identically zero, so the second condition of Hooley is satisfied. It remains to verify the first. (Thanks to Brian Conrad for fixing some errors in the argument that follows.) Suppose that the claim failed, thus

is reducible for generic

, or equivalently that the polynomial

is reducible in . Being linear in

, this polynomial

is clearly irreducible in

; since

does not lie in

, it remains irreducible in the larger ring

by Gauss’s lemma.

We now perform a technical reduction to deal with the problem that the field is not perfect. Since

involves the nonzero term

as its only

-term, over

it cannot be a constant multiple of a

-power. Hence, if it is irreducible over the separable closure

of

then it remains irreducible over the perfect closure

of

, so it suffices to check irreducibility over the separable closure.

Assuming is reducible over the separable closure, then up to constant multipliers (i.e. multiples in

) its irreducible factors in

must be Galois conjugate to each other with respect to

. Thus, none of these factors can lie in

or

, as otherwise all the factors would and hence so would their product

(a contradiction since

). Thus, the irreducible factorization over

remains an irreducible factorization in

and over

. Since

has nonzero constant term and degree at most

in either

or

, this implies that the irreducible factors of

in

are linear in both

and

, thus

for some and

. But

is visibly constant, so all

vanish and hence

, an absurdity.

— 4. Type I estimate —

We begin the proof of Theorem 4, closely following the arguments from Section 5 of this previous post or Section 2 of this previous post. One difference however will be that we will not discard the averaging as we will need it near the end of the argument. Let

be as in the theorem. We can restrict

to the range

for some sufficiently slowly decaying , since otherwise we may use the Bombieri-Vinogradov theorem (Theorem 4 from this previous post). Thus, by dyadic decomposition, we need to show that

for any fixed and for any

in the range

be a sufficiently small fixed exponent.

By Lemma 11 of this previous post, we know that for all in

outside of a small number of exceptions, we have

Specifically, the number of exceptions in the interval is

for any fixed

. The contribution of the exceptional

can be shown to be acceptable by Cauchy-Schwarz and trivial estimates (see Section 5 of this previous post), so we restrict attention to those

for which (14) holds. In particular, as

is restricted to be quadruply

-densely divisible, we may factor

with coprime and square-free, with

-densely divisible with

,

doubly

-densely divisible,and

and

Here we use the easily verified fact that , and we have also used Lemma 7 to ensure that dense divisibility is essentially preserved when transferring a factor of

from

(namely, the portion of

coming from primes up to

) to

.

By dyadic decomposition, it thus suffices to show that

for any fixed , where

obey the size conditions

Fix . We abbreviate

and

by

and

respectively, thus our task is to show that

We now split the discrepancy

as the sum of the subdiscrepancies

and

In Section 5 of this previous post, it was established (using the Bombieri-Vinogradov theorem) that

It will suffice to prove the slightly stronger statement

for all coprime to

, since if one then specialises to the case when

and averages over all primitive

we obtain (18) from the triangle inequality.

We use the dispersion method. We write the left-hand side of (19) as

for some bounded sequence . This expression may be rearranged as

so from the Cauchy-Schwarz inequality and crude estimates it suffices to show that

for any fixed , where

is a smooth coefficient sequence at scale

. Expanding out the square, it suffices to show that

where is subject to the same constraints as

(thus

and

for

), and

is some quantity that is independent of

.

Observe that must be coprime to

and

coprime to

, with

, to have a non-zero contribution to (21). We then rearrange the left-hand side as

note that these inverses in the various rings ,

,

are well-defined thanks to the coprimality hypotheses.

We may write for some

. By the triangle inequality, and relabeling

as

, it thus suffices to show that

for some independent of

,

.

At this stage in previous posts we isolated the coprime case as the dominant case, using a controlled multiplicity hypothesis to deal with the non-coprime case. Here, we will carry the non-coprime case with us for a little longer so as not to rely on a controlled multiplicity hypothesis; this introduces some additional factors of

into the analysis but they should be ignored on a first reading.

Applying completion of sums (Section 2 from this previous post), we can express the left-hand side of (22) as a main term

where .

Let us first deal with the main term (23). The contribution of the coprime case does not depend on

and can thus be absorbed into the

term. Now we consider the contribution of the non-coprime case when

. We may estimate the contribution of this case by

We may estimate by

. We just estimate the contribution of

, as the other case is treated similarly (after shifting

by

). We rearrange this contribution as

The summation is

. Evaluating the

summations, we obtain a bound of

Since and

, we have

, and so we may evaluate the

summation as

By (16) and (15), this is as required.

It remains to control (24). We may assume that , as the claim is trivial otherwise. It will suffice to obtain the bound

Using (25), it will suffice to show that

for each .

We now work with a single . To proceed further, we write

and

,

; it then suffices to show that

for each .

Henceforth we work with a single choice of . We pause to verify the relationship

From (25) and (17), this follows from the assertion that

but this follows from (4), (5) if is sufficiently small depending on

.

As is

-densely divisible, we may now factor

where

and thus

Factoring out , we may then write

where

and

By dyadic decomposition, it thus suffices to show that

whenever are such that

and

and

We rearrange this estimate as

for some bounded sequence which is only non-zero when

By Cauchy-Schwarz and crude estimates, it then suffices to show that

where is a coefficient sequence at scale

. The left-hand side may be bounded by

The contribution of the diagonal case is

by the divisor bound, which is acceptable since

. Thus it suffices to control the off-diagonal case

.

Note that need to lie in

for the summand to be non-vanishing. We use the following elementary lemma:

Proof: Setting , it suffices to show that

for each fixed . Since

it suffices to show that

for all coprime of polynomial size.

If divides both

and

, then for each

dividing

,

must divide one of

,

, or

. Thus we can factor

and

,

,

,

, which implies that

. For fixed

, we see from the divisor bound that there are

choices for

. Fixing

, we see that

have magnitude

, so there are

possible pairs of

whose difference is non-zero and divisible by

. The claim then follows from the divisor bound.

From this lemma, we see that for each fixed choice of in the above sum, it suffices to show that

Thus far the arguments have been essentially identical to that in the previous post, except that we have retained the averaging (and crucially, this averaging is inside the absolute values rather than outside). We now exploit the doubly dense divisibility of

to factor

where

and is

-densely divisible; this is admissible as long as

which are conditions which we will verify later. By dyadic decomposition, and the triangle inequality in , it thus suffices to show that

and all -densely divisible

, where

is a smooth non-negative coefficient sequence at scale

, where

Note that if the factors are to be non-vanishing,

,

are to be

-densely divisible, and so

is

-densely divisible as well thanks to Lemma 7.

We write the above estimate as

where

We now perform Weyl differencing. Set , then

and we can rewrite

and so it suffices to show that

By Cauchy-Schwarz, it suffices to show that

We restrict and

to individual residue classes

and

; it then suffices to show that

From (26) we see that the quantity vanishes unless

is square-free, and in that case it takes the form

when restricted to ,

, where

are quantities that may depend on

but are independent of

with

and

adopting the convention that vanishes when

, and

is a bounded quantity depending on

but otherwise independent of

. If we let

be such that

and

, and let

, we can simplify the above as

and note that

We thus have

and therefore

It thus suffices to show that

Shifting by

, then relabeling

as

, it suffices to show that

The contribution of the diagonal case is

, which is acceptable thanks to (29) (which implies

; we have a factor of

to spare which we will simply discard). It thus suffices to control the off-diagonal case

. It then suffices to show that

for each non-zero .

Performing a Taylor expansion, we can write

for any fixed , where

Absorbing the factor into

, and taking

large enough, it suffices to show that

for coefficient sequences which are smooth at scales

respectively. But by applying Proposition 13, and making the substitutions

,

, we may bound the left-hand side by

and

Using the former bound when and the latter bound when

, we obtain the upper bound of

Since

and , it suffices to show that

Since , we can replace

by

. The above bounds then simplify to

From (29) we already have . Also, from (31) we have

and conversely

Inserting these bounds and discarding the remaining powers of , we reduce to

and

and

We rearrange these as

Applying the bounds on from (30), these reduce to

The third bound follows since , and so may be dropped. We also recall the two bounds assumed from (29):

The bound (35) is implied by (34) and may thus be dropped. We have

so the remaining three bounds may be rewritten as

Since , these three bounds reduce to

From (16) we have , so the third bound is automatic, and the other two bounds become

Since , these two bounds become

which we rearrange as

and the claim follows.

63 comments

Comments feed for this article

28 July, 2013 at 9:16 am

Terence Tao

While writing the above post, I got the strong sense that any further pushing of our current methods will become increasingly complicated, and lead to increasingly smaller returns; each new appication of Cauchy-Schwartz, in particular, can make the main term in an estimate somewhat better behaved, but at the cost of making a lot of error terms worse (and more numerous), and it becomes increasingly tricky to ensure that all these error terms remain dominated by the main term. When the time comes to start writing up the results of this project (which will probably begin fairly soon), we may have to consider a tradeoff between simplicity of exposition and the optimality of the results; would it be worth it, for instance, to add ten pages to the argument in order to reduce H by 10%?

One nice thing about the basic structure of Zhang’s argument, though, is that it is very modular; one can, for instance, swap in one Type I estimate for a fancier one and keep everything else unchanged. (For instance, as noted before, we can swap out the Type III estimates entirely, and revert to the previous Type I estimate, thus eliminating the need to use Deligne’s theorems.) So it should be relatively easy to designate one set of estimates as the “primary” version of the argument, and then remark on various ways to either strengthen the bounds (at the cost of increased complexity) or simplify the proof (at the cost of worse bounds).

28 July, 2013 at 11:04 am

Armin

What this project is aiming for is different so, perhaps it is asking too much, but it would be nice to see the simplest possible argument no matter the quality of the estimate. Did you make any progress in this sense compared to Zhang’s original proof?

28 July, 2013 at 11:07 am

andrescaicedo

I would think the best currently available bound should be included and proved in the paper.

That said, the write-up could take advantage of the modularity of the result, perhaps starting with the argument in https://terrytao.wordpress.com/2013/06/30/bounded-gaps-between-primes-polymath8-a-progress-report/#comment-236995 and then have later sections improve the estimates that came before, highlighting the needed adjustments in the argument. (This organic approach would also make clear how the results developed through the project, which some readers may find just as fascinating as the proofs themselves.)

This makes the paper longer, of course, and harder to write, but perhaps most useful, as readers could stop at the end of essentially any given section, and walk out with a complete proof, a decent estimate, and an idea of what details of the argument would admit further improving.

28 July, 2013 at 12:26 pm

Terence Tao

I like the idea of starting with the “minimal” proof needed to obtain a qualitative version of Zhang’s theorem (B[H] for some unspecified H) and then replacing things with fancier estimates later. Let me try to lay out what that “minimal” proof looks like.

1. First we need to show that![DHL[k_0,2]](https://s0.wp.com/latex.php?latex=DHL%5Bk_0%2C2%5D&bg=ffffff&fg=545454&s=0&c=20201002) for some

for some  implies

implies ![B[H]](https://s0.wp.com/latex.php?latex=B%5BH%5D&bg=ffffff&fg=545454&s=0&c=20201002) for some finite H. This is easy; we can just follow Zhang here and observe that for any

for some finite H. This is easy; we can just follow Zhang here and observe that for any  , the first

, the first  primes past

primes past  form an admissible

form an admissible  -tuple.

-tuple.

2. Then we need to show that![MPZ[\varpi,\delta]](https://s0.wp.com/latex.php?latex=MPZ%5B%5Cvarpi%2C%5Cdelta%5D&bg=ffffff&fg=545454&s=0&c=20201002) for some

for some  implies

implies ![DHL[k_0,2]](https://s0.wp.com/latex.php?latex=DHL%5Bk_0%2C2%5D&bg=ffffff&fg=545454&s=0&c=20201002) for some sufficiently large

for some sufficiently large  (we can initially work with the original formulation of MPZ involving smoothness, rather than the fancier versions involving dense divisibility). This is not too bad; the elementary Selberg sieve in https://terrytao.wordpress.com/2013/06/08/the-elementary-selberg-sieve-and-bounded-prime-gaps/ (using the “first-generation” weights

(we can initially work with the original formulation of MPZ involving smoothness, rather than the fancier versions involving dense divisibility). This is not too bad; the elementary Selberg sieve in https://terrytao.wordpress.com/2013/06/08/the-elementary-selberg-sieve-and-bounded-prime-gaps/ (using the “first-generation” weights  from the original Goldston-Pintz-Yildirim paper (or from Zhang’s paper) rather than the optimised Bessel weight) can do this after a certain lengthy amount of elementary number theory (no contour integration is required). (For the “minimal” proof we can set

from the original Goldston-Pintz-Yildirim paper (or from Zhang’s paper) rather than the optimised Bessel weight) can do this after a certain lengthy amount of elementary number theory (no contour integration is required). (For the “minimal” proof we can set  as Zhang does, although this does not actually lead to any significant simplifications in the argument.) Alternatively one could simply cite the paper of Motohashi-Pintz for this implication, although the implication is not completely explicit in that paper.

as Zhang does, although this does not actually lead to any significant simplifications in the argument.) Alternatively one could simply cite the paper of Motohashi-Pintz for this implication, although the implication is not completely explicit in that paper.

3. Then we need to show that![MPZ[\varpi,\delta]](https://s0.wp.com/latex.php?latex=MPZ%5B%5Cvarpi%2C%5Cdelta%5D&bg=ffffff&fg=545454&s=0&c=20201002) follows from Type I, Type II, and Type III estimates. Actually it turns out that we can do everything using a sufficiently good Type I/II estimate (one which can allow

follows from Type I, Type II, and Type III estimates. Actually it turns out that we can do everything using a sufficiently good Type I/II estimate (one which can allow  to be as large as 1/6), removing the need for Type III estimates. In this case, we can also use Vaughan’s identity rather than the Heath-Brown identity, which is not a major simplification but may be a bit more familiar to some readers (and allows one to avoid the combinatorial lemma on subset sums, although this lemma is rather easy). One still needs to use some sort of dyadic decomposition here (actually for technical reasons we use a finer-than-dyadic decomposition), but this is rather easy and standard.

to be as large as 1/6), removing the need for Type III estimates. In this case, we can also use Vaughan’s identity rather than the Heath-Brown identity, which is not a major simplification but may be a bit more familiar to some readers (and allows one to avoid the combinatorial lemma on subset sums, although this lemma is rather easy). One still needs to use some sort of dyadic decomposition here (actually for technical reasons we use a finer-than-dyadic decomposition), but this is rather easy and standard.

4. To prove a type I/II estimate, it turns out that the Type II argument can be used to cover both cases. (The Type I and Type II arguments are very similar, except at one stage where Cauchy-Schwarz is applied slightly differently in the two cases.) The ingredients needed here are

4.1. The Bombieri-Vinogradov theorem (which in turn is proven using the large sieve inequality, a Fourier decomposition into Dirichlet characters, and the Siegel-Walfisz theorem). This is a little lengthy, but completely standard, and we can cite for instance this paper of Bombieri-Friedlander-Iwaniec for the precise version of Bombieri-Vinogradov that we need.

4.2. The observation that most large numbers do not have an enormous number of small prime factors (less than

do not have an enormous number of small prime factors (less than  ); this is elementary but a little technical.

); this is elementary but a little technical.

4.3 A moderately complicated but elementary sequence of Cauchy-Schwarz and completion-of-sums type manipulations (based on the dispersion method), together with some standard bounds on mean values of divisor functions etc. (We have a minor simplification here over the textbook use of the dispersion method as used by Zhang and others, in that instead of having to estimate three different sums , one only needs to control an

, one only needs to control an  type sum, although this is not a major simplification since

type sum, although this is not a major simplification since  is the hardest of the three sums to control anyway. There is also a technical tradeoff: early versions of the Type I/II estimates (including Zhang’s) required a certain “controlled multiplicity” hypothesis on the congruence classes involved (in order to reduce to the case when two moduli

is the hardest of the three sums to control anyway. There is also a technical tradeoff: early versions of the Type I/II estimates (including Zhang’s) required a certain “controlled multiplicity” hypothesis on the congruence classes involved (in order to reduce to the case when two moduli  are coprime); we’ve now removed this hypothesis, but at the (minor) cost of having to carry around an additional parameter

are coprime); we’ve now removed this hypothesis, but at the (minor) cost of having to carry around an additional parameter  in the analysis (measuring the gcd of

in the analysis (measuring the gcd of  ). I’m not sure at this point which of these methods would be better for the “minimal” proof.

). I’m not sure at this point which of these methods would be better for the “minimal” proof.

4.4 A van der Corput estimate that gives a power saving for sums such as for

for  a small power of the smooth modulus

a small power of the smooth modulus  (I think with

(I think with  and using the Type II argument, we need a power saving with

and using the Type II argument, we need a power saving with  as small as

as small as  , which can be achieved using a double van der Corput + the Weil conjectures for curves. By splitting into Type I and Type II I think we only need a power saving for

, which can be achieved using a double van der Corput + the Weil conjectures for curves. By splitting into Type I and Type II I think we only need a power saving for  as small as

as small as  , which requires only one van der Corput + Weil conjectures for curves. (One can do a little better than this by splitting up the moduli more optimally, which is what one does for the most advanced Type I estimates, but perhaps we can avoid this for the “minimal” argument.)

, which requires only one van der Corput + Weil conjectures for curves. (One can do a little better than this by splitting up the moduli more optimally, which is what one does for the most advanced Type I estimates, but perhaps we can avoid this for the “minimal” argument.)

The van der Corput estimate is proven by an elementary application of Cauchy-Schwarz (following an old paper of Graham and Ringrose), together with the Weil conjectures for curves which in particular gives square root cancellation for sums of the form . This bound can simply be cited in the literature, but actually for the purposes of getting a qualitative result, we don’t need square root cancellation; any power saving should suffice. I had claimed earlier that an elementary argument of Kloosterman gives a non-trivial power saving for any exponential sum

. This bound can simply be cited in the literature, but actually for the purposes of getting a qualitative result, we don’t need square root cancellation; any power saving should suffice. I had claimed earlier that an elementary argument of Kloosterman gives a non-trivial power saving for any exponential sum  with

with non-constant, but actually, now that I look at it more carefully, I only see how to make Kloosterman’s argument work with the Kloosterman sum

non-constant, but actually, now that I look at it more carefully, I only see how to make Kloosterman’s argument work with the Kloosterman sum  as it exploits a certain dilation symmetry in this sum which is not present in the general case. But presumably an elementary argument should be available (which would not need the Weil conjectures for curves; this can be proven using the elementary method of Stepanov, but even this requires the Riemann-Roch theorem and so is not completely elementary.)

as it exploits a certain dilation symmetry in this sum which is not present in the general case. But presumably an elementary argument should be available (which would not need the Weil conjectures for curves; this can be proven using the elementary method of Stepanov, but even this requires the Riemann-Roch theorem and so is not completely elementary.)

28 July, 2013 at 12:50 pm

Armin

One of the most inspiring aspects of this project was that it has brought to light so many different corners of various fields and linked them in such a beautiful way. Since you already have a number of experts involved in this project, why waste an opportunity to write a completely self-contained textbook? Each person will probably write a chapter or two. This would better serve future generations of mathematicians than a technical paper improving upon Zhang’s result. Right now you have to be a student of one of these experts to learn this material properly, but you have a chance to change this.

29 July, 2013 at 6:40 am

Gergely Harcos

I think the aim of this project would be best fulfilled by a research paper containing the optimal result. The extra 10 pages would be amply justified by the 10% reduction in . On the other hand, it would also be very useful to compile a set of lecture notes (for lecturing purposes) which are self-contained, avoid Deligne’s theorem, and yield a reasonably small

. On the other hand, it would also be very useful to compile a set of lecture notes (for lecturing purposes) which are self-contained, avoid Deligne’s theorem, and yield a reasonably small  . Finally, these lecture notes could be expanded into a textbook in the farther future.

. Finally, these lecture notes could be expanded into a textbook in the farther future.

29 July, 2013 at 7:17 am

Armin

The optimal result is H=2, and readers of this research paper are probably the same experts who already understand the arguments well enough following developments on this blog. The only real purpose of writing a technical paper is to create a template for the future book, and test run the collaborative process. For that reason, I certainly hope that Terry Tao will not be the one writing 95% of the paper. However, I am only an observer and I wish all the best for everyone involved in this project, regardless of the final product.

29 July, 2013 at 9:57 am

Gergely Harcos

I disagree that the optimal result is , perhaps the twin prime conjecture is false! By optimal result I meant the smallest

, perhaps the twin prime conjecture is false! By optimal result I meant the smallest  that the project was able to achieve. I also disagree that “The only real purpose of writing a technical paper is to create a template for the future book, and test run the collaborative process”. The aim of this project was to produce an

that the project was able to achieve. I also disagree that “The only real purpose of writing a technical paper is to create a template for the future book, and test run the collaborative process”. The aim of this project was to produce an  that is as small as possible, and in my opinion the purpose of writing the paper is to publish the result and have it checked by referees as any other theorem in mathematics. Not all the experts followed the developments on this blog, and in fact some future experts are not even born yet! Publishing a weaker result with a simpler proof, or writing a textbook should be regarded as a secondary goal or outcome of the project.

that is as small as possible, and in my opinion the purpose of writing the paper is to publish the result and have it checked by referees as any other theorem in mathematics. Not all the experts followed the developments on this blog, and in fact some future experts are not even born yet! Publishing a weaker result with a simpler proof, or writing a textbook should be regarded as a secondary goal or outcome of the project.

29 July, 2013 at 10:34 am

Armin

Those unborn experts is my deepest concern. Without commitment, I fear that after the initial excitement you will take the easy way out and abandon them after 20 pages of technical summary, and will not bear the project to its full term.

29 July, 2013 at 10:55 am

Gergely Harcos

I am not following you. By unborn experts I meant that a paper is written not only for the present, but also for the future. So it is necessary to produce a decent paper that will be stored in hundreds of libraries and websites (not only on this blog) just as any other scientific paper. On the other hand, the full proof is already written up in detail on this blog, so producing the paper really means to compile the material without the twists and turns that are characteristic in the research process (and nicely reflected on this blog), and of course adding the usual introduction to put the results into context. The final paper will contain a full proof of the stated result, just as any other research paper in mathematics.

29 July, 2013 at 12:41 pm

Mark Bennet

There seem to me to be three possible outcomes, which maybe merit two papers. The first is as elementary a proof as is currently possible of a finite prime gap which occurs infinitely often. The second is the absolute best we can do to prove a minimal prime gap with the techniques currently to hand. The third, which gives a route-map fpr the future, is a record of what has been tried and hasn’t worked, and some intelligent commentary on where new ideas may be needed. ie “if you do better than us with these ideas you might get to … & if you want to do better than that you need an idea we haven’t thought of yet.”

I think the intensity of this project conceals how rare it has been for these three questions to be open and viable in the timescale achieved here.

29 July, 2013 at 3:05 pm

Terence Tao

I think that, thanks to the modular structure of the argument, it should be possible to at least partially achieve the first and third of these objectives in a paper ostensibly focused on the second objective, since we can easily present multiple versions (including some conjectural ones that we can’t actually achieve yet) of each modular component of the argument.

It’s also not necessary for everything to go into a traditionally published paper; we can have a traditional refereed paper containing the best results we can currently establish, together with some additional blog posts, e.g. one post for the simplest possible proof and another for speculative improvements.

As for lecture notes and textbooks, my feeling here is that it is best to wait until the subject matures more. There will be more developments and followups to Zhang’s paper than the current polymath project (indeed, there have already been several other research papers that have built upon Zhang’s result outside of the polymath8 project) and it may take some time to get the proper perspective to digest all these results. (And actually it may be best for someone who is not as directly involved with the research to write a proper summary, cf. for instance Soundararajan’s Bull. AMS survey of the previous Goldston-Pintz-Yildirim breakthrough on prime gaps.)

29 July, 2013 at 3:00 am

James Hilferty

Dear Terry is there any truth in the rummer that you are a “pure mathematician” and not a practical one. I was having a discussion with my niece’s husband about the usefulness of the Gaussian Normal Distribution Curve (he is a professor of mathematics) and I think that I ran rings around and then he suggested without even a blush, that it was only a Hypothesis; and about the Central Limit Theory (which I believe in) and then that you are a Pure (not practical) mathematician; and I asked the obvious question “what’s the point of maths if it doesn’t work?

29 July, 2013 at 12:12 pm

Eytan Paldi

Perhaps (for the next improvement) it is better to reduce the lower bound on than improving type I estimate in theorem 4?

than improving type I estimate in theorem 4?

30 July, 2013 at 6:27 am

Aubrey de Grey

That doesn’t seem to be the situation at this point, as I understand it. Not only is there an apparently very robust obstacle to reducing the lower bound on sigma (in the form of an inability to handle efficiently what are being termed Type V sums), but also there is not all that much out there in terms of avenues for improving the Type III estimate, which would be needed in order to get more than a rather small increase in varpi (to around 1/82 from the current 1/85) even if sigma were reduced. Conversely, a really big breakthrough for Type I could potentially allow a large varpi even with sigma up at the 1/6 boundary that obviates Type III entirely; this avenue becomes even more attractive when one bears in mind that there is currently a whole cluster of promising-looking options for improving the current Type II bound of varpi=1/68, which is independent of sigma.

Whether there is actually any real chance of significantly improving the Type I estimate is a different matter, of course, as Terry has noted above. Also, it might turn out that the Type III estimate can be greatly improved after all, in which case the rationale for hacking at the lower bound on sigma would correspondingly increase. Maybe this means it’s worth briefly exploring Type III again now, even though it’s currently irrelevant, just to get a feel for whether the bulk of future effort would be best focused on Type I or on sigma?

29 July, 2013 at 10:54 pm

Stephan Goldammer (@StephGoldammer)

Math is easy.

30 July, 2013 at 6:48 am

Kamran Alam Khan

Reblogged this on Observer.

30 July, 2013 at 7:54 pm

Terence Tao

I’ve been asked a few times what the best value of H we can get without Deligne’s theory (relying just on the Weil conjectures), so I am recording the numerology here.

Without Deligne’s theorems, we lose the Type III estimates and so need to be at least

to be at least  (rather than

(rather than  ). Also, the most advanced Type I estimate (the “Level 5” one given in this blog post) also relies on Deligne’s theory, and so we must fall back to the previous Type I estimate (confusingly numbered “Level 6”) from this previous post, which holds (for doubly densely divisible moduli) for

). Also, the most advanced Type I estimate (the “Level 5” one given in this blog post) also relies on Deligne’s theory, and so we must fall back to the previous Type I estimate (confusingly numbered “Level 6”) from this previous post, which holds (for doubly densely divisible moduli) for  , which when

, which when  becomes

becomes  . There are also the Type II sums, but these hold for

. There are also the Type II sums, but these hold for  without Deligne’s theorems and so are not dominant.

without Deligne’s theorems and so are not dominant.

So one has to optimise subject to

subject to  , using the doubly densely divisible value for

, using the doubly densely divisible value for  , namely

, namely

(note that in previous posts the erroneous value of was used instead). Setting

was used instead). Setting  ,

,  ,

,  I can reach

I can reach  (and hence, by the prime tuples website,

(and hence, by the prime tuples website,  ); this can probably be optimised a little more. So currently Deligne’s theorems are giving a factor of three improvement or so. :)

); this can probably be optimised a little more. So currently Deligne’s theorems are giving a factor of three improvement or so. :)

31 July, 2013 at 3:53 am

Aubrey de Grey

Thank you! Out of interest: is it still the case that, at the cost of a further numerical hit from iterating vdC, one can also avoid the Weil conjectures, as you intimated a few weeks ago (replacing q^{1/2} by q^{3/4} ), or is that possibility precluded at this point by your concluding paragraph in the sketch of the minimal proof a few days ago? If it’s not precluded, can one yet say what minimal H would currently result in that case?

31 July, 2013 at 8:03 am

Terence Tao

I asked a question on MathOverflow about this last point (at http://mathoverflow.net/questions/138193/is-there-a-cheap-proof-of-power-savings-for-exponential-sums-over-finite-fields ) but there is still no definitive answer on that point yet. If we do somehow get a cheap bound of for rational exponential sums (a typical thing we would need is a bound on

for rational exponential sums (a typical thing we would need is a bound on  for non-zero

for non-zero  ), this wouldn’t increase the number of van der Corput’s needed, but it would roughly speaking halve the value of

), this wouldn’t increase the number of van der Corput’s needed, but it would roughly speaking halve the value of  obtained as a result. (Basically, after all the Cauchy-Schwarz, one has to gain something like

obtained as a result. (Basically, after all the Cauchy-Schwarz, one has to gain something like  or

or  in the exponential sum over the trivial bound; Weil lets you save

in the exponential sum over the trivial bound; Weil lets you save  , but Kloosterman only saves

, but Kloosterman only saves  .) Halving

.) Halving  roughly corresponds to multiplying

roughly corresponds to multiplying  by

by  , and

, and  would increase by a little more than that, so we’d be looking at

would increase by a little more than that, so we’d be looking at  in the 50K range or so.

in the 50K range or so.

30 July, 2013 at 11:23 pm

Emmanuel Kowalski

I think example 4 (“trace weights”) might not, literally, work with your definition of structured class. The issue is the distinction between geometrically irreducible and arithmetically irreducible sheaves (in my papers with Fouvry and Michel, this explains why we work with geometrically isotypic sheaves instead of irreducible ones).

But this is relatively technical, and I am sure that there is no problem with the specific weights used for bounded gaps…

31 July, 2013 at 8:17 am

Terence Tao

Hmm, this is a subtlety that I did not previously appreciate, and it may complicate the axiomatisation I was hoping to use (in order not to have to deal with specific sheaves). Specifically, if one works with geometric isotypic sheaves only, then one loses the normalisation axiom; the (square of the ) L^2 norm of a trace weight is no longer , but instead

, but instead  for some algebraic integer

for some algebraic integer  (I think one can’t assume it to be a natural number, though I would like it if we could somehow prevent

(I think one can’t assume it to be a natural number, though I would like it if we could somehow prevent  from getting too small). As such, if two trace weights correlate, then they do not necessarily agree up to a phase, but instead could agree up to a more general algebraic number. In the specific application to the q-van der Corput process we are correlating trace weights which are translates of each other, so we probably can recover the phase property (at least if we have this lower bound on

from getting too small). As such, if two trace weights correlate, then they do not necessarily agree up to a phase, but instead could agree up to a more general algebraic number. In the specific application to the q-van der Corput process we are correlating trace weights which are translates of each other, so we probably can recover the phase property (at least if we have this lower bound on  ).

).

I’ll have to think about how to fix this in a non-messy fashion…

[ADDED LATER: I think I can recover most of what is needed if one works over all embeddings of into

into  rather than just a fixed embedding, so that one never just works with a single algebraic integer, but all Galois conjugates of that integer (since at least one of them is guaranteed to be of magnitude at least one. Still working out the details…]

rather than just a fixed embedding, so that one never just works with a single algebraic integer, but all Galois conjugates of that integer (since at least one of them is guaranteed to be of magnitude at least one. Still working out the details…]

31 July, 2013 at 9:52 am

Terence Tao

OK, I think I repaired the problem. Trace weights are now defined using geometrically isotypic sheaves instead of geometrically irreducible ones; also for technical reasons I have to exclude the zero function 0 from this class. I claim that the almost orthogonality relation (in the regime when is large compared to all the conductors) now takes the form

is large compared to all the conductors) now takes the form  , where

, where  are trace weights of bounded conductor defined on

are trace weights of bounded conductor defined on  respectively, and

respectively, and  is an algebraic integer (of potentially unbounded height and perhaps very close to zero in the Archimedean sense, e.g.

is an algebraic integer (of potentially unbounded height and perhaps very close to zero in the Archimedean sense, e.g.  ), which is non-vanishing if and only if the geometrically irreducible representations associated to

), which is non-vanishing if and only if the geometrically irreducible representations associated to  are isomorphic. (It is to get the “if” direction – which allows one to detect non-isomorphism through correlation – that I need to exclude 0 as a trace weight.) Furthermore, all Galois conjugates of the

are isomorphic. (It is to get the “if” direction – which allows one to detect non-isomorphism through correlation – that I need to exclude 0 as a trace weight.) Furthermore, all Galois conjugates of the  error remain of size

error remain of size  . In particular, I claim that if all Galois conjugates of

. In particular, I claim that if all Galois conjugates of  are

are  , then

, then  do not have common geometrically irreducible components. This allows us to demonstrate that the sheaves we need do not contain quadratic phase components, by reducing matters to bounding (all Galois conjugates of) a two-dimensional exponential sum which we can do by Hooley’s argument.

do not have common geometrically irreducible components. This allows us to demonstrate that the sheaves we need do not contain quadratic phase components, by reducing matters to bounding (all Galois conjugates of) a two-dimensional exponential sum which we can do by Hooley’s argument.

31 July, 2013 at 12:03 pm

Terence Tao

A slight correction: there is a complication due to the fact that even if are non-zero and come from geometrically isotypic sheaves

are non-zero and come from geometrically isotypic sheaves  with isomorphic geometric components, it is possible for the trace of Frobenius to cancel out to zero on the geometrically trivial component of

with isomorphic geometric components, it is possible for the trace of Frobenius to cancel out to zero on the geometrically trivial component of  , so one cannot quite use the correlation

, so one cannot quite use the correlation  to detect isomorphism of the geometric components in general. However, I don’t think this can occur when one of the sheaves is rank one, which is the case we care about (specifically, one of the sheaves will be the pullback of an Artin-Schreier sheaf by a polynomial).

to detect isomorphism of the geometric components in general. However, I don’t think this can occur when one of the sheaves is rank one, which is the case we care about (specifically, one of the sheaves will be the pullback of an Artin-Schreier sheaf by a polynomial).

1 August, 2013 at 3:37 am

Philippe Michel

In addition to what Emmanuel said, in order to insure that there no “accidental cancellation” one can compute the sum over all extension (say) of

(say) of  (which is possible here of course) the reason being that if

(which is possible here of course) the reason being that if  are complex numbers of modulus one,

are complex numbers of modulus one,  .

.

31 July, 2013 at 10:17 pm

Emmanuel Kowalski

The way we (i.e., FKM) handle this is to work with representatives of arithmetically irreducible, geometrically isotypic, weight 0 (middle extension) sheaves on P^1/F_p modulo geometric isomorphism.

Functions in such a set strongly quasi-orthogonal; when K=K’, the L^2 norm is about n^2, where n is the number of copies of the geometrically irreducible component of the sheaf.

They suffice to describe, e.g., general products, because the only missing (weight 0, etc) arithmetically irreducible sheaves are induced from P^1/F_q for some finite extension F_q/F_p, and the trace function of such an induced sheaf is zero.

(See, e.g., our paper on inverse theorems for Gowers norms of trace functions

Click to access gowers-prime-fields.pdf

to appear in Math. Proc. Cambridge Phil. Soc., especially section 5.)

I am not sure how good an axiomatiization one can really hope for (to my mind, this is somewhat similar to issues with the Selberg class compared with automorphic forms). For instance, one might want to add stabilitiy under the Fourier transform, and this adds further complexity; convolution (especially multiplicative convolution) is even trickier.

1 August, 2013 at 9:10 am

Terence Tao

Thanks for this! Yes, I have found Section 5 of your Gowers norm paper to be very helpful, thanks.

My motivation in trying to axiomatise things is not so much to try to get a complete axiomatisation of the trace weight functions (which is probably hopeless at this time, as you point out), but rather to try to “black box” all the information about trace weights that are needed for the application to bounded gaps between primes, for the benefit of readers who are not familiar with the trace weight formalism. I’ve tried to incorporate everything we actually use about trace weights into Definition 9 of this blog post; there is one additional thing we need beyond this, which is that functions of the form are sums of a bounded number of isotypic trace weights, where

are sums of a bounded number of isotypic trace weights, where  is a rational function of bounded degree such that

is a rational function of bounded degree such that  is not constant for any

is not constant for any  . (I think this follows from Deligne’s theorem and the Grothendieck-Lefschetz formula if one interprets the middle cohomology associated to the exponential sum

. (I think this follows from Deligne’s theorem and the Grothendieck-Lefschetz formula if one interprets the middle cohomology associated to the exponential sum  itself as a sheaf.)

itself as a sheaf.)

For working over , I can see that picking one representative modulo geometric isomorphism of each isotypic trace weight works well as one gets the nice quasi-orthogonality property over

, I can see that picking one representative modulo geometric isomorphism of each isotypic trace weight works well as one gets the nice quasi-orthogonality property over  as a consequence. But I think I have to keep all coefficients algebraic integers in order to avoid cancellation issues (though, as Philippe points out, one could escape this by working with the companion sums instead, but this is much harder to “black box”, and would look quite a circuitous way to estimate an exponential sum over

as a consequence. But I think I have to keep all coefficients algebraic integers in order to avoid cancellation issues (though, as Philippe points out, one could escape this by working with the companion sums instead, but this is much harder to “black box”, and would look quite a circuitous way to estimate an exponential sum over  to readers not familiar with how the Weil conjectures are proven and used). Specifically, I need to show that the above trace weight

to readers not familiar with how the Weil conjectures are proven and used). Specifically, I need to show that the above trace weight  is associated to a sheaf does not contain any component that is the pullback of an Artin-Schreier sheaf by a quadratic polynomial. By decomposing

is associated to a sheaf does not contain any component that is the pullback of an Artin-Schreier sheaf by a quadratic polynomial. By decomposing  into components (but not working with a fixed representative in each geometric isomorphism class), I think I can show that the only way the above assertion fails is if there is a quadratic phase whose inner product with

into components (but not working with a fixed representative in each geometric isomorphism class), I think I can show that the only way the above assertion fails is if there is a quadratic phase whose inner product with  is of the form

is of the form  for some non-zero algebraic integer

for some non-zero algebraic integer  , where all Galois conjugates of the error term

, where all Galois conjugates of the error term  are also

are also  . Now this algebraic integer may be very small in the Archimedean sense (due to “unwanted cancellation”), but at least one Galois conjugate of it will be large, so one can win as long as one can show that all Galois conjugates of the inner product of

. Now this algebraic integer may be very small in the Archimedean sense (due to “unwanted cancellation”), but at least one Galois conjugate of it will be large, so one can win as long as one can show that all Galois conjugates of the inner product of  with a quadratic phase are