This is the eighth “research” thread of the Polymath15 project to upper bound the de Bruijn-Newman constant \(\Lambda\), continuing this post. Discussion of the project of a non-research nature can continue for now in the existing proposal thread. Progress will be summarised at this Polymath wiki page.

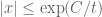

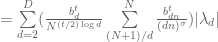

Significant progress has been made since the last update; by implementing the “barrier” method to establish zero free regions for by leveraging the extensive existing numerical verification of the Riemann hypothesis (which establishes zero free regions for

), we have been able to improve our upper bound on

from 0.48 to 0.28. Furthermore, there appears to be a bit of further room to improve the bounds further by tweaking the parameters

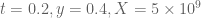

used in the argument (we are currently using

); the most recent idea is to try to use exponential sum estimates to improve the bounds on the derivative of the approximation to

that is used in the barrier method, which currently is the most computationally intensive step of the argument.

114 comments

17 April, 2018 at 3:52 pm

Terence Tao

I have reorganised the writeup a bit to try to put the most interesting results in the introduction. Also I have written down a bound for both the x,y and t derivatives of the quantity , which in the writeup is now called . These should presumably be useful in the barrier part of the argument. I like KM’s idea of using some exponential sum estimates to do even better; the estimates in the paper of Cheng and Graham aren’t too fearsome, and it may be that all one needs to do is combine them with summation by parts to obtain reasonable estimates. The net savings could potentially be on the order of  which could be significant at the scales we are considering (in principle we can place our barrier as far out as ). I’ll take a look at it.

UPDATE: there is also some followup work to the Cheng-Graham paper by various authors which may also have some useable estimates in this regard.

18 April, 2018 at 8:35 am

Anonymous

citizen scientists don’t have mathscinet

18 April, 2018 at 9:34 am

Anonymous

See e.g. the recent paper by Platt and Trudgian (1402.3953.pdf).

18 April, 2018 at 8:45 am

KM

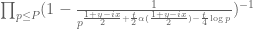

It seems the t/4 factor in the d/dt bound formula should be 1/4. Also, I was facing an authentication wall with the mathscinet link, so tried Google Scholar instead (link), which provides links to many of the followup papers.

[Corrected, thanks – T.]

21 April, 2018 at 5:47 am

Bill Bauer

There may be a sign error in the denominator of definition (16), γ. It currently reads

(16) γ(x+iy) := Mₜ(t,(1-y+i*x)/2)/Mₜ(t,(1+y-i*x)/2)

It should, perhaps, read

(16) γ(x+iy) := Mₜ(t,(1-y+i*x)/2)/Mₜ(t,(1+y+i*x)/2)

The mean value theorem, used in the proof of Proposition 6.6 (estimates), suggests that log|Mₜ(t,(σ+i*x)/2)| should be a function of σ only, which it would not be if the signs of ix were different in numerator and denominator.

Apologies if I’m missing something obvious. As far as I’ve checked computationally, the inequality seems to be true in either case.

21 April, 2018 at 7:08 am

Terence Tao

The holomorphic function \(M_t\) is real on the real axis and so obeys the reflection equation \(\overline{M_t(s)} = M_t(\overline{s})\), so if one takes absolute values, the sign of the \(ix\) term becomes irrelevant. I’ll add a line of explanation in the proof of Prop 6.6 to emphasise this.
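Spelled out as a short derivation, the reflection argument runs as follows. This is a sketch that assumes the function in question is the \(M_t\) appearing in definition (16), and that it is holomorphic and real-valued on the relevant part of the real axis, so that Schwarz reflection applies.

```latex
\overline{M_t(s)} = M_t(\overline{s})
\quad\Longrightarrow\quad
\left| M_t\!\left(\tfrac{1+y-ix}{2}\right) \right|
  = \left| \overline{M_t\!\left(\tfrac{1+y+ix}{2}\right)} \right|
  = \left| M_t\!\left(\tfrac{1+y+ix}{2}\right) \right| .
```

In particular \(|\gamma(x+iy)|\) is the same whichever sign of \(ix\) is used in the denominator, which matches the numerical observation that the inequality holds in either case.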

21 April, 2018 at 10:23 am

William Bauer

Thank you. Wish I’d seen that.

17 April, 2018 at 5:10 pm

anonymous

This is a question which is possibly a bit orthogonal to the main developments of this polymath, but may be able to be answered relatively easily using the insights that the contributors have gained. Is it clear how far one can extend to other similar functions the result of Ki, Kim and Lee, that all but finitely many zeros of \(H_t\) lie on the real line for any \(t > 0\)? I’m thinking of a result along the lines of de Bruijn’s that applies generically to functions with zeros trapped in a certain strip, with the added condition that zeros become more dense away from the origin.

For instance is there a pathological example of an entire function  (with rapidly decaying and even Fourier transform) with all zeroes in some strip ,  still has infinitely many zeros off the real line? (To make the question more precise, one could suppose  even satisfies a Riemann-von Mangoldt formula with the same sort of error term as the Riemann xi-function.)

17 April, 2018 at 7:49 pm

Terence Tao

Well, here is a simple example: the functions , for some constant , has all zeroes in a strip that become all real at time , but has infinitely many zeroes off the line before then (the function is -periodic). But this function doesn’t obey a Riemann von Mangoldt formula.
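A periodic example of this kind can be checked numerically. The formula in the comment above is not reproduced here, so the family below is an assumption chosen only to match the stated properties: \(H_t(z) = e^{t}\cos z - c\) with a constant \(c > 1\), which satisfies the backwards heat equation \(\partial_t H_t = -\partial_{zz} H_t\), is \(2\pi\)-periodic, and has all of its zeroes real from time \(t = \log c\) onwards. The function names are hypothetical.

```python
import cmath
import math


def H(t, z, c):
    """Assumed toy family H_t(z) = exp(t) * cos(z) - c (an illustration only)."""
    return math.exp(t) * cmath.cos(z) - c


def zeros_near_origin(t, c):
    """Return the pair of zeros of H_t closest to the origin.

    H_t(z) = 0 is equivalent to cos(z) = c * exp(-t); every other zero is a
    translate of this pair by a multiple of 2*pi.
    """
    a = c * math.exp(-t)
    z = cmath.acos(a)  # purely imaginary when a > 1, real when a <= 1
    return z, -z


c = 3.0
t_real = math.log(c)  # for this toy family the zeros are real from here on
for t in (0.0, 0.5 * t_real, 1.5 * t_real):
    z, _ = zeros_near_origin(t, c)
    print(f"t = {t:.3f}: |Im z| = {abs(z.imag):.4f}, |H_t(z)| = {abs(H(t, z, c)):.1e}")
```

Before \(t = \log c\) every period contains a conjugate pair at height \(\operatorname{arccosh}(c e^{-t})\), so there are infinitely many non-real zeroes, all inside a fixed strip.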

For a typical function obeying Riemann von Mangoldt, a complex zero above the real axis of real part  would be expected to be attracted to the real axis at a velocity comparable to the  nearby zeroes, at least half of which will be at or below the real axis. So within time  one would expect such a zero to hit the real axis, suggesting that there are only finitely many non-real zeroes after any finite amount of time. But there could be some exceptional initial configurations of zeroes which somehow “defy gravity” – maybe “pulling each other up by their bootstraps” and stay above the real line for an unusually long period of time. I don’t know how to construct these, but on the other hand I can’t rule such a scenario out either – they seem somewhat inconsistent with physical intuition such as conservation of centre of mass, but I have not been able to make such intuition rigorous.
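The simplest instance of this attraction can be worked out by hand. The following toy computation is an illustration and is not taken from the writeup; it assumes the backwards heat equation normalisation \(\partial_t H_t = -\partial_{zz} H_t\) and uses a quadratic polynomial with a single conjugate pair of zeroes at \(\pm i y_0\), rather than a function obeying Riemann von Mangoldt.

```latex
H_t(z) = z^2 + y_0^2 - 2t,
\qquad
\partial_t H_t = -2 = -\partial_{zz} H_t,
\\[4pt]
H_t(z) = 0 \iff
\begin{cases}
z = \pm i\sqrt{y_0^2 - 2t}, & t < y_0^2/2, \\
z = \pm \sqrt{2t - y_0^2}, & t \ge y_0^2/2,
\end{cases}
\\[4pt]
\frac{d}{dt}\sqrt{y_0^2 - 2t} = -\frac{1}{\sqrt{y_0^2 - 2t}} .
```

In this toy case the pair falls towards the real axis at a speed inversely proportional to its height, collides at time \(t = y_0^2/2\), and then separates along the real line.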

17 April, 2018 at 11:53 pm

Anonymous

It seems important to classify (e.g. by Cartan’s equivalence method) all the invariants of the zeros ODE dynamics – to get more information and bounds for this dynamics.

18 April, 2018 at 7:49 am

Terence Tao

I’m not sure that there will be many invariants – this is a heat flow (with a very noticeable “arrow of time”) rather than a Hamiltonian flow. Since such flows tend to converge to an equilibrium state at infinity, it’s very hard to have any invariants (as they would have to match the value of the invariant at the equilibrium state). For such flows it is monotone (or approximately monotone) quantities, rather than invariant quantities, that tend to be more useful (such quantities played a key role in my paper with Brad, for instance). But there’s not really a systematic way (a la Cartan) to find monotone quantities (we found the ones we had by reasoning in analogy with quantities that were useful for a similar-looking flow, Dyson Brownian motion).

22 April, 2018 at 6:20 am

Raphael

Sounds a bit like there should be something like an entropy.

22 April, 2018 at 7:12 am

Raphael

I mean, on the one hand the local entropy gain follows directly from the PDE; on the other hand one could look at particular “subsystems”. I don’t know if the zeros can be meaningfully interpreted as subsystems, but in any case “phase changes” like what you suggest in your interpretation are nicely traceable with the entropy. In particular there is the interpretation of entropy as the number of degrees of freedom. In that sense, whether a zero can be complex or exclusively real goes directly into the entropy (like by a factor of two per particle). Just a loose thought.

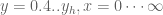

17 April, 2018 at 8:19 pm

Nazgand

It occurs to me that another way to attack the problem is to use a fixed  and calculate a mesh barrier over the variables  as  varies from the verified upper bound of the de Bruijn-Newman constant,  to a target upper bound,  and as  varies from 0 to an upper bound of the maximum possible  for the current . This mesh will take care of any zeroes coming from horizontal infinity. Then one can follow some zero,  greater than  (at both  through the reverse heat dynamics, and verify that the same number of zeroes exist on the real line up to . I am assuming that zeroes on the real line are able to join together then exit the real line as  decreases, but if this is shown to be impossible for some  close enough to , then only the mesh calculation is required, not the counting of real zeroes.

The current method is working, yet someone may find this idea a useful starting point, as this method nicely recycles previous upper bounds of the constant. This sort of elementary idea is the only thing I see which I can submit that may help, given my shallow understanding of the project.

18 April, 2018 at 7:51 am

Terence Tao

It’s a nice idea to try to move backwards in time from the previous best known bound of  rather than move forward in time from  (which is our current approach that leverages numerical RH). The problem though is that when one moves backwards in time, the real zeroes become very “volatile” – it’s very hard to prevent them from colliding and then moving off the real axis (this basically would require one to somehow exclude “Lehmer pairs” at these values of t. One could potentially do so if one knew that the zeroes were all real at some earlier value of t, but this leads to circular-looking arguments, where one needs to lower the bound on \(\Lambda\) in order to lower the bound on \(\Lambda\)).

18 April, 2018 at 3:25 pm

Nazgand

I do not understand what part of my argument is circular, as it is merely another way of verifying that the rectangular region of possible non-real zeroes of \(H_{t_0}\) has no non-real zeroes. This is the same rectangular region of possible non-real zeroes the current method uses for fixed \(t=t_0\).

If the zeroes are conserved except for zeroes appearing from horizontal infinity and no zeroes pass through the barrier at \(x=x_0\) then counting the number of real zeroes up to \(z(t)\) for both \(H_{t_0}(z)\) and \(H_{t_0}(z)\) and verifying equality implies the rectangle is free of non-real zeroes. If zeroes collide and exit the real line, then the counts of real zeroes will not match.

Is your concern that multiple real zeroes near each other may be miscounted, and that avoiding such miscounts would be costly computation-wise? Much research has been done on counting real zeroes of \(H_0(z)\), and applying similar methods to \(H_t(z)\) is likely possible.

The idea that the counting of real zeroes might be skip-able is fluffy speculation based on the fact that one would only need to avoid colliding real zeroes for zeroes less than \(z(t)\).

Without such a shortcut, counting real zeroes can be expensive for large \(x_0\), yet the current method likely uses more computation to lower the bound to \(t_0\) because a barrier mesh needs to be calculated which has width \(x_0\), leaving only the cost of the mesh at \(x=x_0\) to consider, which may be cheaper than the current mesh calculation required.

18 April, 2018 at 4:10 pm

Terence Tao

If one is willing to try to count real zeroes of  up to some large threshold , then this approach could work in principle, but the time complexity of this is likely to scale like  at best (to scan each unit interval for zeroes takes about  time assuming that the Odlyzko-Schonhage algorithm can be adapted for ). We already had a somewhat similar scan in previous arguments using the argument principle for a rectangular region above the real axis, and even with the additional convergence afforded by having  equal to 0.4 rather than 0, it took a while to reach  or so. In contrast, the barrier method only has to scan a single unit interval \([X,X+1]\) (albeit for multiple values of , which is an issue), and we’ve been able to make it work for  up to  so far.

The circularity I was referring to would arise if one wanted to somehow avoid the computationally intensive process of counting real zeroes up to . In order to do this one would have to somehow exclude the possibility that say  has a Lehmer pair up to this height, as such a pair would make the count of real zeroes very sensitive to the value of . Given that real zeroes repel each other, the non-existence of Lehmer pairs at  is morally equivalent to  having real zeroes (up to scale , at least) for some . Actually, now that I write this, I see that this is weaker than requiring one to already possess the bound , since one could still have zeroes off the real axis near horizontal infinity. So there need not be a circularity here. Still, I don't see a way to force the absence of Lehmer pairs without either a scan of length  (and time complexity something like ) or a barrier method (outsourcing the length  scan to the existing literature on numerical verification of RH).

17 April, 2018 at 10:19 pm

pisoir

Dear prof. Tao,

I have been watching carefully your (fast) progress on this problem and from my point of view I am confused about several of your comments.

To me it seems that you are aiming for , but from the progress made so far, I have the feeling that this is a rather “artificially” picked bound and if you wanted you could push it even further. Also you mention several times the numerical complexity of the problem, but according to the fast results by KM, Rudolph and others, this also does not seem to be a problem at the moment.

So my question is, are you aiming at some specific value of  and then close the project, because it could then go on forever (pushing the  by meaningless 0.01 each month) or you will try to push it as far as you can until you really hit the fundamental border of this method? Is there such border and what could that value be (can it go down to e.g. )?

Thanks.

18 April, 2018 at 5:20 am

goingtoinfinity

“by meaningless 0.01 each month” – that would be rather surprising in July 2020.

18 April, 2018 at 7:57 am

Terence Tao

I view the bound on \(\Lambda\) as a nice proxy for progress on understanding the behaviour of the \(H_t\), but one should not identify this proxy too strongly with progress itself (cf. Goodhart’s law). Already, the existing efforts to improve the upper bound on \(\Lambda\) have revealed a number of useful new results and insights about \(H_t\), such as an efficient Riemann-Siegel type approximation to \(H_t\) for large values of x, an efficient integral representation to \(H_t\) for small and medium values of x, a “barrier” technique to create large zero free regions for \(H_t\) (assuming a corresponding zero free region for \(H_0\)), and an essentially complete understanding of the zeroes of \(H_t\) at very large values of x ( ). Hopefully as we keep pushing, more such results and observations will follow. If such results start drying up, and the bound on \(\Lambda\) slowly improves from month to month purely from application of ever increasing amounts of computer time, then that would indicate to me that the project has come close to its natural endpoint and it would be a good time to declare victory and write up the results.

18 April, 2018 at 3:54 am

Anonymous

For a given measure of computational complexity (or “effort”), an interesting optimization problem is to find the optimal pair  which minimizes the bound  subject to a given computational complexity constraint.

18 April, 2018 at 8:05 am

Terence Tao

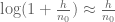

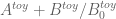

It might be worth resurrecting the toy approximation

for this purpose, where  and  (see this page of wiki). For the purposes of locating the most efficient choice of  parameters that are still computationally feasible, we can just pretend that  is exactly equal to this toy approximation (thus totally ignoring  errors, and also replacing the somewhat messy  factors by a simplified version). Once we locate the most promising choice, we can then return to the more accurate approximation  and combine it with upper bounds on .

18 April, 2018 at 8:16 am

Terence Tao

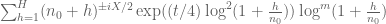

With regards to this toy problem at least, it seems like some version of the Odlyzko–Schönhage algorithm may be computationally efficient in the barrier verification step where we will have to evaluate  for many mesh points of  (but with \(x\) in a fixed unit interval \([X, X+1]\)). The first basic idea of this algorithm is to transform this problem by performing an FFT in the x variable, so that one is now evaluating things like

for , where  is a mesh size parameter. The point is that this FFT interacts nicely with the  factors in the toy approximation to give a sum which is substantially less oscillatory, and which can be approximated fairly accurately by dividing up the sum into blocks and using a Taylor expansion on each block, which can allow one to reuse a lot of calculations as  varies (basically reducing the time complexity of evaluating (1) for all values of k from the naive cost of  to something much smaller like ; also one should be able to reuse many of these calculations when one varies t and y as well, leading to further time complexity savings). One still has to do a FFT at the end, but this is apparently a very fast step and is definitely not the bottleneck. I’ll have to read up on the algorithm to figure out how to adapt it to this toy problem at least. (In our language, the algorithm is generally formulated to compute the sum  for many values of \(x \in [X,X+1]\) simultaneously.)
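The block-and-Taylor idea can be illustrated on a generic Dirichlet-type sum. The sketch below is not the Odlyzko–Schönhage algorithm and omits the FFT step entirely; it only shows, under illustrative assumptions, how Taylor coefficients computed once per block at a base point \(X\) can be reused for every mesh point \(x = X + h\) in \([X, X+1]\). The model sum \(\sum_{n \le N} n^{-\sigma - ix}\), the function names, the block ratio 1.5 and the truncation order are all hypothetical choices and are not taken from the writeup.

```python
import cmath
import math


def naive_sum(N, sigma, x):
    """Directly evaluate sum_{n=1}^{N} n^(-sigma - i x); costs O(N) per point."""
    return sum(n ** (-sigma) * cmath.exp(-1j * x * math.log(n))
               for n in range(1, N + 1))


def precompute_blocks(N, sigma, X, order=16, ratio=1.5):
    """Split 1..N into geometric blocks and store Taylor data for each block.

    For a block with centre n0 we store log(n0) and the coefficients
    A_j = sum_{n in block} n^(-sigma - i X) * (log(n / n0))^j, 0 <= j < order.
    """
    blocks = []
    start = 1
    while start <= N:
        end = min(N, max(start, int(start * ratio)))
        log_n0 = 0.5 * (math.log(start) + math.log(end))  # geometric centre
        coeffs = [0j] * order
        for n in range(start, end + 1):
            log_n = math.log(n)
            base = n ** (-sigma) * cmath.exp(-1j * X * log_n)
            d = log_n - log_n0
            power = 1.0
            for j in range(order):
                coeffs[j] += base * power
                power *= d
        blocks.append((log_n0, coeffs))
        start = end + 1
    return blocks


def eval_from_blocks(blocks, h):
    """Evaluate the sum at x = X + h using only the stored block data."""
    z = -1j * h
    total = 0j
    for log_n0, coeffs in blocks:
        acc = 0j
        term = 1 + 0j  # holds z^j / j! at step j
        for j, a_j in enumerate(coeffs):
            acc += term * a_j
            term *= z / (j + 1)
        total += cmath.exp(z * log_n0) * acc
    return total


N, sigma, X = 2000, 0.7, 1000.0
blocks = precompute_blocks(N, sigma, X)
for h in (0.0, 0.25, 0.5, 1.0):
    fast = eval_from_blocks(blocks, h)
    slow = naive_sum(N, sigma, X + h)
    print(f"h = {h:.2f}: |fast - slow| = {abs(fast - slow):.2e}")
```

After the one-off precomputation, each new mesh point costs a number of operations proportional to the number of blocks times the truncation order, rather than to \(N\). Geometric blocks keep \(|h \log(n/n_0)|\) small on every block, which is what makes a short Taylor expansion accurate.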

18 April, 2018 at 8:01 am

Anonymous

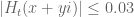

Is it possible to derive (perhaps by using exponential sums estimates) for each given positive  an explicit numerical threshold  with the property that  whenever  and ?

If so, then (clearly)  should decrease with increasing  and therefore is preferable over the currently used  (i.e. with ) in the barrier method.

Since  may also be used as a kind of "computational complexity measure", is it possible that  (i.e. with ) grows much slower than  as ? (while still giving the bound  – comparable to the bound  with .)

18 April, 2018 at 8:34 am

Terence Tao

In general it gets harder and harder to keep  away from zero as y gets smaller – after all, we expect to have a lot of zeroes  with . The two methods that we have been able to use to actually improve the bound on \(\Lambda\) only require keeping  away from zero for , where  is something moderately large like 0.4. While we do have some capability to probe near  (in particular, in the asymptotics section there are some arguments using Jensen’s formula and the argument principle that make all the zeroes real beyond  for some large absolute constant ), I think numerically this sort of control will be much weaker for computationally feasible values of  than the control we can get for large values of . (One can already see this when considering the main term  of the toy expansion, in which the role of increasing y in improving the convergence of the sum is quite visible.)

18 April, 2018 at 10:31 am

Anonymous

Correction: in the above required property of , it should be  (instead of ).

18 April, 2018 at 1:29 pm

Mark

I haven’t been following this project at all, so apologies for the basic question which is perhaps covered in the discussion elsewhere:

But how much of a dictionary relating \Lambda and zero-free regions do we have? The kinds of things that I mean by a “dictionary” might be:

1) If we could prove there is a zero free strip, does that imply anything about \Lambda?

2) If there were only a single pair of off critical line zeros, can we convert this into a value of \Lambda depending on their coordinates?

3) If we knew \Lambda < 10^{-1000}, would that give us any usable zero-free region?

More philosophically, I'm curious why in the "de Bruijn-Newman score system" it feels like real progress on the Riemann hypothesis is being made while (and I'd love to be wrong here) it doesn't seem that the ideas being applied (numerical verification, etc) are circumventing any of the obstructions to progress in the other "score systems" (zero-free regions, etc.)

18 April, 2018 at 2:02 pm

Anonymous

wait, are you saying when we reach  then we’re *not* really half way to proving Riemann hypothesis???

18 April, 2018 at 3:57 pm

Terence Tao

The basic theorem here is due to de Bruijn (Theorem 13 of https://pure.tue.nl/ws/files/1769368/597490.pdf ), which in our language says that if \(H_{t_0}\) has all zeroes in the strip \(\{ |\mathrm{Im}\, z| \le y_0 \}\), then one has the bound \(\Lambda \le t_0 + \frac{1}{2} y_0^2\). For instance, the proof of the prime number theorem gives this for \(t_0 = 0, y_0 = 1\), yielding de Bruijn’s bound \(\Lambda \le 1/2\). So zero free regions for zeta or for \(H_t\) can give bounds on \(\Lambda\). However we don’t seem to have any converse implication, beyond the tautological assertion that \(H_t\) has all real zeroes for all \(t \ge \Lambda\). Having a really good upper bound on \(\Lambda\) such as  may rule out certain scenarios very far from RH (such as having a single pair of zeroes far from the critical line, and also relatively far from all other zeroes) but it would be tricky to convert it into a clean statement about, say, a zero-free region. So perhaps one reason why we can make progress on bounding \(\Lambda\), but not on other approximations to RH, is that the implications only go in one direction.
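As a sanity check on the numbers quoted in this thread, here is a minimal sketch that assumes the standard form of de Bruijn's theorem: if all zeroes of \(H_{t_0}\) lie in the strip \(|\mathrm{Im}\, z| \le y_0\), then \(\Lambda \le t_0 + \tfrac{1}{2} y_0^2\). The function name is hypothetical, and the parameter pairs \((0.4, 0.4)\) and \((0.2, 0.4)\) are assumed choices that happen to reproduce the 0.48 and 0.28 figures mentioned in the post; they are not read off from the writeup.

```python
def de_bruijn_bound(t0: float, y0: float) -> float:
    """Upper bound on Lambda from a zero-free region |Im z| > y0 for H_{t0}.

    Assumes the form Lambda <= t0 + y0**2 / 2 of de Bruijn's theorem.
    """
    if y0 < 0:
        raise ValueError("y0 is the half-width of a strip and must be non-negative")
    return t0 + 0.5 * y0 * y0


# (t0, y0) pairs: the classical critical-strip case, then two assumed
# parameter choices consistent with the 0.48 and 0.28 bounds.
for t0, y0 in [(0.0, 1.0), (0.4, 0.4), (0.2, 0.4)]:
    print(f"t0 = {t0}, y0 = {y0}: Lambda <= {de_bruijn_bound(t0, y0):.2f}")
```

The formula also makes the trade-off between the two parameters explicit: halving \(y_0\) only reduces its contribution by a factor of four, so at these values most of the bound comes from \(t_0\).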

If somehow we could show that RH is true with a single exceptional complex pair of zeroes, it should be possible to track that zero numerically with high precision and get a precise value on \(\Lambda\), assuming that pair was within reach of numerical verification. Otherwise one could use de Bruijn’s theorem to obtain a bound, though it would not be optimal.

18 April, 2018 at 4:42 pm

Terence Tao

I have a sort of statistical mechanics intuition for the zeroes of $H_t$ which may help clarify the situation. A bit like the three classical states of matter, the zeroes of $H_t$ seem to take one of three forms: “gaseous”, in which the zeroes are complex, “liquid”, in which the zeroes are real but not evenly spaced, and “solid” in which the zeroes are real and evenly spaced. (This should somehow correspond to the  ,  , and  limiting cases of beta ensembles, but I do not know how to make this intuition at all rigorous.) At time zero $t=0$, the zeroes are some mixture of liquid and gaseous, and the RH (as well as supporting hypotheses such as GUE) asserts that it is purely liquid. At positive time, the situation is similar to $t=0$ in a region of the form  (though perhaps slightly "cooler", with some of the gas becoming liquid), but for  the zeroes have "frozen" into a solid state, so in particular there is only a finite amount of gas or liquid at positive time. As time advances, more of the gas (if any) condenses into liquid, and the liquid freezes into solid; by time $\Lambda$, all the gas is gone, and pretty soon after that it all looks solid. In the reverse direction, we know that at any negative time, there is at least some gas.

With this perspective, the reason why it is easier to make progress on $\Lambda$ than on other metrics towards RH is that we are dealing with an object $H_t$ which is already almost completely "solidified" into something well understood: the mysterious liquid and gaseous phases that make the Riemann zeta function so hard to understand only have a finite (albeit large) spatial extent, and so can be treated numerically, at least in principle.

19 April, 2018 at 9:48 am

Rudolph01

I really like the analogy and it makes me wonder what then is causing the phase-transitions between solid, liquid and gaseous. Here’s a free-flow of some thoughts, going backwards in time and assuming RH.

From solid to liquid: When we were starting to ‘mollify’ the toy-model, I recall the surprise to still see a vestige of a partial Euler product even at  . So, with  , we could reason that this partial Euler product needs to incrementally grow back towards its well-known infinite version at $t=0$. When we follow the trajectories of the real zeros going back in (positive) time, they will feel an increasing competitive pressure w.r.t. their neighbouring zeros to occupy the available space to encode all the required information about the primes. This increasingly ‘heated battle’ for available locations when  , does ‘bend’ their trajectories and determines the angle and the velocity by which they hit the line $t=0$. Statistics will force some angles to become very small and these will then induce a Lehmer-pair. So, it could be the increasing need to encode an infinite amount of information about the primes, that turns up the ‘heat/pressure’ and moves the statistical ensembles of real lines from solid to liquid.

Liquid to gaseous: Is there a need for an extra ‘heat/pressure’ source to explain why a perfect liquid at $t=0$ slowly turns into a gaseous state? I don’t think so, since the ‘heat is already on’ at the line $t=0$ and all the collision courses for the real zeros have been determined. The real zeros ‘plunge’ as it were into the domain below $t=0$ (like particles flying into a bubble chamber) and their angles and velocities determine at which $t$ a collision will occur. So, no extra heat source is needed and only the ‘backward heat flow’ logic is required to explain the trajectories below $t=0$.

Of course the key question is why the collisions of real zeros can only occur below $t=0$. What is it that suddenly allows liquidized real zeros to collide and send off some gaseous complex zeros in  -steps? What has really changed below $t=0$? I looked at potential ‘switches’ in our formulae like the  in the 3rd-approach integral, but that doesn’t lead us anywhere. The only thing that I can think of that is really different for  below $t=0$, is that there is no longer extra ‘heat/pressure’ being added (since the infinite Euler product has been completed at $t=0$). It seems therefore that somehow this incremental ‘heat/pressure’ build-up when  , is at the same time also the prohibiting force for real zeros to collide. And this feels like circular reasoning and probably brings us back again to the duality between the primes and the non-trivial zeros…

19 April, 2018 at 10:33 am

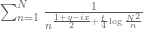

Rudolph01

Couldn’t resist producing an illustrative visual of the analogy. The trajectory of the real zeros is based on real data; I manually added fictitious data to reflect the trajectory of the complex zeros.

18 April, 2018 at 6:04 pm

Terence Tao

Consider the model problem of evaluating

for multiple choices of $x$, with $x$ in an interval $[X,X+1]$. If one does this naively, $M$ different evaluations of this sum would require about $O(NM)$ operations. There is a relatively cheap way to cut down this computation (at the cost of a bit of accuracy) without any FFT, simply by performing a Taylor expansion on intervals. Namely, pick some $H$ between $1$ and $N$ and split the sum into about $N/H$ subsums of the form

(actually it is slightly more advantageous to use summation ranges centred around $n_0$, rather than to the right of $n_0$, but let us ignore this for the time being). Writing  , and also  , we can write this expression as

For  small compared to  , we can hope to Taylor approximate  , leading eventually to the first-order approximation

The point of this is that if one wants to evaluate this subsum at $M$ different choices of $x$, one only needs $O(H+M)$ evaluations rather than $O(HM)$ because one only needs to compute the sums  and  once rather than $M$ times, as they can be reused across the evaluations. Putting together the $N/H$ subsums, this cuts down the time complexity from $O(NM)$ to $O(N + NM/H)$. One can also Taylor expand to higher order than first-order which allows one to take $H$ larger at the cost of worsening the implied constant in the $O()$ notation. There is some optimisation to be done but this should already lead to some speedup, possibly as much as an order of magnitude or so.
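The block-wise Taylor trick described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the model sum is taken to be $\sum_{n=1}^N n^{-(\sigma+ix)}$ with $x = X + \theta$, each block starts at $n_0$ with $n = n_0 + h$, and the expansion used is $n^{-i\theta} \approx n_0^{-i\theta} (1 - i\theta h/n_0)$. All names (`precompute`, `approx_sum`, `n_exact`) are hypothetical, and summing the first `n_exact` terms directly is an assumption made to keep $h/n_0$ small.

```python
import cmath
import math


def term(n, sigma, x):
    """n^{-(sigma + i x)} for the assumed model sum."""
    return cmath.exp(-(sigma + 1j * x) * math.log(n))


def direct_sum(N, sigma, x):
    """Naive O(N) evaluation at a single x."""
    return sum(term(n, sigma, x) for n in range(1, N + 1))


def precompute(N, sigma, X, H, n_exact):
    """One-off O(N) work: head terms kept individually, tail grouped into blocks."""
    head = [(math.log(n), term(n, sigma, X)) for n in range(1, n_exact + 1)]
    blocks = []
    for n0 in range(n_exact + 1, N + 1, H):
        s0 = 0j  # sum of a_h over the block
        s1 = 0j  # sum of h * a_h over the block
        for n in range(n0, min(n0 + H, N + 1)):
            a = term(n, sigma, X)
            s0 += a
            s1 += (n - n0) * a
        blocks.append((math.log(n0), n0, s0, s1))
    return head, blocks


def approx_sum(head, blocks, theta):
    """Evaluate at x = X + theta, reusing the stored block sums (first order)."""
    total = sum(a * cmath.exp(-1j * theta * log_n) for log_n, a in head)
    for log_n0, n0, s0, s1 in blocks:
        total += cmath.exp(-1j * theta * log_n0) * (s0 - 1j * theta * s1 / n0)
    return total


N, sigma, X, H, n_exact = 2000, 0.5, 1000.0, 5, 200
head, blocks = precompute(N, sigma, X, H, n_exact)
theta = 0.7
err = abs(approx_sum(head, blocks, theta) - direct_sum(N, sigma, X + theta))
print(f"blocks: {len(blocks)}, first-order error at theta={theta}: {err:.2e}")
```

Each new value of `theta` costs one pass over the head terms and the blocks instead of all `N` terms; taking `H` larger reduces that cost further but increases the truncation error unless more Taylor terms are kept.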

19 April, 2018 at 3:41 am

Anonymous

The “first sum” can be represented as

where $\zeta(s,a)$ is the Hurwitz zeta function:

https://dlmf.nist.gov/25.11

https://en.wikipedia.org/wiki/Hurwitz_zeta_function

The “second sum” is

which has a similar representation in terms of Hurwitz Zeta function.

Efficient algorithms for the Hurwitz zeta function, related functions, and oscillatory series are given in Vepstas’ paper (0702243.pdf).
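For concreteness, here is how such a representation works for a block sum, assuming the “first sum” has the form $\sum_{h=0}^{H-1} (n_0+h)^{-s}$ and the “second sum” carries an extra factor of $h$ (the symbols $s$, $n_0$, $H$ are assumptions about the formulas in question). Both identities follow from the series definition of the Hurwitz zeta function for $\mathrm{Re}\, s$ large, and extend to other $s$ by analytic continuation.

```latex
\zeta(s,a) = \sum_{k=0}^{\infty} \frac{1}{(k+a)^{s}} \qquad (\mathrm{Re}\, s > 1)

\sum_{h=0}^{H-1} \frac{1}{(n_0+h)^{s}} = \zeta(s,n_0) - \zeta(s,n_0+H)

\sum_{h=0}^{H-1} \frac{h}{(n_0+h)^{s}}
  = \bigl[ \zeta(s-1,n_0) - \zeta(s-1,n_0+H) \bigr]
  - n_0 \bigl[ \zeta(s,n_0) - \zeta(s,n_0+H) \bigr]
```

The second line uses $h = (n_0+h) - n_0$ to reduce the weighted sum to two unweighted ones.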

20 April, 2018 at 10:12 pm

KM

With H=10 and near X=6*10^10, I have been able to get a speedup of 3x to 4x (although more should be realizable) which increases gradually as M increases.

To keep H away from n0, the first few terms in the sum are computed exactly (currently I used the first 1000 terms for this part), and approximate estimates are used for the remainder sum. Divergence from the exact values appears near the 7th or 8th decimal place.

21 April, 2018 at 7:24 am

Terence Tao

That’s promising! Do you have a rough estimate as to what M (the number of  values that need to be evaluated) might be for the barrier computation, based on what happened back at  ? The main boost to speed should come in the large M regime. Also, if one uses symmetric sums  rather than asymmetric sums  then the error term should be a bit smaller (by a factor of 4 or so), which should allow one to make H a bit bigger for a given level of accuracy. One could also Taylor expand to second order rather than first to obtain significantly more accuracy, though the formulae get messier when one does so which may counteract the speedup. (Presumably one should start storing quantities such as  that appear multiple times in such formulae so that one does not compute them multiple times, though this is probably not the bottleneck in the computation.)
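The factor of 4 can be seen with a short calculation, assuming the error of a first-order expansion in a block is dominated by a term proportional to $(h/n_0)^2$, where $h$ is the offset from the expansion point $n_0$ and $H$ is the block length (this notation is an assumption):

```latex
\text{asymmetric range } 0 \le h \le H: \qquad
  \max_h \left( \frac{h}{n_0} \right)^{2} = \frac{H^{2}}{n_0^{2}}

\text{symmetric range } |h| \le H/2: \qquad
  \max_h \left( \frac{h}{n_0} \right)^{2} = \frac{H^{2}}{4\, n_0^{2}}
```

So for the same block length the worst-case quadratic error drops by a factor of 4, or equivalently $H$ can be roughly doubled at the same accuracy.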

21 April, 2018 at 10:57 am

KM

I checked the ddx bound at N=69098, y=0.3, t=0 and it comes out to around 1000. If we choose a strip where |(A+B)/B0| seems to stay high (e.g. X = 6*10^10 + 2099 + [0,1] looks good in this respect) and assume it will stay above 1, M too seems to be around 1000. At N=69098, y=0.3, t=0.2, the ddx bound drops significantly to around 5.

The earlier script was refined so that it is now 6x to 7x faster than direct summation. I will check how varying H, number of Taylor terms and optimizing further can result in further speedup.

22 April, 2018 at 1:18 am

KM

Writing the Taylor expansion of the exponential in a more general form, it seems possible to improve the accuracy to as much as desired. Also, retaining and not approximating the two log factors does not incur a noticeable cost (storing the repeatedly occurring quantities kind of offsets it) while improving accuracy a bit. For example, at H=10 and 4 Taylor terms, accuracy improves to 12 digits, and with 10 terms improves to 28 digits (taking only 1.5x more time).

Near X=6*10^10, with H=50 and 4 Taylor terms, I was able to get estimates for 1000 points in around 1.5 minutes with 9 digit accuracy, which was a 20x speedup vs direct estimation.

22 April, 2018 at 7:12 am

Terence Tao

Sounds good! Could you expand a little on which log factors you found it better to not approximate? [EDIT: I guess you mean doing a Taylor expansion in  rather than in  ? I can see that that would be slightly more efficient.]

For some very minor accuracy improvements, one could work in a range $x \in X + [-1/2,1/2]$ rather than $x \in X + [0,1]$; also, since we have some lower bound $y_0$ on $y$, we can write $y = y_0 + z$ (say) and pull out a factor of  rather than  in order to replace the  factors with the slightly smaller  . This should improve the accuracy of the Taylor expansions by a factor of two or so with each term in the expansion, especially in the most important case when t is close to 0 and y close to y_0.

22 April, 2018 at 8:11 am

KM

I will try these out for more improvements. Also, it seems replacing log(N) +- i*Pi/8 with arrays of alpha(s) or alpha(1-s) values to get a close match to the exact (A+B)/B0 estimates also works. I was able to run the script for an entire rectangle (4000 points) in around 6 minutes.

22 April, 2018 at 8:43 am

KM

For the n0 sums, I calculated and stored sums of the form

where m ranges from 0 to T-1 and T is the number of desired Taylor terms which can be passed to the function to approximate the larger exponential.

Replacing  with  in the sums did not result in a noticeable speed difference overall, so I kept it in its original form.
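To make the idea of storing one sum per Taylor index m concrete, here is a hedged Python sketch for a single block. It is not the script used in this thread: it assumes the model terms $n^{-(\sigma+ix)}$ with $x = X + \theta$, keeps the logarithm unapproximated via $u_n = \log(n/n_0)$, and stores $c_m = \sum_n n^{-(\sigma+iX)} u_n^m / m!$ for $m = 0, \dots, T-1$. The names `block_moments` and `eval_block` are hypothetical.

```python
import cmath
import math


def block_moments(n_lo, n_hi, sigma, X, T):
    """Stored sums c_m = sum_n n^{-(sigma+iX)} * log(n/n0)^m / m!, for m = 0..T-1."""
    n0 = 0.5 * (n_lo + n_hi)  # expansion point at the centre of the block
    c = [0j] * T
    for n in range(n_lo, n_hi + 1):
        u = math.log(n / n0)
        t = cmath.exp(-(sigma + 1j * X) * math.log(n))  # m = 0 contribution
        for m in range(T):
            c[m] += t
            t *= u / (m + 1)  # now equals a_n * u^(m+1) / (m+1)!
    return n0, c


def eval_block(n0, c, theta):
    """Approximate the block sum at x = X + theta from the stored moments."""
    z = -1j * theta
    acc = 0j
    for cm in reversed(c):  # Horner evaluation of sum_m c_m z^m
        acc = acc * z + cm
    return cmath.exp(z * math.log(n0)) * acc


def direct_block(n_lo, n_hi, sigma, x):
    """Direct evaluation of the same block, for comparison."""
    return sum(cmath.exp(-(sigma + 1j * x) * math.log(n)) for n in range(n_lo, n_hi + 1))


n_lo, n_hi, sigma, X, theta = 1000, 1100, 0.5, 1000.0, 0.5
exact = direct_block(n_lo, n_hi, sigma, X + theta)
errors = {}
for T in (2, 4, 6):
    n0, c = block_moments(n_lo, n_hi, sigma, X, T)
    errors[T] = abs(eval_block(n0, c, theta) - exact)
    print(T, errors[T])
```

The moments depend only on X, so they are computed once per block and reused for every `theta`; increasing T trades a little extra precomputation for a much smaller truncation error.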

22 April, 2018 at 1:09 pm

Terence Tao

Thanks for the clarification. I guess that while we will need to evaluate at thousands of values of $x$ (and perhaps hundreds of values of $y$ as well), we won’t need as many values of $t$ to evaluate (and the evaluation becomes much faster as $t$ increases), so there is not much advantage to remove the t-dependence in the inner sums, so it makes sense to keep factors such as  unexpanded. In that case one can simplify the expressions a bit by writing

for  ,  ,  as

and use Taylor expansion of the exponential to decouple the  variable from the  variables.

It seems like we may have a choice of approximations to use: the $A^{eff}+B^{eff}$ approximation is our most accurate one (especially if we also insert the $C^{eff}$ correction), but the toy approximation $A^{toy}+B^{toy}$ may have acceptable accuracy at the  level and will be easier to work with (for instance I’d be likely to make fewer typos with computing d/dt type expressions in the latter :). Do you have a sense of how complicated it would be to adapt these Taylor expansion techniques to the sums that show up in the $A^{eff}+B^{eff}$ expansion? If it is too messy or slow then it may be worth focusing efforts on establishing a barrier for $A^{toy}+B^{toy}$ instead and reworking some of the writeup to provide effective estimates for the error of the toy approximation rather than the effective one.

23 April, 2018 at 10:25 am

KM

I still have to try out the y0+z form of the estimate, but in the earlier one it seems instead of calculating

the sum in the earlier comment, and using an array of  instead of  (also with appropriate adjustments for the A sum and when y varies instead of x),

we calculate

and

we can switch from approximations of the toy model to approximations of the effective model, with some additional overhead (however the parigp library at least does well and keeps the overhead small).

Also, on the discrepancy between the toy and effective estimates, at X=6*10^10, y=0.3, t=0.2  with divergence around the 6th or 7th decimal, but  are different.

Some sample values:

theta,[(A+B)/B0]_toy, [(A+B)/B0]_eff, [(A+B)/B0]_toy_with_exact_gamma

0.0, 0.844 + 0.192i, 0.802 + 0.228i, 0.802 + 0.228i

0.5, 0.812 + 0.165i, 0.792 + 0.199i, 0.792 + 0.199i

1.0, 0.787 + 0.128i, 0.782 + 0.150i, 0.782 + 0.150i

the difference being only due to the different gamma factor.

23 April, 2018 at 6:31 pm

Terence Tao

OK, thanks for this. It sounds like the numerics will be able to handle the effective approximation without too much additional difficulty, so this looks to be a better path than trying to fix up the toy approximation to become more accurate (though this could probably be done by using a more careful Taylor expansion of gamma). It will be a bit messier to rigorously control the error in the Taylor expansion for the effective approximation than the toy approximation, but there should be a lot of room in the error bounds (it looks like we could in fact lose several orders of magnitude in the error bounds if we Taylor expand to a reasonably high order) so hopefully we could use relatively crude estimates to handle this.

24 April, 2018 at 10:25 am

KM

After making the three changes (y0+z, [X-1/2,X+1/2], and symmetric n0 sums), accuracy seems to improve by 2-3 digits.

25 April, 2018 at 6:35 am

Terence Tao

Excellent! Hopefully we can trade in surplus accuracy for speed later by increasing the H parameter.

Since we have a fixed value of  , and the barrier computation is largely insensitive to the choice of  (in particular, a barrier computation for large t can be re-used as is for any smaller value of t, or for any larger value of  ), it seems the next thing to do is to figure out what the smallest value of  is for which the barrier computation is feasible. Given that, the main numerical task remaining would be to find the best values of  above this  threshold for which the Euler product calculations work at  and above; combining that with analytic error estimates and some treatment of the case of very large $x$ will then presumably yield a bound for $\Lambda$ which is close to the limits of our methods.

25 April, 2018 at 9:10 am

Anonymous

If t0 is small enough and X is carefully chosen, would it be possible to have y0=0 for the barrier computation?

25 April, 2018 at 10:26 am

Rudolph01

Intriguing question, Anonymous!

Just a few thoughts on this, I might be incorrect. If $y_0=0$ would be in reach of numerical verification to achieve a ‘fully closed’ barrier, isn’t then the issue that at  we will find real zeros when  goes from  ? Of course, we could carefully pick a  and avoid a known real zero at  , however we also have to account for all other real zeros that are ‘marching up leftwards’ and could travel through the barrier at  .

However, would we actually still require a ‘double’ barrier when the barrier reaches all the way to  (i.e. no complex zeros can fly from right to left around the  -corner anymore)? If indeed not, then maybe the way out is to just count all real zeros on the line  and  and subtract them from the total winding number found when scanning the entire barrier ‘rectangle’?

25 April, 2018 at 2:05 pm

Terence Tao

Yes, it seems theoretically possible to achieve this, though it may require a stupendous amount of numerical accuracy to actually pull off. It will probably help to have $X$ vary a bit in time, moving to the left to stay away from zeroes. If we can find a continuously varying function $X(t)$ such that for all  , there are no zeroes in the line segment  (including at the real endpoint  ), and all zeroes to the left of  are known to be real at time  , then this already implies that all zeroes to the left of  are real at times  , since no complex zeroes can penetrate the barrier, and no real zeroes can become complex at later times. But this would require a very accurate approximation of $H_t$ near  , since no matter where one places  one is going to be quite close to one of the real zeroes. In particular the $A+B$ type approximations we have been using may be inadequate for this; one may need to use $A+B-C$ or an even higher order expansion. (My understanding is that similar higher order Riemann-Siegel expansions were what were already used in existing numerical verifications of RH. It may be that this is a task better suited for some experts in numerical RH verification, rather than our current Polymath group.)

25 April, 2018 at 5:53 pm

Anonymous

> some experts in numerical RH verification, rather than our current Polymath group

it’s about 20 degrees cooler in this shade

26 April, 2018 at 11:19 am

KM

At N=69098 and t=0.2, we are getting these lower bounds

y, Bound type, Bound value, verification required up to approx N

0.20, Euler 5 Lemma, 0.0699, 1.5 million

0.25, Euler 3 Triangle, 0.0270, 900k

The first one currently looks quite slow to compute and also requires a large verification range. The second one looks feasible.

27 April, 2018 at 2:24 pm

Terence Tao

OK, so I guess we can try to target $t_0 = 0.2, y_0 = 0.25$ then (which would give $\Lambda \leq 0.23125$). It all hinges on whether we can still compute enough of a mesh for the barrier though…

26 April, 2018 at 11:39 am

Anonymous

Since the bound is $\Lambda \leq t_0 + \frac{1}{2} y_0^2$, it seems that it does not make sense for a given $t_0$ to expend much effort to reduce $y_0$ much below  .

28 April, 2018 at 2:25 am

Anonymous

Another possible strategy is to reduce slightly $t_0$ from 0.2 to 0.15 (say), and compensate for the added computational complexity by increasing(!) slightly $y_0$ from 0.25 to 0.3 (say), with the resulting bound $\Lambda \leq 0.195$ (better even than the limiting choice $y_0 = 0$ for $t_0 = 0.2$).

The idea behind this optimization of $t_0$ and $y_0$ is that if  is sufficiently smaller than  , it seems better to reduce slightly $t_0$ and increase(!) slightly $y_0$ to keep reasonable computational complexity rather than the strategy of reducing both $t_0$ and $y_0$.
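The arithmetic behind this comparison can be spelled out, assuming the bound has the form $\Lambda \leq t_0 + \frac{1}{2} y_0^2$:

```latex
(t_0, y_0) = (0.2,\ 0.25): \qquad 0.2 + \tfrac{1}{2}(0.25)^2 = 0.2 + 0.03125 = 0.23125

(t_0, y_0) = (0.15,\ 0.3): \qquad 0.15 + \tfrac{1}{2}(0.3)^2 = 0.15 + 0.045 = 0.195

(t_0, y_0) = (0.2,\ 0): \qquad 0.2 + 0 = 0.2
```

Because $y_0$ enters quadratically while $t_0$ enters linearly, lowering $t_0$ by 0.05 more than pays for raising $y_0$ from 0.25 to 0.3.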

28 April, 2018 at 6:43 am

KM

I was kind of intrigued by the n0 sums so have been working to optimize them further. Firstly, just like X, after making y0+z symmetric so that the anchor point is (1+y0)/2, it seems the accuracy is more consistent along the rectangle.

Eliminating any head or tail exact partial sums, making H=N, and increasing the number of Taylor terms to something like 30 or 50 (with 50 terms, we seem to get above 25 digit accuracy), the same rectangle (4000 mesh points) can be computed in under 10 seconds (from 6 min earlier).

It seems this way the entire barrier strip can be covered within a day. Working now on combining this with the adaptive t mesh.

29 April, 2018 at 1:29 pm

Terence Tao

Wow, that is surprisingly good performance! It actually surprises me that H can be taken as large as N, I would have expected the Taylor series expansion to start becoming very poorly convergent at this scale, but I guess the point is that by taking a huge number of Taylor terms one can compensate for this. An analogy would be computing $e^x$ by Taylor expansion (where for our purposes $x$ could be something on the order of  after the various symmetrising tricks). Normally one would only want to perform Taylor expansion for x in a range like [-1,1], but if one is willing to take 50 terms then I guess things are good up to something like [-10,10] (the Taylor series $\sum_n \frac{x^n}{n!}$ for $e^x$ peaks at around $n \approx |x|$) and so perhaps one can indeed treat expressions that are of magnitude  or so for our current choice of  .
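The analogy is easy to check numerically. The following Python sketch (the function names are illustrative) sums the first 50 terms of the exponential series and reports the relative error, together with the index of the largest term.

```python
import cmath
import math


def exp_taylor(z, terms):
    """Partial sum of z^n / n! over n = 0 .. terms-1."""
    total = 0j
    t = 1 + 0j
    for n in range(terms):
        total += t
        t *= z / (n + 1)
    return total


def largest_term_index(x, terms):
    """Index n at which |x|^n / n! is largest among the first `terms` terms."""
    best_n, best_val, val = 0, 1.0, 1.0
    for n in range(1, terms):
        val *= abs(x) / n
        if val > best_val:
            best_n, best_val = n, val
    return best_n


for x in (1.0, 10.0, 20.0):
    rel_err = abs(exp_taylor(x, 50) - math.exp(x)) / math.exp(x)
    print(x, rel_err, largest_term_index(x, 50))

# Oscillatory case, closer to the sums in this thread: |e^{ix}| = 1, but the terms still grow.
osc_err = abs(exp_taylor(10j, 50) - cmath.exp(10j))
print(osc_err)
```

With 50 terms the series is accurate to close to machine precision at $|x| = 10$ and visibly degrades by $|x| = 20$; in the oscillatory case the intermediate terms are large compared with the result, so some digits are also lost to cancellation.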

28 April, 2018 at 8:49 pm

KM

The barrier strip X=6*10^10+2099 + [0,1] (N=69098), y=[0.2,1], t=[0,0.2] was covered (moving forward in t) and the output is kept here and here. The first file gives summary stats for each t in the adaptive t mesh, and the second file gives (A+B)/B0 values for all (x,y,t) mesh points considered. The winding number came out to be zero for each rectangle.
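For readers unfamiliar with the check, a winding number around a closed mesh can be computed from the sampled complex values alone. The sketch below is a generic illustration, not the script used here; it assumes the samples are ordered along the boundary, never vanish, and are dense enough that the argument changes by less than $\pi$ between neighbours.

```python
import cmath
import math


def winding_number(values):
    """Winding number about 0 of the closed polygon through `values` (in order)."""
    total = 0.0
    n = len(values)
    for k in range(n):
        a = values[k]
        b = values[(k + 1) % n]  # wrap around to close the contour
        total += cmath.phase(b / a)  # argument increment, in (-pi, pi]
    return round(total / (2 * math.pi))


# A loop around the origin winds once; a loop staying near 2 does not wind at all.
unit_circle = [cmath.exp(2j * math.pi * k / 64) for k in range(64)]
shifted = [2 + z for z in unit_circle]
print(winding_number(unit_circle), winding_number(shifted))
```

A rigorous version additionally needs derivative bounds to guarantee that the function cannot wind around the origin between mesh points, which is the role played by the ddx and ddt bounds.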

The multieval method with 50 Taylor terms was used to estimate (A+B)/B0 values along each rectangle. The overall computation time for the strip was around 2.5 hours. Since ddt bounds and ddx bounds take time to compute, they were updated only after every 100 rectangles. For each rectangle, the number of mesh points for each side was kept as ceil(ddx bound) (the strip was chosen where minabb = min rectangle mesh |(A+B)/B0| value was expected to be above 1, indicating an allowable step size above 1/(ddx bound)). This overcompensates a bit for the constant x sides since they are a bit shorter. The t jump used was minabb/(2*ddt bound).
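The stepping rule described above can be sketched as follows. This is a simplified illustration under stated assumptions: `minabb_at` and `ddt_bound_at` are hypothetical callables standing in for the actual computation of min |(A+B)/B0| and of the ddt bound at a given t, and the refresh of the bounds only every 100 rectangles is not modelled.

```python
import math


def side_mesh_points(ddx_bound):
    """Number of mesh points per rectangle side, taken as ceil(ddx bound)."""
    return math.ceil(ddx_bound)


def t_step(minabb, ddt_bound):
    """Allowed jump in t: minabb / (2 * ddt bound)."""
    return minabb / (2.0 * ddt_bound)


def adaptive_t_mesh(t_start, t_end, minabb_at, ddt_bound_at):
    """List of t values from t_start to t_end, using the adaptive step at each t."""
    ts = [t_start]
    t = t_start
    while t < t_end:
        step = t_step(minabb_at(t), ddt_bound_at(t))
        if step <= 0:
            raise ValueError("non-positive step: the mesh minimum reached zero")
        t = min(t_end, t + step)
        ts.append(t)
    return ts


# Toy inputs: constant minimum 1.0 and constant ddt bound 50 give steps of 0.01.
ts = adaptive_t_mesh(0.0, 0.2, lambda t: 1.0, lambda t: 50.0)
print(len(ts), ts[-1], side_mesh_points(4.2))
```

In practice both quantities vary with t, so the step size adapts: it shrinks where the ddt bound is large or the mesh minimum is small, and grows elsewhere.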

29 April, 2018 at 1:51 pm

Terence Tao

That’s great progress! What is the minimum distance of  amongst the mesh points? At such large values of  , the error terms  should be essentially negligible in this setting, though now that  and  are a bit lower than what we have dealt with in the past, one may have to be a bit more careful, particularly when  . However, there is a tradeoff between t and y that we could potentially exploit: I think it is possible to replace the condition  in the barrier with the slightly weaker  , thus one can make the barrier higher at small values of t and only reach  at the final time  , basically because even if a zero sneaks underneath the elevated barrier at the earlier time it will still be attracted to its complex conjugate and eventually fall down to height  or below at time  . I haven’t fully checked this claim and having such a moving barrier would also complicate the derivative analysis (one has to compute a total derivative rather than a partial derivative). But it looks like the numerics on the barrier side are now good enough that it is no longer the bottleneck (if I understand your previous comments correctly, the Euler mollifier component of the argument may now be the dominant remaining computation), so maybe this refinement is not needed at this stage.

28 April, 2018 at 8:57 pm

KM

Also forgot to mention that y=[0.2,1] was used since the Euler 5 Lemma bound is positive at N=69098,y=0.2,t=0.2 and maybe there will be a faster way to calculate this up to the required N. Although right now I am finding it too slow, so will start with y=0.25, t=0.2 and the Euler 3 and 2 triangle bounds.

29 April, 2018 at 8:46 am

Rudolph01

Using KM’s latest script, a rerun for the same barrier strip ![X=6 \cdot 10^{10}+2099 + [0,1], y=[0.2,1], t=[0,0.2]](https://s0.wp.com/latex.php?latex=X%3D6+%5Ccdot+10%5E%7B10%7D%2B2099+%2B+%5B0%2C1%5D%2C+y%3D%5B0.2%2C1%5D%2C+t%3D%5B0%2C0.2%5D&bg=ffffff&fg=545454&s=0&c=20201002), but now recalculating the ddx and ddt-bounds for each rectangle, completed in 2 hours and 17 minutes. As expected the number of rectangles (or t-steps) to be verified, dropped from  to  .

Here is the output:

Summary per rectangle

Full detail

30 April, 2018 at 6:50 am

KM

At t=0, y=0.2, N=69098, e_A + e_B + e_C,0 is 0.0012, hence not that small. For the t=0 rectangle, the gap between mesh points was taken as around 1/(ddx bound at t=0) for the sides where x varies and 0.8/ddx bound for the sides where y varies (using the ddx terminology for both ddx and ddy).

In this particular strip, the minimum |(A+B)/B0| for the t=0 mesh point set turned out to be around 2.374, and above 1.3 for all the mesh points in all the rectangles considered. From this it seems that between the mesh points, |(A+B)/B0| here can’t go below about 1.185 for t=0 or below 0.65 for the other rectangles (or maybe a bit larger, since the step size along the rectangles was more conservative than what an adaptive ddx based mesh would have allowed).

Since t_next was set as t + min_|(A+B)/B0|_t / ddt_bound, it seems |(A+B)/B0| can’t go below 0.325 between the t points, though not sure whether the |f(z)|/2 concept can be applied in the t dimension.

30 April, 2018 at 8:30 am

Terence Tao

It’s interesting that  is now exceeding 1 at t=0. Is this the reason for selecting ![X = 6 \times 10^{10} + 2099 + [0,1]](https://s0.wp.com/latex.php?latex=X+%3D+6+%5Ctimes+10%5E%7B10%7D+%2B+2099+%2B+%5B0%2C1%5D&bg=ffffff&fg=545454&s=0&c=20201002) ? I would have expected this function to have average size comparable to 1, so the minimum would usually be less than 1.

I think the  lower bound should work in the t variable as it does in the x or y variables (as long as the quantity  is estimated on the entire contour, and not just on the mesh, so one also needs some control on x and y derivatives). But it does seem that there is plenty of room between  and the error terms  to accommodate any other errors we have to deal with (e.g. the error term in the 50-term Taylor expansion; I’m not sure how best to control that error yet, but given how small it is compared to everything else presumably we will be able to get away with a quite crude estimate here).

30 April, 2018 at 11:06 am

KM

Yes, this particular X was chosen after some exploration to get large jumps in t. |(A+B)/B0| does seem to have a tendency to jump sharply occasionally near t=0.

For example, I recently accidentally hit upon X=201062000500 (N=126491), y=0.2, t=0, where the estimate is 15.92 (error bound 0.0009). Changing y to 0.1 gives 23.16, and changing y to 0 gives 38.51. It seems local spikes of H_0 do not die down that quickly.

30 April, 2018 at 11:52 am

KM

To accelerate the mollifier bounds calculations (2-b-a), can we use a strategy like this:

Suppose we know the bound at N and it is positive enough and we are trying to reuse it for the bound at N+1. The DN terms (each for the A and B parts) for the bounds at N and N+1 are similar except for the (t/2)Re(alpha) factor. Hence we subtract the extra D terms from the bound at N to get a conservative estimate for that at N+1. Similarly to get the bound at N+2 from that at N+1. The conservative bound slowly decreases towards a positive predetermined threshold and we then update the base N again with the exact bound. Actually it seems this looks quite similar to an N mesh except there are a few calculations for each N.
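A minimal sketch of this reuse strategy, with the actual mollifier bound abstracted away. Both callables are assumptions made for illustration: `exact_bound(N)` stands for the expensive exact lower bound, and `extra_terms(N)` for a cheap upper bound on how much the bound can drop when passing from N-1 to N (including whatever correction the (t/2)Re(alpha) factor requires). `sweep_bounds` is a hypothetical name.

```python
def sweep_bounds(exact_bound, extra_terms, n_start, n_end, threshold):
    """Return (N, lower_bound, was_exact) for each N in [n_start, n_end].

    The exact bound is recomputed only when the running conservative
    estimate falls below `threshold`.
    """
    bound = exact_bound(n_start)
    if bound < threshold:
        raise ValueError("exact bound at N=%d is below the threshold" % n_start)
    results = [(n_start, bound, True)]
    for n in range(n_start + 1, n_end + 1):
        bound -= extra_terms(n)  # conservative estimate at N from that at N-1
        was_exact = False
        if bound < threshold:
            bound = exact_bound(n)  # refresh the base N with the exact bound
            was_exact = True
            if bound < threshold:
                raise ValueError("exact bound at N=%d is below the threshold" % n)
        results.append((n, bound, was_exact))
    return results

if __name__ == "__main__":
    # Toy inputs (assumptions): constant exact bound 0.3, constant loss 0.06 per step.
    for row in sweep_bounds(lambda n: 0.3, lambda n: 0.06, 10, 20, 0.1):
        print(row)
```

The threshold trades the number of exact recomputations against how much positivity is kept in reserve, so a higher threshold means more frequent refreshes.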

30 April, 2018 at 5:23 pm

Terence Tao

This looks like it should work. Another variant, which may be a little simpler, is to take the current  approximation, which I will call  to make the dependence on  explicit, and approximate it by  for  in a mesh interval  . For  small, say  , one can hopefully estimate the error between the two approximations and keep it well under acceptable levels. Then we can apply the Euler mollifier method to  and bound this for all  in the range with  . With this approach, one doesn't have to analyse the more complicated sums arising from the Euler mollifications of  .

Also, relating to Euler mollification: the vestige of the Euler product formula  suggests, heuristically at least, that  will be a little larger than usual when the argument of  is close to zero for small primes  , or equivalently if  is close to an integer for (say)  . This heuristic seems to be in good agreement with your choice of  , where  has fractional part  and  has fractional part  (though things are worse at p=5). It might be that one could use this heuristic to locate some promising further candidate scales x which one could then test numerically to see if they outperform the ones you already found. In particular, a partial Euler product  for some small value of  (e.g.  ) could perhaps serve as a proxy for how large one might expect  to be. (Not coincidentally, these factors also show up in the Euler mollification method. One might also work with the more complicated variant  , though this may not make too much difference especially since we are primarily concerned with  near zero.)

1 May, 2018 at 4:54 am

Anonymous

Dirichlet’s theorem on simultaneous Diophantine approximation (which follows from the pigeonhole principle) states that for any sequence of real numbers  , any sequence of consecutive integers  contains an integer  such that

where  denotes the distance from  to its nearest integer.

Hence (with  ) where  denotes the j-th prime, it follows that for a mollifier based on three primes, and  , it is possible to find  such that

1 May, 2018 at 8:37 am

KM

By evaluating sum(||x * alpha_p||) or evaluating the Euler product for a large number of x (e.g. 6*10^10 + n, with n running over the first 100k integers), and sorting to get small sums or large products, we get several candidates where |(A+B)/B0| is greater than 50. It seems this can be turned into a general method to choose a good barrier strip.

Some optimizations which further help are to compute the Euler products for x-0.5,x,x+0.5 and filter for x where all three are high, and to use a larger number of primes. Even with these the whole exercise is quite fast (about 2-3 minutes). Also since min |(A+B)/B0| for a rectangle generally occurs on the y=1 side, it helps to search for candidates using the Euler products at both y=0 and y=1.

Using the first 5 primes and the above filters, I could find a candidate X=6*10^10+83952, where the estimate is 72.5 at y=0 and 5.13 at y=1. Running the barrier strip with X+[-0.5,0.5], y=[0.2,1], min |(A+B)/B0| started at 4.32 for the t=0 rectangle (2.374 for the earlier strip), and far fewer t steps were needed overall (280 instead of 440).
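The search by sum(||x * alpha_p||) could be sketched roughly as below. The definition of alpha_p is not reproduced in the text above, so the value log(p)/(4*pi) used in the example run is an assumption, as are the function names; the Euler product variant and the x-0.5, x, x+0.5 filter are not implemented here.

```python
import math

def dist_to_nearest_int(v):
    """||v||: distance from v to the nearest integer."""
    return abs(v - round(v))

def score(x, alphas):
    """sum_p ||x * alpha_p||; small values flag promising x."""
    return sum(dist_to_nearest_int(x * a) for a in alphas)

def best_candidates(x_start, count, alphas, keep):
    """Score x_start + n for n = 0..count-1 and return the `keep` smallest
    as (score, x) pairs, best first."""
    scored = [(score(x_start + n, alphas), x_start + n) for n in range(count)]
    scored.sort()
    return scored[:keep]

if __name__ == "__main__":
    primes = [2, 3, 5, 7, 11]
    # ASSUMPTION: alpha_p = log(p) / (4*pi); the source does not state it here.
    alphas = [math.log(p) / (4.0 * math.pi) for p in primes]
    for s, x in best_candidates(6 * 10**10, 100000, alphas, 5):
        print(x, round(s, 4))
```

Any x returned this way is only a candidate; as described above, it still has to be checked by evaluating |(A+B)/B0| on the actual rectangle.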

2 May, 2018 at 2:36 am

Rudolph01