Nonstandard analysis is a mathematical framework in which one extends the standard mathematical universe ${\mathfrak U}$ of standard numbers, standard sets, standard functions, etc. into a larger nonstandard universe ${}^* {\mathfrak U}$ of nonstandard numbers, nonstandard sets, nonstandard functions, etc., somewhat analogously to how one places the real numbers inside the complex numbers, or the rationals inside the reals. This nonstandard universe enjoys many of the same properties as the standard one; in particular, we have the transfer principle that asserts that any statement in the language of first order logic is true in the standard universe if and only if it is true in the nonstandard one. (For instance, because Fermat’s last theorem is known to be true for standard natural numbers, it is automatically true for nonstandard natural numbers as well.) However, the nonstandard universe also enjoys some additional useful properties that the standard one does not, most notably the countable saturation property, which is a property somewhat analogous to the completeness property of a metric space; much as metric completeness allows one to assert that the intersection of a countable family of nested closed balls is non-empty, countable saturation allows one to assert that a countable family of nested satisfiable formulae is simultaneously satisfiable. (See this previous blog post for more on the analogy between the use of nonstandard analysis and the use of metric completions.) Furthermore, by viewing both the standard and nonstandard universes externally (placing them both inside a larger metatheory, such as a model of Zermelo-Fraenkel-Choice (ZFC) set theory; in some more advanced set-theoretic applications one may also wish to add some large cardinal axioms), one can place some useful additional definitions and constructions on these universes, such as defining the concept of an infinitesimal nonstandard number (a number which is smaller in magnitude than any positive standard number). The ability to rigorously manipulate infinitesimals is of course one of the most well-known advantages of working with nonstandard analysis.

To build a nonstandard universe ${}^* {\mathfrak U}$ from a standard one ${\mathfrak U}$, the most common approach is to take an ultrapower of ${\mathfrak U}$ with respect to some non-principal ultrafilter over the natural numbers; see e.g. this blog post for details. Once one is comfortable with ultrafilters and ultrapowers, this becomes quite a simple and elegant construction, and greatly demystifies the nature of nonstandard analysis.

On the other hand, nonprincipal ultrafilters do have some unappealing features. The most notable one is that their very existence requires the axiom of choice (or more precisely, a weaker form of this axiom known as the boolean prime ideal theorem). Closely related to this is the fact that one cannot actually write down any explicit example of a nonprincipal ultrafilter, but must instead rely on nonconstructive tools such as Zorn’s lemma, the Hahn-Banach theorem, Tychonoff’s theorem, the Stone-Čech compactification, or the boolean prime ideal theorem to locate one. As such, ultrafilters definitely belong to the “infinitary” side of mathematics, and one may feel that it is inappropriate to use such tools for “finitary” mathematical applications, such as those which arise in hard analysis. From a more practical viewpoint, because of the presence of the infinitary ultrafilter, it can be quite difficult (though usually not impossible, with sufficient patience and effort) to take a finitary result proven via nonstandard analysis and coax an effective quantitative bound from it.

There is however a “cheap” version of nonstandard analysis which is less powerful than the full version, but is not as infinitary in that it is constructive (in the sense of not requiring any sort of choice-type axiom), and which can be translated into standard analysis somewhat more easily than a fully nonstandard argument; indeed, a cheap nonstandard argument can often be presented (by judicious use of asymptotic notation) in a way which is nearly indistinguishable from a standard one. It is obtained by replacing the nonprincipal ultrafilter in fully nonstandard analysis with the more classical Fréchet filter of cofinite subsets of the natural numbers, which is the filter that implicitly underlies the concept of the classical limit of a sequence when the underlying asymptotic parameter ${\bf n}$ goes off to infinity. As such, “cheap nonstandard analysis” aligns very well with traditional mathematics, in which one often allows one’s objects to be parameterised by some external parameter such as ${\bf n}$, which is then allowed to approach some limit such as $\infty$. The catch is that the Fréchet filter is merely a filter and not an ultrafilter, and as such some of the key features of fully nonstandard analysis are lost. Most notably, the law of the excluded middle does not transfer over perfectly from standard analysis to cheap nonstandard analysis; much as there exist bounded sequences of real numbers (such as $(-1)^{\bf n}$) which do not converge to a (classical) limit, there exist statements in cheap nonstandard analysis which are neither true nor false (at least without passing to a subsequence, see below). The loss of such a fundamental law of mathematical reasoning may seem like a major disadvantage for cheap nonstandard analysis, and it does indeed make cheap nonstandard analysis somewhat weaker than fully nonstandard analysis. But in some situations (particularly when one is reasoning in a “constructivist” or “intuitionistic” fashion, and in particular if one is avoiding too much reliance on set theory) it turns out that one can survive the loss of this law; furthermore, the law of the excluded middle is still available for standard analysis, and so one can often proceed by working from time to time in the standard universe to temporarily take advantage of this law, and then transferring the results obtained there back to the cheap nonstandard universe once one no longer needs to invoke the law of the excluded middle. Finally, the law of the excluded middle can be recovered by adopting the freedom to pass to subsequences with regard to the asymptotic parameter ${\bf n}$; this technique is already in widespread use in the analysis of partial differential equations, although it is generally referred to by names such as “the compactness method” rather than as “cheap nonstandard analysis”.

Below the fold, I would like to describe this cheap version of nonstandard analysis, which I think can serve as a pedagogical stepping stone towards fully nonstandard analysis, as it is formally similar to (though weaker than) fully nonstandard analysis, but on the other hand is closer in practice to standard analysis. As we shall see below, the relation between cheap nonstandard analysis and standard analysis is analogous in many ways to the relation between probabilistic reasoning and deterministic reasoning; it also resembles somewhat the preference in much of modern mathematics for viewing mathematical objects as belonging to families (or to categories) to be manipulated en masse, rather than treating each object individually. (For instance, nonstandard analysis can be used as a partial substitute for scheme theory in order to obtain uniformly quantitative results in algebraic geometry, as discussed for instance in this previous blog post.)

— 1. Details —

To set up cheap nonstandard analysis, we will need an asymptotic parameter ${\bf n}$, which we will take to initially lie in the natural numbers ${\bf N}$ (though it is certainly possible to set up cheap nonstandard analysis on other non-compact spaces than ${\bf N}$ if one wishes). However, we reserve the right in the future to restrict the parameter space from ${\bf N}$ to a smaller infinite subset ${\bf N}' \subset {\bf N}$ (which corresponds to the familiar operation of passing from a sequence $(x_{\bf n})_{{\bf n} \in {\bf N}}$ to a subsequence, except that we do not bother to relabel the subsequence to be indexed by ${\bf N}$ again). The dynamic nature of the parameter space ${\bf N}$ makes it a little tricky to properly formalise cheap nonstandard analysis in the usual static framework of mathematical logic, but it turns out not to make much difference in practice, because in cheap nonstandard analysis one only works with statements which remain valid under the operation of restricting the underlying domain of the asymptotic parameter. (This is analogous to how in probability theory one only works with statements which remain valid under the operation of extending the underlying probability space, as discussed in this blog post.)

We then distinguish two types of mathematical objects:

- Standard objects $x$, which do not depend on the asymptotic parameter ${\bf n}$; and

- Nonstandard objects $x = x_{\bf n}$, which are allowed to depend on the asymptotic parameter ${\bf n}$.

Similarly with “object” replaced by other mathematical concepts such as “number”, “point”, “set”, “function”, etc. Thus, for instance, a nonstandard real is a real number $x = x_{\bf n}$ that depends on the asymptotic parameter ${\bf n}$; a nonstandard function is a function $f = f_{\bf n}: X_{\bf n} \to Y_{\bf n}$ from a domain $X = X_{\bf n}$ to a range $Y = Y_{\bf n}$, which are all allowed to depend on the asymptotic parameter ${\bf n}$; and so forth.

Of course, with these conventions, every standard object is automatically also a nonstandard object. (One can use the terminology strictly nonstandard object to refer to a nonstandard object which is not a standard object.) This will lead to the following slight abuse of notation: if $X$ is a standard set, we consider a cheap nonstandard element of $X$ to be a nonstandard object $x = x_{\bf n}$, such that each $x_{\bf n}$ is an element of $X$. In the strict set-theoretic sense, this is not an actual element of $X$, which consists only of standard objects, but we will abuse notation and consider it as a nonstandard element of $X$. (This is analogous to how, in probability theory, a real-valued random variable is not actually a (deterministic) element of ${\bf R}$, but can be considered as a probabilistic element of ${\bf R}$ instead.) If one wants to be more pedantic, one can use the notation ${}^* X$ to denote the collection of all nonstandard elements of $X$, which is what one usually does in fully nonstandard analysis (after quotienting by equivalence with respect to a non-principal ultrafilter), but we will avoid trying to collect cheap nonstandard elements into a set here because it interferes with the freedom to pass to subsequences. Because of this, though, it is best not to combine cheap nonstandard analysis with any advanced set theory unless one knows exactly what one is doing, as one can get quite confused if one is not already experienced in both set theory and nonstandard analysis. (In such circumstances, it is best to instead use fully nonstandard analysis, which can treat the nonstandard universe as an actual set rather than a “potential” one.)

Example 1 Suppose $(x_{\bf n})_{{\bf n} \in {\bf N}}$ is a bounded sequence of real numbers, with the uniform upper bound $|x_{\bf n}| \leq M$ for all ${\bf n}$. Then one can interpret $x = x_{\bf n}$ as a single nonstandard real, while the bound $M$ remains a standard real. Note the slight conceptual distinction between the cheap nonstandard approach of treating $x$ as a single nonstandard object, and the classical approach of treating $(x_{\bf n})_{{\bf n} \in {\bf N}}$ as a sequence of standard objects. This distinction is analogous to the distinction between treating a random variable as a single probabilistic object (as is done in probability theory), as opposed to treating it as a measurable function mapping the underlying measure space to a deterministic range space (which is the viewpoint taken in measure theory). The two viewpoints are mathematically equivalent, but can lead to somewhat different ways of thinking about such objects.

Example 2 In graph theory, an expander family is a sequence of finite graphs $G_{\bf n} = (V_{\bf n}, E_{\bf n})$ which have uniform edge expansion in the following sense: there exists an $\epsilon > 0$ independent of ${\bf n}$, such that for any ${\bf n}$ and any subset $A$ of $V_{\bf n}$ of size at most $|V_{\bf n}|/2$, $A$ is adjacent to at least $\epsilon |A|$ other vertices in $V_{\bf n}$. Adopting a cheap nonstandard analysis perspective, one can view this expander family as a single cheap nonstandard graph $G = (V, E)$ (with a cheap nonstandard finite vertex set $V = V_{\bf n}$ and edge set $E = E_{\bf n}$), while the expansion constant $\epsilon$ remains a standard real number.
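To make the uniformity requirement concrete, here is a small brute-force sketch in Python (an illustration, not part of the original discussion) that computes the best constant $\epsilon$ for a single finite graph under the expansion condition stated above. The cycle graphs $C_{\bf n}$ are an assumed test family: the worst set is an arc of about half the cycle, whose outside neighbourhood has only two vertices, so the best constant is $2/\lfloor {\bf n}/2 \rfloor$, which tends to zero. In cheap nonstandard language, the single nonstandard graph $C_{\bf n}$ has an infinitesimal expansion constant rather than one bounded below by a standard $\epsilon > 0$, so the cycles do not form an expander family. The function names are hypothetical, and the exhaustive search is only feasible for small graphs.

```python
from itertools import combinations


def cycle_graph(n):
    """Adjacency sets of the cycle graph on vertices 0, ..., n-1 (n >= 3)."""
    return {v: {(v - 1) % n, (v + 1) % n} for v in range(n)}


def vertex_expansion(adj):
    """Largest eps such that every nonempty A with |A| <= |V|/2 is adjacent
    to at least eps * |A| vertices outside A (found by exhaustive search)."""
    vertices = list(adj)
    best = float("inf")
    for size in range(1, len(vertices) // 2 + 1):
        for subset in combinations(vertices, size):
            a = set(subset)
            # vertices outside A that are adjacent to some vertex of A
            outside_neighbours = set().union(*(adj[v] for v in a)) - a
            best = min(best, len(outside_neighbours) / len(a))
    return best


if __name__ == "__main__":
    for n in range(4, 15, 2):
        print(n, vertex_expansion(cycle_graph(n)))
```

The printed constants shrink roughly like $4/{\bf n}$, which is the finite shadow of the statement that $\epsilon$ fails to be a standard positive real for this family.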

Example 3 In the above two examples, the asymptotic parameter ${\bf n}$ was already inherent in the mathematical structure being studied. In other situations, there is initially no asymptotic parameter present, but one can be created by arguing by contradiction and selecting a sequence of counterexamples (in some cases, this requires a countable version of the axiom of choice). For instance, suppose one has a (standard) function $f: X \to {\bf R}$ on some domain $X$, and one wishes to show that $f$ is bounded. If $f$ were not bounded, then for each natural number ${\bf n}$ one could find a point $x_{\bf n}$ in the domain $X$ with $|f(x_{\bf n})| \geq {\bf n}$; one can then collect all of these standard points $x_{\bf n}$ together to form a cheap nonstandard point $x = x_{\bf n}$, with the property that $f(x)$ is unbounded (we will define what this means shortly). Taking contrapositives, we see that to show that $f$ is a bounded function, it suffices to show that $f(x)$ is bounded for each cheap nonstandard point $x$ in $X$.
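The construction in Example 3 can be mimicked concretely when the domain comes with an explicit enumeration of candidate points, in which case no appeal to choice is needed: one simply takes the first candidate that works. In the Python sketch below, the function $f(x) = 1/x$ on $(0, 1]$ and the enumeration $1, 1/2, 1/3, \ldots$ (truncated to finitely many terms) are assumptions made for illustration, and `witness` is a hypothetical helper. The resulting points $x_{\bf n} = 1/{\bf n}$ assemble into a cheap nonstandard point $x$ with $f(x) = {\bf n}$, which is unbounded.

```python
from fractions import Fraction

# Assumed explicit enumeration of (finitely many) candidate points in (0, 1].
CANDIDATES = [Fraction(1, k) for k in range(1, 10_001)]


def witness(f, n, candidates=CANDIDATES):
    """First candidate x with |f(x)| >= n, or None if the search fails."""
    for x in candidates:
        if abs(f(x)) >= n:
            return x
    return None


def f(x):
    return 1 / x  # unbounded on (0, 1]


# The counterexamples x_1, x_2, ... form one cheap nonstandard point x = x_n.
xs = [witness(f, n) for n in range(1, 6)]
print(xs)  # [Fraction(1, 1), Fraction(1, 2), Fraction(1, 3), Fraction(1, 4), Fraction(1, 5)]
```

For a bounded function such as $g(x) = x$ the search returns `None` as soon as ${\bf n} > 1$, which is the finite trace of the contrapositive argument in the text.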

We will only be interested in the behaviour of cheap nonstandard mathematical objects in the asymptotic limit ${\bf n} \to \infty$. To this end, we will reserve the right to delete an arbitrary finite number of values from the domain ${\bf N}$ of the parameter ${\bf n}$, and work only with sufficiently large choices of this parameter. In particular, two nonstandard objects $x = x_{\bf n}$, $y = y_{\bf n}$ will be considered equal if one has $x_{\bf n} = y_{\bf n}$ for all sufficiently large ${\bf n}$. (This is where the Fréchet filter is implicitly coming into play.)

Any operation which can be applied to standard objects $x$ can also be applied to cheap nonstandard objects $x = x_{\bf n}$ by applying the standard operation separately for each choice of asymptotic parameter ${\bf n}$ in the current domain ${\bf N}$, with no interaction between different values of the asymptotic parameter. For instance, the sum $x + y$ of two cheap nonstandard reals $x = x_{\bf n}$ and $y = y_{\bf n}$ is given by $x + y := x_{\bf n} + y_{\bf n}$. Similarly, if $f = f_{\bf n}: X_{\bf n} \to Y_{\bf n}$ is a cheap nonstandard function from a cheap nonstandard set $X = X_{\bf n}$ to another cheap nonstandard set $Y = Y_{\bf n}$, and $x = x_{\bf n}$ is a cheap nonstandard element of $X$ (thus $x_{\bf n}$ is an element of $X_{\bf n}$ for all ${\bf n}$ in the current parameter space ${\bf N}$), then $f(x)$ is the cheap nonstandard element defined by $f(x) := f_{\bf n}(x_{\bf n})$. Since every standard function is also a nonstandard function, this also defines $f(x)$ when $f$ is standard and $x$ is nonstandard, and also vice versa.
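As a rough sketch of this pointwise convention, one can model a cheap nonstandard real in Python as a function ${\bf n} \mapsto x_{\bf n}$ and let every operation act on each ${\bf n}$ separately. The class name `CheapReal` and its methods are hypothetical; the sketch deliberately ignores the identification of sequences that agree for all sufficiently large ${\bf n}$, since that identification cannot be decided by a finite computation.

```python
from typing import Callable


class CheapReal:
    """A cheap nonstandard real x = x_n, stored as the map n -> x_n."""

    def __init__(self, seq: Callable[[int], float]):
        self.seq = seq

    @staticmethod
    def standard(c: float) -> "CheapReal":
        # A standard real is the nonstandard real that does not depend on n.
        return CheapReal(lambda n: c)

    def __call__(self, n: int) -> float:
        return self.seq(n)

    def __add__(self, other: "CheapReal") -> "CheapReal":
        # (x + y)_n := x_n + y_n, with no interaction between different n
        return CheapReal(lambda n: self(n) + other(n))

    def __mul__(self, other: "CheapReal") -> "CheapReal":
        return CheapReal(lambda n: self(n) * other(n))

    def apply(self, f: Callable[[float], float]) -> "CheapReal":
        # f(x)_n := f(x_n) for a standard function f
        return CheapReal(lambda n: f(self(n)))

    def apply_nonstandard(
        self, fs: Callable[[int], Callable[[float], float]]
    ) -> "CheapReal":
        # f(x)_n := f_n(x_n) for a nonstandard function f = f_n
        return CheapReal(lambda n: fs(n)(self(n)))


x = CheapReal(lambda n: (-1) ** n)
y = CheapReal(lambda n: 1 / n)
s = x + y
print([s(n) for n in range(1, 5)])
```

Because each operation closes over the index ${\bf n}$ and nothing else, restricting the domain to a subsequence never changes how any value is computed, which mirrors the freedom to pass to subsequences.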

(Again, one should note the analogy here with probability theory; any operation that can be applied to deterministic objects can also be applied to probabilistic objects, by applying the deterministic operation to each individual point in the event space separately. For instance, the sum $X + Y$ of two random variables $X, Y$ is defined by the formula $(X+Y)(\omega) := X(\omega) + Y(\omega)$ for all points $\omega$ in the event space. Because of things like this, the event parameter $\omega$ can usually be suppressed entirely from view when doing probability, and similarly the parameter ${\bf n}$ can also usually be suppressed from view when performing cheap nonstandard analysis.)

Similarly, any relation or predicate which can be applied to standard objects can be applied to cheap nonstandard objects, with the relation or predicate considered to be true for some cheap nonstandard objects if it is true for all sufficiently large values of the asymptotic parameter (in the current domain ${\bf N}$). For instance, given two nonstandard real numbers $x = x_{\bf n}$ and $y = y_{\bf n}$, we say that $x < y$ if one has $x_{\bf n} < y_{\bf n}$ for all sufficiently large ${\bf n}$. Similarly, we say that the relation is false if it is false for all sufficiently large values of the asymptotic parameter.
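Since “for all sufficiently large ${\bf n}$” quantifies over infinitely many values, it cannot be checked by computation; the Python sketch below only tests a finite window of large ${\bf n}$ as a stand-in. The window bounds and the helper name `eventually` are assumptions for illustration. It shows the relation $x < y$ holding in the cheap nonstandard sense even though it fails for small ${\bf n}$, and two sequences that differ in finitely many places being identified, as in the definition of equality for nonstandard objects.

```python
def eventually(pred, start=1_000, stop=2_000):
    """Heuristic stand-in for 'pred(n) holds for all sufficiently large n':
    only the finite window start <= n < stop is checked."""
    return all(pred(n) for n in range(start, stop))


def x(n):
    return 1 / n


def y(n):
    return 2 / n if n > 10 else -1.0  # y_n < x_n for the first ten values


def z(n):
    return 0.0 if n <= 10 else 1 / n  # agrees with x for all n > 10


print(x(5) < y(5))                         # False: fails for small n
print(eventually(lambda n: x(n) < y(n)))   # True: x < y as cheap nonstandard reals
print(eventually(lambda n: x(n) == z(n)))  # True: x = z as cheap nonstandard reals
```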

It is here that we run into the main defect of the cheap version of nonstandard analysis: it is possible for a statement concerning cheap nonstandard objects to be neither fully true nor fully false, but instead merely both potentially true and potentially false. (Again, this is in analogy with probability theory, in which a probabilistic statement need not be almost surely true or almost surely false, but could instead be true with positive probability and also false with positive probability.) For instance, using the natural numbers as the initial domain of the asymptotic parameter, the nonstandard real number $x := (-1)^{\bf n}$ is neither positive, negative, nor zero, because none of the three statements $x > 0$, $x < 0$, or $x = 0$ is true for all sufficiently large ${\bf n}$. However, if one restricts the asymptotic parameter to the even integers, then this real number $x$ becomes positive, while if one restricts the asymptotic parameter instead to the odd integers, then the real number becomes negative. Until we make such a restriction, though, we cannot assign a definite truth value to statements such as “$x$ is positive”, and so we must accept the existence of statements in cheap nonstandard analysis whose truth value is currently indeterminate, although once the truth value does become determinate, it remains that way under any further refinement of the underlying domain ${\bf N}$. (This problem is precisely what is rectified by the use of an ultrafilter in fully nonstandard analysis, as ultrafilters always contain exactly one of a given subset of ${\bf N}$ and its complement.) But there is certainly substantial precedent in mathematics for reasoning with statements with an indeterminate truth value: probability theory, intuitionistic logic, and modal logic, for instance, all contain this issue in some shape or form.
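The following Python sketch illustrates the three-valued behaviour of $x = (-1)^{\bf n}$ on a finite sample of the current domain, and how restricting the domain to the even or odd integers settles the truth value. A finite sample can only suggest, not establish, what holds for all sufficiently large ${\bf n}$; the helper `truth_value` and the sample ranges are hypothetical choices.

```python
def truth_value(pred, domain):
    """Classify pred on a finite sample of the parameter domain as
    'true', 'false' or 'indeterminate' (a heuristic, not a proof)."""
    values = [pred(n) for n in domain]
    if all(values):
        return "true"
    if not any(values):
        return "false"
    return "indeterminate"


def x(n):
    return (-1) ** n


tail = range(1_000, 2_000)      # large n in the full domain
evens = range(1_000, 2_000, 2)  # domain restricted to even n
odds = range(1_001, 2_000, 2)   # domain restricted to odd n

print(truth_value(lambda n: x(n) > 0, tail))   # indeterminate
print(truth_value(lambda n: x(n) > 0, evens))  # true
print(truth_value(lambda n: x(n) > 0, odds))   # false
print(truth_value(lambda n: x(n) == 0, tail))  # false
```

Note that “$x = 0$” comes out false rather than indeterminate: $x$ is certainly nonzero, but it is neither positive nor negative until the domain is restricted.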

Despite this indeterminacy, we still largely retain the fundamental transfer principle of nonstandard analysis: many statements of first-order logic which are true (resp. false) when quantified over standard objects remain true (resp. false) when quantified over nonstandard objects, regardless of what the current value of the domain of the asymptotic parameter is. For instance, addition and multiplication are commutative and associative for the standard real numbers, and thus for the cheap nonstandard real numbers also, as can be easily verified after a moment’s thought. Because of the lack of the law of excluded middle, though, sometimes one has to take some care in phrasing statements properly before they will transfer. For instance, the statement “If $xy = 0$, then either $x = 0$ or $y = 0$” is of course true for standard reals, but not for nonstandard reals; a counterexample can be given for instance by $x := 1 + (-1)^{\bf n}$ and $y := 1 - (-1)^{\bf n}$. However, the rephrasing “If $x \neq 0$ and $y \neq 0$, then $xy \neq 0$” is true for nonstandard reals (why?). As a rough rule of thumb, as long as the logical connectives “or” and “not” are avoided, one can transfer standard statements to cheap nonstandard ones, but otherwise one may need to reformulate the statement first before transfer becomes possible. Even when one’s statements do contain an “or” or a “not”, one can often still recover a nonstandard formulation after invoking the freedom to pass to a subsequence. For instance, “If $xy = 0$, then after passing to a subsequence if necessary, either $x = 0$ or $y = 0$” is a true statement in cheap nonstandard analysis (why? this is basically the infinite pigeonhole principle). Note that for fully nonstandard analysis, one does not need to be so careful with transfer, as all first-order statements transfer properly (this fact is known as Łoś’s theorem).
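The infinite pigeonhole step can be sketched as follows: every ${\bf n}$ with $x_{\bf n} y_{\bf n} = 0$ lies in $\{{\bf n}: x_{\bf n} = 0\}$ or in $\{{\bf n}: y_{\bf n} = 0\}$, so at least one of these sets is infinite, and restricting the domain to it makes one of the two statements true. On a finite sample, the Python sketch below (with the hypothetical helper `pigeonhole_subsequence`) simply keeps the larger index set, using the counterexample $x = 1 + (-1)^{\bf n}$, $y = 1 - (-1)^{\bf n}$ from the text.

```python
def pigeonhole_subsequence(x, y, domain):
    """Assuming x_n * y_n == 0 on the sampled domain, return ('x', sub) with
    x_n == 0 on sub, or ('y', sub) with y_n == 0 on sub.

    In the infinite argument one of the two index sets must be infinite;
    on a finite sample we keep the larger one (ties go to x)."""
    domain = list(domain)
    if any(x(n) * y(n) != 0 for n in domain):
        raise ValueError("the hypothesis xy = 0 fails on the sample")
    zeros_of_x = [n for n in domain if x(n) == 0]
    zeros_of_y = [n for n in domain if y(n) == 0]
    if len(zeros_of_x) >= len(zeros_of_y):
        return "x", zeros_of_x
    return "y", zeros_of_y


def x(n):
    return 1 + (-1) ** n  # 0 for odd n, 2 for even n


def y(n):
    return 1 - (-1) ** n  # 2 for odd n, 0 for even n


label, sub = pigeonhole_subsequence(x, y, range(1, 21))
print(label, sub)  # x [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
```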

We also retain a version of the equally fundamental countable saturation property of nonstandard analysis, although the cheap version is often referred to instead as the Arzelà-Ascoli diagonalisation argument. Let us call a nonstandard property $P(x)$ pertaining to a nonstandard object $x$ satisfiable if, no matter how one restricts the domain ${\bf N}$ from its current value, one can find an $x$ for which $P(x)$ becomes true, possibly after a further restriction of the domain ${\bf N}$. The countable saturation property is then the assertion that if one has a countable sequence $P_1(x), P_2(x), \ldots$ of properties, such that $P_1(x), \ldots, P_k(x)$ is jointly satisfiable for any finite $k$, then the entire sequence $P_1(x), P_2(x), \ldots$ is simultaneously satisfiable. The proof proceeds by mimicking the diagonalisation component of the proof of the Arzelà-Ascoli theorem, which explains the above terminology.
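The diagonalisation can be sketched as follows, under the simplifying assumption (not made in the text) that each $P_j(x)$ is a pointwise condition, true when $P_j(x_{\bf n})$ holds for all sufficiently large ${\bf n}$. Starting from an arbitrary restriction ${\bf N}_0$ of the current domain, joint satisfiability of $P_1, \ldots, P_k$ supplies, one $k$ at a time, an infinite ${\bf N}_k \subseteq {\bf N}_{k-1}$ and an $x^{(k)}$ satisfying $P_1, \ldots, P_k$ at every ${\bf n} \in {\bf N}_k$ (after deleting finitely many ${\bf n}$); one then reads off the diagonal:

```latex
\begin{gather*}
{\bf N}_0 \supseteq {\bf N}_1 \supseteq {\bf N}_2 \supseteq \cdots, \qquad
P_1(x^{(k)}_{\bf n}), \ldots, P_k(x^{(k)}_{\bf n}) \text{ hold for all } {\bf n} \in {\bf N}_k; \\
n_1 < n_2 < \cdots, \quad n_k \in {\bf N}_k, \qquad
{\bf N}_\infty := \{ n_1, n_2, \ldots \}, \qquad x_{n_k} := x^{(k)}_{n_k}; \\
k \geq j \implies P_j(x_{n_k}) \text{ holds, since } x_{n_k} = x^{(k)}_{n_k},\ n_k \in {\bf N}_k,\ j \leq k.
\end{gather*}
```

Thus each $P_j(x)$ holds at every $n_k$ with $k \geq j$, that is, for all sufficiently large ${\bf n}$ in the restricted domain ${\bf N}_\infty$, so the whole sequence is simultaneously satisfied.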

— 2. Asymptotic notation —

Thus far, there has been essentially no interaction between different choices of the parameter ${\bf n}$. In classical analysis, such an interaction is obtained by the device of introducing the limit $\lim_{{\bf n} \to \infty} x_{\bf n}$ of a convergent sequence, and also by introducing asymptotic notation such as $O(\cdot)$ and $o(\cdot)$ which tracks the dependence of various bounds on the parameter ${\bf n}$. These devices can be translated to the cheap nonstandard analysis setting without much difficulty, as follows.

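Firstly, given two cheap nonstandard numbers $X$ and $Y$, we may write $X = O(Y)$ (or $X \ll Y$, or $Y \gg X$) if one has $|X| \leq CY$ for some standard real number $C > 0$ (or equivalently, if $|X_{\bf n}| \leq C Y_{\bf n}$ for all sufficiently large ${\bf n}$). Similarly, we write $X = o(Y)$ if one has $|X| \leq cY$ for every standard real number $c > 0$ (or equivalently, if $|X_{\bf n}| \leq c({\bf n}) Y_{\bf n}$ for all sufficiently large ${\bf n}$ and some quantity $c({\bf n})$ that goes to zero as ${\bf n} \to \infty$). Note that these conventions are essentially the same as the usual asymptotic notation (except that sometimes the bound for the $O(\cdot)$ notation is required to hold for all ${\bf n}$, rather than merely for sufficiently large ${\bf n}$).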

A cheap nonstandard quantity $x$ is said to be bounded if one has $x = O(1)$, and infinitesimal if $x = o(1)$.
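A few worked instances of these definitions, with the asymptotic parameter running over ${\bf N}$ (the examples are illustrative choices, not taken from the text):

```latex
\begin{align*}
x = \sin {\bf n}: &\quad |x_{\bf n}| \leq 1 \text{ for all } {\bf n}, \text{ so } x = O(1) \text{ is bounded};\\
x = 1/{\bf n}: &\quad \text{for every standard } c > 0,\ |x_{\bf n}| \leq c \text{ once } {\bf n} \geq 1/c, \text{ so } x = o(1) \text{ is infinitesimal};\\
x = (-1)^{\bf n}: &\quad x = O(1), \text{ but } |x_{\bf n}| = 1 > 1/2 \text{ for all } {\bf n}, \text{ so } x \text{ is bounded but not infinitesimal};\\
x = {\bf n}: &\quad \text{for every standard } C > 0,\ |x_{\bf n}| > C \text{ once } {\bf n} > C, \text{ so } x \text{ is not bounded}.
\end{align*}
```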

Many quantitative statements about standard objects can be converted into equivalent qualitative statements about cheap nonstandard objects. We illustrate this with some simple examples.

Exercise 1 (Boundedness) Let $f: X \to {\bf R}$ be a standard function. Show that the following are equivalent:

- (Quantitative standard version) There exists a standard $M$ such that $|f(x)| \leq M$ for all standard $x \in X$.

- (Quantitative nonstandard version) There exists a standard $M$ such that $|f(x)| \leq M$ for all nonstandard elements $x$ of $X$.

- (Qualitative nonstandard version) $f(x)$ is bounded for each nonstandard element $x$ of $X$.

- (Qualitative subsequential nonstandard version) For each nonstandard element $x$ of $X$, $f(x)$ is bounded after passing to a subsequence.

Call two nonstandard elements $x, y$ of a standard metric space $(X, d)$ infinitesimally close if $d(x, y) = o(1)$.

Exercise 2 (Continuity) Let $f: X \to {\bf R}$ be a standard function on a standard metric space $X$. Show that the following are equivalent:

- (Standard version) $f$ is continuous.

- (Nonstandard version) If $x_0$ is a standard element of $X$ and $x$ is a cheap nonstandard element that is infinitesimally close to $x_0$, then $f(x)$ is infinitesimally close to $f(x_0)$.

- (Subsequential nonstandard version) If $x_0$ is a standard element of $X$ and $x$ is a cheap nonstandard element that is infinitesimally close to $x_0$, then $f(x)$ is infinitesimally close to $f(x_0)$ after passing to a subsequence.

Exercise 3 (Uniform continuity) Let $f: X \to {\bf R}$ be a standard function on a standard metric space $X$. Show that the following are equivalent:

- (Standard version) $f$ is uniformly continuous.

- (Nonstandard version) If $x_0$ is a nonstandard element of $X$ and $x$ is a cheap nonstandard element that is infinitesimally close to $x_0$, then $f(x)$ is infinitesimally close to $f(x_0)$.

- (Subsequential nonstandard version) If $x_0$ is a nonstandard element of $X$ and $x$ is a cheap nonstandard element that is infinitesimally close to $x_0$, then $f(x)$ is infinitesimally close to $f(x_0)$ after passing to a subsequence.

Exercise 4 (Differentiability) Let $f: {\bf R} \to {\bf R}$ and $f': {\bf R} \to {\bf R}$ be standard functions. Show that the following are equivalent:

- (Standard version) $f$ is everywhere differentiable, with derivative $f'$.

- (Nonstandard version) If $x$ is a standard real and $h$ is infinitesimal, then $f(x+h) = f(x) + f'(x) h + o(|h|)$.

- (Subsequential nonstandard version) If $x$ is a standard real and $h$ is infinitesimal, then $f(x+h) = f(x) + f'(x) h + o(|h|)$ after passing to a subsequence.
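A numerical illustration of the nonstandard version of Exercise 4 (not a solution of it): taking the infinitesimal $h = 1/{\bf n}$ and sampling a few values of ${\bf n}$, the normalised remainder $|f(x+h) - f(x) - f'(x) h| / |h|$ should shrink to zero for a differentiable $f$, and it does for $f = \sin$, $f' = \cos$ at $x = 1$. For $f(t) = |t|$ at $x = 0$ with the candidate derivative $0$, it stays equal to $1$, so the remainder is not $o(|h|)$. The helper name and sample points are assumptions, and a finite sample only suggests the limiting behaviour.

```python
import math


def remainder_ratio(f, fprime, x, h):
    """|f(x + h) - f(x) - f'(x) h| / |h| for a nonzero real h."""
    return abs(f(x + h) - f(x) - fprime(x) * h) / abs(h)


ns = [10, 100, 1_000, 10_000]  # sample values of the parameter n; h_n = 1/n

smooth = [remainder_ratio(math.sin, math.cos, 1.0, 1 / n) for n in ns]
kink = [remainder_ratio(abs, lambda t: 0.0, 0.0, 1 / n) for n in ns]

print(smooth)  # decreasing towards 0, roughly like sin(1) / (2n)
print(kink)    # [1.0, 1.0, 1.0, 1.0]
```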

One nice feature about nonstandard analysis is that it tends to automatically perform “epsilon management”, in that it tends to correctly figure out on its own what parameters are allowed to depend on what other parameters. Let us illustrate this with a simple example:

Exercise 5 Let $P(i, j, k)$ be a predicate depending on three natural numbers $i, j, k$. Show that the following are equivalent:

- (Standard version) For every standard $i$, there exists a standard constant $C_i$, such that for every $j$, there exists $k$ with $k \leq C_i j$ such that $P(i, j, k)$ holds.

- (Nonstandard version) For every standard natural number $i$ and cheap nonstandard natural number $j$, there exists a cheap nonstandard natural number $k = O(j)$ such that $P(i, j, k)$ holds.

Furthermore, show that requiring in the standard version that the constant $C_i$ is actually uniform in $i$ is equivalent to allowing $i$ to be nonstandard rather than standard in the nonstandard version.

As a consequence, the asymptotic notation in a nonstandard argument tends to be slightly simpler than in a standard counterpart; whereas in the standard world one usually has to subscript the asymptotic notation by various parameters (e.g. the bound in the above exercise could be written instead as $k = O_i(j)$), the subscripting is done automatically once one decides which variables are standard and which ones are nonstandard.

If a nonstandard real $x$ is of the form $x = x_0 + o(1)$ for some standard real $x_0$, we call $x_0$ the standard part of $x$ and write $x_0 = \mathrm{st}(x)$; viewing $x$ as a sequence $x = x_{\bf n}$, we see that this occurs precisely when the sequence $x_{\bf n}$ converges to $x_0$ as ${\bf n} \to \infty$. But because not all sequences are convergent, not all cheap nonstandard reals have a standard part. However, thanks to the Bolzano-Weierstrass theorem, we know that any bounded nonstandard number $x$ will have a standard part after passing to a subsequence; more generally, we can extract a standard part from any nonstandard object lying in a sequentially compact set by passing to a subsequence.
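The Bolzano-Weierstrass step can be sketched by the usual bisection argument: repeatedly halve an interval containing the sequence, and restrict the domain to those ${\bf n}$ landing in a half that contains infinitely many terms. On a finite sample one can only keep the half containing more of the remaining indices, so the Python sketch below (with the hypothetical helper `bisection_subsequence` and the example $x = (-1)^{\bf n} + 1/{\bf n}$) is a heuristic illustration rather than the proof. Here the odd indices survive and the restricted sequence clusters at its standard part $-1$.

```python
def bisection_subsequence(x, domain, lo, hi, steps):
    """Finite-sample sketch of the Bolzano-Weierstrass bisection argument.

    Assumes lo <= x(n) <= hi for every n in domain. At each step the interval
    is halved and the domain is restricted to the indices in the half holding
    more of them (the infinite argument keeps a half holding infinitely many).
    Returns the restricted domain and the final interval [lo, hi]."""
    idx = list(domain)
    for _ in range(steps):
        mid = (lo + hi) / 2
        left = [n for n in idx if x(n) <= mid]
        right = [n for n in idx if x(n) > mid]
        if len(left) >= len(right):
            idx, hi = left, mid
        else:
            idx, lo = right, mid
    return idx, lo, hi


def x(n):
    return (-1) ** n + 1 / n  # bounded, but with no standard part on all of N


idx, lo, hi = bisection_subsequence(x, range(1, 2_001), -2.0, 2.0, steps=10)
print(lo, hi)   # -1.0 -0.99609375
print(idx[:3])  # [257, 259, 261]
```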

We can then reformulate many basic compactness arguments in analysis using this observation (at least if the topologies involved are sufficiently non-pathological that they can be accurately probed by sequences). For instance, let us give the cheap nonstandard proof that a continuous function $f: X \to {\bf R}$ on a compact metric space $X$ is bounded. By Exercise 1, it suffices to show that $f(x)$ is bounded for every nonstandard element $x$ of $X$ after passing to a subsequence. But by the Bolzano-Weierstrass theorem (or more precisely, the sequential compactness of $X$), after passing to a subsequence $x$ has a standard part $x_0 \in X$, that is, a standard element infinitesimally close to $x$; by continuity (Exercise 2), $f(x)$ is then infinitesimally close to $f(x_0)$. But $f(x_0)$ is standard, and so $f(x)$ is bounded as well.

Exercise 6 Give a cheap nonstandard analysis proof of the fact that on a compact domain, every continuous function is uniformly continuous.

Readers who are familiar with traditional real analysis will note that the above proofs are basically just very lightly disguised versions of the usual sequence-based traditional proofs. Indeed, cheap nonstandard analysis is very close to sequence-based analysis (much as probability theory is very close to measure theory), and it is easy to translate from one to the other. (Fully nonstandard analysis is a bit different in this regard, because it relies on ultralimits rather than classical limits, but it is still analogous in many ways to sequence-based analysis.)

38 comments

2 April, 2012 at 10:38 pm

Ted Gast

Excellent post. You mentioned that this cheap version of nonstandard analysis has a logic that is somewhat intuitionistic. Does this mean that one can use Gödel–Gentzen negative translation in order to phrase things properly so that they transfer?

3 April, 2012 at 8:03 am

Terence Tao

Something like this does seem to be the case. Indeed, to use the example in the text, Gödel-Gentzen translates “If $xy = 0$, then $x = 0$ or $y = 0$” to “If $xy \neq 0$ is not true, then ($x \neq 0$ and $y \neq 0$) is not true”, which is the contrapositive of the translation “If $x \neq 0$ and $y \neq 0$, then $xy \neq 0$” offered in the text. (I am slightly worried about the use of the contrapositive, which is not allowed in intuitionistic logic, but I think it is OK because the metatheory remains in the realm of classical logic even after one restricts the internal logic of cheap nonstandard analysis to be intuitionistic.)

3 April, 2012 at 12:23 am

g

Trivial fix: you wrote “an arbitrary number of finite values” but meant “an arbitrary finite number of values”. (*All* the values are finite.)

[Corrected, thanks – T.]

3 April, 2012 at 5:04 am

Alexander Kreuzer

Dear Prof Tao,

thank you for your great post.

I would like to add that one can get even better results if one replaces the ultrafilter not with the Frechet filter but with other filters.

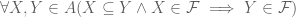

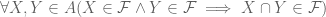

In fact, one can show that for any countable collection of sets one can construct (without AC) an approximate ultrafilter, in the sense that … is infinite.

For instance, Solovay constructed a filter which acts on the hyperarithmetical sets like an ultrafilter using such methods.

Thus, if one knows in advance that one uses an ultrafilter U only on countably many sets and these sets are given, then one can construct an approximate ultrafilter which could replace U in the proof.

This can be refined to eliminate general uses of ultrafilters over reasonable fragments of arithmetic (ACA_0).

See this paper by Henry Towsner for a treatment based on forcing

http://arxiv.org/abs/1109.3902

and this paper of mine which is based on proof-mining techniques

Best wishes,

Alexander Kreuzer

3 April, 2012 at 12:08 pm

Craig Helfgott

Random question: Is anyone else seeing the background of this page as dark grey? Is it just me? Terry, is this intentional?

3 April, 2012 at 12:12 pm

Anonymous

Me too.

3 April, 2012 at 12:19 pm

Terence Tao

That’s strange; I reset it just now.

4 April, 2012 at 12:30 am

andrejbauer

When you said “there exist statements in cheap nonstandard analysis which are neither true nor false”, it should be emphasized that this is a meta-statement about the cheap model. Inside the cheap model it is of course the case that the statement “there is a proposition which is neither true nor false” is false, i.e., inside the model we do have “¬(P ∧ ¬P)” simply because this is intuitionistically valid.

Erik Palmgren has studied constructive non-standard analysis, see http://www.jstor.org/stable/421031. Rather than fixing a particular filter, like you do, he used a variable filter. I think that got him more mileage.

4 April, 2012 at 12:34 am

andrejbauer

Oh, and I forgot to mention that Erik’s paper contains a reference to the first construction of a non-standard model, by Schmieden and Laugwitz from 1958, which was in fact the cheap model you describe, and it was constructive.

10 April, 2012 at 10:19 am

Christian Elsholtz

Here is a link to the paper by Curt Schmieden and Detlef Laugwitz:

Eine Erweiterung der Infinitesimalrechnung. Mathematische Zeitschrift 69, 1958, pages 1-39.

http://gdz.sub.uni-goettingen.de/no_cache/dms/load/img/?IDDOC=159786

As Robinson’s work on Nonstandard analysis appeared soon after this paper, and as Robinson’s approach was quite successful, the paper by Schmieden and Laugwitz is possibly not as well known as it deserves to be. It appears to me that for many applications an easier version (like Schmieden and Laugwitz and the current blog post) may indeed have pedagogical value.

9 May, 2012 at 6:34 am

katz

I would take the claim that the “first” construction of a non-standard model was given in 1958 by Schmieden and Laugwitz, with a grain of salt. First, Skolem constructed non-standard integers in 1934. Furthermore, E. Hewitt in 1948 constructed the hyper-reals (he coined the term), and Los proved what amounted to the transfer principle for them in 1955. Thus, three years before Schmieden and Laugwitz, a full infinitesimal theory was in place. It took Robinson to notice this.

4 April, 2012 at 4:29 pm

a

I think that in parts 2,3 of exercises 2 and 3, “$x$ is infinitesimally close to $f(x_0)$” should be “$f(x)$ is infinitesimally close to $f(x_0)$”.

[Corrected, thanks – T.]

21 April, 2012 at 7:06 pm

Tom Church

The second parts of Exercises 2 and 3 still have $x$ instead of $f(x)$.

[Hopefully this edit fixes the problem -T.]

18 April, 2012 at 6:40 am

Cheap non-standard analysis and expressions of certainty « Quomodocumque

[…] liked Terry’s post on cheap nonstandard analysis. I’ll add one linguistic comment. As Terry points out, you lose the law of the excluded […]

7 May, 2012 at 1:25 am

katz

Thanks for this nice post. I have a question about applications. Can the cheap version of nsa as above be used in order to prove, for example, the mean value theorem?

7 May, 2012 at 5:51 am

Terence Tao

Yes, but the proofs are basically thinly disguised versions of more familiar sequential-type proofs in analysis. For instance, consider the simpler result that every continuous function $f:[0,1] \to {\bf R}$ is bounded. If not, we can find standard $x \in [0,1]$ for which $|f(x)| \geq n$ for any given standard $n$, hence by saturation we can find cheap-nonstandard $x \in {}^*[0,1]$ for which $f(x)$ is unbounded. Passing to a subsequence so that $x$ has a standard part $\mathrm{st}(x)$, we see that $f(x)$ is not infinitesimally close to $f(\mathrm{st}(x))$, contradicting continuity. Similar arguments show that every continuous function attains a maximum, and then one can prove Rolle’s theorem, and then the mean value theorem, by minor variants of the usual arguments.
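For comparison, here is a sketch of the familiar sequential proof that the boundedness argument above disguises. The dictionary used (a cheap-nonstandard $x$ corresponds to a sequence $x_n$, and its standard part to a subsequential limit $x_0$) is our own gloss, not part of the original comment.

```latex
Suppose $f:[0,1]\to{\bf R}$ is continuous but unbounded.
For each $n \in {\bf N}$ choose $x_n \in [0,1]$ with $|f(x_n)| \geq n$.
By the Bolzano--Weierstrass theorem, some subsequence $x_{n_j}$ converges
to a limit $x_0 \in [0,1]$. Continuity then gives
\[
  f(x_{n_j}) \to f(x_0) \quad (j \to \infty),
\]
so the sequence $f(x_{n_j})$ is bounded; but $|f(x_{n_j})| \geq n_j \to \infty$,
a contradiction.
```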

8 May, 2012 at 12:26 am

katz

What I was wondering about is the mean value theorem for an infinitesimal interval, rather than the traditional mean value theorem. This question was posed by Felix Klein in 1908 and also by Abraham Fraenkel in 1926. They both viewed this as a criterion for the usefulness of a theory of infinitesimals. They both concluded that the theories available at the time did not meet this criterion. Note that the combination of the work of Hewitt of 1948 and that of Los of 1955 provides a framework that does satisfy the Klein-Fraenkel criterion. This is of course Robinson’s framework. Is there a cheap version of nsa that would satisfy the Klein-Fraenkel criterion?

14 October, 2012 at 3:57 am

Jim Henle

I believe a version of the Mean Value Theorem holds in the cheap version of nsa described here. The Transfer Principle holds for the following set $\mathcal H$ of statements: take all atomic statements and close under conjunction, universal and existential quantification, and what I call “universal implication”. What I mean is, if $P(a)$ and $Q(a)$ are in $\mathcal H$ for all constants $a$ (standard or nonstandard), then $\forall x(P(x)\Rightarrow Q(x))$ is also in $\mathcal H$. You can prove that any statement in $\mathcal H$ true about the real numbers is true about the cheap hyperreal numbers.

Then for real functions $f$ and $g$, there is a statement in $\mathcal H$ which says that $g$ is the derivative of $f$ at all points or at all points in an interval. It is not difficult then to write a statement in $\mathcal H$ equivalent to the Mean Value Theorem for $f$.
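To illustrate, here is one possible rendering (ours, not Henle's) of the two statements he mentions, assuming that atomic formulas may involve $f$, $g$, the field operations and absolute value. Each $\Rightarrow$ is meant as a universal implication in his sense, whose hypothesis and conclusion are themselves in $\mathcal H$. Since $a$ and $b$ range over all cheap hyperreals, the second statement, if it transfers as Henle describes, also covers intervals $[a,b]$ of infinitesimal length, which is the situation in the Klein-Fraenkel criterion raised in the question above.

```latex
% "g is the derivative of f at every point"
\[
\forall x\, \forall \varepsilon\, \Bigl( \varepsilon > 0 \Rightarrow
  \exists \delta\, \bigl( \delta > 0 \wedge \forall h\, \bigl( (0 < |h| \wedge |h| < \delta)
  \Rightarrow |f(x+h) - f(x) - g(x)\,h| \leq \varepsilon\,|h| \bigr) \bigr) \Bigr)
\]
% "mean value theorem for f"
\[
\forall a\, \forall b\, \Bigl( a < b \Rightarrow
  \exists c\, \bigl( a < c \wedge c < b \wedge f(b) - f(a) = g(c)\,(b-a) \bigr) \Bigr)
\]
```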

26 September, 2012 at 4:09 am

Jim Henle

David Cohen and I wrote a calculus text which uses cheap nonstandard analysis in a disguised form, “Calculus: The Language of Change”, Jones and Bartlett, 2005. See especially chapters 5 and 12.

29 December, 2012 at 1:04 pm

A mathematical formalisation of dimensional analysis « What’s new

[…] objects is analogous to the distinction between standard and (cheap) nonstandard objects in (cheap) nonstandard analysis. However, whereas in nonstandard analysis the underlying parameter is usually thought of as an […]

3 June, 2013 at 8:57 am

The prime tuples conjecture, sieve theory, and the work of Goldston-Pintz-Yildirim, Motohashi-Pintz, and Zhang | What's new

[…] will also need some asymptotic notation (in the spirit of “cheap nonstandard analysis“). We will need a parameter that one should think of going to infinity. Some mathematical […]

7 December, 2013 at 4:06 pm

Ultraproducts as a Bridge Between Discrete and Continuous Analysis | What's new

[…] to construct. We will not discuss such methods here, but see e.g. this paper of Palmgren. (See also this previous blog post for a “cheap” version of nonstandard analysis which uses the Frechet filter rather than […]

15 July, 2014 at 4:22 am

Real analysis relative to a finite measure space | What's new

[…] is particularly close when comparing with the “cheap nonstandard analysis” discussed in this previous blog post. We will also use “relative to ” as a synonym for […]

26 August, 2015 at 4:24 pm

Heath-Brown’s theorem on prime twins and Siegel zeroes | What's new

[…] to be with respect to the parameter, e.g. means that for some fixed . (In the language of this previous blog post, we are thus implicitly using “cheap nonstandard analysis”, although we will not […]

25 March, 2016 at 3:14 am

saghe

https://hal.inria.fr/hal-01248379/

In this link, one can find a new approach to nonstandard analysis that does not use model theory.

18 October, 2017 at 6:35 am

@no identd (@no_identd)

You might wish to edit the following sentence from the post above:

“it is constructive (in the sense of not requiring any sort of choice-type axiom)”

…in light of the following result by Jon M. Sterling, showing that there does exist a version of the Axiom of Choice which DOES NOT imply the law of the excluded middle (and/or vice versa): http://www.jonmsterling.com/posts/2015-04-24-note-on-diaconescus-theorem.html

Martin-Löf previously showed this in his paper “100 years of Zermelo’s axiom of choice: what was the problem with it?”, but that paper falsely pinned the ‘blame’, so to speak, on extensionality, whereas really it sat somewhere else entirely.

Technically, of course, this does not make the quoted sentence /wrong/ in any way, but the sentence does seem to propagate the myth (now shown as such by Sterling) that Constructivism = Absence of AC; whereas really, and as you get to later anyway, Constructivism = Absence of LEM (or, as Sterling calls it, PEM).

15 November, 2017 at 1:46 pm

An inverse theorem for Kemperman’s inequality | What's new

[…] the language of “cheap” nonstandard analysis (aka asymptotic analysis), as discussed in this previous blog post; one could also have used the full-strength version of nonstandard analysis, but this does not seem […]

9 December, 2018 at 2:32 pm

254A, Supplemental: Weak solutions from the perspective of nonstandard analysis (optional) | What's new

[…] I discussed in a previous post, the manipulation of sequences and their limits is analogous to a “cheap” version of […]

12 February, 2019 at 3:03 pm

Christopher

Instead of using a nonintuitionistic logic, could one instead use a standard theory that is incomplete? So instead of “some things are neither true nor false”, you would say “some things are neither provable nor disprovable”?

26 November, 2020 at 10:20 am

Michelangelo

A little bibliographic note: cheap non-standard analysis was invented in 1910 by Giuseppe Peano.

Giuseppe Peano, “Sugli ordini degli infiniti”, Atti della Reale Accademia dei Lincei: Rendiconti, 1910, s. 5, v. 19, pp. 778-781.

The article is in Italian and is not well known even among Italians, so it is not surprising that it is not known in the English-speaking world.

The key point is formula (3), page 780.

26 November, 2020 at 11:52 pm

Mikhail Katz

Some of the comments in this thread involve an implicit assumption that working with Robinson-style infinitesimals in the context of a number system which is actually a field, necessarily requires a nonprincipal ultrafilter. Such an assumption turns out to be incorrect. Karel Hrbacek and I developed an axiom system SPOT, conservative over ZF (and therefore requiring neither ultrafilters nor the axiom of choice), which enables infinitesimal calculus a la Robinson. There is also a stronger system SCOT, conservative over ZF+ADC (dependent choice), which enables infinitesimal analysis and much else, including an infinitesimal treatment of the Lebesgue measure a la Loeb. See https://u.math.biu.ac.il/~katzmik/spot.html

8 December, 2020 at 7:07 pm

Sendov’s conjecture for sufficiently high degree polynomials | What's new

[…] There are many ways to effect such a strategy; we will use a formalism that I call “cheap nonstandard analysis” and which is common in the PDE literature, in which one repeatedly passes to subsequences as […]

20 December, 2020 at 8:33 pm

adityaguharoy

Reblogged this on Aditya Guha Roy's weblog and commented:

Here is a blog post on non-standard analysis which I just read today. For more details on non-standard analysis one can check Prof. Terence Tao’s book “Compactness and Contradiction”.

22 December, 2020 at 2:33 pm

Anonymous

… for instance, a nonstandard real $x = x_n$ is a real number that depends on the asymptotic parameter $n$; …

How should one understand the identity $x = x_n$ here?

Is the object “$x$” understood as a sequence $(x_n)_{n \in {\bf N}}$ of real numbers?

22 December, 2020 at 7:14 pm

Terence Tao

The notation $x = x_n$ indicates that the dependence on $n$ will often be suppressed, so that $x_n$ will be abbreviated as $x$ when it is not necessary to make the dependence on $n$ explicit.

As stated in the text (see in particular Example 1), it is slightly better to think about $x$ as a quantity that depends on $n$ as an ambient parameter, rather than as a sequence over all possible values of $n$. This is somewhat analogous (though not identical) to how a function $f: X \to Y$ is usually best thought of conceptually as a transformation that maps arbitrary elements in $X$ to various points in $Y$, rather than as a tuple $(f(x))_{x \in X}$ or a graph $\{(x, f(x)): x \in X\}$, even if from a purely set-theoretic point of view these models are a faithful representation of the transformation concept.
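To make the “ambient parameter” viewpoint a little more concrete, here is a minimal Python sketch. It is not taken from the post: the class name `CheapReal`, the helper `looks_smaller_than`, and the use of exact `Fraction` arithmetic are hypothetical choices for illustration. A quantity $x = x_n$ is stored as a rule $n \mapsto x_n$, arithmetic is carried out pointwise in $n$ so that formulas never mention $n$, and `.at(n)` restores the suppressed dependence. No finite computation can certify that a quantity is infinitesimal, so the helper only inspects a finite window of values of $n$.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Callable


@dataclass(frozen=True)
class CheapReal:
    """Hypothetical model of a cheap nonstandard real x = x_n.

    The quantity is stored as a rule n -> x_n, where n is the ambient
    asymptotic parameter; arithmetic is done pointwise in n, so the
    dependence on n never has to be written out in expressions.
    """

    rule: Callable[[int], Fraction]

    @staticmethod
    def standard(c) -> "CheapReal":
        # A standard number is one that does not depend on n.
        value = Fraction(c)
        return CheapReal(lambda n: value)

    def __add__(self, other: "CheapReal") -> "CheapReal":
        return CheapReal(lambda n: self.rule(n) + other.rule(n))

    def __sub__(self, other: "CheapReal") -> "CheapReal":
        return CheapReal(lambda n: self.rule(n) - other.rule(n))

    def __mul__(self, other: "CheapReal") -> "CheapReal":
        return CheapReal(lambda n: self.rule(n) * other.rule(n))

    def at(self, n: int) -> Fraction:
        # Make the suppressed dependence on n explicit again.
        return self.rule(n)


def looks_smaller_than(x: CheapReal, eps, start: int, stop: int) -> bool:
    """Finite-window heuristic only: checks |x_n| < eps for start <= n < stop.

    Being infinitesimal means |x_n| < eps for all sufficiently large n and
    every standard eps > 0, which no finite computation can confirm.
    """
    bound = Fraction(eps)
    return all(abs(x.at(n)) < bound for n in range(start, stop))


if __name__ == "__main__":
    epsilon = CheapReal(lambda n: Fraction(1, n))  # the quantity 1/n
    one = CheapReal.standard(1)
    y = (one + epsilon) * (one - epsilon)          # 1 - 1/n^2, written without n
    print(y.at(10))                                # 99/100
```

Storing a rule rather than a finite list is deliberate: expressions such as `(one + epsilon) * (one - epsilon)` are written once, without any index, and only the final `.at(n)` call refers to the parameter.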

17 March, 2022 at 8:28 pm

clique principle - Interpreting Conway's comment about utilizing the surreals for non-standard evaluation Answer - Lord Web

[…] with a bunch of definable properties and depart the relaxation launch; Terry Tao has written some very attention-grabbing stuff in that path which he calls “cheap nonstandard analysis.” So if Conway’s […]

14 August, 2023 at 7:43 pm

What Are Numbers? | Physics Forums

[…] https://terrytao.wordpress.com/2012/04/02/a-cheap-version-of-nonstandard-analysis/ […]

17 November, 2023 at 4:01 pm

What Are Numbers? -

[…] https://terrytao.wordpress.com/2012/04/02/a-cheap-version-of-nonstandard-analysis/ […]