
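This is a spinoff from the previous post. In that post, we remarked that whenever one receives a new piece of information $E$, the prior odds $\mathbf{P}(H_1)/\mathbf{P}(H_0)$ between an alternative hypothesis $H_1$ and a null hypothesis $H_0$ are updated to the posterior odds $\mathbf{P}(H_1|E)/\mathbf{P}(H_0|E)$, which can be computed via Bayes’ theorem by the formula

$$\frac{\mathbf{P}(H_1|E)}{\mathbf{P}(H_0|E)} = \frac{\mathbf{P}(H_1)}{\mathbf{P}(H_0)} \times \frac{\mathbf{P}(E|H_1)}{\mathbf{P}(E|H_0)}.$$

This computation can be organized as a worksheet. A PDF version of the worksheet and instructions can be found here. One can fill in this worksheet in the following order: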

- In Box 1, one enters in the precise statement of the null hypothesis $H_0$.

- In Box 2, one enters in the precise statement of the alternative hypothesis $H_1$. (This step is very important! As discussed in the previous post, Bayesian calculations can become extremely inaccurate if the alternative hypothesis is vague.)

- In Box 3, one enters in the prior probability $\mathbf{P}(H_0)$ (or the best estimate thereof) of the null hypothesis $H_0$.

- In Box 4, one enters in the prior probability $\mathbf{P}(H_1)$ (or the best estimate thereof) of the alternative hypothesis $H_1$. If only two hypotheses are being considered, we of course have $\mathbf{P}(H_0) + \mathbf{P}(H_1) = 1$.

- In Box 5, one enters in the ratio $\mathbf{P}(H_1)/\mathbf{P}(H_0)$ between Box 4 and Box 3.

- In Box 6, one enters in the precise new information $E$ that one has acquired since the prior state. (As discussed in the previous post, it is important that all relevant information $E$, both supporting and invalidating the alternative hypothesis, is reported accurately. If one cannot be certain that key information has not been withheld from you, then Bayesian calculations become highly unreliable.)

- In Box 7, one enters in the likelihood $\mathbf{P}(E|H_0)$ (or the best estimate thereof) of the new information $E$ under the null hypothesis $H_0$.

- In Box 8, one enters in the likelihood $\mathbf{P}(E|H_1)$ (or the best estimate thereof) of the new information $E$ under the alternative hypothesis $H_1$. (This can be difficult to compute, particularly if $H_1$ is not specified precisely.)

- In Box 9, one enters in the ratio $\mathbf{P}(E|H_1)/\mathbf{P}(E|H_0)$ between Box 8 and Box 7.

- In Box 10, one enters in the product of Box 5 and Box 9; this is the posterior odds $\mathbf{P}(H_1|E)/\mathbf{P}(H_0|E)$.

- (Assuming there are no other hypotheses than $H_0$ and $H_1$) In Box 11, enter in $1$ divided by ($1$ plus Box 10); this is the posterior probability $\mathbf{P}(H_0|E)$.

- (Assuming there are no other hypotheses than $H_0$ and $H_1$) In Box 12, enter in Box 10 divided by ($1$ plus Box 10); this is the posterior probability $\mathbf{P}(H_1|E)$. (Alternatively, one can enter in $1$ minus Box 11.)
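The numeric boxes translate directly into a few lines of arithmetic. The following Python sketch is only an illustration of the worksheet, not part of it: the class name `Worksheet` and its field names are hypothetical, the text-only Boxes 1, 2 and 6 are omitted, and Boxes 11 and 12 assume (as the worksheet does) that $H_0$ and $H_1$ are the only hypotheses.

```python
from dataclasses import dataclass
from fractions import Fraction
from numbers import Real


@dataclass
class Worksheet:
    """Hypothetical encoding of the numeric boxes of the worksheet."""
    prior_null: Real   # Box 3: P(H0)
    prior_alt: Real    # Box 4: P(H1)
    lik_null: Real     # Box 7: P(E|H0)
    lik_alt: Real      # Box 8: P(E|H1)

    @property
    def prior_odds(self):        # Box 5 = Box 4 / Box 3
        return self.prior_alt / self.prior_null

    @property
    def likelihood_ratio(self):  # Box 9 = Box 8 / Box 7
        return self.lik_alt / self.lik_null

    @property
    def posterior_odds(self):    # Box 10 = Box 5 * Box 9
        return self.prior_odds * self.likelihood_ratio

    @property
    def posterior_null(self):    # Box 11 = 1 / (1 + Box 10); two hypotheses only
        return 1 / (1 + self.posterior_odds)

    @property
    def posterior_alt(self):     # Box 12 = Box 10 / (1 + Box 10); two hypotheses only
        return self.posterior_odds / (1 + self.posterior_odds)


# Hypothetical inputs, chosen only to make the arithmetic easy to follow.
ws = Worksheet(prior_null=Fraction(3, 4), prior_alt=Fraction(1, 4),
               lik_null=Fraction(1, 10), lik_alt=Fraction(9, 10))
print(ws.prior_odds, ws.likelihood_ratio, ws.posterior_odds)  # 1/3 9 3
print(ws.posterior_null, ws.posterior_alt)                    # 1/4 3/4
```

Using `Fraction` keeps every box exact; plain floats work equally well if approximate values are acceptable.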

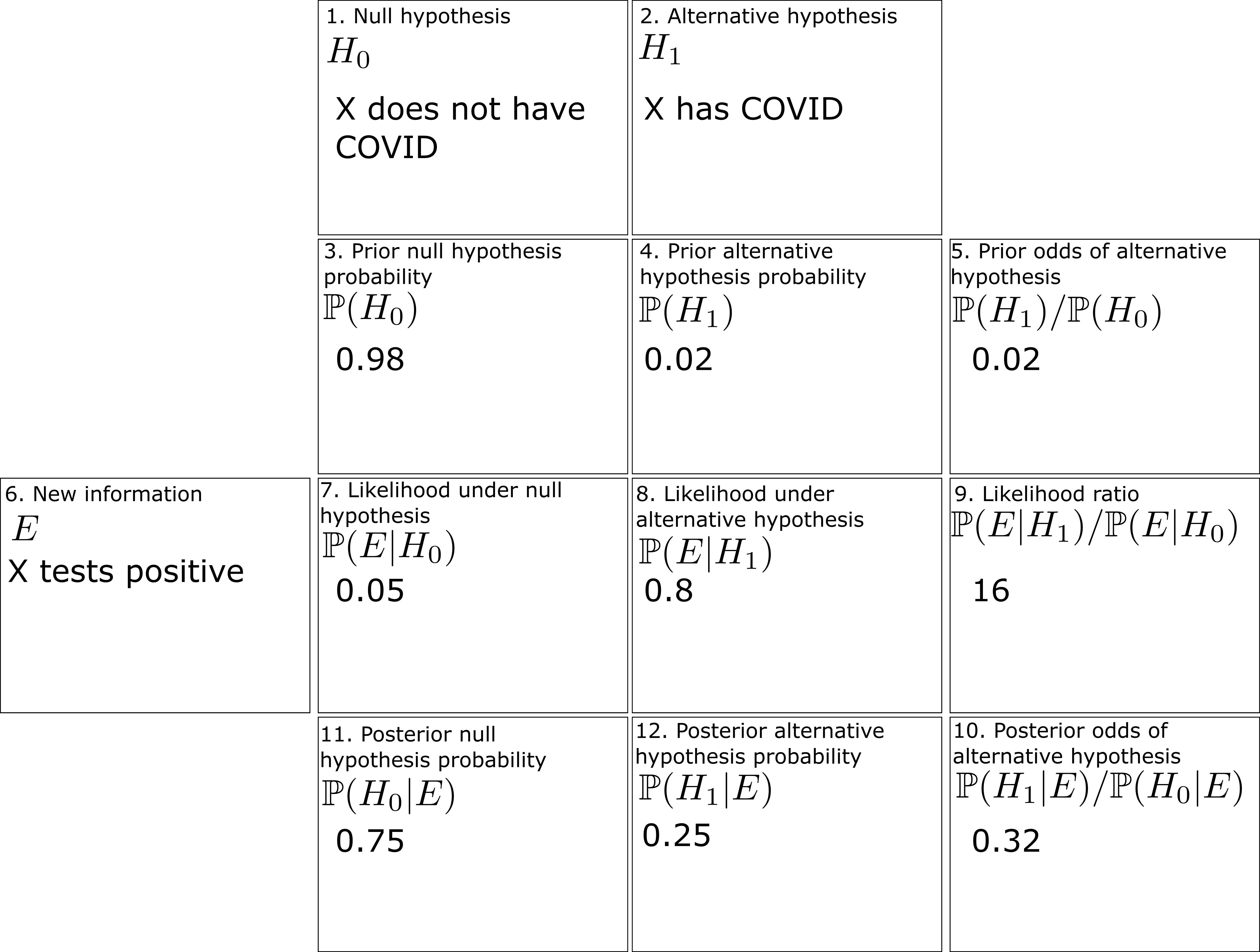

To illustrate this procedure, let us consider a standard Bayesian update problem. Suppose that at a given point in time, of the population is infected with COVID-19. In response to this, a company mandates COVID-19 testing of its workforce, using a cheap COVID-19 test. This test has a

chance of a false negative (testing negative when one has COVID) and a

chance of a false positive (testing positive when one does not have COVID). An employee takes the mandatory test, which turns out to be positive. What is the probability that this employee actually has COVID?

We can fill out the entries in the worksheet one at a time:

- Box 1: The null hypothesis $H_0$ is that the employee does not have COVID.

- Box 2: The alternative hypothesis $H_1$ is that the employee does have COVID.

- Box 3: In the absence of any better information, the prior probability $\mathbf{P}(H_0)$ of the null hypothesis is

, or

.

- Box 4: Similarly, the prior probability $\mathbf{P}(H_1)$ of the alternative hypothesis is

, or

.

- Box 5: The prior odds $\mathbf{P}(H_1)/\mathbf{P}(H_0)$ are

.

- Box 6: The new information $E$ is that the employee has tested positive for COVID.

- Box 7: The likelihood $\mathbf{P}(E|H_0)$ of $E$ under the null hypothesis is

, or

(the false positive rate).

- Box 8: The likelihood $\mathbf{P}(E|H_1)$ of $E$ under the alternative is

, or

(one minus the false negative rate).

- Box 9: The likelihood ratio $\mathbf{P}(E|H_1)/\mathbf{P}(E|H_0)$ is

.

- Box 10: The product of Box 5 and Box 9 is approximately

.

- Box 11: The posterior probability $\mathbf{P}(H_0|E)$ is approximately

.

- Box 12: The posterior probability $\mathbf{P}(H_1|E)$ is approximately

.

The filled worksheet looks like this:

Perhaps surprisingly, despite the positive COVID test, the employee only has a

chance of actually having COVID! This is due to the relatively large false positive rate of this cheap test, and is an illustration of the base rate fallacy in statistics.

We remark that if we switch the roles of the null hypothesis and alternative hypothesis, then some of the odds in the worksheet change, but the ultimate conclusions remain unchanged:

So the question of which hypothesis to designate as the null hypothesis and which one to designate as the alternative hypothesis is largely a matter of convention.

Now let us take a superficially similar situation in which a mother observes her daughter exhibiting COVID-like symptoms, to the point where she estimates the probability of her daughter having COVID at . She then administers the same cheap COVID-19 test as before, which returns positive. What is the posterior probability of her daughter having COVID?

One can fill out the worksheet much as before, but now with the prior probability of the alternative hypothesis raised from to

(and the prior probability of the null hypothesis dropping from

to

). One now gets that the probability that the daughter has COVID has increased all the way to

:

Thus we see that prior probabilities can make a significant impact on the posterior probabilities.
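The effect of the prior can be seen by running one set of test characteristics through the worksheet with two different priors. In this Python sketch the function name is hypothetical, and the figures used ($20\%$ false negatives, $5\%$ false positives, a $1\%$ prior for a randomly screened employee and a $50\%$ prior for a symptomatic child) are illustrative assumptions, not the figures of the worked examples above.

```python
from fractions import Fraction


def posterior_given_positive(prior_infected, false_negative, false_positive):
    """P(infected | positive test), treating 'infected' (H1) and
    'not infected' (H0) as the only two hypotheses."""
    prior_odds = prior_infected / (1 - prior_infected)         # Box 5
    likelihood_ratio = (1 - false_negative) / false_positive    # Box 9
    posterior_odds = prior_odds * likelihood_ratio              # Box 10
    return posterior_odds / (1 + posterior_odds)                # Box 12


# Illustrative (assumed) test characteristics.
FN, FP = Fraction(1, 5), Fraction(1, 20)

screening = posterior_given_positive(Fraction(1, 100), FN, FP)   # low prior
symptomatic = posterior_given_positive(Fraction(1, 2), FN, FP)   # high prior
print(f"{float(screening):.3f}")    # 0.139
print(f"{float(symptomatic):.3f}")  # 0.941
```

The likelihood ratio (Box 9) is the same in both calls; only the prior odds differ, and that alone moves the posterior from well under one half to well over it.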

Now we use the worksheet to analyze an infamous probability puzzle, the Monty Hall problem. Let us use the formulation given in that Wikipedia page:

Problem 1 Suppose you’re on a game show, and you’re given the choice of three doors: Behind one door is a car; behind the others, goats. You pick a door, say No. 1, and the host, who knows what’s behind the doors, opens another door, say No. 3, which has a goat. He then says to you, “Do you want to pick door No. 2?” Is it to your advantage to switch your choice?

For this problem, the precise formulation of the null hypothesis and the alternative hypothesis becomes rather important. Suppose we take the following two hypotheses:

- Null hypothesis $H_0$: The car is behind door number 1, and no matter what door you pick, the host will randomly reveal another door that contains a goat.

- Alternative hypothesis $H_1$: The car is behind door number 2 or 3, and no matter what door you pick, the host will randomly reveal another door that contains a goat.

However, consider the following different set of hypotheses:

- Null hypothesis $H_0$: The car is behind door number 1, and if you pick the door with the car, the host will reveal another door to entice you to switch. Otherwise, the host will not reveal a door.

- Alternative hypothesis $H_1$: The car is behind door number 2 or 3, and if you pick the door with the car, the host will reveal another door to entice you to switch. Otherwise, the host will not reveal a door.

Here we still have $\mathbf{P}(H_0) = 1/3$ and $\mathbf{P}(H_1) = 2/3$, but while $\mathbf{P}(E|H_0)$ remains the same as before, $\mathbf{P}(E|H_1)$ has dropped to zero (since if the car is not behind door 1, the host will not reveal a door). So now $\mathbf{P}(H_0|E)$ has increased all the way to $1$, and it is not advantageous to switch! This dramatically illustrates the importance of specifying the hypotheses precisely. The worksheet is now filled out as follows:
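The contrast between the two pairs of hypotheses can also be checked by exact enumeration. The Python sketch below works under stated assumptions: the contestant picks door 1, the car is placed uniformly at random, the new information is taken to be "the host opens door 3", and whenever a host reveals a goat door he chooses uniformly among the eligible doors. The host-policy function names are hypothetical.

```python
from fractions import Fraction

DOORS = (1, 2, 3)
PICK = 1  # the contestant's initial choice


def random_goat_host(car):
    """First pair of hypotheses: always open a goat door other than PICK,
    uniformly at random among the eligible doors."""
    options = [d for d in DOORS if d not in (PICK, car)]
    return {d: Fraction(1, len(options)) for d in options}


def enticing_host(car):
    """Second pair: open a goat door only if PICK hides the car;
    otherwise open nothing (represented by None)."""
    if car == PICK:
        return random_goat_host(car)
    return {None: Fraction(1)}


def posterior_car_behind_pick(host, opened=3):
    """P(car behind PICK | host opened `opened`), with the car placed uniformly."""
    joint = {car: Fraction(1, 3) * host(car).get(opened, Fraction(0)) for car in DOORS}
    return joint[PICK] / sum(joint.values())


print(posterior_car_behind_pick(random_goat_host))  # 1/3, so switching wins 2/3 of the time
print(posterior_car_behind_pick(enticing_host))     # 1, so switching always loses
```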

Finally, we consider another famous probability puzzle, the Sleeping Beauty problem. Again we quote the problem as formulated on the Wikipedia page:

Problem 2 Sleeping Beauty volunteers to undergo the following experiment and is told all of the following details: On Sunday she will be put to sleep. Once or twice, during the experiment, Sleeping Beauty will be awakened, interviewed, and put back to sleep with an amnesia-inducing drug that makes her forget that awakening. A fair coin will be tossed to determine which experimental procedure to undertake:

- If the coin comes up heads, Sleeping Beauty will be awakened and interviewed on Monday only.

- If the coin comes up tails, she will be awakened and interviewed on Monday and Tuesday.

- In either case, she will be awakened on Wednesday without interview and the experiment ends.

Any time Sleeping Beauty is awakened and interviewed she will not be able to tell which day it is or whether she has been awakened before. During the interview Sleeping Beauty is asked: “What is your credence now for the proposition that the coin landed heads?”

Here the situation can be confusing because there are key portions of this experiment in which the observer is unconscious, but nevertheless Bayesian probability continues to operate regardless of whether the observer is conscious. To make this issue more precise, let us assume that the awakenings mentioned in the problem always occur at 8am, so in particular at 7am, Sleeping Beauty will always be unconscious.

Here, the null and alternative hypotheses are easy to state precisely:

- Null hypothesis $H_0$: The coin landed tails.

- Alternative hypothesis $H_1$: The coin landed heads.

The subtle thing here is to work out what the correct prior state is (in most other applications of Bayesian probability, this state is obvious from the problem). It turns out that the most reasonable choice of prior state is “unconscious at 7am, on either Monday or Tuesday, with an equal chance of each”. (Note that whatever the outcome of the coin flip is, Sleeping Beauty will be unconscious at 7am Monday and unconscious again at 7am Tuesday, so it makes sense to give each of these two states an equal probability.) The new information is then

- New information $E$: One hour after the prior state, Sleeping Beauty is awakened.

With this formulation, we see that $\mathbf{P}(H_0) = \mathbf{P}(H_1) = 1/2$, $\mathbf{P}(E|H_0) = 1$, and $\mathbf{P}(E|H_1) = 1/2$, so on working through the worksheet one eventually arrives at $\mathbf{P}(H_1|E) = 1/3$, so that Sleeping Beauty should only assign a probability of $1/3$ to the event that the coin landed heads.

There are arguments advanced in the literature for the position that $\mathbf{P}(H_1|E)$ should instead be equal to $1/2$, but I do not see a way to interpret them in this Bayesian framework without either substantially altering the notion of the prior state or failing to present the new information $E$ properly.
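The computation above can be reproduced by listing the four equally likely prior states (7am on Monday or Tuesday, coin heads or tails) and conditioning on being awakened at 8am. This Python sketch simply hard-codes the protocol of Problem 2; the names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Prior state: 7am on Monday or Tuesday (equally likely), with a fair coin
# independent of the day, so each of the four states has weight 1/4.
PRIOR = {state: Fraction(1, 4) for state in product(("heads", "tails"), ("Mon", "Tue"))}


def awakened(coin, day):
    """New information E: awakened at 8am on this day.
    Heads: Monday only.  Tails: Monday and Tuesday."""
    return day == "Mon" or coin == "tails"


def credence_heads():
    """P(heads | E) by direct conditioning on the prior states."""
    p_e = sum(p for state, p in PRIOR.items() if awakened(*state))
    p_heads_and_e = sum(p for (coin, day), p in PRIOR.items()
                        if coin == "heads" and awakened(coin, day))
    return p_heads_and_e / p_e


print(credence_heads())  # 1/3
```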

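If one has multiple pieces of information that one wishes to use to update one’s priors, one can do so by filling out one copy of the worksheet for each new piece of information, or by using a multi-row version of the worksheet using such identities as

$$\frac{\mathbf{P}(H_1|E_1,E_2)}{\mathbf{P}(H_0|E_1,E_2)} = \frac{\mathbf{P}(H_1)}{\mathbf{P}(H_0)} \times \frac{\mathbf{P}(E_1|H_1)}{\mathbf{P}(E_1|H_0)} \times \frac{\mathbf{P}(E_2|H_1,E_1)}{\mathbf{P}(E_2|H_0,E_1)}.$$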
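By the chain rule, the posterior odds after two pieces of information $E_1$, $E_2$ equal the prior odds, times the likelihood ratio of $E_1$, times the likelihood ratio of $E_2$ conditioned on $E_1$. The Python sketch below sanity-checks this on a small joint distribution whose weights are invented purely for illustration; $E_1$ and $E_2$ are deliberately correlated, which is why the second ratio must be conditioned on $E_1$.

```python
from fractions import Fraction

# Invented joint weights for (hypothesis, E1 occurred, E2 occurred); total 24.
WEIGHTS = {
    (0, True, True): 1, (0, True, False): 2, (0, False, True): 3, (0, False, False): 10,
    (1, True, True): 4, (1, True, False): 1, (1, False, True): 1, (1, False, False): 2,
}
TOTAL = sum(WEIGHTS.values())


def p(h=None, e1=None, e2=None):
    """Joint probability; an argument left as None is unconstrained."""
    hits = sum(w for (hh, a, b), w in WEIGHTS.items()
               if (h is None or hh == h)
               and (e1 is None or a == e1)
               and (e2 is None or b == e2))
    return Fraction(hits, TOTAL)


# Posterior odds computed directly from the joint distribution.
direct = p(1, True, True) / p(0, True, True)

# The same odds as prior odds times two successive likelihood ratios.
prior_odds = p(1) / p(0)
lr_e1 = (p(1, True) / p(1)) / (p(0, True) / p(0))
lr_e2_given_e1 = (p(1, True, True) / p(1, True)) / (p(0, True, True) / p(0, True))
chained = prior_odds * lr_e1 * lr_e2_given_e1

print(direct, chained)  # 4 4
```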

An unusual lottery result made the news recently: on October 1, 2022, the PCSO Grand Lotto in the Philippines, which draws six numbers from to

at random, managed to draw the numbers

(though the balls were actually drawn in the order

). In other words, they drew exactly six multiples of nine from $9$ to $54$. In addition, a total of

tickets were bought with this winning combination, whose owners then had to split the

million peso jackpot (about

million USD) among themselves. This raised enough suspicion that there were calls for an inquiry into the Philippine lottery system, including from the minority leader of the Senate.

Whenever an event like this happens, journalists often contact mathematicians to ask the question: “What are the odds of this happening?”, and in fact I myself received one such inquiry this time around. This is a number that is not too difficult to compute – in this case, the probability of the lottery producing the six numbers in some order turns out to be

in

– and such a number is often dutifully provided to such journalists, who in turn report it as some sort of quantitative demonstration of how remarkable the event was.

But on the previous draw of the same lottery, on September 28, 2022, the unremarkable sequence of numbers was drawn (again in a different order), and no tickets ended up claiming the jackpot. The probability of the lottery producing the six numbers

is also

in

– just as likely or as unlikely as the October 1 numbers

. Indeed, the whole point of drawing the numbers randomly is to make each of the

possible outcomes (whether they be “unusual” or “unremarkable”) equally likely. So why is it that the October 1 lottery attracted so much attention, but the September 28 lottery did not?
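The count of equally likely outcomes is a binomial coefficient. The range of the Grand Lotto is not preserved in the text above, so this Python sketch *assumes* six numbers drawn from 1 to 55; under that assumption it also confirms that exactly six multiples of nine lie in range.

```python
from fractions import Fraction
from math import comb

N_BALLS = 55  # assumption: the draw is six numbers from 1..55
PICKS = 6

outcomes = comb(N_BALLS, PICKS)             # unordered six-number combinations
p_any_specific_set = Fraction(1, outcomes)  # identical for "unusual" and "unremarkable" sets
multiples_of_nine = [k for k in range(1, N_BALLS + 1) if k % 9 == 0]

print(f"{outcomes:,}")     # 28,989,675
print(multiples_of_nine)   # [9, 18, 27, 36, 45, 54]
```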

Part of the explanation surely lies in the unusually large number of lottery winners on October 1, but I will set that aspect of the story aside until the end of this post. The more general points that I want to make with these sorts of situations are:

- The question “what are the odds of this happening” is often easy to answer mathematically, but it is not the correct question to ask.

- The question “what is the probability that an alternative hypothesis is the truth” is (one of) the correct questions to ask, but is very difficult to answer (it involves both mathematical and non-mathematical considerations).

- The answer to the first question is one of the quantities needed to calculate the answer to the second, but it is far from the only such quantity. Most of the other quantities involved cannot be calculated exactly.

- However, by making some educated guesses, one can still sometimes get a very rough gauge of which events are “more surprising” than others, in that they would lead to relatively higher answers to the second question.

To explain these points it is convenient to adopt the framework of Bayesian probability. In this framework, one imagines that there are competing hypotheses to explain the world, and that one assigns a probability to each such hypothesis representing one’s belief in the truth of that hypothesis. For simplicity, let us assume that there are just two competing hypotheses to be entertained: the null hypothesis $H_0$, and an alternative hypothesis $H_1$. For instance, in our lottery example, the two hypotheses might be:

- Null hypothesis $H_0$: The lottery is run in a completely fair and random fashion.

- Alternative hypothesis $H_1$: The lottery is rigged by some corrupt officials for their personal gain.

At any given point in time, a person would have a probability $\mathbf{P}(H_0)$ assigned to the null hypothesis, and a probability $\mathbf{P}(H_1)$ assigned to the alternative hypothesis; in this simplified model where there are only two hypotheses under consideration, these probabilities must add to one, but of course if there were additional hypotheses beyond these two then this would no longer be the case.

Bayesian probability does not provide a rule for calculating the initial (or prior) probabilities $\mathbf{P}(H_0)$, $\mathbf{P}(H_1)$ that one starts with; these may depend on the subjective experiences and biases of the person considering the hypothesis. For instance, one person might have quite a bit of prior faith in the lottery system, and assign the probabilities $\mathbf{P}(H_0) = 0.99$ and $\mathbf{P}(H_1) = 0.01$. Another person might have quite a bit of prior cynicism, and perhaps assign $\mathbf{P}(H_0) = 0.5$ and $\mathbf{P}(H_1) = 0.5$. One cannot use purely mathematical arguments to determine which of these two people is “correct” (or whether they are both “wrong”); it depends on subjective factors.

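What Bayesian probability does do, however, is provide a rule to update these probabilities $\mathbf{P}(H_0)$, $\mathbf{P}(H_1)$ in view of new information $E$ to provide posterior probabilities $\mathbf{P}(H_0|E)$, $\mathbf{P}(H_1|E)$. In our example, the new information $E$ would be the fact that the October 1 lottery numbers were $9, 18, 27, 36, 45, 54$ (in some order). The update is given by the famous Bayes theorem

$$\frac{\mathbf{P}(H_1|E)}{\mathbf{P}(H_0|E)} = \frac{\mathbf{P}(H_1)}{\mathbf{P}(H_0)} \times \frac{\mathbf{P}(E|H_1)}{\mathbf{P}(E|H_0)},$$

so the posterior odds of the alternative hypothesis are determined by three quantities:

- The prior odds $\mathbf{P}(H_1)/\mathbf{P}(H_0)$ of the alternative hypothesis;

- The probability $\mathbf{P}(E|H_0)$ that the event $E$ occurs under the null hypothesis $H_0$; and

- The probability $\mathbf{P}(E|H_1)$ that the event $E$ occurs under the alternative hypothesis $H_1$.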

As previously discussed, the prior odds of the alternative hypothesis are subjective and vary from person to person; in the example earlier, the person with substantial faith in the lottery may only give prior odds of $1/99$ (99 to 1 against) of the alternative hypothesis, whereas the cynic might give odds of $1$ (even odds). The probability $\mathbf{P}(E|H_0)$ is the quantity that can often be calculated by straightforward mathematics; as discussed before, in this specific example we have

For instance, suppose we replace the alternative hypothesis by the following very specific (and somewhat bizarre) hypothesis:

- Alternative hypothesis $H_1'$: The lottery is rigged by a cult that worships the multiples of $9$, and views October 1 as their holiest day. On this day, they will manipulate the lottery to only select those balls that are multiples of $9$.

Under this alternative hypothesis $H_1'$, we have $\mathbf{P}(E|H_1') = 1$. So, when $E$ happens, the odds of this alternative hypothesis $H_1'$ will increase by the dramatic factor of

. So, for instance, someone who already was entertaining odds of

of this hypothesis $H_1'$ would now have these odds multiply dramatically to

, so that the probability of $H_1'$ would have jumped from a mere

to a staggering

. This is about as strong a shift in belief as one could imagine. However, this hypothesis $H_1'$ is so specific and bizarre that one’s prior odds of this hypothesis would be nowhere near as large as

(unless substantial prior evidence of this cult and its hold on the lottery system existed, of course). A more realistic value for the prior odds of $H_1'$ would be something like

– which is so minuscule that even multiplying it by a factor such as

barely moves the needle.
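To see numerically why a huge likelihood ratio can still fail to move a tiny prior, here is a Python sketch. It *assumes* the draw is six numbers from 1 to 55, so that $\mathbf{P}(E|H_0) = 1/\binom{55}{6}$, takes $\mathbf{P}(E|H_1') = 1$ as in the text, treats $H_0$ and $H_1'$ as the only hypotheses, and tries two hypothetical prior odds. The function name is illustrative.

```python
from fractions import Fraction
from math import comb

# Assumption: six numbers drawn from 1..55, so P(E | H0) = 1 / C(55, 6).
LIK_NULL = Fraction(1, comb(55, 6))
LIK_CULT = Fraction(1)  # P(E | H1') = 1 under the cult hypothesis


def posterior_cult(prior_odds):
    """P(H1' | E), treating H0 and H1' as the only two hypotheses."""
    odds = prior_odds * (LIK_CULT / LIK_NULL)
    return odds / (1 + odds)


# Hypothetical prior odds: a generous 1 in 1000, and a skeptical 1 in 10**30.
for prior in (Fraction(1, 1000), Fraction(1, 10**30)):
    print(f"prior odds {float(prior):.0e} -> posterior {float(posterior_cult(prior)):.3g}")
```

With the generous prior the posterior is essentially certainty; with the skeptical one it stays astronomically small, even though the multiplying factor is identical.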

Remark 1 The contrast between the broad alternative hypothesis $H_1$ and the cult hypothesis $H_1'$ illustrates a common demagogical rhetorical technique when an advocate is trying to convince an audience of an alternative hypothesis, namely to use suggestive language (“I’m just asking questions here”) rather than precise statements in order to leave the alternative hypothesis deliberately vague. In particular, the advocate may take advantage of the freedom to use a broad formulation of the hypothesis (such as $H_1$) in order to maximize the audience’s prior odds of the hypothesis, simultaneously with a very specific formulation of the hypothesis (such as $H_1'$) in order to maximize the probability of the actual event $E$ occurring under this hypothesis. (A related technique is to be deliberately vague about the hypothesized competency of some suspicious actor, so that this actor could be portrayed as being extraordinarily competent when convenient to do so, while simultaneously being portrayed as extraordinarily incompetent when that instead is the more useful hypothesis.) This can lead to wildly inaccurate Bayesian updates of this vague alternative hypothesis, and so precise formulation of such hypotheses is important if one is to approach a topic in anything remotely resembling a scientific manner. [EDIT: as pointed out to me by a reader, this technique is a Bayesian analogue of the motte and bailey fallacy.]

At the opposite extreme, consider instead the following hypothesis:

- Alternative hypothesis $H_1''$: The lottery is rigged by some corrupt officials, who on October 1 decide to randomly determine the winning numbers in advance, share these numbers with their collaborators, and then manipulate the lottery to choose those numbers that they selected.

If these corrupt officials are indeed choosing their predetermined winning numbers randomly, then the probability $\mathbf{P}(E|H_1'')$ would in fact be exactly the same as $\mathbf{P}(E|H_0)$, and in this case the seemingly unusual event $E$ would in fact have no effect on the odds of the alternative hypothesis, because it was just as unlikely for the alternative hypothesis to generate this multiples-of-nine pattern as for the null hypothesis to. In fact, one would imagine that these corrupt officials would avoid “suspicious” numbers, such as the multiples of $9$, and only choose numbers that look random, in which case $\mathbf{P}(E|H_1'')$ would in fact be less than $\mathbf{P}(E|H_0)$ and so the event $E$ would actually lower the odds of the alternative hypothesis in this case. (In fact, one can sometimes use this tendency of fraudsters to not generate truly random data as a statistical tool to detect such fraud; violations of Benford’s law for instance can be used in this fashion, though only in situations where the null hypothesis is expected to obey Benford’s law, as discussed in this previous blog post.)

Now let us consider a third alternative hypothesis:

- Alternative hypothesis $H_1'''$: On October 1, the lottery machine developed a fault and now only selects numbers that exhibit unusual patterns.

Setting aside the question of precisely what faulty mechanism could induce this sort of effect, it is not clear at all how to compute $\mathbf{P}(E|H_1''')$ in this case. Using the principle of indifference as a crude rule of thumb, one might expect

Remark 2 This example demonstrates another demagogical rhetorical technique that one sometimes sees (particularly in political or other emotionally charged contexts), which is to cherry-pick the information presented to an audience by informing them of events $E$ which have a relatively high probability of occurring under the advocate’s alternative hypothesis, while withholding information about other relevant events $F$ that have a relatively low probability of occurring under that hypothesis. When confronted with such new information $F$, a common defense of a demagogue is to modify the alternative hypothesis $H_1$ to a more specific hypothesis that can “explain” this information $F$ (“Oh, clearly we heard about $F$ because the conspiracy in fact extends to the additional organizations that reported $F$”), taking advantage of the vagueness discussed in Remark 1.

Let us consider a superficially similar hypothesis:

- Alternative hypothesis $H_1''''$: On October 1, a divine being decided to send a sign to humanity by placing an unusual pattern in a lottery.

Here we (literally) stay agnostic on the prior odds of this hypothesis, and do not address the theological question of why a divine being should choose to use the medium of a lottery to send their signs. At first glance, the probability $\mathbf{P}(E|H_1'''')$ here should be similar to the probability $\mathbf{P}(E|H_1''')$ for the faulty-machine hypothesis $H_1'''$, and so perhaps one could use this event $E$ to improve the odds of the existence of a divine being by a factor of a thousand or so. But note carefully that the hypothesis $H_1''''$ did not specify which lottery the divine being chose to use. The PCSO Grand Lotto is just one of a dozen lotteries run by the Philippine Charity Sweepstakes Office (PCSO), and of course there are over a hundred other countries and thousands of states within these countries, each of which often run their own lotteries. Taking into account these thousands or tens of thousands of additional lotteries to choose from, the probability $\mathbf{P}(E|H_1'''')$ now drops by several orders of magnitude, and is now basically comparable to the probability $\mathbf{P}(E|H_0)$ coming from the null hypothesis. As such one does not expect the event $E$ to have a significant impact on the odds of the hypothesis $H_1''''$, despite the small-looking size of the probability $\mathbf{P}(E|H_0)$.

In summary, we have failed to locate any alternative hypothesis which

- Has some non-negligible prior odds of being true (and in particular is not excessively specific, as with the cult hypothesis $H_1'$);

- Has a significantly higher probability of producing the specific event $E$ than the null hypothesis; AND

- Does not struggle to also produce other events that have since been observed.

We now return to the fact that for this specific October 1 lottery, an unusually large number of tickets managed to select the winning numbers. Let us call this event $E'$. In view of this additional information, we should now consider the ratio of the probabilities $\mathbf{P}(E, E'|H_1)$ and $\mathbf{P}(E, E'|H_0)$, rather than the ratio of the probabilities $\mathbf{P}(E|H_1)$ and $\mathbf{P}(E|H_0)$. If we augment the null hypothesis to

- Null hypothesis $H_0'$: The lottery is run in a completely fair and random fashion, and the purchasers of lottery tickets also select their numbers in a completely random fashion.

then $\mathbf{P}(E, E'|H_0')$ is indeed of the “insanely improbable” category mentioned previously. I was not able to get official numbers on how many tickets are purchased per lottery, but let us say for sake of argument that it is 1 million (the conclusion will not be extremely sensitive to this choice). Then the expected number of tickets that would have the winning numbers would be

- Null hypothesis $H_0''$: The lottery is run in a completely fair and random fashion, but a significant fraction of the purchasers of lottery tickets only select “unusual” numbers.

then it can now become quite plausible that a highly unusual set of numbers such as $9, 18, 27, 36, 45, 54$ could be selected by as many as

purchasers of tickets; for instance, if

of the 1 million ticket holders chose to select their numbers according to some sort of pattern, then only

of those holders would have to pick

in order for the event

to hold (given

), and this is not extremely implausible. Given that this reasonable version of the null hypothesis already gives a plausible explanation for the large number of winning tickets, there does not seem to be a pressing need to locate an alternative hypothesis that gives some other explanation (cf. Occam’s razor). [UPDATE: Indeed, given the actual layout of the tickets of this lottery, the numbers $9, 18, 27, 36, 45, 54$ form a diagonal, and so all that is needed in order for the modified null hypothesis to explain this event is to postulate that a significant fraction of ticket purchasers decided to lay out their numbers in a simple geometric pattern, such as a row or diagonal.]
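The contrast between the two versions of the null hypothesis can be made roughly quantitative with a Poisson approximation for the number of winning tickets; that approximation is a modeling choice introduced here, not something in the text. The Python sketch uses the text's illustrative figure of 1 million tickets, *assumes* a 1-to-55 draw, and for the pattern-picking scenario uses invented figures (100,000 pattern-picking tickets, each landing on this particular diagonal with probability 1/1000). None of these are official data, and the function names are hypothetical.

```python
from math import comb, exp, lgamma, log

TICKETS = 1_000_000      # illustrative figure from the text
OUTCOMES = comb(55, 6)   # assumption: six numbers drawn from 1..55


def expected_winners(tickets, p_pick):
    """Mean number of tickets matching a fixed combination, if each ticket
    independently picks that combination with probability p_pick."""
    return tickets * p_pick


def poisson_tail(k, lam, terms=500):
    """P(X >= k) for X ~ Poisson(lam), truncated after `terms` terms."""
    return sum(exp(-lam + j * log(lam) - lgamma(j + 1)) for j in range(k, k + terms))


# Fully random purchasers: about 0.0345 expected winners per draw.
lam_random = expected_winners(TICKETS, 1 / OUTCOMES)
# Hypothetical pattern pickers: 100,000 tickets, each on this diagonal w.p. 1/1000.
lam_pattern = expected_winners(100_000, 1 / 1000)

print(lam_random, poisson_tail(10, lam_random))    # even 10 winners is absurdly unlikely
print(lam_pattern, poisson_tail(50, lam_pattern))  # 50 or more winners is nearly certain
```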

Remark 3 In view of the above discussion, one can propose a systematic way to evaluate (in as objective a fashion as possible) rhetorical claims in which an advocate is presenting evidence to support some alternative hypothesis:

- State the null hypothesis $H_0$ and the alternative hypothesis $H_1$ as precisely as possible. In particular, avoid conflating an extremely broad hypothesis (such as the hypothesis $H_1$ in our running example) with an extremely specific one (such as the cult hypothesis $H_1'$ in our example).

- With the hypotheses precisely stated, give an honest estimate of the prior odds of this formulation of the alternative hypothesis.

- Consider whether all the relevant information $E$ (or at least a representative sample thereof) has been presented to you before proceeding further. If not, consider gathering more information from further sources.

- Estimate how likely the information $E$ was to have occurred under the null hypothesis.

- Estimate how likely the information $E$ was to have occurred under the alternative hypothesis (using exactly the same wording of this hypothesis as you did in previous steps).

- If the second estimate is significantly larger than the first, then you have cause to update your prior odds of this hypothesis (though if those prior odds were already vanishingly unlikely, this may not move the needle significantly). If not, the argument is unconvincing and no significant adjustment to the odds (except perhaps in a downwards direction) needs to be made.

In everyday usage, we rely heavily on percentages to quantify probabilities and proportions: we might say that a prediction is accurate or

accurate, that there is a

chance of dying from some disease, and so forth. However, for those without extensive mathematical training, it can sometimes be difficult to assess whether a given percentage amounts to a “good” or “bad” outcome, because this depends very much on the context of how the percentage is used. For instance:

- (i) In a two-party election, an outcome of say

to

might be considered close, but

to

would probably be viewed as a convincing mandate, and

to

would likely be viewed as a landslide.

- (ii) Similarly, if one were to poll an upcoming election, a poll of

to

would be too close to call,

to

would be an extremely favorable result for the candidate, and

to

would mean that it would be a major upset if the candidate lost the election.

- (iii) On the other hand, a medical operation that only had a

,

, or

chance of success would be viewed as being incredibly risky, especially if failure meant death or permanent injury to the patient. Even an operation that was

or

likely to be non-fatal (i.e., a

or

chance of death) would not be conducted lightly.

- (iv) A weather prediction of, say,

chance of rain during a vacation trip might be sufficient cause to pack an umbrella, even though it is more likely than not that rain would not occur. On the other hand, if the prediction was for an $80\%$ chance of rain, and it ended up that the skies remained clear, this does not seriously damage the accuracy of the prediction; indeed, such an outcome would be expected in one out of every five such predictions.

- (v) Even extremely tiny percentages of toxic chemicals in everyday products can be considered unacceptable. For instance, EPA rules require action to be taken when the percentage of lead in drinking water exceeds $0.0000015\%$ (15 parts per billion). At the opposite extreme, recycling contamination rates as high as

are often considered acceptable.

Because of all the very different ways in which percentages could be used, I think it may make sense to propose an alternate system of units to measure one class of probabilities, namely the probabilities of avoiding some highly undesirable outcome, such as death, accident or illness. The units I propose are that of “nines”, which are already commonly used to measure availability of some service or purity of a material, but can be equally used to measure the safety (i.e., lack of risk) of some activity. Informally, nines measure how many consecutive appearances of the digit $9$ are in the probability of successfully avoiding the negative outcome, thus

- $90\%$ success = one nine of safety

- $99\%$ success = two nines of safety

- $99.9\%$ success = three nines of safety

Definition 1 (Nines of safety) An activity (affecting one or more persons, over some given period of time) that has a probability $p$ of the “safe” outcome and probability $1-p$ of the “unsafe” outcome will have $d$ nines of safety against the unsafe outcome, where $d$ is defined by the formula

$$d = -\log_{10}(1-p)$$

(where $\log_{10}$ is the logarithm to base ten), or equivalently

$$p = 1 - 10^{-d}.$$
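Definition 1, together with the convention (stated next) of rounding to the nearest tenth of a nine, fits in two small Python functions. This is a sketch with hypothetical function names; note that Python's built-in `round` rounds exact ties to even, which only matters for values landing exactly on a half-tenth.

```python
import math


def nines_of_safety(p_safe):
    """d = -log10(1 - p_safe), rounded to the nearest tenth of a nine."""
    if p_safe >= 1:
        return math.inf  # no chance of the unsafe outcome at all
    return round(-math.log10(1 - p_safe), 1)


def success_probability(d):
    """Inverse formula: p = 1 - 10**(-d)."""
    return 1 - 10 ** (-d)


print(nines_of_safety(0.9), nines_of_safety(0.99), nines_of_safety(0.999))  # 1.0 2.0 3.0
print(nines_of_safety(0.5))  # 0.3
```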

Remark 2 Because of the various uncertainties in measuring probabilities, as well as the inaccuracies in some of the assumptions and approximations we will be making later, we will not attempt to measure the number of nines of safety beyond the first decimal point; thus we will round to the nearest tenth of a nine of safety throughout this post.

Here is a conversion table between percentage rates of success (the safe outcome), failure (the unsafe outcome), and the number of nines of safety one has:

| Success rate | Failure rate | Number of nines |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | infinite |

Thus, if one has no nines of safety whatsoever, one is guaranteed to fail; but each nine of safety one has reduces the failure rate by a factor of $10$. In an ideal world, one would have infinitely many nines of safety against any risk, but in practice there are no $100\%$ guarantees against failure, and so one can only expect a finite number of nines of safety in any given situation. Realistically, one should thus aim to have as many nines of safety as one can reasonably expect to have, but not to demand infinitely many.
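Rows of a conversion table like the one above can be generated mechanically from Definition 1. The rates in this Python sketch are a hypothetical selection and are not claimed to match the rows of the original table; the function name is illustrative.

```python
import math


def conversion_row(success_percent):
    """(success %, failure %, nines of safety) for a success rate given in percent."""
    failure_percent = 100 - success_percent
    if failure_percent <= 0:
        return success_percent, 0, math.inf
    nines = round(-math.log10(failure_percent / 100), 1)
    return success_percent, failure_percent, nines


print("| Success rate | Failure rate | Number of nines |")
print("|---|---|---|")
# A hypothetical selection of rates, not necessarily the rows of the table above.
for rate in (50, 90, 95, 99, 99.9, 100):
    success, failure, nines = conversion_row(rate)
    print(f"| {success}% | {failure:g}% | {nines} |")
```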

Remark 3 The number of nines of safety against a certain risk is not absolute; it will depend not only on the risk itself, but (a) the number of people exposed to the risk, and (b) the length of time one is exposed to the risk. Exposing more people or increasing the duration of exposure will reduce the number of nines, and conversely exposing fewer people or reducing the duration will increase the number of nines; see Proposition 7 below for a rough rule of thumb in this regard.
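The dependence on the number of exposures can be illustrated by compounding independent, identically risky exposures (rounds, days, or people); that independence is an idealization, and this Python sketch is not a reproduction of Proposition 7. The Russian roulette figures assume a six-chamber revolver with one bullet, re-spun before every round; the function name is hypothetical.

```python
import math


def combined_nines(p_safe, exposures):
    """Nines of safety against the unsafe outcome occurring at least once in
    `exposures` independent exposures, each safe with probability p_safe."""
    overall_safe = p_safe ** exposures
    if overall_safe >= 1:
        return math.inf
    return round(-math.log10(1 - overall_safe), 1)


# Assumed: six chambers, one bullet, cylinder re-spun before every round.
for rounds in (1, 2, 3):
    print(rounds, combined_nines(5 / 6, rounds))  # nines shrink as rounds accumulate

# Ten independent people, each with three nines of safety: about two nines jointly.
print(combined_nines(0.999, 10))  # 2.0
```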

Remark 4 Nines of safety are a logarithmic scale of measurement, rather than a linear scale. Other familiar examples of logarithmic scales of measurement include the Richter scale of earthquake magnitude, the pH scale of acidity, the decibel scale of sound level, octaves in music, and the magnitude scale for stars.

Remark 5 One way to think about nines of safety is via the Swiss cheese model, which has recently been widely used to describe pandemic risk management. In this model, each nine of safety can be thought of as a slice of Swiss cheese, with holes occupying $10\%$ of that slice. Having $d$ nines of safety is then analogous to standing behind $d$ such slices of Swiss cheese. In order for a risk to actually impact you, it must pass through each of these $d$ slices. A fractional nine of safety corresponds to a fractional slice of Swiss cheese that covers the amount of space given by the above table. For instance,

nines of safety corresponds to a fractional slice that covers about

of the given area (leaving

uncovered).

Now to give some real-world examples of nines of safety. Using data for deaths in the US in 2019 (without attempting to account for factors such as age and gender), a random US citizen will have had the following amount of safety from dying from some selected causes in that year:

| Cause of death | Mortality rate per | Nines of safety |

| All causes | | |

| Heart disease | | |

| Cancer | | |

| Accidents | | |

| Drug overdose | | |

| Influenza/Pneumonia | | |

| Suicide | | |

| Gun violence | | |

| Car accident | | |

| Murder | | |

| Airplane crash | | |

| Lightning strike | | |

The safety of air travel is particularly remarkable: a given hour of flying in general aviation has a fatality rate of , or about

nines of safety, while for the major carriers the fatality rate drops down to

, or about

nines of safety.

Of course, in 2020, COVID-19 deaths became significant. In this year in the US, the mortality rate for COVID-19 (as the underlying or contributing cause of death) was per

, corresponding to

nines of safety, which was less safe than all other causes of death except for heart disease and cancer. At this time of writing, data for all of 2021 is of course not yet available, but it seems likely that the safety level would be even lower for this year.

Some further illustrations of the concept of nines of safety:

- Each round of Russian roulette has a success rate of $5/6$, providing only $\log_{10} 6 \approx 0.78$ nines of safety. Of course, the safety will decrease with each additional round: if the cylinder is spun again before each round, one has only about $0.51$ nines of safety after two rounds, about $0.38$ nines after three rounds, and so forth. (See also Proposition 7 below.)

- The ancient Roman punishment of decimation, by definition, provided exactly one nine of safety to each soldier being punished.

- Rolling a

on a

-sided die is a risk that carries about

nines of safety.

- Rolling a double one (“snake eyes”) from two six-sided dice carries about $\log_{10} 36 \approx 1.6$ nines of safety.

- One has about $\log_{10} 365 \approx 2.6$ nines of safety against the risk of someone randomly guessing your birthday on the first attempt.

- A null hypothesis has $1.3$ nines of safety against producing a $p < 0.05$ statistically significant result, and $2$ nines against producing a $p < 0.01$ statistically significant result. (However, one has to be careful when reversing the conditional; a $p < 0.05$ statistically significant result does not necessarily have $1.3$ nines of safety against the null hypothesis. In Bayesian statistics, the precise relationship between the two risks is given by Bayes’ theorem.)

- If a poker opponent is dealt a five-card hand, one has $5.8$ nines of safety against that opponent being dealt a royal flush, $4.8$ against a straight flush or higher, $3.6$ against four-of-a-kind or higher, $2.8$ against a full house or higher, $2.4$ against a flush or higher, $2.1$ against a straight or higher, $1.5$ against three-of-a-kind or higher, $1.1$ against two pairs or higher, and just $0.3$ against one pair or higher. (This data was converted from this Wikipedia table.)

- An $n$-digit PIN number (or an $n$-digit combination lock) carries $n$ nines of safety against each attempt to randomly guess the PIN. A length

password that allows for numbers, upper and lower case letters, and punctuation carries about

nines of safety against a single guess. (For the reduction in safety caused by multiple guesses, see Proposition 7 below.)
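The examples in the list above are all instances of converting a failure probability $p$ into $-\log_{10} p$ nines of safety (so that $n$ nines corresponds to a failure probability of $10^{-n}$). Here is a minimal Python sketch of that conversion; the helper names `nines_of_safety` and `poker_nines` are illustrative only, and the hand counts are the standard counts of five-card poker hands, with the four royal flushes listed separately from the other straight flushes.

```python
import math
from math import comb


def nines_of_safety(p_failure: float) -> float:
    """Return -log10(p_failure): n nines of safety <=> failure probability 10**-n."""
    if not 0 < p_failure <= 1:
        raise ValueError("failure probability must lie in (0, 1]")
    return -math.log10(p_failure)


# Standard counts of five-card poker hands, from the best rank down to one pair.
# The 4 royal flushes are listed separately from the other 36 straight flushes.
HAND_COUNTS = [
    ("royal flush", 4),
    ("straight flush", 36),
    ("four-of-a-kind", 624),
    ("full house", 3744),
    ("flush", 5108),
    ("straight", 10200),
    ("three-of-a-kind", 54912),
    ("two pairs", 123552),
    ("one pair", 1098240),
]
TOTAL_HANDS = comb(52, 5)  # 2,598,960 equally likely hands


def poker_nines() -> dict:
    """Nines of safety against an opponent being dealt each rank *or higher*."""
    result = {}
    at_least = 0
    for rank, count in HAND_COUNTS:
        at_least += count  # number of hands of this rank or better
        result[rank] = nines_of_safety(at_least / TOTAL_HANDS)
    return result


print(f"snake eyes:     {nines_of_safety(1 / 36):.2f}")
print(f"birthday guess: {nines_of_safety(1 / 365):.2f}")  # ignores leap years
print(f"4-digit PIN:    {nines_of_safety(1e-4):.2f}")
for rank, nines in poker_nines().items():
    print(f"{rank} or higher: {nines:.1f}")
```

Working with cumulative counts ("this rank or higher") is what makes each entry a single logarithm of one probability.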

Here is another way to think about nines of safety:

Proposition 6 (Nines of safety extend expected onset of risk) Suppose a certain risky activity has $n$ nines of safety. If one repeatedly indulges in this activity until the risk occurs, then the expected number of trials before the risk occurs is $10^n$.

Proof: The probability that the risk is activated after exactly $k$ trials is $(1-10^{-n})^{k-1} 10^{-n}$, which is a geometric distribution of parameter $10^{-n}$. The claim then follows from the standard properties of that distribution (a geometric distribution of parameter $q$ has mean $1/q$).

Thus, for instance, if one performs some risky activity daily, then the expected length of time before the risk occurs is given by the following table:

| Daily nines of safety | Expected onset of risk |

| 0 | One day |

| 0.8 | One week |

| 1.5 | One month |

| 2.6 | One year |

| 2.9 | Two years |

| 3.3 | Five years |

| 3.6 | Ten years |

| 3.9 | Twenty years |

| 4.3 | Fifty years |

| 4.6 | A century |

Or, if one wants to convert the yearly risks of dying from a specific cause into expected years before that cause of death would occur (assuming for sake of discussion that no other cause of death exists):

| Yearly nines of safety | Expected onset of risk |

| 0 | One year |

| 0.3 | Two years |

| 0.7 | Five years |

| 1.0 | Ten years |

| 1.3 | Twenty years |

| 1.7 | Fifty years |

| 2.0 | A century |
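Under Proposition 6, an activity with $n$ nines has an expected onset of $10^n$ trials, so the two tables above amount to taking $\log_{10}$ of the onset time measured in days (or in years). The sketch below reproduces a few daily rows and checks the mean of the geometric distribution by simulation; the 365.25-day year and the function names are assumptions made for illustration.

```python
import math
import random

DAYS_PER_YEAR = 365.25  # assumption: average year length, used only for the labels below


def nines_for_expected_onset(trials: float) -> float:
    """Invert Proposition 6: an expected onset of 10**n trials corresponds to n nines."""
    return math.log10(trials)


def simulated_mean_onset(nines: float, samples: int = 20000, seed: int = 0) -> float:
    """Monte Carlo mean number of independent trials up to and including the first failure."""
    rng = random.Random(seed)
    p_fail = 10 ** (-nines)
    total = 0
    for _ in range(samples):
        trials = 1
        while rng.random() >= p_fail:  # this trial was safe, so try again
            trials += 1
        total += trials
    return total / samples


onsets_in_days = {
    "One day": 1,
    "One week": 7,
    "One month": DAYS_PER_YEAR / 12,
    "One year": DAYS_PER_YEAR,
    "A century": 100 * DAYS_PER_YEAR,
}
for label, days in onsets_in_days.items():
    print(f"{nines_for_expected_onset(days):.1f} daily nines -> {label}")

# Geometric distribution check: with 1 nine (p_fail = 0.1) the mean should be near 10.
print(simulated_mean_onset(1.0))
```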

These tables suggest a relationship between the amount of safety one would have in a short timeframe, such as a day, and a longer time frame, such as a year. Here is an approximate formalisation of that relationship:

Proposition 7 (Repeated exposure reduces nines of safety) If a risky activity with $n$ nines of safety is (independently) repeated $m$ times, then (assuming $n$ is large enough depending on $m$), the repeated activity will have approximately $n - \log_{10} m$ nines of safety. Conversely: if the repeated activity has $n$ nines of safety, the individual activity will have approximately $n + \log_{10} m$ nines of safety.

Proof: An activity with $n$ nines of safety will be safe with probability $1 - 10^{-n}$, hence safe with probability $(1-10^{-n})^m$ if repeated independently $m$ times. For $n$ large, we can approximate

$$ (1-10^{-n})^m \approx 1 - m 10^{-n} = 1 - 10^{-(n - \log_{10} m)}, $$

and the claim follows from Definition 1.

Remark 8 The hypothesis of independence here is key. If there is a lot of correlation between the risks in different repetitions of the activity, then there can be much less reduction in safety caused by that repetition. As a simple example, suppose that a fraction $1 - 10^{-n}$ of a workforce are trained to perform some task flawlessly no matter how many times they repeat the task, but the remaining fraction $10^{-n}$ are untrained and will always fail at that task. If one selects a random worker and asks them to perform the task, one has $n$ nines of safety against the task failing. If one took that same random worker and asked them to perform the task $m$ times, the above proposition might suggest that the number of nines of safety would drop to approximately $n - \log_{10} m$; but in this case there is perfect correlation, and in fact the number of nines of safety remains steady at $n$, since it is the same fraction $10^{-n}$ of the workforce that would fail each time.

Because of this caveat, one should view the above proposition as only a crude first approximation that can be used as a simple rule of thumb, but should not be relied upon for more precise calculations.
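To see how crude the rule of thumb in Proposition 7 is, one can compare it against the exact value $-\log_{10}\left(1 - (1-10^{-n})^m\right)$ under independence. The following sketch (hypothetical function names; it does not model the correlated situation of Remark 8) uses `math.log1p` and `math.expm1` to avoid rounding errors when $10^{-n}$ is tiny.

```python
import math


def exact_repeated_nines(n: float, m: int) -> float:
    """Exact nines after m independent repetitions of an activity with n nines."""
    # P(at least one failure) = 1 - (1 - 10**-n)**m, computed stably via log1p/expm1.
    p_fail_total = -math.expm1(m * math.log1p(-(10 ** (-n))))
    return -math.log10(p_fail_total)


def approx_repeated_nines(n: float, m: int) -> float:
    """Proposition 7's rule of thumb: subtract log10(m)."""
    return n - math.log10(m)


for n, m in [(4, 10), (2, 10), (1, 10), (math.log10(6), 2), (math.log10(6), 3)]:
    print(f"n={n:.2f}, m={m}: exact {exact_repeated_nines(n, m):.3f}, "
          f"approx {approx_repeated_nines(n, m):.3f}")
```

For $n = 4$, $m = 10$ the two agree to within about $0.0002$ nines, while for $n = 1$, $m = 10$ the approximation predicts $0$ nines against an exact value of about $0.19$, which is why $n$ needs to be large compared with $\log_{10} m$.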

One can repeat a risk either in time (extending the time of exposure to the risk, say from a day to a year), or in space (by exposing more people to the risk). The above proposition then gives an additive conversion law for nines of safety in either case. Here are some conversion tables for time:

| From/to | Daily | Weekly | Monthly | Yearly |

| Daily | 0 | -0.8 | -1.5 | -2.6 |

| Weekly | +0.8 | 0 | -0.6 | -1.7 |

| Monthly | +1.5 | +0.6 | 0 | -1.1 |

| Yearly | +2.6 | +1.7 | +1.1 | 0 |

| From/to | Yearly | Per 5 yr | Per decade | Per century |

| Yearly | 0 | -0.7 | -1.0 | -2.0 |

| Per 5 yr | +0.7 | 0 | -0.3 | -1.3 |

| Per decade | +1.0 | +0.3 | 0 | -1.0 |

| Per century | +2.0 | +1.3 | +1.0 | 0 |
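Both conversion tables are instances of the same additive rule: passing from a period of length $T_1$ to one of length $T_2$ shifts the number of nines by $-\log_{10}(T_2/T_1)$. Here is a short sketch that regenerates them, assuming (for illustration) a 365.25-day year and a month of one twelfth of that; `conversion_table` is a hypothetical name.

```python
import math


def conversion_table(periods: dict) -> list:
    """Rows of the from/to table: moving from period A to period B changes the nines
    by -log10(length_B / length_A) (Proposition 7, assuming independence)."""
    names = list(periods)
    rows = []
    for src in names:
        row = []
        for dst in names:
            shift = -math.log10(periods[dst] / periods[src])
            row.append(0.0 if src == dst else round(shift, 1))
        rows.append((src, row))
    return rows


DAYS = {"Daily": 1, "Weekly": 7, "Monthly": 365.25 / 12, "Yearly": 365.25}
YEARS = {"Yearly": 1, "Per 5 yr": 5, "Per decade": 10, "Per century": 100}

for table in (DAYS, YEARS):
    print("From/to", list(table))
    for src, row in conversion_table(table):
        print(src, [f"{x:+.1f}" if x else "0" for x in row])
```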

For instance, as mentioned before, the yearly amount of safety against cancer is about . Using the above table (and making the somewhat unrealistic hypothesis of independence), we then predict the daily amount of safety against cancer to be about

nines, the weekly amount to be about

nines, and the amount of safety over five years to drop to about

nines.

Now we turn to conversions in space. If one knows the level of safety against a certain risk for an individual, and then one (independently) exposes a group of such individuals to that risk, then the reduction in nines of safety when considering the possibility that at least one group member experiences this risk is given by the following table:

| Group | Reduction in safety |

| You ($n = 1$) | 0 |

| You and your partner ($n = 2$) | $-0.3$ |

| You and your parents ($n = 3$) | $-0.5$ |

| You, your partner, and three children ($n = 5$) | $-0.7$ |

| An extended family of | |

| A class of | |

| A workplace of | |

| A school of | |

| A university of | |

| A town of | |

| A city of | |

| A state of | |

| A country of | |

| A continent of | |

| The entire planet | |

For instance, in a given year (and making the somewhat implausible assumption of independence), you might have nines of safety against cancer, but you and your partner collectively only have about

nines of safety against this risk, your family of five might only have about

nines of safety, and so forth. By the time one gets to a group of

people, it actually becomes very likely that at least one member of the group will die of cancer in that year. (Here the precise conversion table breaks down, because a negative number of nines such as

is not possible, but one should interpret a prediction of a negative number of nines as an assertion that failure is very likely to happen. Also, in practice the reduction in safety is less than this rule predicts, due to correlations such as risk factors that are common to the group being considered that are incompatible with the assumption of independence.)

In the opposite direction, any reduction in exposure (either in time or space) to a risk will increase one’s safety level, as per the following table:

| Reduction in exposure | Additional nines of safety |

| | |

| | |

| | |

| | |

| | |

| | |

For instance, a five-fold reduction in exposure will reclaim about $\log_{10} 5 \approx 0.7$ additional nines of safety.

Here is a slightly different way to view nines of safety:

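Proposition 9 Suppose that a group of $n$ people are independently exposed to a given risk. If there are at most $\log_{10} n$ nines of individual safety against that risk, then there is at least a $1 - 1/e \approx 63\%$ chance that one member of the group is affected by the risk.

Proof: If individually there are $k \leq \log_{10} n$ nines of safety, then the probability that all the members of the group avoid the risk is $(1-10^{-k})^n \leq (1 - 1/n)^n$. Since the inequality $(1-1/n)^n \leq 1/e$ holds for all $n \geq 1$ (a consequence of $1 - x \leq e^{-x}$), this probability is at most $1/e \approx 37\%$, and the claim follows.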

Thus, for a group to collectively avoid a risk with at least a chance, one needs the following level of individual safety:

| Group | Individual safety level required |

| You ( | |

| You and your partner ( | |

| You and your parents ( | |

| You, your partner, and three children ( | |

| An extended family of | |

| A class of | |

| A workplace of | |

| A school of | |

| A university of | |

| A town of | |

| A city of | |

| A state of | |

| A country of | |

| A continent of | |

| The entire planet | |

For large $n$, the level $k$ of nines of individual safety required to protect a group of size $n$ with probability at least $p$ (for $0 < p < 1$) is approximately $\log_{10} \frac{n}{\ln(1/p)}$.
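For a group of $n$ people exposed independently, the group avoids the risk with probability at least $p$ precisely when each individual has at least $-\log_{10}(1 - p^{1/n})$ nines of safety; for large $n$ this is close to $\log_{10}(n / \ln(1/p))$. The sketch below (function names are hypothetical) computes both quantities, which is one way to fill in a table like the one above for any chosen target probability $p$.

```python
import math


def exact_required_nines(group_size: int, p_all_safe: float) -> float:
    """Smallest k with (1 - 10**-k) ** group_size >= p_all_safe (independent exposures)."""
    # Allowed per-person failure probability 1 - p**(1/n), computed as -expm1(log(p)/n).
    per_person_fail = -math.expm1(math.log(p_all_safe) / group_size)
    return -math.log10(per_person_fail)


def approx_required_nines(group_size: int, p_all_safe: float) -> float:
    """Large-group approximation log10(n / ln(1/p))."""
    return math.log10(group_size / math.log(1 / p_all_safe))


for n in (1, 2, 5, 100, 10**6):
    print(n, round(exact_required_nines(n, 0.5), 2), round(approx_required_nines(n, 0.5), 2))
```

For $p = 1/2$ the large-group approximation is about $\log_{10} n + 0.16$; for $n = 1$ it gives $0.16$ rather than the exact $0.30$, so it should only be trusted for sizeable groups.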

Precautions that can work to prevent a certain risk from occurring will add additional nines of safety against that risk, even if the precaution is not $100\%$ effective. Here is the precise rule:

Proposition 10 (Precautions add nines of safety) Suppose an activity carries $n$ nines of safety against a certain risk, and a separate precaution can independently protect against that risk with $m$ nines of safety (that is to say, the probability that the protection is effective is $1 - 10^{-m}$). Then applying that precaution increases the number of nines in the activity from $n$ to $n + m$.

Proof: The probability that the precaution fails and the risk then occurs is $10^{-m} \cdot 10^{-n} = 10^{-(n+m)}$. The claim now follows from Definition 1.

In particular, we can repurpose the table at the start of this post as a conversion chart for effectiveness of a precaution:

| Effectiveness | Failure rate | Additional nines provided |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | infinite |
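Converting a precaution’s effectiveness $e$ into additional nines is just $-\log_{10}(1 - e)$, and by Proposition 10 independent precautions simply add their nines. A sketch with illustrative names, treating a $100\%$ effective precaution as adding infinitely many nines, as in the last row of the table:

```python
import math


def added_nines(effectiveness: float) -> float:
    """Extra nines from a precaution that works with probability `effectiveness`."""
    if not 0 <= effectiveness <= 1:
        raise ValueError("effectiveness must lie in [0, 1]")
    if effectiveness == 1:
        return math.inf  # a perfect precaution never fails
    return math.log10(1 / (1 - effectiveness))  # = -log10(failure rate)


def stacked_nines(*effectivenesses: float) -> float:
    """Nines added by several independent precautions (Proposition 10, applied repeatedly)."""
    return sum(added_nines(e) for e in effectivenesses)


for e in (0.0, 0.5, 0.75, 0.9, 0.95, 0.99, 1.0):
    print(f"{e:.0%} effective, failure rate {1 - e:.0%}: +{added_nines(e):.2f} nines")
```

Stacking works because the combined failure rate is the product of the individual failure rates, and logarithms turn products into sums.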

Thus for instance a precaution that is effective will add

nines of safety, a precaution that is

effective will add

nines of safety, and so forth. The mRNA COVID vaccines by Pfizer and Moderna have somewhere between

effectiveness against symptomatic COVID illness, providing about

nines of safety against that risk, and over

effectiveness against severe illness, thus adding at least

nines of safety in this regard.

A slight variant of the above rule can be stated using the concept of relative risk:

Proposition 11 (Relative risk and nines of safety) Suppose an activity carries $n$ nines of safety against a certain risk, and an action multiplies the chance of failure by some relative risk $R$. Then the action removes $\log_{10} R$ nines of safety (if $R > 1$) or adds $\log_{10} (1/R)$ nines of safety (if $R < 1$) to the original activity.

Proof: The additional action adjusts the probability of failure from $10^{-n}$ to $R \cdot 10^{-n} = 10^{-(n - \log_{10} R)}$. The claim now follows from Definition 1.

Here is a conversion chart between relative risk and change in nines of safety:

| Relative risk | Change in nines of safety |

| | |

| | |

| | |

| | |

| | |

| | |

| | |

| | |

| | |

| | |

| | |

| | |

| | |
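Proposition 11 likewise reduces to a one-line conversion: multiplying the failure probability by a relative risk $R$ changes the number of nines by $-\log_{10} R$. The sketch below (hypothetical function names) prints this for a range of relative risks, and also recomputes the nines directly from the adjusted failure probability, which is valid as long as that probability stays at most $1$.

```python
import math


def nines_change(relative_risk: float) -> float:
    """Change in nines when the failure probability is multiplied by relative_risk:
    negative if relative_risk > 1, positive if relative_risk < 1 (Proposition 11)."""
    if relative_risk <= 0:
        raise ValueError("relative risk must be positive")
    return math.log10(1 / relative_risk)


def apply_relative_risk(nines: float, relative_risk: float) -> float:
    """Nines after the action; only meaningful while relative_risk * 10**-nines <= 1."""
    return -math.log10(relative_risk * 10 ** (-nines))


for r in (100, 10, 5, 2, 1, 0.5, 0.2, 0.1, 0.01):
    print(f"relative risk {r}: {nines_change(r):+.1f} nines")
```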

Some examples:

- Smoking increases the fatality rate of lung cancer by a factor of about

, thus removing about

nines of safety from this particular risk; it also increases the fatality rates of several other diseases, though not to quite as dramatic an extent.

- Seatbelts reduce the fatality rate in car accidents by a factor of about two, adding about $\log_{10} 2 \approx 0.3$ nines of safety. Airbags achieve a reduction of about

, adding about

additional nines of safety.

- As far as transmission of COVID is concerned, it seems that constant use of face masks reduces transmission by a factor of about five (thus adding about $\log_{10} 5 \approx 0.7$ nines of safety), and similarly for constant adherence to social distancing; whereas for instance a

compliance with mask usage reduced transmission by about

(adding only

or so nines of safety).

The effect of combining multiple (independent) precautions together is cumulative; one can achieve quite a high level of safety by stacking together several precautions that individually have relatively low levels of effectiveness. Again, see the “Swiss cheese model” referred to in Remark 5. For instance, if face masks add $0.7$ nines of safety against contracting COVID, social distancing adds another $0.7$ nines, and the vaccine provides another one nine of safety, implementing all three mitigation methods would (assuming independence) add a net of $2.4$ nines of safety against contracting COVID.

In summary, when debating the value of a given risk mitigation measure, the correct question to ask is not quite “Is it certain to work?” or “Can it fail?”, but rather “How many extra nines of safety does it add?”.

As one final comparison between nines of safety and other standard risk measures, we give the following proposition regarding large deviations from the mean.

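Proposition 12 Let $X$ be a normally distributed random variable of standard deviation $\sigma > 0$, and let $\lambda > 0$. Then the “one-sided risk” of $X$ exceeding its mean $\mu$ by at least $\lambda \sigma$ (i.e., $X \geq \mu + \lambda \sigma$) carries

$$ -\log_{10} \frac{1 - \operatorname{erf}(\lambda/\sqrt{2})}{2} $$

nines of safety, the “two-sided risk” of $X$ deviating (in either direction) from its mean by at least $\lambda \sigma$ (i.e., $|X - \mu| \geq \lambda \sigma$) carries

$$ -\log_{10} \left( 1 - \operatorname{erf}(\lambda/\sqrt{2}) \right) $$

nines of safety, where $\operatorname{erf}$ is the error function.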

Proof: This is a routine calculation using the cumulative distribution function of the normal distribution.

Here is a short table illustrating this proposition:

| Number | One-sided nines of safety | Two-sided nines of safety |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

| | | |

Thus, for instance, the risk of a five sigma event (deviating by more than five standard deviations from the mean in either direction) should carry about $6.2$ nines of safety assuming a normal distribution, and so one would ordinarily feel extremely safe against the possibility of such an event, unless one started doing hundreds of thousands of trials. (However, we caution that this conclusion relies heavily on the assumption that one has a normal distribution!)
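Proposition 12 can be evaluated directly with Python’s `math.erfc`, the complementary error function $1 - \operatorname{erf}$; using it instead of `1 - math.erf(...)` avoids catastrophic cancellation for large $\lambda$. A sketch, with hypothetical function names, printing values of this kind for $\lambda = 1, \dots, 7$:

```python
import math


def one_sided_nines(lam: float) -> float:
    """Nines of safety against X >= mu + lam*sigma for normal X: P = erfc(lam/sqrt(2))/2."""
    return -math.log10(0.5 * math.erfc(lam / math.sqrt(2)))


def two_sided_nines(lam: float) -> float:
    """Nines of safety against |X - mu| >= lam*sigma for normal X: P = erfc(lam/sqrt(2))."""
    return -math.log10(math.erfc(lam / math.sqrt(2)))


for lam in range(1, 8):
    print(f"{lam} sigma: one-sided {one_sided_nines(lam):.1f}, "
          f"two-sided {two_sided_nines(lam):.1f}")
```

For $\lambda = 5$ the two-sided value is about $6.24$ nines, and the one-sided value always exceeds the two-sided one by exactly $\log_{10} 2$.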

See also this older essay I wrote on anonymity on the internet, using bits as a measure of anonymity in much the same way that nines are used here as a measure of safety.

After some discussion with the applied math research groups here at UCLA (in particular the groups led by Andrea Bertozzi and Deanna Needell), one of the members of these groups, Chris Strohmeier, has produced a proposal for a Polymath project to crowdsource in a single repository (a) a collection of public data sets relating to the COVID-19 pandemic, (b) requests for such data sets, (c) requests for data cleaning of such sets, and (d) submissions of cleaned data sets. (The proposal can be viewed as a PDF, and is also available on Overleaf). As mentioned in the proposal, this database would be slightly different in focus than existing data sets such as the COVID-19 data sets hosted on Kaggle, with a focus on producing high quality cleaned data sets. (Another relevant data set that I am aware of is the SafeGraph aggregated foot traffic data, although this data set, while open, is not quite public as it requires a non-commercial agreement to execute. Feel free to mention further relevant data sets in the comments.)

This seems like a very interesting and timely proposal to me and I would like to open it up for discussion, for instance by proposing some seed requests for data and data cleaning and to discuss possible platforms that such a repository could be built on. In the spirit of “building the plane while flying it”, one could begin by creating a basic github repository as a prototype and use the comments in this blog post to handle requests, and then migrate to a more high quality platform once it becomes clear what direction this project might move in. (For instance one might eventually move beyond data cleaning to more sophisticated types of data analysis.)

UPDATE, Mar 25: a prototype page for such a clearinghouse is now up at this wiki page.

UPDATE, Mar 27: the data cleaning aspect of this project largely duplicates the existing efforts at the United against COVID-19 project, so we are redirecting requests of this type to that project (and specifically to their data discourse page). The polymath proposal will now refocus on crowdsourcing a list of public data sets relating to the COVID-19 pandemic.

At the most recent MSRI board of trustees meeting on Mar 7 (conducted online, naturally), Nicholas Jewell (a Professor of Biostatistics and Statistics at Berkeley, also affiliated with the Berkeley School of Public Health and the London School of Hygiene and Tropical Medicine) gave a presentation on the current coronavirus epidemic entitled “2019-2020 Novel Coronavirus outbreak: mathematics of epidemics, and what it can and cannot tell us”. The presentation (updated with Mar 18 data), hosted by David Eisenbud (the director of MSRI), together with a question and answer session, is now on YouTube:

(I am on this board, but could not make it to this particular meeting; I caught up on the presentation later, and thought it would be of interest to several readers of this blog.) While there is some mathematics in the presentation, it is relatively non-technical.

Note: the following is a record of some whimsical mathematical thoughts and computations I had after doing some grading. It is likely that the sort of problems discussed here are in fact well studied in the appropriate literature; I would appreciate knowing of any links to such.

Suppose one assigns $N$ true-false questions on an examination, with the answers randomised so that each question is equally likely to have “true” as the correct answer as “false”, with no correlation between different questions. Suppose that the students taking the examination must answer each question with exactly one of “true” or “false” (they are not allowed to skip any question). Then it is easy to see how to grade the exam: one can simply count how many questions each student answered correctly (i.e. each correct answer scores one point, and each incorrect answer scores zero points), and give that number $k$ as the final grade of the examination. More generally, one could assign some score of $A$ points to each correct answer and some score (possibly negative) of $B$ points to each incorrect answer, giving a total grade of $Ak + B(N-k)$ points. As long as $A > B$, this grade is simply an affine rescaling of the simple grading scheme $k$ and would serve just as well for the purpose of evaluating the students, as well as encouraging each student to answer the questions as correctly as possible.

In practice, though, a student will probably not know the answer to each individual question with absolute certainty. One can adopt a probabilistic model, where for a given student $S$ and a given question $n$, the student $S$ may think that the answer to question $n$ is true with probability $p_{S,n}$ and false with probability $1 - p_{S,n}$, where $0 \leq p_{S,n} \leq 1$ is some quantity that can be viewed as a measure of confidence $S$ has in the answer (with $S$ being confident that the answer is true if $p_{S,n}$ is close to $1$, and confident that the answer is false if $p_{S,n}$ is close to $0$); for simplicity let us assume that in $S$’s probabilistic model, the answers to each question are independent random variables. Given this model, and assuming that the student $S$ wishes to maximise his or her expected grade on the exam, it is an easy matter to see that the optimal strategy for $S$ to take is to answer question $n$ true if $p_{S,n} > 1/2$ and false if $p_{S,n} < 1/2$. (If $p_{S,n} = 1/2$, the student $S$ can answer arbitrarily.)

[Important note: here we are not using the term “confidence” in the technical sense used in statistics, but rather as an informal term for “subjective probability”.]

This is fine as far as it goes, but for the purposes of evaluating how well the student actually knows the material, it provides only a limited amount of information; in particular, we do not get to directly see the student’s subjective probabilities for each question. If for instance $S$ answered $7$ out of $10$ questions correctly, was it because he or she actually knew the right answer for seven of the questions, or was it because he or she was making educated guesses for the ten questions that turned out to be slightly better than random chance? There seems to be no way to discern this if the only input the student is allowed to provide for each question is the single binary choice of true/false.

But what if the student were able to give probabilistic answers to any given question? That is to say, instead of being forced to answer just “true” or “false” for a given question $n$, the student was allowed to give answers such as “

confident that the answer is true” (and hence

confidence that the answer is false). Such answers would give more insight as to how well the student actually knew the material; in particular, we would theoretically be able to actually see the student’s subjective probabilities $p_{S,n}$.

But now it becomes less clear what the right grading scheme to pick is. Suppose for instance we wish to extend the simple grading scheme in which a correct answer given with $100\%$ confidence is awarded one point. How many points should one award a correct answer given in

confidence? How about an incorrect answer given in

confidence (or equivalently, a correct answer given in

confidence)?

Mathematically, one could design a grading scheme by selecting some grading function $f$ and then awarding a student $f(p)$ points whenever they indicate the correct answer with a confidence of $p$. Thus, if the student had confidence $p$ that the answer was “true” (and hence confidence $1-p$ that the answer was “false”), then this grading scheme would award the student $f(p)$ points if the correct answer actually was “true”, and $f(1-p)$ points if the correct answer actually was “false”. One can then ask which functions $f$ would be “best” for this scheme.

Intuitively, one would expect that $f$ should be monotone increasing – one should be rewarded more for being correct with high confidence than for being correct with low confidence. On the other hand, some sort of “partial credit” should still be assigned in the latter case. One obvious proposal is to just use a linear grading function

– thus for instance a correct answer given with

confidence might be worth

points. But is this the “best” option?

To make the problem more mathematically precise, one needs an objective criterion with which to evaluate a given grading scheme. One criterion that one could use here is the avoidance of perverse incentives. If a grading scheme is designed badly, a student may end up overstating or understating his or her confidence in an answer in order to optimise the (expected) grade: the optimal level of confidence $q$ for a student $S$ to report on a question may differ from that student’s subjective confidence $p$. So one could ask to design a scheme so that $q$ is always equal to $p$, so that the incentive is for the student to honestly report his or her confidence level in the answer.

This turns out to give a precise constraint on the grading function $f$. If a student $S$ thinks that the answer to a question $n$ is true with probability $p$ and false with probability $1-p$, and enters in an answer of “true” with confidence $q$ (and thus “false” with confidence $1-q$), then student $S$ would expect a grade of

$$ p f(q) + (1-p) f(1-q) $$

on average for this question. To maximise this expected grade (assuming differentiability of $f$, which is a reasonable hypothesis for a partial credit grading scheme), one performs the usual manoeuvre of differentiating in the independent variable $q$ and setting the result to zero, thus obtaining

$$ p f'(q) - (1-p) f'(1-q) = 0. $$

In order to avoid perverse incentives, the maximum should occur at $q = p$, thus we should have

$$ p f'(p) = (1-p) f'(1-p) $$

for all $0 < p < 1$. This suggests that the function $p \mapsto p f'(p)$ should be constant. (Strictly speaking, it only gives the weaker constraint that $p \mapsto p f'(p)$ is symmetric around $p = 1/2$; but if one generalised the problem to allow for multiple-choice questions with more than two possible answers, with a grading scheme that depended only on the confidence assigned to the correct answer, the same analysis would in fact force $p f'(p)$ to be constant in $p$; we leave this computation to the interested reader.) In other words, $f(p)$ should be of the form $f(p) = C \log p + D$ for some constants $C, D$; by monotonicity we expect $C$ to be positive. If we make the normalisation $f(1/2) = 0$ (so that no points are awarded for a $50$-$50$ split in confidence between true and false) and $f(1) = 1$, one arrives at the grading scheme

$$ f(p) := \log_2 (2p). $$

Thus, if a student believes that an answer is “true” with confidence $p$ and “false” with confidence $1-p$, he or she will be awarded $\log_2(2p)$ points when the correct answer is “true”, and $\log_2(2(1-p))$ points if the correct answer is “false”. The following table gives some illustrative values for this scheme:

| Confidence that answer is “true” | Points awarded if answer is “true” | Points awarded if answer is “false” |

Note the large penalties for being extremely confident of an answer that ultimately turns out to be incorrect; in particular, answers of $0\%$ or $100\%$ confidence should be avoided unless one really is absolutely certain as to the correctness of one’s answer.
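The grading scheme $f(p) = \log_2(2p)$ is easy to tabulate, and the absence of perverse incentives can be checked numerically: for a subjective probability $p$, a grid search over reported confidences $q$ should find the maximum of $p f(q) + (1-p) f(1-q)$ at $q = p$. A minimal sketch (function names are illustrative; the grid resolution of $0.001$ is an arbitrary choice):

```python
import math


def grade(p: float) -> float:
    """Points for indicating the correct answer with confidence p: log2(2p)."""
    return -math.inf if p == 0 else math.log2(2 * p)


def expected_grade(p: float, q: float) -> float:
    """Expected points if the subjective P(true) is p but the reported P(true) is q."""
    return p * grade(q) + (1 - p) * grade(1 - q)


def best_report(p: float, steps: int = 1000) -> float:
    """Grid search over reports q strictly between 0 and 1 for the best expected grade."""
    grid = [i / steps for i in range(1, steps)]
    return max(grid, key=lambda q: expected_grade(p, q))


# Illustrative values: confidence in "true", points if "true", points if "false".
for p in (0.0, 0.01, 0.1, 0.25, 0.5, 0.75, 0.9, 0.99, 1.0):
    print(f"{p:>5.0%}  {grade(p):+8.3f}  {grade(1 - p):+8.3f}")

print(best_report(0.7))  # honest reporting is optimal: expect 0.7
```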

The total grade given under such a scheme to a student $S$ who answers each question $n$ to be “true” with confidence $p_n$, and “false” with confidence $1-p_n$, is

$$ \sum_{n: \text{ true}} \log_2 (2 p_n) + \sum_{n: \text{ false}} \log_2 (2(1-p_n)), $$

where the first sum ranges over the questions whose correct answer is “true”, and the second over those whose correct answer is “false”. This grade can also be written as

$$ N + \log_2 L $$

(with $N$ the number of questions), where

$$ L := \prod_{n: \text{ true}} p_n \times \prod_{n: \text{ false}} (1-p_n) $$

is the likelihood of the student $S$’s subjective probability model, given the outcome of the correct answers. Thus the grade system here has another natural interpretation, as being an affine rescaling of the log-likelihood. The incentive is thus for the student to maximise the likelihood of his or her own subjective model, which aligns well with standard practices in statistics. From the perspective of Bayesian probability, the grade given to a student can then be viewed as a measurement (in logarithmic scale) of how much the posterior probability that the student’s model was correct has improved over the prior probability.

One could propose using the above grading scheme to evaluate predictions of binary events, such as an upcoming election with only two viable candidates, to see in hindsight just how effective each predictor was in calling these events. One difficulty in doing so is that many predictions do not come with explicit probabilities attached to them, and attaching a default confidence level of $100\%$ to any prediction made without any such qualification would result in an automatic grade of $-\infty$ if even one of these predictions turned out to be incorrect. But perhaps if a predictor refuses to attach a confidence level to his or her predictions, one can assign some default level $p$ of confidence to these predictions, and then (using some suitable set of predictions from this predictor as “training data”) find the value of $p$ that maximises this predictor’s grade. This level can then be used going forward as the default level of confidence to apply to any future predictions from this predictor.
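Two claims above can be checked in a few lines: that the total grade equals the number of questions plus $\log_2$ of the likelihood, and that, for a predictor who was right on $k$ out of $N$ predictions, the default confidence $p$ maximising $k \log_2(2p) + (N-k)\log_2(2(1-p))$ is the hit rate $k/N$ (the derivative is proportional to $\frac{k}{p} - \frac{N-k}{1-p}$, which vanishes exactly there). The data and function names below are made up for illustration.

```python
import math


def grade(p: float) -> float:
    """log2(2p) points for having assigned probability p to the realised outcome."""
    return -math.inf if p == 0 else math.log2(2 * p)


def total_grade(outcomes, confidences) -> float:
    """outcomes[i]: was the answer "true"?  confidences[i]: reported P("true")."""
    return sum(grade(p if truth else 1 - p) for truth, p in zip(outcomes, confidences))


def likelihood(outcomes, confidences) -> float:
    """Probability that the reported model assigns to the realised outcomes."""
    result = 1.0
    for truth, p in zip(outcomes, confidences):
        result *= p if truth else 1 - p
    return result


def best_default_confidence(num_correct: int, num_total: int, steps: int = 1000) -> float:
    """Grid search for the default confidence maximising the predictor's total grade."""
    def score(p0: float) -> float:
        return num_correct * grade(p0) + (num_total - num_correct) * grade(1 - p0)
    grid = [i / steps for i in range(1, steps)]
    return max(grid, key=score)


outcomes = [True, False, True]
confidences = [0.9, 0.2, 0.6]
print(total_grade(outcomes, confidences))
print(len(outcomes) + math.log2(likelihood(outcomes, confidences)))  # same value
print(best_default_confidence(7, 10))  # 0.7, the hit rate 7/10
```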

The above grading scheme extends easily enough to multiple-choice questions. But one question I had trouble with was how to deal with uncertainty, in which the student does not know enough about a question to venture even a probability of being true or false. Here, it is natural to allow a student to leave a question blank (i.e. to answer “I don’t know”); a more advanced option would be to allow the student to enter his or her confidence level as an interval range (e.g. “I am between and

confident that the answer is “true””). But now I do not have a good proposal for a grading scheme; once there is uncertainty in the student’s subjective model, the problem of that student maximising his or her expected grade becomes ill-posed due to the “unknown unknowns”, and so the previous criterion of avoiding perverse incentives becomes far less useful.

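I recently learned about a curious operation on square matrices known as sweeping, which is used in numerical linear algebra (particularly in applications to statistics), as a useful and more robust variant of the usual Gaussian elimination operations seen in undergraduate linear algebra courses. Given an $n \times n$ matrix $A := (a_{ij})_{1 \leq i,j \leq n}$ (with, say, complex entries) and an index $1 \leq k \leq n$, with the entry $a_{kk}$ non-zero, the sweep $\mathrm{Sweep}_k[A] = (\hat a_{ij})_{1 \leq i,j \leq n}$ of $A$ at $k$ is the matrix given by the formulae

$$ \hat a_{kk} := -\frac{1}{a_{kk}}, \qquad \hat a_{ik} := \frac{a_{ik}}{a_{kk}}, \qquad \hat a_{kj} := \frac{a_{kj}}{a_{kk}}, \qquad \hat a_{ij} := a_{ij} - \frac{a_{ik} a_{kj}}{a_{kk}} $$

for all $i, j \neq k$. Thus for instance if $k = 1$, and $A$ is written in block form as

$$ A = \begin{pmatrix} a_{11} & B \\ C & D \end{pmatrix} \tag{1} $$

for some $1 \times (n-1)$ row vector $B$, $(n-1) \times 1$ column vector $C$, and $(n-1) \times (n-1)$ minor $D$, one has

$$ \mathrm{Sweep}_1[A] = \begin{pmatrix} -1/a_{11} & a_{11}^{-1} B \\ a_{11}^{-1} C & D - a_{11}^{-1} C B \end{pmatrix}. \tag{2} $$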

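The inverse sweep operation $\mathrm{Sweep}_k^{-1}[A] = (\check a_{ij})_{1 \leq i,j \leq n}$ is given by a nearly identical set of formulae:

$$ \check a_{kk} := -\frac{1}{a_{kk}}, \qquad \check a_{ik} := -\frac{a_{ik}}{a_{kk}}, \qquad \check a_{kj} := -\frac{a_{kj}}{a_{kk}}, \qquad \check a_{ij} := a_{ij} - \frac{a_{ik} a_{kj}}{a_{kk}} $$

for all $i, j \neq k$. One can check that these operations invert each other. Actually, each sweep turns out to have order $4$, so that $\mathrm{Sweep}_k^{-1} = \mathrm{Sweep}_k^3$: an inverse sweep performs the same operation as three forward sweeps. Sweeps also preserve the space of symmetric matrices (allowing one to cut down computational run time in that case by a factor of two), and behave well with respect to principal minors; a sweep of a principal minor is a principal minor of a sweep, after adjusting indices appropriately.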
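The sweep and inverse sweep formulas translate directly into code. The sketch below uses exact rational arithmetic (`fractions.Fraction`) so that identities can be tested with `==`, and uses 0-based indices rather than the $1 \leq k \leq n$ convention of the text; the function names are hypothetical. On one small example matrix it checks that sweeps have order $4$, that an inverse sweep equals three forward sweeps, that two sweeps commute, and that symmetry is preserved.

```python
from fractions import Fraction
from typing import List

Matrix = List[List[Fraction]]


def sweep(a: Matrix, k: int) -> Matrix:
    """Forward sweep of a square matrix at 0-based index k (requires a[k][k] != 0)."""
    pivot = a[k][k]
    if pivot == 0:
        raise ZeroDivisionError("sweep needs a non-zero pivot a[k][k]")
    n = len(a)
    out = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == k and j == k:
                out[i][j] = -1 / pivot
            elif i == k:
                out[i][j] = a[k][j] / pivot
            elif j == k:
                out[i][j] = a[i][k] / pivot
            else:
                out[i][j] = a[i][j] - a[i][k] * a[k][j] / pivot
    return out


def inverse_sweep(a: Matrix, k: int) -> Matrix:
    """Inverse sweep: the forward sweep with the off-diagonal signs in row/column k flipped."""
    out = sweep(a, k)
    for t in range(len(a)):
        if t != k:
            out[k][t] = -out[k][t]
            out[t][k] = -out[t][k]
    return out


A = [[Fraction(v) for v in row] for row in [[2, 1, 0], [1, 3, 1], [0, 1, 4]]]

four = A
for _ in range(4):
    four = sweep(four, 1)
print(four == A)                                                # order 4
print(inverse_sweep(sweep(A, 1), 1) == A)                       # mutually inverse
print(sweep(sweep(sweep(A, 1), 1), 1) == inverse_sweep(A, 1))   # inverse = three sweeps
print(sweep(sweep(A, 0), 2) == sweep(sweep(A, 2), 0))           # sweeps commute
```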

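Remarkably, the sweep operators all commute with each other: $\mathrm{Sweep}_k \mathrm{Sweep}_l = \mathrm{Sweep}_l \mathrm{Sweep}_k$ (whenever both sides are defined). If $1 \leq k \leq n$ and we perform the first $k$ sweeps (in any order) to a matrix

$$ A = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix} $$

with $A_{11}$ a $k \times k$ minor, $A_{12}$ a $k \times (n-k)$ matrix, $A_{21}$ a $(n-k) \times k$ matrix, and $A_{22}$ a $(n-k) \times (n-k)$ matrix, one obtains the new matrix

$$ \mathrm{Sweep}_1 \cdots \mathrm{Sweep}_k[A] = \begin{pmatrix} -A_{11}^{-1} & A_{11}^{-1} A_{12} \\ A_{21} A_{11}^{-1} & A_{22} - A_{21} A_{11}^{-1} A_{12} \end{pmatrix}. $$

Note the appearance of the Schur complement in the bottom right block. Thus, for instance, one can essentially invert a matrix $A$ by performing all $n$ sweeps:

$$ \mathrm{Sweep}_1 \cdots \mathrm{Sweep}_n[A] = -A^{-1}. $$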

If a matrix has the form

$$ A = \begin{pmatrix} M & y \\ z & w \end{pmatrix} $$

for an $(n-1) \times (n-1)$ minor $M$, $(n-1) \times 1$ column vector $y$, $1 \times (n-1)$ row vector $z$, and scalar $w$, then performing the first $n-1$ sweeps gives

$$ \mathrm{Sweep}_1 \cdots \mathrm{Sweep}_{n-1}[A] = \begin{pmatrix} -M^{-1} & M^{-1} y \\ z M^{-1} & w - z M^{-1} y \end{pmatrix} $$

and all the components of this matrix are usable for various numerical linear algebra applications in statistics (e.g. in least squares regression). Given that sweeps behave well with inverses, it is perhaps not surprising that sweeps also behave well under determinants: the determinant of $A$ can be factored as the product of the entry $a_{kk}$ and the determinant of the $(n-1) \times (n-1)$ matrix formed from $\mathrm{Sweep}_k[A]$ by removing the $k^{\mathrm{th}}$ row and column. As a consequence, one can compute the determinant of $A$ fairly efficiently (so long as the sweep operations don’t come close to dividing by zero) by sweeping the matrix for $k = 1, \dots, n$ in turn, and multiplying together the $kk$ entry of the matrix just before the $k^{\mathrm{th}}$ sweep for $k = 1, \dots, n$ to obtain the determinant.
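The determinant recipe (multiply together the pivots $a_{kk}$ met just before each sweep) and the identity $\mathrm{Sweep}_1 \cdots \mathrm{Sweep}_n[A] = -A^{-1}$ can be sketched as follows, again with exact fractions, 0-based indices, and hypothetical names. The sketch simply raises an error if a zero pivot is met, rather than attempting any pivoting strategy.

```python
from fractions import Fraction
from typing import List, Tuple

Matrix = List[List[Fraction]]


def sweep(a: Matrix, k: int) -> Matrix:
    """Forward sweep at 0-based index k (requires a[k][k] != 0)."""
    pivot = a[k][k]
    if pivot == 0:
        raise ZeroDivisionError("zero pivot encountered; this sketch does not pivot")
    n = len(a)
    out = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == k and j == k:
                out[i][j] = -1 / pivot
            elif i == k:
                out[i][j] = a[k][j] / pivot
            elif j == k:
                out[i][j] = a[i][k] / pivot
            else:
                out[i][j] = a[i][j] - a[i][k] * a[k][j] / pivot
    return out


def sweep_all(a: Matrix) -> Tuple[Matrix, Fraction]:
    """Sweep at k = 0, ..., n-1 in turn; return (final matrix, product of the pivots)."""
    det = Fraction(1)
    for k in range(len(a)):
        det *= a[k][k]  # the kk entry just before the k-th sweep
        a = sweep(a, k)
    return a, det


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product over Fractions."""
    return [[sum((a[i][t] * b[t][j] for t in range(len(b))), Fraction(0))
             for j in range(len(b[0]))] for i in range(len(a))]


A = [[Fraction(v) for v in row] for row in [[2, 1, 0], [1, 3, 1], [0, 1, 4]]]
swept, det = sweep_all(A)                      # swept should equal -A^{-1}
inverse = [[-x for x in row] for row in swept]
identity = [[Fraction(int(i == j)) for j in range(3)] for i in range(3)]
print(det)                                     # 18
print(matmul(A, inverse) == identity)          # True
```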

It turns out that there is a simple geometric explanation for these seemingly magical properties of the sweep operation. Any $n \times n$ matrix $A$ creates a graph $\Gamma_A := \{ (x, Ax): x \in \mathbf{R}^n \}$ (where we think of $\mathbf{R}^n$ as the space of column vectors). This graph is an $n$-dimensional subspace of $\mathbf{R}^n \times \mathbf{R}^n$. Conversely, most $n$-dimensional subspaces of $\mathbf{R}^n \times \mathbf{R}^n$ arise as graphs; there are some that fail the vertical line test, but these are a positive codimension set of counterexamples.

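We use $e_1, \dots, e_n, f_1, \dots, f_n$ to denote the standard basis of $\mathbb{R}^n \times \mathbb{R}^n$, with $e_1, \dots, e_n$ the standard basis for the first factor of $\mathbb{R}^n \times \mathbb{R}^n$ and $f_1, \dots, f_n$ the standard basis for the second factor. The operation of sweeping the $k^{\mathrm{th}}$ entry then corresponds to a ninety degree rotation $\mathrm{Rot}_k: \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}^n \times \mathbb{R}^n$ in the $e_k, f_k$ plane, that sends $f_k$ to $e_k$ (and $e_k$ to $-f_k$), keeping all other basis vectors fixed: thus we have

$$\mathrm{Rot}_k(\Gamma_A) = \Gamma_{\mathrm{Sweep}_k(A)}$$

for generic $A$ (more precisely, those $A$ with non-vanishing entry $a_{kk}$). For instance, if $k = 1$ and $A$ is of the form (1), say

$$A = \begin{pmatrix} a & X \\ Y & B \end{pmatrix}$$

with $a = a_{11}$ a scalar, $X$ a $1 \times (n-1)$ row vector, $Y$ an $(n-1) \times 1$ column vector and $B$ an $(n-1) \times (n-1)$ matrix, then $\Gamma_A$ is the set of tuples $(r, R, s, S) \in \mathbb{R} \times \mathbb{R}^{n-1} \times \mathbb{R} \times \mathbb{R}^{n-1}$ obeying the equations

$$s = ar + XR, \qquad S = Yr + BR.$$

The image of $\Gamma_A$ under $\mathrm{Rot}_1$ is $\{ (s, R, -r, S) : (r, R, s, S) \in \Gamma_A \}$. Since we can write the above system of equations (for $a \neq 0$) as

$$-r = -\frac{1}{a} s + \frac{1}{a} X R, \qquad S = \frac{1}{a} Y s + \left( B - \frac{1}{a} Y X \right) R,$$

we see from (2) that $\mathrm{Rot}_1(\Gamma_A)$ is the graph of $\mathrm{Sweep}_1(A)$. Thus the sweep operation is a multidimensional generalisation of the high school geometry fact that the line $y = mx$ in the plane becomes $y = -\frac{1}{m} x$ after applying a ninety degree rotation.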
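As a numerical sanity check of $\mathrm{Rot}_k(\Gamma_A) = \Gamma_{\mathrm{Sweep}_k(A)}$, one can represent $\Gamma_A$ by the $2n \times n$ matrix whose columns are $(e_j, A e_j)$, apply the rotation, and read off the matrix whose graph is the rotated subspace. This NumPy sketch assumes the graph convention $\Gamma_A = \{(x, Ax)\}$, the rotation $f_k \mapsto e_k$, $e_k \mapsto -f_k$, and the sweep sign convention in which the pivot becomes $-1/a_{kk}$, row and column $k$ are divided by $a_{kk}$, and the remaining entries become $a_{ij} - a_{ik} a_{kj}/a_{kk}$; `sweep_np`, `rot` and `graph_of` are hypothetical helper names, with 0-based indices.

```python
import numpy as np


def sweep_np(A, k):
    """Sweep_k(A): pivot -> -1/a_kk, row/column k divided by a_kk, rest a_ij - a_ik a_kj / a_kk."""
    A = np.asarray(A, dtype=float)
    p = A[k, k]
    S = A - np.outer(A[:, k], A[k, :]) / p
    S[k, :] = A[k, :] / p
    S[:, k] = A[:, k] / p
    S[k, k] = -1.0 / p
    return S


def rot(n, k):
    """Ninety degree rotation of R^n x R^n in the (e_k, f_k) plane: f_k -> e_k, e_k -> -f_k."""
    R = np.eye(2 * n)
    R[k, k] = R[n + k, n + k] = 0.0
    R[n + k, k] = -1.0  # column k is the image of e_k, namely -f_k
    R[k, n + k] = 1.0   # column n+k is the image of f_k, namely e_k
    return R


def graph_of(W):
    """Given a 2n x n matrix whose columns span a graph {(x, Mx)}, return M."""
    n = W.shape[1]
    return W[n:] @ np.linalg.inv(W[:n])


rng = np.random.default_rng(0)
n = 4
A = rng.standard_normal((n, n))
G = np.vstack([np.eye(n), A])  # columns (e_j, A e_j) span Gamma_A
for k in range(n):
    print(k, np.allclose(graph_of(rot(n, k) @ G), sweep_np(A, k)))
```

The top block of the rotated basis is invertible exactly when $a_{kk} \neq 0$, which matches the genericity condition above.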

It is then an instructive exercise to use this geometric interpretation of the sweep operator to recover all the remarkable properties about these operations listed above. It is also useful to compare the geometric interpretation of sweeping as rotation of the graph to that of Gaussian elimination, which instead shears and reflects the graph by various elementary transformations (this is what is going on geometrically when one performs Gaussian elimination on an augmented matrix). Rotations are less distorting than shears, so one can see geometrically why sweeping can produce fewer numerical artefacts than Gaussian elimination.

Given two unit vectors $v, w$ in a real inner product space, one can define the correlation between these vectors to be their inner product $\langle v, w \rangle$, or in more geometric terms, the cosine of the angle $\angle(v, w)$ subtended by $v$ and $w$. By the Cauchy-Schwarz inequality, this is a quantity between $-1$ and $+1$, with the extreme positive correlation $+1$ occurring when $v, w$ are identical, the extreme negative correlation $-1$ occurring when $v, w$ are diametrically opposite, and the zero correlation $0$ occurring when $v, w$ are orthogonal. This notion is closely related to the notion of correlation between two non-constant square-integrable real-valued random variables $X, Y$, which is the same as the correlation between two unit vectors $v, w$ lying in the Hilbert space $L^2(\Omega)$ of square-integrable random variables, with $v$ being the normalisation of $X$ defined by subtracting off the mean $\mathbf{E} X$ and then dividing by the standard deviation of $X$, and similarly for $w$ and $Y$.

One can also define correlation for complex (Hermitian) inner product spaces by taking the real part of the complex inner product to recover a real inner product.
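To illustrate the identification of correlation with an inner product of normalised vectors, here is a small NumPy sketch in which a finite sample with the uniform (empirical) measure plays the role of $L^2(\Omega)$; the helper names are hypothetical. The result agrees with NumPy's `np.corrcoef`, which computes the Pearson correlation coefficient.

```python
import numpy as np


def normalise(x):
    """Subtract the mean, then divide by the (population) standard deviation."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    return x / np.sqrt(np.mean(x * x))


def correlation(x, y):
    """<v, w> = E[v w] for the normalisations v, w of x, y under the empirical measure."""
    return float(np.mean(normalise(x) * normalise(y)))


rng = np.random.default_rng(1)
X = rng.standard_normal(1000)
Y = 0.6 * X + 0.8 * rng.standard_normal(1000)
print(correlation(X, Y), np.corrcoef(X, Y)[0, 1])
```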

While reading the (highly recommended) recent popular maths book “How not to be wrong”, by my friend and co-author Jordan Ellenberg, I came across the (important) point that correlation is not necessarily transitive: if $X$ correlates with $Y$, and $Y$ correlates with $Z$, then this does not imply that $X$ correlates with $Z$. A simple geometric example is provided by the three unit vectors

$$u := (1, 0), \quad v := \left( \tfrac{1}{\sqrt{2}}, \tfrac{1}{\sqrt{2}} \right), \quad w := (0, 1)$$

in the Euclidean plane $\mathbb{R}^2$: $u$ and $v$ have a positive correlation of $\frac{1}{\sqrt{2}}$, as do $v$ and $w$, but $u$ and $w$ are not correlated with each other. Or: for a typical undergraduate course, it is generally true that good exam scores are correlated with a deep understanding of the course material, and memorising from flash cards is correlated with good exam scores, but this does not imply that memorising flash cards is correlated with deep understanding of the course material.
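A quick numerical check of the planar example (a throwaway sketch; `inner` is just the Euclidean dot product):

```python
import math

u = (1.0, 0.0)
v = (1 / math.sqrt(2), 1 / math.sqrt(2))
w = (0.0, 1.0)


def inner(a, b):
    """Euclidean inner product of two vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


# u and v, and v and w, are positively correlated (about 0.707), yet u and w are orthogonal.
print(inner(u, v), inner(v, w), inner(u, w))
```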

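However, there are at least two situations in which some partial version of transitivity of correlation can be recovered. The first is in the “99%” regime in which the correlations are very close to $1$: if $u, v, w$ are unit vectors such that $u$ is very highly correlated with $v$, and $v$ is very highly correlated with $w$, then this does imply that $u$ is very highly correlated with $w$. Indeed, from the identity

$$\|u - v\| = 2^{1/2} \left( 1 - \langle u, v \rangle \right)^{1/2}$$

(and similarly for $v - w$ and $u - w$) and the triangle inequality

$$\|u - w\| \leq \|u - v\| + \|v - w\|,$$

we see that

$$\left( 1 - \langle u, w \rangle \right)^{1/2} \leq \left( 1 - \langle u, v \rangle \right)^{1/2} + \left( 1 - \langle v, w \rangle \right)^{1/2}. \qquad (1)$$

Thus, for instance, if $\langle u, v \rangle \geq 1 - \varepsilon$ and $\langle v, w \rangle \geq 1 - \varepsilon$, then $\langle u, w \rangle \geq 1 - 4\varepsilon$. This is of course closely related to (though slightly weaker than) the triangle inequality for angles:

$$\angle(u, w) \leq \angle(u, v) + \angle(v, w).$$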
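A Monte Carlo check of the inequality $(1 - \langle u, w \rangle)^{1/2} \leq (1 - \langle u, v \rangle)^{1/2} + (1 - \langle v, w \rangle)^{1/2}$ and of its $1 - 4\varepsilon$ consequence. This NumPy sketch tests random unit vectors in $\mathbb{R}^5$, and then a nearly aligned triple built by small random perturbations, which is where the 99% regime applies; it illustrates rather than proves the inequality, and the sizes and seed are arbitrary.

```python
import numpy as np


def unit(x):
    """Normalise a vector to unit length."""
    return x / np.linalg.norm(x)


def gap(a, b):
    """(1 - <a, b>)^{1/2}, clipped at 0 to absorb floating-point rounding."""
    return np.sqrt(max(0.0, 1.0 - float(a @ b)))


rng = np.random.default_rng(2)

# Random unit triples in R^5: largest value of gap(u, w) - gap(u, v) - gap(v, w).
worst = -np.inf
for _ in range(10_000):
    u, v, w = (unit(rng.standard_normal(5)) for _ in range(3))
    worst = max(worst, gap(u, w) - gap(u, v) - gap(v, w))
print("largest excess:", worst)  # expected to be <= 0, up to rounding

# 99% regime: a nearly aligned triple built by small random perturbations.
u = unit(rng.standard_normal(50))
v = unit(u + 0.01 * rng.standard_normal(50))
w = unit(v + 0.01 * rng.standard_normal(50))
eps = max(1 - u @ v, 1 - v @ w)
print(eps, 1 - u @ w, 4 * eps)  # the middle value should not exceed the last
```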

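Remark 1 (Thanks to Andrew Granville for conversations leading to this observation.) The inequality (1) also holds for sub-unit vectors, i.e. vectors $u, v, w$ with $\|u\|, \|v\|, \|w\| \leq 1$. This comes by extending $u, v, w$ in directions orthogonal to all three original vectors and to each other in order to make them unit vectors, enlarging the ambient Hilbert space $H$ if necessary. More concretely, one can apply (1) to the unit vectors

$$\left( u, \sqrt{1 - \|u\|^2}, 0, 0 \right), \quad \left( v, 0, \sqrt{1 - \|v\|^2}, 0 \right), \quad \left( w, 0, 0, \sqrt{1 - \|w\|^2} \right)$$

in $H \times \mathbb{R}^3$.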
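The lifting trick in Remark 1 is easy to check numerically. In this NumPy sketch (the helper name `lift` is hypothetical), three random vectors of norm at most one in $\mathbb{R}^6$ are mapped to unit vectors in $\mathbb{R}^6 \times \mathbb{R}^3$ by appending $\sqrt{1 - \|x\|^2}$ in a fresh coordinate for each vector; the pairwise inner products are unchanged, so inequality (1) transfers to the original vectors.

```python
import numpy as np


def lift(vectors):
    """Map rows x_1, x_2, x_3 with |x_i| <= 1 to unit rows (x_i, sqrt(1 - |x_i|^2) e_i)."""
    X = np.asarray(vectors, dtype=float)
    pad = np.sqrt(np.clip(1.0 - np.sum(X * X, axis=1), 0.0, None))
    return np.hstack([X, np.diag(pad)])  # e_i is the i-th extra coordinate


def gap(a, b):
    """(1 - <a, b>)^{1/2}, clipped at 0 to absorb rounding."""
    return np.sqrt(max(0.0, 1.0 - float(a @ b)))


rng = np.random.default_rng(3)
X = rng.standard_normal((3, 6))
X *= rng.uniform(0.2, 1.0, size=(3, 1)) / np.linalg.norm(X, axis=1, keepdims=True)
L = lift(X)
u, v, w = X
print(np.linalg.norm(L, axis=1))  # each norm is 1 up to rounding
print(gap(u, w) <= gap(u, v) + gap(v, w))
```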

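But even in the “1%” regime in which correlations are very weak, there is still a version of transitivity of correlation, known as the van der Corput lemma, which basically asserts that if a unit vector $v$ is correlated with many unit vectors $u_1, \dots, u_n$, then many of the pairs $u_i, u_j$ will then be correlated with each other. Indeed, from the Cauchy-Schwarz inequality

$$\left| \left\langle v, \sum_{i=1}^n u_i \right\rangle \right|^2 \leq \|v\|^2 \left\| \sum_{i=1}^n u_i \right\|^2 \qquad (2)$$

we see that

$$\left| \sum_{i=1}^n \langle v, u_i \rangle \right|^2 \leq \sum_{1 \leq i, j \leq n} \langle u_i, u_j \rangle. \qquad (3)$$

Thus, for instance, if $\langle v, u_i \rangle \geq \varepsilon$ for at least $\varepsilon n$ values of $i = 1, \dots, n$, then (after removing those indices $i$ for which $\langle v, u_i \rangle < \varepsilon$) $\sum_{i=1}^n \sum_{j=1}^n \langle u_i, u_j \rangle$ must be at least $\varepsilon^4 n^2$, which implies that $\langle u_i, u_j \rangle \geq \varepsilon^4/2$ for at least $\varepsilon^4 n^2/2$ pairs $(i, j)$. Or as another example: if a random variable $X$ exhibits at least $1\%$ positive correlation with $n$ other random variables $Y_1, \dots, Y_n$, then if $n > 10{,}000$, at least two distinct $Y_i, Y_j$ must have positive correlation with each other (although this argument does not tell you which pair $Y_i, Y_j$ are so correlated). Thus one can view this inequality as a sort of “pigeonhole principle” for correlation.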
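To see the inequality $|\sum_i \langle v, u_i \rangle|^2 \leq \sum_{i,j} \langle u_i, u_j \rangle$ at work, the NumPy sketch below draws unit vectors $u_i$ that are only weakly biased towards a fixed unit vector $v$ and compares the two sides; the right-hand side is simply $\|\sum_i u_i\|^2$. The dimension, bias and sample size are arbitrary choices. The last lines check the arithmetic behind the 1% example: if all pairwise correlations were non-positive, the right-hand side would be at most $n$, whereas the left-hand side would be at least $(0.01 n)^2$, which exceeds $n$ exactly when $n > 10{,}000$.

```python
import numpy as np

rng = np.random.default_rng(4)
dim, n = 200, 300

v = np.zeros(dim)
v[0] = 1.0                                          # a fixed unit vector
U = 0.1 * v + rng.standard_normal((n, dim)) / np.sqrt(dim)
U /= np.linalg.norm(U, axis=1, keepdims=True)       # rows: unit vectors u_i, weakly biased towards v

lhs = abs((U @ v).sum()) ** 2                       # |sum_i <v, u_i>|^2
rhs = float(np.sum(U @ U.T))                        # sum_{i,j} <u_i, u_j> = |sum_i u_i|^2
print(lhs, rhs)


def exceeds(m):
    """Exact test of (m/100)^2 > m, i.e. m^2 > 10_000 * m, using integer arithmetic."""
    return m * m > 10_000 * m


print(exceeds(10_000), exceeds(10_001))             # False True
```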

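A similar argument (multiplying each $u_i$ by an appropriate sign $\epsilon_i \in \{-1, +1\}$) shows the related van der Corput inequality

$$\left( \sum_{i=1}^n |\langle v, u_i \rangle| \right)^2 \leq \sum_{1 \leq i, j \leq n} |\langle u_i, u_j \rangle|, \qquad (4)$$

and this inequality is also true for complex inner product spaces. (Also, the $u_i$ do not need to be unit vectors for this inequality to hold.)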
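The sign (or, in the complex case, phase) trick can be made explicit. In the NumPy sketch below, assuming the convention $\langle a, b \rangle = \sum_k a_k \overline{b_k}$, each $u_i$ is multiplied by the unit complex number $c_i = z_i/|z_i|$, where $z_i = \langle v, u_i \rangle$, so that $\langle v, c_i u_i \rangle = |z_i|$. Applying the Cauchy-Schwarz bound to the rotated vectors, and then bounding each $\langle c_i u_i, c_j u_j \rangle$ by $|\langle u_i, u_j \rangle|$, gives $(\sum_i |\langle v, u_i \rangle|)^2 \leq \sum_{i,j} |\langle u_i, u_j \rangle|$. The vectors $u_i$ are deliberately not normalised; sizes and seed are arbitrary.

```python
import numpy as np


def inner(a, b):
    """<a, b> = sum_k a_k * conj(b_k); np.vdot conjugates its first argument."""
    return np.vdot(b, a)


rng = np.random.default_rng(5)
dim, n = 30, 40
v = rng.standard_normal(dim) + 1j * rng.standard_normal(dim)
v /= np.linalg.norm(v)                                     # unit vector
U = (rng.standard_normal((n, dim)) + 1j * rng.standard_normal((n, dim))) * rng.uniform(0.1, 2.0, (n, 1))

z = np.array([inner(v, u) for u in U])                     # z_i = <v, u_i>
c = np.exp(1j * np.angle(z))                               # phases with conj(c_i) z_i = |z_i|
W = c[:, None] * U                                         # rotated vectors c_i u_i

lhs = np.sum(np.abs(z)) ** 2                               # (sum_i |<v, u_i>|)^2
middle = np.linalg.norm(W.sum(axis=0)) ** 2                # sum_{i,j} <c_i u_i, c_j u_j>
rhs = sum(abs(inner(a, b)) for a in U for b in U)          # sum_{i,j} |<u_i, u_j>|
print(lhs, middle, rhs)
```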

Geometrically, the picture is this: if $v$ positively correlates with all of the $u_1, \dots, u_n$, then the $u_1, \dots, u_n$ are all squashed into a somewhat narrow cone centred at $v$. The cone is still wide enough to allow a few pairs $u_i, u_j$ to be orthogonal (or even negatively correlated) with each other, but (when $n$ is large enough) it is not wide enough to allow all of the $u_i, u_j$ to be so widely separated. Remarkably, the bound here does not depend on the dimension of the ambient inner product space; while increasing the number of dimensions should in principle add more “room” to the cone, this effect is counteracted by the fact that in high dimensions, almost all pairs of vectors are close to orthogonal, and the exceptional pairs that are even weakly correlated to each other become exponentially rare. (See this previous blog post for some related discussion; in particular, Lemma 2 from that post is closely related to the van der Corput inequality presented here.)

A particularly common special case of the van der Corput inequality arises when $v$ is a unit vector fixed by some unitary operator $T$, and the $u_i$ are shifts $u_i := T^i u$ of a single unit vector $u$. In this case, the inner products $\langle v, u_i \rangle = \langle T^i v, T^i u \rangle = \langle v, u \rangle$ are all equal, and we arrive at the useful van der Corput inequality

$$|\langle v, u \rangle|^2 \leq \frac{1}{n^2} \sum_{1 \leq i, j \leq n} |\langle T^i u, T^j u \rangle|.$$

(In fact, one can even remove the absolute values from the right-hand side, by using (2) instead of (4).) Thus, to show that $v$ has negligible correlation with $u$, it suffices to show that the shifts of $u$ have negligible correlation with each other.
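A finite-dimensional model of this special case: on $\mathbb{C}^m$ take $T$ to be the cyclic shift (which is unitary) and $v = (1, \dots, 1)/\sqrt{m}$, which $T$ fixes. The NumPy sketch below, with the hypothetical helper name `shift_vdc` and arbitrary sizes, compares $|\langle v, u \rangle|^2$ with $\frac{1}{n^2} \|\sum_{i=1}^n T^i u\|^2$ (the version without absolute values) and with $\frac{1}{n^2} \sum_{i,j} |\langle T^i u, T^j u \rangle|$.

```python
import numpy as np


def shift_vdc(u, n):
    """Return (|<v,u>|^2, |sum_i T^i u|^2 / n^2, sum_{i,j} |<T^i u, T^j u>| / n^2).

    Here T is the cyclic shift on C^m (np.roll by one place) and v = (1,...,1)/sqrt(m).
    """
    m = len(u)
    v = np.ones(m) / np.sqrt(m)
    shifts = np.array([np.roll(u, i) for i in range(1, n + 1)])  # rows T^i u, i = 1..n
    gram = shifts.conj() @ shifts.T                              # entries <T^j u, T^i u>
    lhs = abs(np.vdot(v, u)) ** 2
    signed = np.linalg.norm(shifts.sum(axis=0)) ** 2 / n**2      # = sum of gram entries / n^2
    absolute = np.sum(np.abs(gram)) / n**2
    return lhs, signed, absolute


rng = np.random.default_rng(6)
u = rng.standard_normal(64) + 1j * rng.standard_normal(64)
u /= np.linalg.norm(u)
print(shift_vdc(u, 8))
```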

Here is a basic application of the van der Corput inequality:

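Proposition 2 (Weyl equidistribution estimate) Let $P: \mathbb{Z} \to \mathbb{R}$ be a polynomial with at least one non-constant coefficient irrational. Then one has

$$\lim_{N \to \infty} \frac{1}{N} \sum_{n=1}^N e(P(n)) = 0,$$

where $e(x) := e^{2\pi i x}$.

Note that this assertion implies the more general assertion

$$\lim_{N \to \infty} \frac{1}{N} \sum_{n=1}^N e(kP(n)) = 0$$

for any non-zero integer $k$ (simply by replacing $P$ by $kP$), which by the Weyl equidistribution criterion is equivalent to the sequence $P(1), P(2), \dots$ being asymptotically equidistributed in $\mathbb{R}/\mathbb{Z}$.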
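The statement can be illustrated (though of course not proved) by computing the averages $\frac{1}{N} \sum_{n=1}^N e(P(n))$ numerically. In this pure-Python sketch, `weyl_average` is a hypothetical helper; the floating-point value of $\sqrt{2}$ is itself a rational approximation, which is harmless at the modest values of $N$ used here. For contrast, $P(n) = n^2/4$ has no irrational coefficient, and its averages converge to $(1+i)/2$ rather than to $0$.

```python
import cmath
import math


def e(x):
    """e(x) = exp(2 pi i x), reducing x modulo 1 first to keep the argument small."""
    return cmath.exp(2j * math.pi * (x - math.floor(x)))


def weyl_average(P, N):
    """(1/N) * sum_{n=1}^{N} e(P(n)) for a polynomial P given as a Python function."""
    return sum(e(P(n)) for n in range(1, N + 1)) / N


sqrt2 = math.sqrt(2)
for N in (1_000, 10_000, 100_000):
    print(N, abs(weyl_average(lambda n: sqrt2 * n * n, N)), weyl_average(lambda n: n * n / 4, N))
```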

Proof: We induct on the degree $d$ of the polynomial $P$, which must be at least one. If $d$ is equal to one, the claim is easily established from the geometric series formula, so suppose that $d > 1$ and that the claim has already been proven for $d - 1$. If the top coefficient $a_d$ of $P(n) = a_d n^d + \dots + a_1 n + a_0$ is rational, say $a_d = \frac{b}{q}$ with $b, q$ integers and $q \geq 1$, then by partitioning the natural numbers into residue classes modulo $q$, we see that the claim follows from the induction hypothesis; so we may assume that the top coefficient $a_d$ is irrational.

In order to use the van der Corput inequality as stated above (i.e. in the formalism of inner product spaces) we will need a non-principal ultrafilter $p$ (see e.g. this previous blog post for basic theory of ultrafilters); we leave it as an exercise to the reader to figure out how to present the argument below without the use of ultrafilters (or similar devices, such as Banach limits). The ultrafilter $p$ defines an inner product $\langle \cdot, \cdot \rangle_p$ on bounded complex sequences $z = (z_1, z_2, z_3, \dots)$ by setting

$$\langle z, w \rangle_p := \lim_{N \to p} \frac{1}{N} \sum_{n=1}^N z_n \overline{w_n}.$$

Strictly speaking, this inner product is only positive semi-definite rather than positive definite, but one can quotient out by the null vectors to obtain a positive-definite inner product. To establish the claim, it will suffice to show that

$$\langle e(P), 1 \rangle_p = 0$$

for every non-principal ultrafilter $p$, where $e(P)$ denotes the sequence $(e(P(1)), e(P(2)), \dots)$ and $1$ denotes the constant sequence.

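Note that the space of bounded sequences (modulo null vectors) admits a shift $T$, defined by

$$T(z_1, z_2, z_3, \dots) := (z_2, z_3, \dots).$$

This shift becomes unitary once we quotient out by null vectors, and the constant sequence $1$ is clearly a unit vector that is invariant with respect to the shift. So by the van der Corput inequality, we have

$$|\langle e(P), 1 \rangle_p|^2 \leq \frac{1}{H^2} \sum_{1 \leq h, h' \leq H} |\langle T^h e(P), T^{h'} e(P) \rangle_p|$$

for any $H \geq 1$. But we may rewrite $\langle T^h e(P), T^{h'} e(P) \rangle_p = \langle e(P(\cdot + h) - P(\cdot + h')), 1 \rangle_p$. Then observe that if $h \neq h'$, $P(n + h) - P(n + h')$ is a polynomial of degree $d - 1$ whose $n^{d-1}$ coefficient is irrational, so by induction hypothesis we have $\langle T^h e(P), T^{h'} e(P) \rangle_p = 0$ for $h \neq h'$. For $h = h'$ we of course have $\langle T^h e(P), T^{h'} e(P) \rangle_p = 1$, and so

$$|\langle e(P), 1 \rangle_p|^2 \leq \frac{1}{H}$$

for any $H$. Letting $H \to \infty$, we obtain the claim.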

A remarkable phenomenon in probability theory is that of universality – that many seemingly unrelated probability distributions, which ostensibly involve large numbers of unknown parameters, can end up converging to a universal law that may only depend on a small handful of parameters. One of the most famous examples of the universality phenomenon is the central limit theorem; another rich source of examples comes from random matrix theory, which is one of the areas of my own research.

Analogous universality phenomena also show up in empirical distributions – the distributions of a statistic from a large population of “real-world” objects. Examples include Benford’s law, Zipf’s law, and the Pareto distribution (of which the Pareto principle or 80-20 law is a special case). These laws govern the asymptotic distribution of many statistics $X$ which

- (i) take values as positive numbers;

- (ii) range over many different orders of magnitude;

- (iii) arise from a complicated combination of largely independent factors (with different samples of $X$ arising from different independent factors); and

- (iv) have not been artificially rounded, truncated, or otherwise constrained in size.

Examples here include the population of countries or cities, the frequency of occurrence of words in a language, the mass of astronomical objects, or the net worth of individuals or corporations. The laws are then as follows:

- Benford’s law: For $k = 1, \dots, 9$, the proportion of $X$ whose first digit is $k$ is approximately $\log_{10} \frac{k+1}{k}$. Thus, for instance, $X$ should have a first digit of $1$ about $30\%$ of the time, but a first digit of $9$ only about $5\%$ of the time.

- Zipf’s law: The $n^{\mathrm{th}}$ largest value of $X$ should obey an approximate power law, i.e. it should be approximately $C n^{-\alpha}$ for the first few $n = 1, 2, 3, \dots$ and some parameters $C, \alpha > 0$. In many cases, $\alpha$ is close to $1$.

- Pareto distribution: The proportion of $X$ with at least